
Not much time has passed since the release of the NVIDIA GeForce 6600 series, and now ATI is announcing its "Canadian answer". The X700 series also comprises several video cards built on the same chip, codenamed RV410. The new series is aimed solely at PCI-Express, so we should not expect AGP versions in the near future (or perhaps ever). Whatever you think of this series, it is a slight to the AGP segment, which still accounts for almost 99% of users. You can be indignant about the behavior of the leading manufacturers, but progress is progress. It is worth remembering, though, that the market will have the final say: demand may force the manufacturers to listen to users and release AGP versions. Note that this will be easy for NVIDIA, which already has the HSI bridge converting AGP to PCI-E and vice versa, and not so simple for ATI, which has no such bridge.

Our research and measurements show that ATI is wrong to offer the RADEON 9800 PRO on the AGP market as an alternative to the X700 in the PCI-E segment: the 9800 PRO does not deliver the performance expected from new video cards priced up to $200. So all ATI can do is drop the prices of its old products to $150 and lower. That brings little profit, considering the expensive PCB and the 256-bit memory bus. So we will most likely see more cut-down versions of the 9800 Platinum Edition with a 128-bit bus, and their performance is simply embarrassing. ATI will probably have a hard time on the AGP market in the $150-250 price segment, especially if NVIDIA releases an AGP version of the GeForce 6600, which is quite possible. We'll leave forecasts and assumptions at that, put the AGP market aside for now, and focus on the new product from the Canadian company, comparing the RADEON X700XT with the GeForce 6600GT.

Official RADEON X700XT/PRO (RV410) specification

Specifications of the RADEON X700XT reference card

A list of existing cards based on RV410:

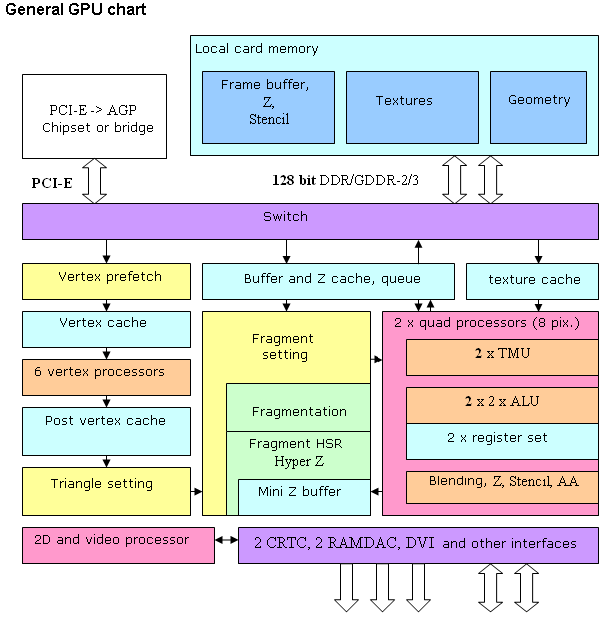

As we can see, there are no significant architectural differences from R420, which is not surprising: RV410 is a scaled-down solution based on the R420 architecture, with fewer pixel processors and memory controller channels. The situation is the same as with the NV40/NV43 pair. Moreover, as we have already noted, in this generation both competitors follow very similar architectural principles. As for the differences between RV410 and R420, they are quantitative (shown in bold on the diagram) rather than qualitative: architecturally the chip remains practically unchanged. Thus, we have 6 vertex processors (as before, which may be a pleasant surprise for triangle-hungry DCC applications) and two independent pixel processors (instead of 4), each working with one quad (a 2x2 pixel fragment). As with NV43, PCI Express is the native (that is, on-chip) bus interface, and AGP 8x cards (if any appear) will need an additional PCI-E -> AGP bridge (shown on the diagram), which ATI will have to design and manufacture. Note also an important limiting factor, the two-channel controller and 128-bit memory bus; we'll analyze it later on, as we did with NV43. The architecture of the vertex and pixel processors and of the video processor remains the same; these elements were described in detail in our review of the RADEON X800 XT. And now let's talk about the potential tactical factors:

Considerations about what was cut down and why

On the whole, at present we have the following series of solutions based on NV4X and R4XX:

Similar picture, isn't it? So we can predict that the weak points of the X700 series, as of the 6600 series, will be high resolutions and full-screen antialiasing modes, especially in simple applications, while its strong points will be programs with long and complex shaders and anisotropic filtering without MSAA (or, in ATI's case, possibly with it). We'll check this assumption later in game and synthetic tests. It's difficult to judge now how reasonable the move to a 128-bit memory bus was. On the one hand, it makes chip packaging cheaper and reduces the number of defective chips; on the other hand, the cost difference between 256-bit and 128-bit PCBs is small and is more than offset by the cost difference between ordinary DDR and still expensive high-speed GDDR3 memory. From the card manufacturers' point of view, a 256-bit solution would perhaps be more convenient, if they had a choice. But for NVIDIA and ATI, who make the chips and very often sell them bundled with memory, the 128-bit solution with GDDR3 is more profitable. Its effect on performance is another story: the considerably reduced memory bandwidth obviously threatens to hold back the chip's excellent capabilities (8 pipelines, 475 MHz core frequency). Note that NVIDIA is still keeping the Ultra suffix in reserve: considering the great overclocking potential of the 110 nm process, we can expect a card named 6600 Ultra with a core frequency of about 550 or even 600 MHz and memory at 1100 or even (in the future) 1200 MHz. The question is how much it will cost. To all appearances, the vertex and pixel processors in RV410 remain the same, but the internal caches could have been reduced, at least in proportion to the number of pipelines. However, the transistor count gives no grounds for concern.
Given that the caches are not that large anyway, it would be more reasonable to leave them as they were (as in NV43), partly compensating for the noticeable shortage of memory bandwidth. All the bandwidth-saving techniques, such as Z buffer and frame buffer compression and Early Z with an on-chip Hierarchical Z, remain in place. Interestingly, unlike NV43, which can blend and write no more than 4 resulting pixels per clock, the pixel pipelines in RV410 correspond fully to R420 in this respect, writing one pixel per pipeline per clock. So with simple single-texture shaders RV410 will have almost a twofold fillrate advantage. Unlike NVIDIA, which has a large (in terms of transistors) ALU array responsible for postprocessing, tests, Z generation, and floating-point pixel blending, RV410 has modest combiners, which is why their number was not cut down so much. In PRACTICE, however, the reduced memory bandwidth will almost never allow the full 3.8 gigapixels/s to be written, although in synthetic single-texture tests the difference between RV410 and NV43 may become noticeable.

The decision to retain all 6 vertex units is no less interesting. On the one hand, it's an argument in the DCC area; on the other hand, we know that in this area everything depends on drivers, OpenGL first of all, which is traditionally NVIDIA's strong point. Besides, floating-point blending and Shader Model 3.0, exactly what the latest ATI generation lacks, may be valuable there. Thus, keeping 6 vertex pipelines and actively positioning RV410 on the DCC market looks debatable. Time will tell whether it was justified. We'll check all these assumptions in the course of our synthetic and game tests.
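The bandwidth and fillrate arguments above can be checked with back-of-envelope arithmetic. The sketch below is a minimal Python illustration, not ATI or NVIDIA data: the 475 MHz core clock, the two quads and the 8-versus-4 pixels per clock come from the text, while the 1050 MHz effective memory clock for the X700XT, the 500 MHz core clock for the 6600GT and the 8 bytes written per pixel (32-bit color plus 32-bit Z, no compression, no blending reads) are assumptions chosen for illustration.

```python
"""Back-of-envelope peak figures for RV410 vs NV43 (illustrative sketch).

Values marked as assumptions are not measurements from this review.
"""

PIXELS_PER_QUAD = 4  # one quad processor handles a 2x2 pixel fragment


def peak_fillrate_mpix(pixels_per_clock: int, core_clock_mhz: int) -> int:
    """Peak pixel write rate in megapixels per second."""
    return pixels_per_clock * core_clock_mhz


def peak_bandwidth_gbs(bus_width_bits: int, effective_mem_clock_mhz: int) -> float:
    """Peak memory bandwidth in GB/s (10**9 bytes per second)."""
    return bus_width_bits / 8 * effective_mem_clock_mhz * 1e6 / 1e9


# RV410: 2 quads x 4 pixels per clock at 475 MHz (figures from the text).
rv410_fill = peak_fillrate_mpix(2 * PIXELS_PER_QUAD, 475)
# NV43: at most 4 blended pixels per clock (from the text); 500 MHz is an assumption.
nv43_fill = peak_fillrate_mpix(4, 500)

# Assumption: 1050 MHz effective GDDR3 clock for the X700XT.
bw_128 = peak_bandwidth_gbs(128, 1050)
bw_256 = peak_bandwidth_gbs(256, 1050)  # the same memory on a hypothetical 256-bit bus

# Assumption: 4 bytes of color + 4 bytes of Z written per pixel, no compression.
BYTES_PER_PIXEL = 8
needed_gbs = rv410_fill * 1e6 * BYTES_PER_PIXEL / 1e9

if __name__ == "__main__":
    print(f"RV410 {rv410_fill} Mpix/s vs NV43 {nv43_fill} Mpix/s "
          f"(x{rv410_fill / nv43_fill:.2f})")
    print(f"128-bit: {bw_128:.1f} GB/s, 256-bit: {bw_256:.1f} GB/s")
    print(f"uncompressed writes at peak fill: {needed_gbs:.1f} GB/s")
```

Under these assumptions RV410's peak pixel rate (3800 Mpix/s) is 1.9 times NV43's (2000 Mpix/s), which matches the "almost twofold" advantage, while writing those pixels uncompressed would need about 30.4 GB/s against roughly 16.8 GB/s available on a 128-bit bus. Real traffic depends on compression, Hierarchical Z rejection and blending, so these numbers only indicate the order of magnitude.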

Technological innovations

On the whole, there are no innovations compared with R420, which is no disadvantage in itself. Compared with NV43:

Before examining the card itself, here is a list of our articles devoted to the analysis of the previous products, NV40 and R420. It's already obvious that the RV410 architecture is a direct heir to R420, with its pixel pipelines halved.

Theoretical materials and reviews of video cards, which concern functional properties of the GPU

I repeat that this is only the first part of the review, devoted to the performance of the new video cards. The qualitative aspects (3D quality and video playback) will be examined later in the second part.

So, here is the RADEON X700XT reference card.

Video card

We can see that the card's design is closer to the X600XT, but unlike that card, the X700XT has pads on the back of the PCB for additional memory chips, allowing a 256-MB version. The PCB also has a footprint for the RAGE Theater chip (VIVO).

Cooling device.

With the cooler removed, you can see the chip. Let's compare the core dimensions of RV410 and R350. Why R350 of all chips? Because it also has 8 pixel pipelines, though it has fewer vertex pipelines (four instead of six). At the same time, R350 is made on a 0.15-micron process, while RV410 already uses a 0.11-micron process.

Naturally, the core is smaller thanks to the finer process technology, even though the transistor count is not lower. Still, one can assume that some of its caches or other elements were cut down. Our examination will show it all...
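How much smaller the core should be can be estimated with the usual idealized shrink rule: linear dimensions scale with the process node, so area scales with its square. This is a simplification assumed here for illustration (real shrinks rarely reach the ideal, and RV410 and R350 are different designs with different transistor counts), so the sketch only gives the best-case area saving for an identical layout.

```python
def ideal_area_scale(old_node_um: float, new_node_um: float) -> float:
    """Relative die area of the same layout after an ideal linear shrink."""
    linear = new_node_um / old_node_um
    return linear ** 2


# R350: 0.15 micron, RV410: 0.11 micron (process nodes from the text).
scale = ideal_area_scale(0.15, 0.11)

if __name__ == "__main__":
    print(f"area scale: {scale:.2f}")         # area scale: 0.54
    print(f"density gain: {1 / scale:.2f}x")  # density gain: 1.86x
```

In the ideal case about 1.86 times as many transistors fit into the same area at 0.11 micron, which is consistent with a noticeably smaller RV410 core despite a transistor count that is not lower than R350's.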

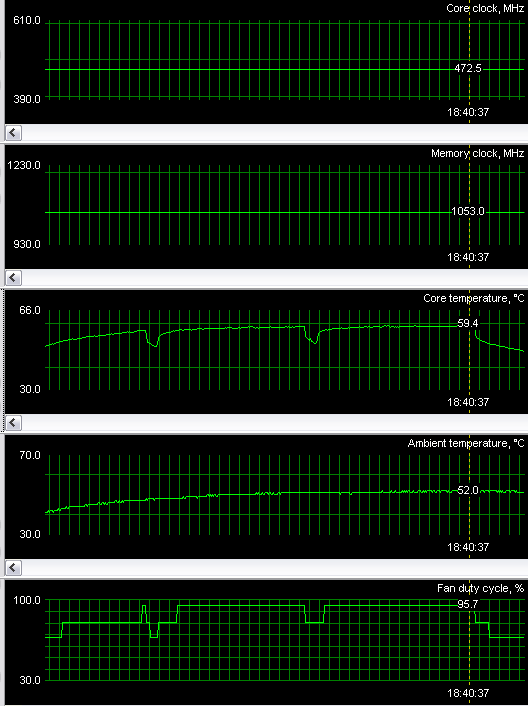

Now let's turn to the card's operating temperatures and cooler noise. Thanks to the constant responsiveness of Alexei Nikolaychuk, the author of RivaTuner, the next non-public beta version of this utility already supports RV410. Moreover, it can not only change and monitor the card's frequencies but also monitor temperatures and fan speed. Here is what the card demonstrated at its regular frequencies, without external cooling, in a closed PC case:

Though the temperatures rose slowly and stayed just below 60 degrees, the cooler behaved "nervously", as you can see on the bottom graph above, which shows the fan speed as a percentage of its maximum rpm. As I have already noted, this produces a very unpleasant noise.

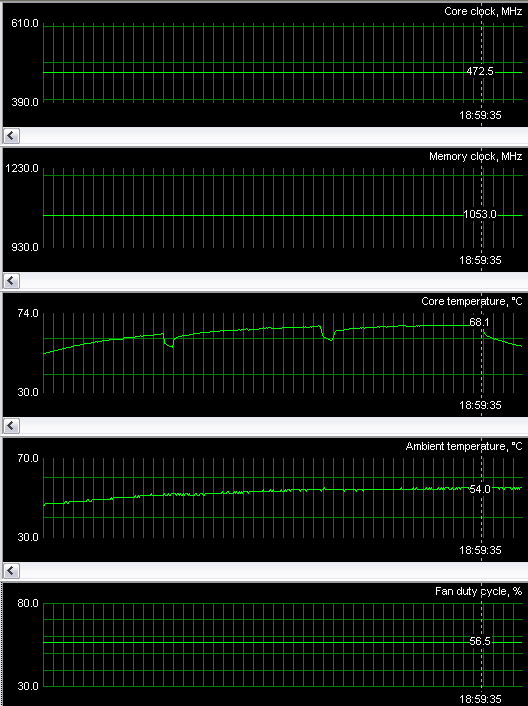

Let's use the RivaTuner fan control feature to fix the fan speed at a level where the noise is no longer irritating and is barely audible: approximately 55-56% of its maximum speed.

The temperatures of the core and of the card as a whole rose only slightly and are still in the safe zone. So why is the cooler so overcautious? We don't know the answer yet and hope to get an explanation from ATI.

Installation and Drivers

Testbed configurations:

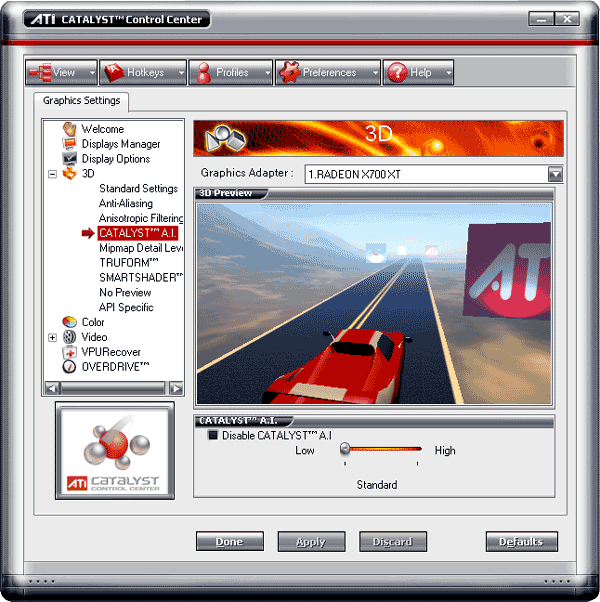

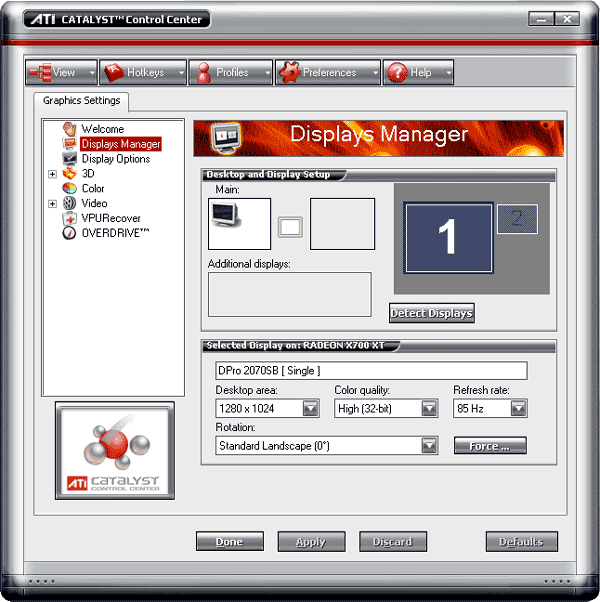

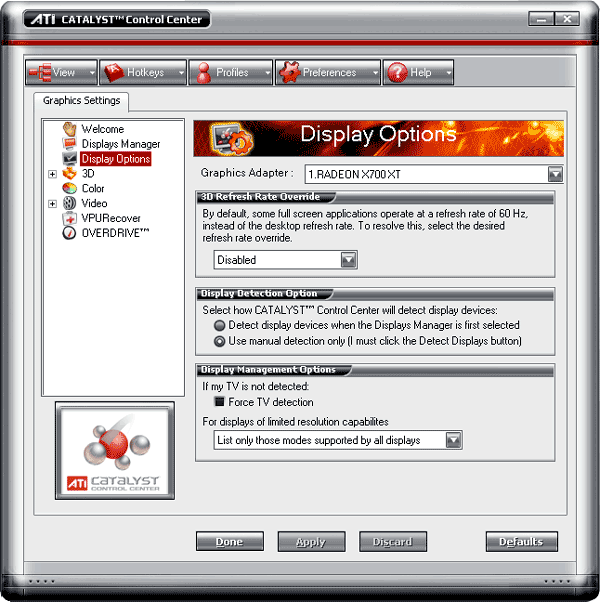

VSync is disabled.

As we can see, ATI has prepared a new driver version for the launch of the RADEON X700. Its main feature is the CATALYST Control Center, although this utility was officially released earlier, with CATALYST 4.9. So why do we emphasize this program in this article? The answer is simple: CCC is the only way to use new features such as 3D optimization control. But let's not put the cart before the horse. First of all, CCC is a large program that takes up a lot of hard disk space and takes a long time to download from the Internet. Add to that Microsoft .NET 1.1, another 24 MB, without which CCC will not work. Is it worth the download? At first glance, it really is, but you should take a closer look. From here you can download (or open) an animated GIF file (920K!) that demonstrates all CCC settings.

Here we shall touch upon only those settings that are interesting in terms of new 3D graphics controls.

We can see the CATALYST A.I. settings. They enable so-called driver optimizations for various games, as well as general filtering (trilinear and anisotropic) optimizations. There are three levels:

We shall publish the performance results of all three modes for the X700XT below, in the section devoted to speed analysis. The quality aspect will be analyzed in the next article. According to ATI, the optimizations support the following games:

So, it seems a useful feature, and the performance tests will show whether it pays off. We'll see what happens with quality later.

Continuing with CCC, the summary tab with all the major settings looks interesting:

I would recommend starting 3D management from this tab. Of course, the tabs dedicated to individual functions have their own advantage: at least you can see the effect of enabling each function on a 3D scene looped in a window.

I also want to note the convenient refresh rate controls:

And a friendlier TV interface:

But working with CCC also has major drawbacks. First of all, the interface is irritatingly slow. When you change a setting and click APPLY, the program "thinks" for half a minute (sometimes it even seems to have frozen), and only then does normal operation resume. Nervous users may fall into a stupor or decide to throw this piece of software out.

So ATI's programmers still have things to improve. Lots of them.

IN THE DIAGRAMS labeled ANIS16x, the results of the GeForce FX 5950 Ultra and GeForce PCX5900/5750 were obtained with ANIS8x enabled. Note that driver optimizations are enabled by default and set to LOW/STANDARD, so the main comparisons with competitors were carried out with the X700XT in exactly this mode.

Test results

Before giving a brief evaluation of 2D, I will repeat that at present there is NO valid method for objective evaluation of this parameter, for the following reasons:

As for the combination of our sample and the Mitsubishi Diamond Pro 2070sb monitor, this card demonstrated excellent quality at the following resolutions and refresh rates:

We express our thanks to ATI for the video cards provided to our lab.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.