
When NVIDIA launched two 55nm solutions based on the overhauled GT200, AMD did not respond with cards of its own, as it often had before, although rumors about an improved RV770 were already circulating on the web. However, in early April the company announced a new graphics card based on the improved RV7x0 GPU. NVIDIA did not want to surrender the initiative, so it launched another model -- GeForce GTX 275. This graphics card is a direct competitor to the new solution from AMD, and today we are going to examine it.

As usual, NVIDIA states that GeForce GTX 275 uses the latest GPU and offers the best performance and functionality (PhysX, CUDA) for its price. No cheating here, but it's actually the 55nm GT200. It differs from GTX 260 and GTX 285 only in some characteristics: the number of active execution units and clock rates. This product fits between GeForce GTX 260 and GeForce GTX 285 in terms of performance and price. As we have already mentioned, the new card has been designed to oppose RADEON HD 4890, the most powerful single-GPU card from AMD. This corresponds to the $260 price tag -- more expensive than GTX 260, but cheaper than GTX 285.

Note that some price ranges are very crowded now. There are a lot of solutions with similar characteristics and performance. Let's take NVIDIA for example: the overhauled GeForce GTX 260 with 216 stream processors is not much slower than the GTX 275 even theoretically, and their results will be even closer in real games. The GTX 275 should perform on a par with the discontinued GTX 280, and its overclocked modifications can come close to the GTX 285. They cannot compete with the dual-GPU GTX 295, of course, as it's in a different price range. But the $199-299 segment is already overcrowded in our opinion. Game tests will show whether we are right or wrong.

If you are not familiar with the GeForce GTX 200 (GT200) architecture, you can read about it in our baseline review.
This architecture was developed from G8x/G9x with some modifications. The detailed information about unified architectures of NVIDIA G8x/G9x/GT2xx can be found in the following articles:

We proceed from the assumption that you are already familiar with the GT200(b) architecture. Now we shall examine the new card from the GeForce GTX 200 series based on a 55nm GPU. The theoretical part will be very short again, because we have already examined the architecture and overhauled solutions based on GT200b.

GeForce GTX 275

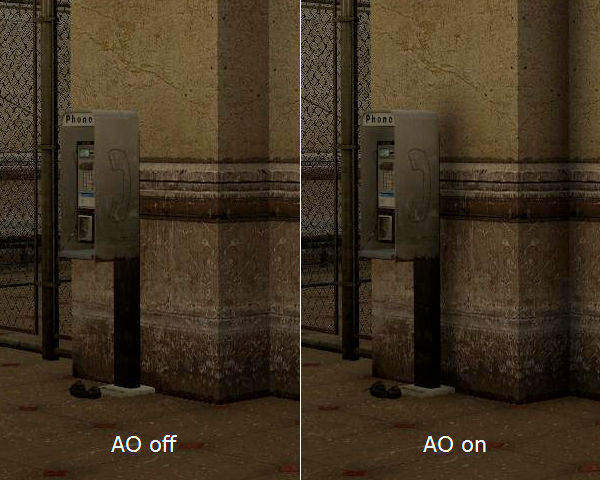

GeForce GTX 275 reference specifications

As GT200b chips are manufactured by the 55nm process technology, NVIDIA managed to replace the old GeForce GTX 260 and GTX 280 with new faster products. Along with improved performance, this solution consumes much less power than its predecessors.

As usual, we should take a look at the memory volume and the name of this product. The amount of video memory is determined by the PCB, which comes from the overhauled GTX 260 card with a 448-bit memory bus. Thus we have 896 MB here. It's sufficient for the great majority of applications, and our tests in games will prove it once again.

The name is also simple. The new card from NVIDIA is a tad faster than GTX 260, so it was logically called GTX 275. This product number makes sense to users: it shows that the card stands in between GTX 260 and GTX 285. The five at the end stands for the 55nm GPU, and thus for improved characteristics (power consumption and heat release). By the way, the overhauled GTX 260 with 216 SPs should logically have been called GTX 265.

Architecture and features

We are again forced to tell you that we have nothing interesting to add here, because GT200b is no different from GT200; it's just smaller and consumes less power. The improved GT200 architecture based on the 55nm process technology makes NVIDIA GeForce GTX 275 faster, quieter, and less power hungry than GTX 280, while their relative performance is very close. That could be the end of our theoretical part, but we decided to describe the other peculiarities, the GPU part being self-explanatory.

Graphics driver v.185

As often happens with new cards, NVIDIA timed the rollout to coincide with the release of the new video driver (v.185). It's a major update since v.180 that includes performance improvements for single- and multi-GPU solutions as well as new functions.
Performance changes are provided by the following modifications: optimized texture memory management in Direct3D10 applications, higher efficiency of Z-culling and buffer compression for antialiasing, and SLI scaling improvements, especially in games based on the Half-Life 2 engine. The highest performance gains appear in high resolutions with antialiasing. According to NVIDIA, the difference between Drivers v.182 and v.185 in these conditions amounts to 5-25% (it's even higher in Mirror's Edge with antialiasing). Along with many bug fixes (and new bugs, of course), the driver added support for CUDA 2.2, which brings useful features for developers and accelerates GPU-assisted computations in some tasks, and it added Ambient Occlusion to some 3D games.

Ambient Occlusion

Ambient Occlusion (AO) is a global lighting (shadowing) model used in 3D graphics, which makes scenes look more realistic by calculating light intensity on object surfaces. The brightness value of each point on a surface depends on the mutual positions of other objects in the scene. More simply, Ambient Occlusion calculates how much a given surface is blocked from light sources by other objects, and how much diffuse light it gets.

The traditional light model calculates a surface color solely from its characteristics and light source properties. Objects in the light throw shadows, but they do not affect lighting conditions of the other objects in a scene. AO improves the traditional light model by calculating shadows from objects standing in the light. Here is an example:

These fragments show the effect from forced AO in Half-Life 2. The usual render mode with 'flat' lighting is on the left, Ambient Occlusion is on the right. When AO is enabled, angles between surfaces are shaded, as they get less light because of other objects. As a result, we get a more realistic image with more depth and volume. AO can be enabled in driver options.
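
To make the idea of "how much a surface point is blocked by other objects" more concrete, here is a minimal sketch in Python. It is not the driver's algorithm (the article does not describe that implementation); it is a generic Monte Carlo estimate that assumes a surface point with its normal pointing along +z and a scene made only of spheres. The function names and the test scene are hypothetical.

```python
import math
import random

def ray_hits_sphere(origin, direction, center, radius):
    """True if the ray origin + t*direction (t > 0, unit direction) hits the sphere."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False                      # the ray's line misses the sphere
    return -b + math.sqrt(disc) > 1e-6    # far root in front of the origin

def ambient_access(point, spheres, samples=2000, rng=None):
    """Fraction of hemisphere directions (around +z) not blocked by any sphere.

    1.0 means the point is fully open to ambient light; lower values mean
    more occlusion and therefore a darker shade.
    """
    rng = rng or random.Random(0)
    unblocked = 0
    for _ in range(samples):
        # Uniform direction on the upper hemisphere.
        z = rng.random()
        phi = 2.0 * math.pi * rng.random()
        r = math.sqrt(1.0 - z * z)
        d = (r * math.cos(phi), r * math.sin(phi), z)
        if not any(ray_hits_sphere(point, d, c, rad) for c, rad in spheres):
            unblocked += 1
    return unblocked / samples

# Hypothetical scene: one sphere hovering above a flat floor.
scene = [((0.0, 0.0, 1.5), 1.0)]
under_sphere = ambient_access((0.0, 0.0, 0.0), scene)
far_away = ambient_access((10.0, 0.0, 0.0), scene)
print(f"under the sphere: {under_sphere:.2f}, far away: {far_away:.2f}")
```

The point directly under the sphere receives noticeably less ambient light than the distant one, which is the darkening of corners and crevices visible in the screenshots. Casting many rays per point is also a hint at why the effect is expensive.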
This feature is supported by application profiles in NVIDIA Control Panel, as each game needs individual adjustments for the algorithm. As for now, AO is supported in 22 popular applications: Assassin's Creed, BioShock, Call of Duty 4, Call of Duty 5, Call Of Juarez, Company of Heroes, Counter-Strike Source, Dead Space, Devil May Cry 4, F.E.A.R. 2, Fallout 3, Far Cry 2, Half Life 2 (games from this series), Left 4 Dead, Lost Planet: Colonies and Extreme Condition, Mirror's Edge, Portal, Team Fortress 2, Unreal Tournament 3, World In Conflict and World of Warcraft.

The effect from AO is most noticeable in indoor scenes in the following games: Counter-Strike Source, Half Life 2, and Mirror's Edge. There is also a noticeable AO effect for grass and other vegetation in the open spaces in Company Of Heroes, World In Conflict, and World of Warcraft. The most technically advanced games, such as Crysis and STALKER: Clear Sky, support AO natively in their own code, so there is no need to enable this feature in the drivers for them.

It must be noted that AO does not make a big visual difference in many cases, but it may significantly reduce overall performance, as it's a compute-intensive algorithm. Performance drops from enabled AO may reach 20-50% depending on a given application, its graphics settings, and resolution. So it's up to you to decide whether you need this minor render quality enhancement or not.

NVIDIA PhysX

We cannot help mentioning the PhysX technology in a review of a graphics card from NVIDIA, especially as dozens of games support it, and last year NVIDIA already got three of the biggest game developers interested in this technology: Electronic Arts, 2K Games, and THQ. They have already promised to use it in their future projects. It's supported by the most popular engines and development tools: Unreal Engine 3, Gamebryo, SpeedTree, Natural Motion, etc. Besides, PhysX is a cross-platform technology as far as it's possible.
Along with PCs and all desktop consoles, it's also supported by iPhone. On a different level, of course.

Apart from already familiar games with PhysX support (GRAW 2, Warmonger, MKZ, Unreal Tournament III, Mirror's Edge, Cryostasis, etc), NVIDIA presents PhysX-capable games that have appeared or will appear in 2009: Star Tales, Shattered Horizon, Underwater Wars, Metro 2033. Patches with improved physics effects have already appeared for two games (Sacred 2: Fallen Angel and Cryostasis).

Sacred 2: Fallen Angel was released in autumn of last year, and since that time Ascaron has been working on a patch to add GPU-assisted physics effects to the game. Most changes have to do with various effects with many particles -- weather and spell effects. Here is an example.

All particles used in these effects (foliage and stones in this case) collide with the ground and other objects in a physically correct way, and they are also affected by wind. About 10000-20000 particles are simulated in each frame, so this job can be done at acceptable speed only by a GPU. According to NVIDIA, the speed of hardware accelerated physics computations grows more than fivefold.

Cryostasis uses such effects as dripping water and melting ice, physically correct particle systems (water, sparks, snow, dust), as well as cloth simulation (flags, clothes, etc). Besides, the April patch will update physics effects. As in the demo version, the game will have water simulated with particles and other GPU-accelerated physics effects.

But not by patches alone: new games with PhysX support will be released. One of the interesting titles with this support is Star Tales -- a social network game (whatever that means) from the Chinese developer QWD1. Judging by its description, Star Tales is sort of an online version of The Sims based on Unreal Engine 3 with hardware-accelerated PhysX. Characters in this game use cloth simulation, so their clothes look very realistic. Besides, the game uses particle systems.
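
The per-frame particle work described above for Sacred 2 (particles pushed by wind and colliding with the ground) can be illustrated with a small CPU-side sketch in Python. It does not use the PhysX API; the constants, the flat ground plane at y = 0, and the simple explicit Euler integration are all assumptions chosen for brevity.

```python
import random

GRAVITY = -9.81        # vertical acceleration, assumed units of m/s^2
WIND = (2.0, 0.0)      # hypothetical constant wind acceleration along (x, z)
RESTITUTION = 0.4      # hypothetical share of vertical speed kept after a bounce

def make_particles(count, rng):
    """Create particles at random positions above the ground, initially at rest."""
    return [
        {"x": rng.uniform(-5.0, 5.0), "y": rng.uniform(1.0, 10.0),
         "z": rng.uniform(-5.0, 5.0), "vx": 0.0, "vy": 0.0, "vz": 0.0}
        for _ in range(count)
    ]

def step(particles, dt):
    """Advance every particle by one explicit Euler step of length dt."""
    for p in particles:
        # Accelerations: wind acts horizontally, gravity vertically.
        p["vx"] += WIND[0] * dt
        p["vz"] += WIND[1] * dt
        p["vy"] += GRAVITY * dt
        # Move the particle.
        p["x"] += p["vx"] * dt
        p["y"] += p["vy"] * dt
        p["z"] += p["vz"] * dt
        # Collision with the ground plane y = 0: clamp and bounce.
        if p["y"] < 0.0:
            p["y"] = 0.0
            p["vy"] = -p["vy"] * RESTITUTION

# Simulate one second at 60 frames per second with 10000 particles.
particles = make_particles(10000, random.Random(1))
for _ in range(60):
    step(particles, 1.0 / 60.0)
print("lowest particle height:", min(p["y"] for p in particles))
```

Each particle is updated independently of the others, which is why this kind of workload maps well onto the many parallel stream processors of a GPU. A real engine would also test collisions against scene geometry, not just a flat plane.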
The company released a technical demo and a benchmark to evaluate these new features. The Star Tales benchmark uses several characters with cloth simulation, and the scene contains about 14000 vertices to imitate cloth physics. Falling petals (their collisions with characters and floor) also demonstrate physically correct behavior. It's a complex job for a CPU, so Star Tales uses a GPU to compute frames at a sufficient frame rate. According to NVIDIA, even GeForce GTS 250 provides rendering in high resolutions with top graphics settings and cloth simulation at a frame rate above 30 FPS, which cannot be said about powerful CPUs.

Among other games with PhysX support to be released this year, NVIDIA mentions Underwater Wars from Biart Studio (action, end of 2009), Metro 2033: Last Refuge from 4A Games (first-person shooter, end of 2009) and Shattered Horizon from Futuremark (first-person shooter). They are all interesting, of course, but we don't know that much about these projects.

Anyway, NVIDIA has an obvious market advantage, even though its competitor showed Havok demos using OpenCL for GPU acceleration at GDC. Even if games with this support appear, they will also work with NVIDIA GPUs (as they support OpenCL just fine), which will accelerate physics effects through Havok as well. This does not cancel NVIDIA's advantage in PhysX. That is, while GPUs from other manufacturers are limited to the Havok engine working with OpenCL, NVIDIA offers products supporting both popular physics engines: Havok with OpenCL and PhysX with CUDA. PhysX may eventually get support from other APIs for parallel computing, but it's a political issue rather than a technical one.

NVIDIA CUDA

GeForce GTX 275 supports CUDA just like any other modern card from NVIDIA. Much time has passed since the appearance of the first CUDA applications for common users, such as Badaboom (to transcode video). The market is finally stirring.
We can mention the following CUDA-applications that have been released recently:

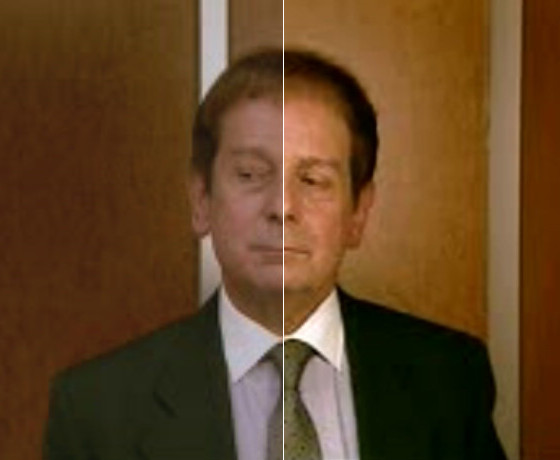

We already described some of the applications in our articles. Now let's examine some of the new programs.

MotionDSP vReveal is an application for processing video data. It improves the quality of low-bitrate video, such as online or mobile video clips. This is what it looks like (original video on the left, improved version on the right).

This picture hasn't been taken from advertising materials, where horrible video is magically turned into HD Video, almost like in blockbusters from Hollywood. That's what vReveal really does. As we can see, brightness and contrast changes have a strong effect on video quality. Besides, we can notice a minor resolution improvement (look at the collar and other lines). But these are not miracles.

It's a static example, and dynamic results are more impressive. Unlike usual post processing of each frame separately, this utility analyzes up to 30 frames before and after the current frame. This is done to stabilize the image and eliminate shaking, which is common in non-professional video recorded with small cameras without tripods. When vReveal uses only a CPU, it slows down almost fivefold. According to NVIDIA, even an inexpensive GeForce GTS 250 is twice as fast in this task as one of the fastest CPUs from Intel -- Core i7 920. We'll try to check this in our future articles.

Another interesting application is LoiLo SuperLoiloScope "Mars". It's a simple video editor supporting high resolutions (1920x1080). Its features: GPU-assisted GUI, hardware-assisted H.264 video decoding and encoding(!), hardware-assisted video processing, filters and effects. When these functions are executed by a GPU, they run ten times as fast as in the pure-CPU version.

GeForce 3D Vision

GeForce GTX 275 also supports GeForce 3D Vision. We already described this stereo imaging technology. Now we'll deal with several more features.
The GeForce 3D Vision kit includes the following items: wireless LCD shutter glasses, IR emitter (USB), nose pieces, cleaning cloth, CD with software, CD with demo programs, guides, and cables: VESA stereo, DVI-HDMI, USB.

The wireless glasses use a high-quality optical system with high resolution and wide viewing angles compared to passive glasses. The glasses use IR technology to transfer data, so they work well within six meters from the emitter. Battery life is up to 40 hours.

The key advantage of GeForce 3D Vision is its excellent compatibility with games. NVIDIA's stereo driver provides integrated support for stereo rendering in games, and the list includes over 350 such titles. The stereo drivers are based on the same code as the usual drivers, and stereo support in games is similar to SLI profiles with ready settings for each game. They also support 3D video players and stereo viewers.

By the way, support for external interfaces in GeForce GTX 275 does not differ from what we saw in previous models: GeForce GTX 260 and 285. The graphics card is equipped with two Dual Link DVIs supporting HDCP. You can use adapters to output video to the popular HDMI; audio in this case will be received from a sound card.

Windows 7 readiness

Probably for the lack of really interesting innovations in new solutions, both manufacturers mention their readiness for the upcoming Microsoft Windows 7. Both AMD and NVIDIA tell us about their tight cooperation with Microsoft, how well their drivers support this operating system, and what excellent feedback they get from Microsoft people. They even show diagrams where competing graphics cards demonstrate worse results than their own products.

It's too early to evaluate 3D performance in Windows 7, as this operating system hasn't been released yet. However, we can already discuss some of its features. It will be the first OS to support computing with CPUs and GPUs.
Windows 7 features an updated driver model WDDM 1.1 that accelerates 2D and 3D rendering, offers improved video memory management and higher performance. Aero interface in the new system supports DirectX 10 acceleration, plus new APIs to accelerate 2D graphics: Direct2D and DirectWrite.

But the most important GPU-related feature is DirectX Compute -- compute shaders in DirectX 11. DirectX Compute will bring hardware acceleration to image processing (various post filters), video processing (denoise, autocontrast, transcode, etc). As in case of NVIDIA CUDA, compute shaders will be used in games (physics effects, ray tracing, post processing, etc) as well as in technical and scientific computations.

NVIDIA already offers DirectX Compute demos: rendering an ocean expanse, particle systems, and N-body simulation. These demos use compute shader features in DX11, doing complex parallel computing (FFT, bitonic sorting, etc) with CUDA-compatible GPUs. Compute shader support is very important for the industry, because unlike CUDA, it's not tied to a single manufacturer, and programs will run on all compatible GPUs from different manufacturers.

Design

Pay attention to the comparison with the old GTX 260 (it's a fair one, as both GTX 260 and GTX 275 have a 448-bit memory bus). The fact is, the first GTX 260 cards were based on the GTX 280 design (512-bit), only the number of memory chips was decreased from 16 to 14, thus cutting the bus down to 448 bits and reducing memory volume to 896 MB. However, later on engineers designed a new PCB for the GTX 260 to reduce manufacturing costs. It was based on the 448-bit bus and had only 14 memory seats. This very PCB was used for the GTX 275. In fact, the only difference between GTX 260 and 275 is in their cores: the number of stream processors in the former is cut down, while the latter has 240 stream processors as in the GTX 285.

The cards from BFG and Zotac are actually copies of the reference design, so there is no point in discussing them. But the Palit product is very interesting. For one, it's the first card of this level (Hi-End) in our lab that is manufactured by an NVIDIA partner. Until quite recently, all such cards were manufactured by third-party plants by NVIDIA's orders. So all partners offered the same cards; only labels, frequencies, and bundles were different. But now NVIDIA sells not only ready cards, but also GPUs, and some vendors can make cards on their own.

Moreover, we can see on the photos that Palit uses a full-fledged 512-bit PCB for its GTX 275 (and most likely for the GTX 260), which was apparently designed for the GTX 285. It just lacks two memory chips, so the bus is automatically reduced to 448 bits, and memory volume is reduced from 1024MB to 896MB. Is it really cheaper to design a PCB with 16(!) memory chips on one side from scratch than to buy a ready solution from NVIDIA? I don't believe it. However, Palit would hardly have manufactured such products at a loss. So it must be cheaper this way.
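
The link between the number of memory chips, the bus width, and the memory volume is simple arithmetic, sketched below in Python. The per-chip figures (32 bits and 64 MB) are not quoted in the text; they are assumptions derived from the totals given above for the 16-chip design (512 bits and 1024 MB).

```python
# Per-chip figures are assumptions derived from the totals quoted in the text
# (a 512-bit bus and 1024 MB spread over 16 chips).
BITS_PER_CHIP = 512 // 16    # 32-bit interface per memory chip
MB_PER_CHIP = 1024 // 16     # 64 MB per memory chip

def memory_config(chips):
    """Return (bus width in bits, memory volume in MB) for a given chip count."""
    return chips * BITS_PER_CHIP, chips * MB_PER_CHIP

for chips in (16, 14):
    bus_bits, volume_mb = memory_config(chips)
    print(f"{chips} chips: {bus_bits}-bit bus, {volume_mb} MB")
```

Removing two chips from a 16-chip board takes away 64 bits of bus width and 128 MB of memory, which gives exactly the 448-bit bus and 896 MB of the GTX 260 and GTX 275.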
This card has a drawback: for some strange reason the SLI connectors were moved in comparison with the reference design, so it's impossible to use the card from Palit (based on this PCB) in an SLI configuration with other GTX 2xx solutions of the reference design. It can work only with identical counterparts from Palit. Otherwise you will have to find a flexible SLI bridge (these are very rare) that allows some offset between connectors.

I'll stress the important point once again: all cards are 270 mm long, just like the 8800 GTX/Ultra. So a PC case should be large enough to accommodate this device. Besides, the width of the housing does not change along the card, so a motherboard should have 30mm of empty space behind the used and the neighboring PCI-E x16 slots.

Graphics cards of this series feature soundcard connectors for transmitting an audio stream to HDMI (via a DVI-to-HDMI adapter). That is, the graphics card does not have an audio codec, but it receives the audio signal from an external sound card. So, if this feature is important to you, make sure the bundle contains a special audio cable.

Zotac and Palit cards have original TV-Out interfaces that require a special adapter to output video to a TV set via S-Video or RCA.

Maximum resolutions and frequencies:

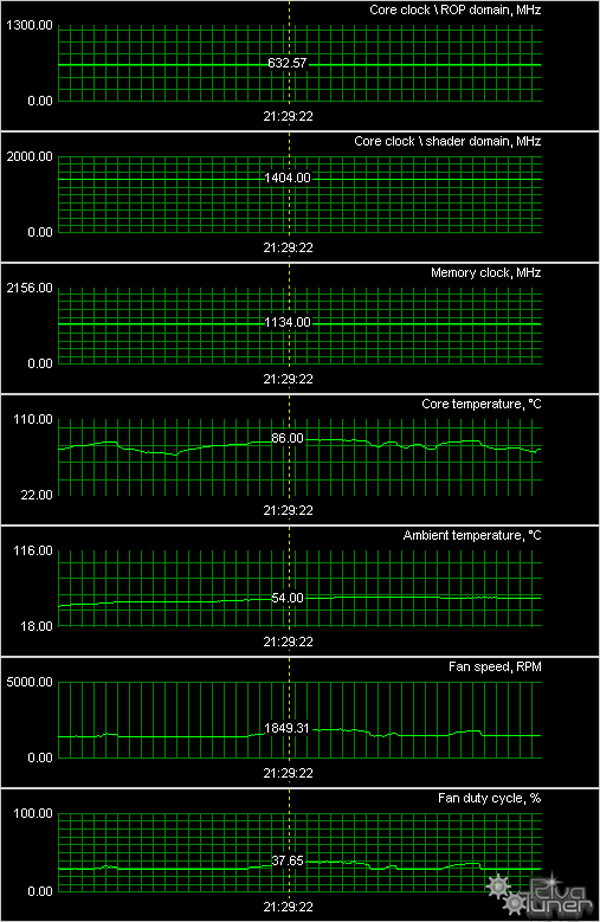

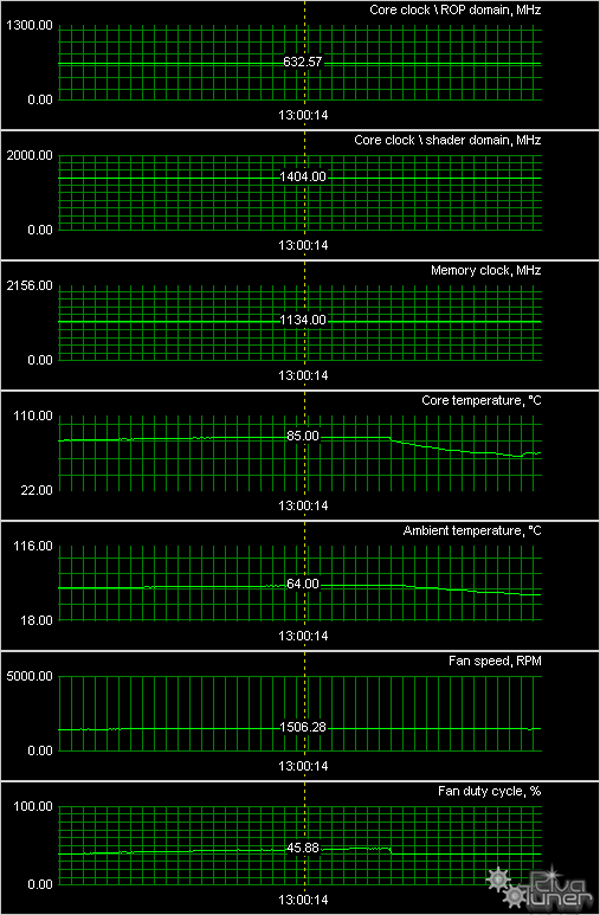

As for HDTV, a review is available here.

Cooling

As two cards have the same cooler, we'll examine the one on the reference card. The Palit card has a special cooler that will be examined separately.

The die was manufactured on week 52 of 2008, that is, at the very end of 2008. Not surprising, considering that it does not differ from the GTX 285 as far as the core is concerned.

We monitored temperatures using RivaTuner. As all these cards are just copies of the reference design and they operate at the same frequencies, we'll publish common results.

Palit GeForce GTX 275 896MB

NVIDIA GeForce GTX 275 896MB (reference)

BFG GeForce GTX 275 OC 896MB

Zotac GeForce GTX 275 AMP! Edition 896MB

As we can see, temperature grows proportionally to operating frequencies of the card. The maximum level is 93°C, which is a borderline case. It's not a problem for the card itself, as it can endure temperatures above 100°C, but it can be critical for the entire system unit. The reference cooler should be more efficient. This also applies to the Palit card.

Bundles

A basic bundle should include the following items: a user manual, a software CD, a DVI-to-VGA adapter, a DVI-to-HDMI adapter, a component output adapter (TV-Out), and external power splitters. Let's see what accessories are bundled with each card.

Packages

Performance in games

Testbed:

Benchmarks:

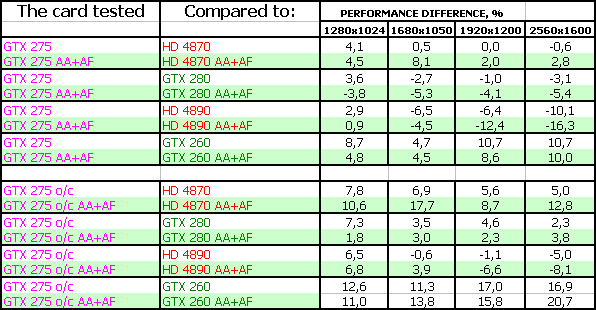

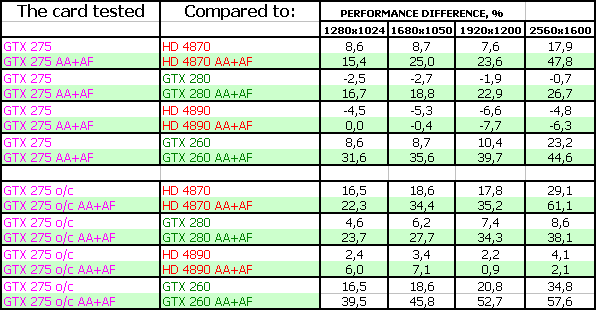

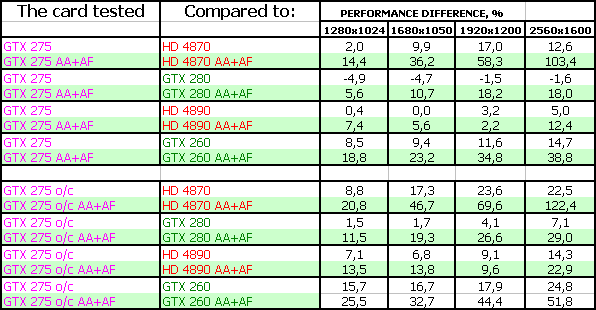

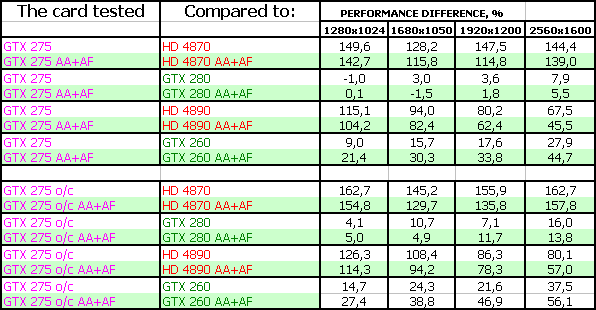

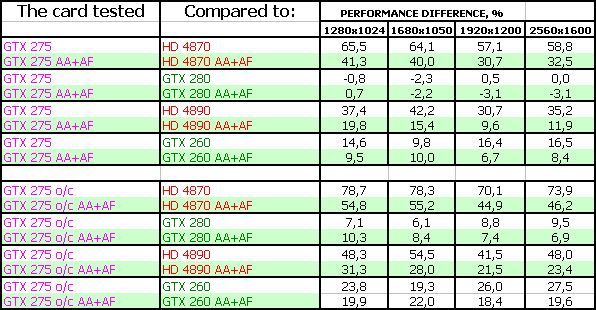

Note that performance charts are located on dedicated pages.

S.T.A.L.K.E.R. Clear Sky

Performance charts: S.T.A.L.K.E.R.

World In Conflict

Performance charts: World In Conflict

CRYSIS, Rescue, DX10, Very High

Performance charts: CRYSIS, Rescue, DX10, Very High

CRYSIS Warhead, Cargo, DX10, Very High

Performance charts: CRYSIS Warhead, Cargo, DX10, Very High

Far Cry 2

Company Of Heroes

3DMark Vantage, Graphics MARKS

Performance charts: 3DMark Vantage, Graphics MARKS

Devil May Cry 4, SCENE1

Performance charts: Devil May Cry 4, SCENE1

Lost Planet EC Colonies, SCENE1

Performance charts: Lost Planet EC Colonies, SCENE1

Conclusions

What have we got here? A mighty competitor to the new AMD RADEON HD 4890. Indeed, GTX 275 demonstrates excellent results, significantly outperforming its competitor in most tests. But will it have an adequate price? If the HD 4890 and GTX 275 have the same price tags, the choice will be easy -- GTX 275, even though it has less memory (896 MB is more than enough these days). But if the HD 4890 card is cheaper, lower temperatures and power consumption of the 4890 will outweigh advantages of the GTX 275. However, it will depend on personal preferences: some users will choose GTX 275 because of higher fps, despite its higher power consumption and heat release. Others will go for the product with lower operating temperatures. In fairness to the cooler installed on the GTX 275, it's quieter than the one on the 4890.

BFG GeForce GTX 275 OC 896MB is a reference card. There is nothing much to say about its design. It operates at slightly higher frequencies. So having test results of the reference GTX 275 as well as the card from Zotac, you can figure out the performance level of this BFG product. The manufacturer offers a 10-year warranty for this card after registration on its web site.

Zotac GeForce GTX 275 AMP! Edition 896MB is the same reference card, but it comes with a better bundle. Besides, it has much higher operating frequencies.
It's also backed by a 5-year warranty.

Palit GeForce GTX 275 896MB is designed and made by Palit itself. Despite the problem with offset SLI connectors, it's still a very interesting product with a quiet cooling system.

Did NVIDIA not foresee such a small performance difference between the 275 and 285 models? The GTX 275 is apparently killing its bigger brother, offering just slightly lower performance for much less money. The best example here is to compare the overclocked GTX 275 and 285 cards. That's when you feel this marketing mistake. However, the GTX 285 may be leaving the market soon, to be replaced with something like GTX 290 (or a dual-GPU solution like GTX 295, but operating at reduced frequencies, or a single-GPU 285 card operating at higher frequencies). But it's only our conjecture.

As for now, we can only state that the GTX 275 is a very good product. Competition with the 4890 card is very intense. It's very difficult to point out a leader here, because both products have pros and cons. We should mention one drawback of the 4890 that is not present in the GTX 275 -- quality of video drivers. With hand on heart, I can say that NVIDIA drivers are much more pleasant to deal with. In case of Catalyst, we always worry whether its installation will go smoothly or not (various problems come and go from version to version, including failures to detect cards, etc). That may be another argument in favor of the GTX 275.

We also got official information from NVIDIA about a very large run of GTX 275 cards, so we retract the previous comment about this card being a limited edition.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.