
Much time has passed since our previous analysis of video decoding performance and quality on ATI and NVIDIA video cards. Since then, ATI has been bought by AMD, and both companies have launched new video cards with improved support for video decoding. Driver support for hardware-assisted video decoding is also constantly evolving, so the situation with performance and quality may change several times a month. So we decided to test again how well AMD and NVIDIA GPUs cope with decoding and displaying video.

Playing high-bitrate HD video encoded in modern compression formats, such as H.264, WMV, and VC-1, places high demands on the system. There are no performance problems when you play video of any format in low resolutions. But even some new CPUs barely cope with software decoding of modern formats in high resolutions (1920x1080) at high bitrates.

The main load used to fall on the CPU, but modern video cards are taking over more and more video decoding and post-processing tasks. Modern video chips from AMD and NVIDIA contain dedicated units for accelerating video decoding and post-processing. The latest solutions offload the CPU almost completely, and one such card participates in our tests.

Hardware-assisted playback via DirectX Video Acceleration (DXVA) requires special decoders (for example, NVIDIA PureVideo Decoder or the CyberLink MPEG2 and H.264 video decoders) and players with DXVA support. We use the available DXVA decoders for hardware acceleration of MPEG2, WMV, VC-1, and H.264.

Video chips differ in their level of support for hardware-accelerated video decoding, depending on the video card model and its GPU. Some low-end solutions used to have limited support for hardware-assisted HD video decoding. But now the situation is changing at both AMD and NVIDIA: high-end GPUs of the R600 and G80 families do not support all the features offered by mid-range and low-end solutions.

In this review we compare the performance of three different video cards when decoding and playing video in various formats, from MPEG2 to VC-1.

Testbed Configuration, Software, and Settings

We used three video cards in our tests, based on GPUs from two main manufacturers:

The following drivers were installed: AMD CATALYST 7.4 for AMD cards under both operating systems, and ForceWare 158.22/158.43 for NVIDIA cards under Windows XP and Windows Vista. The Vista tests are necessary because the hardware-assisted video decoding features of G84, the GPU used in GeForce 8600 GTS, are currently available only on this operating system.

Unfortunately, we had no opportunity to test new AMD cards based on the latest GPUs of the RADEON HD 2000 family. But according to our colleagues, RADEON HD 2900 XT does not support all the features and does not differ much from the company's previous solutions in terms of hardware support for video playback.

Software (players, codecs):

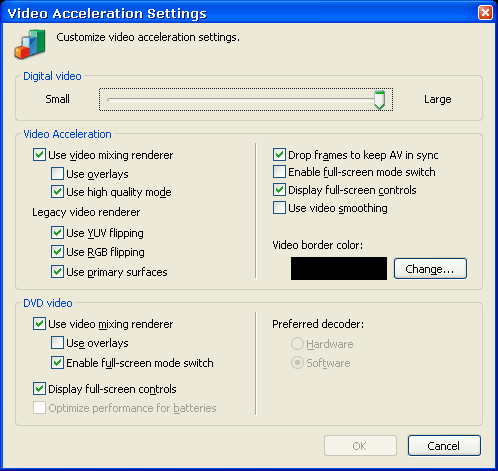

We chose the CyberLink decoders from PowerDVD 7.3 Ultra as the main decoders for MPEG2 and H.264. PowerDVD is one of the most popular players, along with WinDVD and Windows Media Player, and it supports hardware acceleration on all video cards, including the latest one, GeForce 8600 GTS. We enabled hardware acceleration in the PowerDVD settings and in the corresponding decoder ("Enable hardware acceleration" and "Use DXVA"). In AMD CATALYST Control Center we enabled "Pulldown Detection". In the NVIDIA ForceWare control panel we enabled "Use inverse telecine". The other options were left at their defaults.

You can see the key Windows Media Player 11 performance options on the screenshot. "High quality mode" in the WMP settings is responsible for using Video Mixing Renderer 9 (VMR9). Performance tests were run in 1920x1080.

We used Windows Media Video Decoder to decode WMV and VC-1 video. For H.264 video we used CyberLink 264 Decoder Filter and CoreAVC DirectShow Video Decoder. For MPEG2 we used NVIDIA Video Decoder and CyberLink Video/SP Filter.

Videos we used in our tests

Our test materials include video clips in all popular formats in two resolutions, 1280x720 and 1920x1080, plus a couple of non-standard resolutions. We decided to drop lower-resolution videos completely: if we ignore some quality issues related to adaptive deinterlacing for MPEG2, there are no problems with playing such video.

We run our tests in 1920x1080 with a special utility that uses the Windows Media Player 11 ActiveX component. It measures CPU load during playback and provides some other WMP11 statistics. We measured the average CPU usage while playing each video file. We also record such parameters as skipped frames per second and framerate, but these values are not reliable for some combinations of video clips and decoders, so they are difficult to use. The average CPU load is what we use to determine the performance of hardware-assisted video decoding on AMD and NVIDIA cards.

Keep in mind that several background processes are running during any test, so we should allow for small fluctuations in CPU load and a corresponding measurement error. In other words, a difference of ±3% does not indicate an advantage of one solution over another, because it may be caused by the measurement error.

Video Decoding Test Issues

It's not an easy task to run such tests, especially with relatively new video formats. Hardware acceleration does not always work ideally with all decoders and video types. For example, several developers of players and H.264 decoders announced support for hardware acceleration in their products. But these were promotional statements, because H.264 acceleration either didn't work or worked with serious problems. For example, some time ago PowerDVD 7.0 appeared with H.264 support (decoder version 1.6.0.1528). With this decoder, hardware acceleration could be enabled, but it did not actually work. Unfortunately, we used this very version in our previous article, so we got incorrect results.
Besides, acceleration may or may not work with different video card models, even from the same company. This especially concerns low-end and mid-range solutions of previous generations, such as GeForce 6600 and RADEON X1300. There were cases when acceleration kicked in on one card and failed to do so on another card after the same sequence of actions.

These are not the only problems with hardware-assisted decoding. For example, the CyberLink H.264 decoder in DXVA mode always disabled deblocking, which is noticeable on color gradients. It should also be noted that, depending on the drivers, some decoder versions worked in hardware-accelerated mode with video cards from one manufacturer and failed to work with cards from the other.

But that's not all. There are different types of video data: DVD, files, streaming video transmitted via satellite. There are lots of players for various formats, and universal decoders must work in different players developed for various video types. But some popular decoders (for example, Nero Video Decoder) work only with their own players, which makes them impossible to use in our test. We can mention three popular universal H.264 decoders: ffdshow, CoreAVC, and CyberLink. All of them work in most players with various video types. Hardware acceleration is supported only by CyberLink. CoreAVC is the fastest software decoder. And ffdshow is free and very popular.

Another difficulty with testing HD video playback is that the corresponding optical drives and discs are not widespread. For example, we have no opportunity to test Blu-ray and HD DVD and to output video to an HD monitor with HDCP. So we decided to content ourselves with video clips of various formats and bitrates, which reflect well the CPU and GPU loads you get when playing the above-mentioned discs, except for VC-1.

Along with the performance of solutions supporting Avivo and PureVideo, our last review touched upon video quality issues.
Tests of most formats show that there are no noticeable problems with quality. For example, H.264 decoders provide identical video quality. Only their brightness, saturation, and contrast settings and the lack of deblocking in hardware-accelerated mode may differ.

We planned to evaluate MPEG2 quality (the most problematic format in this respect) with HQV, a special test developed by Silicon Optix to analyze MPEG2 decoding quality. One of the biggest drawbacks of this test is its subjectivity, because it's up to the tester to judge video quality in each subtest by comparing the resulting image with the reference. But in our opinion, the biggest problem is that both GPU manufacturers have optimized their drivers for it. So they demonstrate close results that barely miss the maximum possible values. The difference in scores earned by the latest solutions from AMD and NVIDIA is very small, so there is little sense in this quality test. Anyway, we can say for sure that AMD and NVIDIA solutions with hardware support for MPEG2 decoding provide much better video quality than purely software decoding does.

Test Results

Let's proceed to the main point, the performance tests. Low-resolution MPEG2 playback hasn't been a problem for modern systems with powerful CPUs and GPU support for a long time. Decoding such video loads a CPU by 5-10% at most. That's why we focused on modern formats in this article: H.264, WMV9, and VC-1. MPEG2 is represented solely by high-bitrate HD video clips.

We'll start our tests with the most interesting format, H.264. The system was tested with two decoders: the all-software CoreAVC Pro, which has no hardware acceleration support, and CyberLink 264 Decoder Filter from PowerDVD 7.3 Ultra, which supports DXVA. We've also published the CPU load for the CyberLink H.264 decoder in software mode. Tests with hardware acceleration were run separately on Windows XP and Vista.
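The way the results are read (average CPU load per clip, with differences inside the ±3% measurement error treated as a tie) can be expressed as a minimal Python sketch. The function names are hypothetical and are not part of the test utility, and the ±3% margin is assumed here to mean percentage points of CPU load. The last helper reflects the reasoning used throughout the results: if CPU load with DXVA enabled is about the same as with software decoding, acceleration is probably not working.

```python
from statistics import mean

# Assumption: the article's ±3% margin is read as percentage points of CPU load.
TOLERANCE = 3.0


def average_cpu_load(samples):
    """Average a series of CPU-load readings (percent) taken during playback."""
    if not samples:
        raise ValueError("no CPU-load samples")
    return mean(samples)


def compare_loads(load_a, load_b, tolerance=TOLERANCE):
    """Return 'a' or 'b' for the meaningfully lower load, or 'tie' within the margin."""
    if abs(load_a - load_b) <= tolerance:
        return "tie"
    return "a" if load_a < load_b else "b"


def acceleration_seems_active(hw_load, sw_load, tolerance=TOLERANCE):
    """True if the DXVA run uses clearly less CPU than the software-decoding run."""
    return sw_load - hw_load > tolerance
```

For example, with illustrative (not measured) values, `compare_loads(8.0, 9.0)` yields a tie, while `acceleration_seems_active(51.0, 52.0)` is false, which is the pattern that suggests acceleration is not engaged.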
H.264

The first results show that software decoding of high-resolution H.264 seriously loads a powerful dual-core processor. There is no jerking and there are no skipped frames, of course, but the load on both cores exceeds 50%, and that's serious. The software CoreAVC decoder is a tad faster than CyberLink in these conditions, but the difference is not very big.

The CyberLink decoder works well with all video cards. CPU load with the G80 and R580 cards is a tad higher on Vista than on Windows XP. Perhaps that's the effect of the 3D interface in the new operating system from Microsoft. Hardware support for video decoding seems to work better in G80 than in R580, but the difference in CPU load is not very big.

The situation with NVIDIA G84 is much more interesting. It seems that acceleration does not work on Windows XP at all (CPU load is very similar to that with software decoding). But the card shoots forward on Vista and demonstrates unprecedented results. CPU load in this case is below 8%! Perhaps the new GPUs indeed have powerful dedicated units for video processing...

Unlike the first video fragment, the second H.264 file is progressive and does not use interlacing. Let's see if performance differs with different decoders and video cards this time.

Without deinterlacing, CPU load dropped in all modes except for the hardware-accelerated mode of G84 on Windows Vista. Whether it's 8% or 9%, the difference is not big and may be a result of measurement error. This time CoreAVC was more efficient than CyberLink in software mode. It almost caught up with the hardware-accelerated mode on the AMD video card.

Video decoding takes up more CPU resources on Vista again. The difference is more noticeable on NVIDIA G80; perhaps its drivers are not fine-tuned enough for the new operating system. G84 again demonstrates the same picture: no hardware acceleration on XP and efficient use of it on Vista. Now let's turn to another video fragment with a higher bitrate.
The situation is practically identical to the previous test, but CPU load is higher in all modes. Software decoding in CoreAVC is much more efficient than in CyberLink. GeForce 8600 GTS demonstrates its usual H.264 results, just 8% CPU usage, but only on Vista. The last thing to do in the H.264 section is to analyze the results for another video fragment with twice the bitrate.

We again see a similar situation. CoreAVC is a tad more efficient in software mode, Vista places slightly higher demands on CPU performance, and G84 demonstrates its standard result on the new OS, about 8% CPU load, while acceleration does not work on Windows XP at all. There are no major differences between G80 and R580, although the former copes with decoding a tad better.

Let's sum up H.264 decoding. The new mid-range solution from NVIDIA is the clear winner here, with one proviso: acceleration works correctly only on Windows Vista so far. GeForce 8800 GTX follows, offloading the CPU much less. The AMD card based on the previous-generation GPU is slower still. CoreAVC demonstrates good results, which are often similar to those of the hardware-accelerated modes on R580. And the software mode in CyberLink requires too much CPU resources.

MPEG2

Let's proceed to MPEG2, the oldest and most popular format among those we test here. We decided not to use video fragments in the popular DVD resolution, so we started right from 1920x1080. We made the task even more difficult by choosing video fragments with two fields (interlacing). To decode and play such video, decoders first of all need fast, high-quality post-processing (deinterlacing). Let's see how our contenders cope with it. We used the PowerDVD and PureVideo decoders. The former was tested on XP and Vista, and we also tested it in software mode.

This video fragment uses interlacing and a high bitrate of 18 Mbps. We can see that MPEG2 is too simple for modern CPUs and GPUs.
It's very easy for them to decode such a high-bitrate stream in 1920x1080. All hardware-accelerated modes demonstrated similar results, using approximately half as much CPU resources as software decoding with the DirectShow filter from CyberLink.

Interestingly, PureVideo is less efficient on NVIDIA cards than CyberLink. The situation is the opposite on the AMD card. That may be due to differences in post-processing quality. Both NVIDIA video cards support hardware acceleration of video decoding more efficiently than RADEON X1900 XT. Windows Vista again increases the CPU load in all modes, though not by much. Let's analyze the results obtained with the second MPEG2 video (progressive, the highest HD resolution, an even higher bitrate).

This video fragment was not tested on Vista. But the picture is clear anyway: results there would presumably be a tad worse than on Windows XP. So it becomes clear that the video fragments require a lot of decoding resources because of interlacing, not because of their bitrate. Video cards experience most problems with efficient deinterlacing. This time R580 and G80 demonstrate similar results, while G84 shoots forward. But all these results are excellent compared to software decoding.

MPEG2 conclusions are simple. There are no problems with performance here: CPU load with purely software algorithms does not exceed 20-30%, and this figure drops to a mere 10-15% when the hardware features of modern GPUs are used. NVIDIA GPUs cope with the task a tad better regardless of the decoder, although PureVideo may have quality advantages, which are outside the scope of this article. Both solutions from NVIDIA, G80 and G84, demonstrate a similar CPU load, while RADEON X1900 XT is slightly outscored. CPU performance was sufficient to decode, deinterlace, and display MPEG2 video in all cases, including the purely software decoding mode.

WMV9

Diagrams with WMV decoding test results will be simpler, because we used a single decoder from Microsoft.
It comes as part of Windows Media Player, so it's the most popular WMV decoder. Although ffdshow contains an alternative decoder, there is no point in using it in our tests. The first WMV HD video fragment has a non-standard resolution (1440x1080) and is encoded with an average bitrate of 8 Mbps.

As in the previous cases, the test video fragment does not require much CPU resources to decode. It takes up a tad more than a quarter of CPU time in purely software mode. With this video fragment, CPU load was a tad higher with the AMD video card than with GeForce 8800, but the difference is not very big. There are no problems with video playback in this case. But G84 does not support WMV decode acceleration on Windows XP. Let's have a look at the other video fragments. The next video has a higher bitrate and full resolution (1920x1080). Perhaps this material will produce different results.

So, the most complex WMV video fragment with a high bitrate takes up just a little more CPU resources. G84 results match the result obtained with software decoding. G80 accelerates WMV and reduces the CPU load by a factor of 1.5.

The AMD card slips up this time. Windows Media Player 11 (as well as our test utility that uses the corresponding ActiveX component) would crash when we played this video fragment with hardware acceleration enabled. This may be caused by bugs in the drivers, because the video plays well when DXVA is disabled. And now we'll analyze results for the last WMV video fragment (average bitrate 8 Mbps, 1440x816).

These results are more interesting. CPU load is almost identical in all modes. It looks like DXVA acceleration of WMV9 does not work on any of our video cards in this case for some strange reason (the non-standard resolution?). We have nothing to add.

Here are our conclusions on the WMV format. Our testbed copes well with all our video fragments in software mode, including 1080p materials with high bitrates.
There may be WMV video that requires a lot of resources to decode, but we have never seen any. GeForce 8800 GTX and RADEON X1900 XT demonstrate similar results. But the AMD video card failed to play one of the test fragments, probably because of a problem in this particular driver version or in our combination of PC components. In the other cases it's only insignificantly slower than GeForce 8800 GTX. As for GeForce 8600 GTS, its hardware acceleration of the WMV format does not work on Windows XP so far, and we are waiting for updated drivers.

VC-1

The WVC1 format, also known as Windows Media Video 9 Advanced Profile, corresponds to the "Advanced Profile" of the VC-1 standard. This format supports both progressive video and video with two fields. It is much more efficient than WMV, especially for encoding interlaced video. Unfortunately, we didn't find free high-bitrate VC-1 video fragments in 1920x1080, and we couldn't use Blu-ray or HD DVD, because we didn't have the necessary equipment. So we had to content ourselves with WVC1 data in 1280x720. We chose two video fragments with 60 frames (not fields) per second.

It looks like the native decoder from Microsoft or the AMD/NVIDIA drivers cannot use hardware acceleration to play WVC1 data. All video cards and the software decoding mode demonstrate practically identical results. They indicate the high requirements of VC-1 in such conditions: 1280x720 at 60 fps. Can you imagine what will happen in 1920x1080? Unfortunately, we don't have such video fragments. Let's have a look at the results obtained with the second video fragment.

That's it, the results are similar. So it's not hard to draw a conclusion about the WVC1 format: there is no hardware acceleration for this WMV format so far. Perhaps the CyberLink decoder can use hardware acceleration for such data from HD DVD and Blu-ray, but we haven't tested them for the above-mentioned reasons.
Conclusions

Having tested hardware acceleration for decoding video in various formats, we can say for sure that modern video cards can successfully accelerate video playback in most formats, including high-definition video with high bitrates, to say nothing of such old formats as DVD. The current level of software and hardware support makes it possible to decode any format in 1920x1080 without any performance problems.

Here is our general conclusion: the testbed coped with playback of all video fragments, but not all decoders and video cards demonstrated equal results. For example, CoreAVC sometimes successfully competes with the hardware-assisted CyberLink decoder on H.264 files. But the latter supports hardware acceleration, which is especially efficient with GeForce 8600 GTS on Windows Vista. MPEG2 decoders demonstrate similar results: both CyberLink and PureVideo use hardware acceleration efficiently to play MPEG2 videos.

The dual-core processor installed in the testbed copes well with playing videos of all popular formats, resolutions, and bitrates in software mode. It uses a tad more than half its resources in the peak case. So comparing video cards by their performance and CPU usage when decoding video makes little practical sense. It's useful only for old CPUs or when you want to reduce the power consumption of your media center.

Hardware-accelerated modes significantly offload the CPU. The NVIDIA G84-based video card copes with the H.264 format especially well (only on Vista so far), while GeForce 8800 GTX generally outperforms the previous-generation AMD solution, RADEON X1900 XT.

Quality conclusions will be very short. Analysis of this issue is outside the scope of this article, but we had some problems with RADEON X1900 XT. For example, when we tried to play MP10_Striker_10mbps.wmv with DXVA enabled, Windows Media Player 11 (wmplayer.exe) would crash with a run-time error. We also intended to use the high-bitrate MPEG2 video mallard.m2t, but the AMD solution had problems with its playback as well: we saw only several stripes of different colors. When DXVA is disabled, both video fragments play well, which indicates possible problems with the current AMD drivers.


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.