Using the following cards as examples:

BFG GeForce 9800 GTX 512MB PCI-E,

MSI GeForce 9800 GTX 512MB PCI-E (N9800GTX-T2D512),

Zotac GeForce 9800 GTX 512MB PCI-E

Why didn't the move from G80 to G92 change the marketing names of graphics cards from 8xxx to 9xxx? And why did the company launch 8800 GT/GTS in Autumn 2007 instead of 9800 GT/GTS (a choice that was bound to confuse users), if 9800 GTX differs from 8800 GTS 512 only by a small frequency increase?

Perhaps because NVIDIA minds are constantly busy with breakfasts, lunches, and dinners, just like the girls on the collage. None of the 1500 heads in the company could think ahead and come up with the right decision. Users were very confused by 8800 GTS in Autumn: they didn't understand why the card with 512 MB of memory was much faster than the card with 640 MB. Another puzzle was why NVIDIA didn't radically change the name after radically modifying the bus, video memory size, and the core. Then the company thought better of it, and the mid-range card with 64 processors got the name 9600 GT.

And now they have to explain to users again that 9800 GTX does not differ much from 8800 GTS 512: it's just overclocked. The core remains the same (G92), only in a new revision.

Part I: Theory and Architecture

After NVIDIA rolled out two graphics cards on G92 (GeForce 8800 GT and GeForce 8800 GTS 512MB), it became clear that the days of GeForce 8800 GTX (and of the Ultra, replaced with GeForce 9800 GX2) were numbered. The overhauled GeForce 8800 GTS often outperformed the GTX card and was slower only in the rare cases of insufficient memory bandwidth and/or size. Only then is the old GTX better than the 512 MB GTS.

In the process of renaming products to GeForce 9xxx, NVIDIA launched another graphics card based on G92 - GeForce 9800 GTX. It's the most powerful single-GPU graphics card, designed to replace GeForce 8800 GTX and, probably, GeForce 8800 GTS 512MB in the near future. At the moment, however, GeForce 9800 GTX stands between GeForce 9800 GX2 and GeForce 8800 GTS 512MB. NVIDIA notes that the new GeForce 9800 GTX is faster than GeForce 8800 GTX in most (the key word here) applications and settings. The reservation is there for a reason - in some cases performance may be limited by the smaller video memory size and lower bandwidth.

With deep regret we have to say that the theoretical part about the new product will be very short and not very interesting. Nothing has changed in this architecture for a long time, and it's similar to the old G80. G92 brought few changes, and they are all described in our previous articles. Moreover, as far as its characteristics are concerned, GeForce 9800 GTX is similar to GeForce 8800 GTS 512MB; it just operates at slightly higher frequencies.

In other words, the biggest difference is in memory bandwidth. There are also minor differences in fill rates and shader performance. That's it! It's all the more incomprehensible why NVIDIA first designed GeForce 8800 GTS 512MB to be so powerful that it almost catches up with the GTX model, and now launches a new GTX card that differs little from that GTS product, with a modified PCB and minor changes to clock rates. Perhaps NVIDIA just wants to revise the entire series, so that every existing GeForce 8800 card is replaced with a GeForce 9800 product (as 8800 GT is replaced by 9800 GT).

Before you read this article, you should study the baseline theoretical articles - DX Current, DX Next, and Longhorn. They describe various aspects of modern graphics cards and architectural peculiarities of products from NVIDIA and AMD.

These articles predicted the current situation with GPU architectures, and many of their assumptions about future solutions were confirmed. Detailed information about the unified architecture of NVIDIA G8x/G9x solutions can be found in the following articles:

As we mentioned in previous articles, G9x GPUs are based on the GeForce 8 (G8x) architecture and enjoy all its advantages: unified shader architecture, full support for the DirectX 10 API, high-quality anisotropic filtering, and CSAA with up to sixteen samples. The new GPUs feature improved units (TMU, ROP, PureVideo HD) and the 65-nm fabrication process, which made it possible to reduce manufacturing costs and raise operating frequencies:

GeForce 9800 GTX

- Codename: G92

- Fabrication process: 65 nm

- 754 million transistors

- Unified architecture with an array of common processors for streaming processing of vertices and pixels, as well as other data

- Hardware support for DirectX 10, including Shader Model 4.0, geometry generation, and stream output

- 256-bit memory bus, four independent 64-bit controllers

- Core clock: 675 MHz

- ALUs operate at more than double the core frequency (1.688 GHz)

- 128 scalar floating-point ALUs (integer and floating-point formats, support for FP32 according to IEEE 754, MAD+MUL without penalties)

- 64 texture address units, support for FP16 and FP32 components in textures

- 64 bilinear filtering units (as in G84 and G86: no free trilinear filtering, but more efficient anisotropic filtering)

- Dynamic branching in pixel and vertex shaders

- 4 wide ROPs (16 pixels) supporting antialiasing with up to 16 samples per pixel, including FP16 or FP32 frame buffer. Each unit consists of an array of flexibly configurable ALUs and is responsible for Z generation and comparison, MSAA, blending. Peak performance of the entire subsystem is up to 64 MSAA samples (+ 64 Z) per cycle, in Z only mode - 128 samples per cycle

- Multiple render targets (up to 8 buffers)

- All interfaces (2 x RAMDAC, 2 x Dual DVI, HDMI, HDTV) are integrated into the chip
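The peak figures implied by this list can be checked with simple arithmetic. The sketch below is only an illustration: the function names are ours, and it assumes that a MAD counts as two floating-point operations and the extra MUL as one, which the list itself does not state.

```python
# Figures taken from the G92 feature list above.
ALU_COUNT = 128
SHADER_CLOCK_GHZ = 1.688
CORE_CLOCK_MHZ = 675
MSAA_SAMPLES_PER_CLOCK = 64
Z_ONLY_SAMPLES_PER_CLOCK = 128

# Assumption (not stated in the article): MAD = 2 floating-point
# operations, the co-issued MUL = 1, so 3 per ALU per clock.
FLOPS_PER_ALU_PER_CLOCK = 3


def peak_gflops(alus, shader_clock_ghz, flops_per_clock=FLOPS_PER_ALU_PER_CLOCK):
    """Peak shader arithmetic rate in GFLOPS."""
    return alus * shader_clock_ghz * flops_per_clock


def shader_to_core_ratio(shader_clock_ghz, core_clock_mhz):
    """How many times faster the ALUs are clocked than the core."""
    return shader_clock_ghz * 1000 / core_clock_mhz


def gsamples_per_second(samples_per_clock, core_clock_mhz):
    """Peak ROP sample rate in billions of samples per second."""
    return samples_per_clock * core_clock_mhz / 1000


if __name__ == "__main__":
    print(f"Shader peak: {peak_gflops(ALU_COUNT, SHADER_CLOCK_GHZ):.1f} GFLOPS")
    print(f"Shader/core clock ratio: {shader_to_core_ratio(SHADER_CLOCK_GHZ, CORE_CLOCK_MHZ):.2f}")
    print(f"MSAA: {gsamples_per_second(MSAA_SAMPLES_PER_CLOCK, CORE_CLOCK_MHZ):.1f} Gsamples/s")
    print(f"Z only: {gsamples_per_second(Z_ONLY_SAMPLES_PER_CLOCK, CORE_CLOCK_MHZ):.1f} Gsamples/s")
```

These are theoretical ceilings only; real shaders rarely keep the extra MUL busy all the time.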

Reference GeForce 9800 GTX Specifications

- Core clock: 675 MHz

- Frequency of unified processors: 1688 MHz

- Unified processors: 128

- 64 texture units, 16 blending units

- Effective memory frequency: 2.2 GHz (2*1100 MHz)

- Memory type: GDDR3

- Memory: 512 MB

- Memory bandwidth: 70.4 GB/sec.

- Maximum theoretical fill rate: 10.8 gigapixels per second

- Theoretical texture sampling rate: up to 43.2 gigatexels per second

- 2 x DVI-I Dual Link, 2560x1600 video output

- Double SLI connector

- PCI Express 2.0

- TV-Out, HDTV-Out

- Power consumption: up to 156 W

- Dual-slot design

- Recommended price: $299-$349
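The bandwidth, fill rate, and texture rate in this list follow directly from the bus width, unit counts, and clocks quoted in the article. Here is a minimal sketch of that arithmetic; the helper names are ours, chosen for illustration.

```python
def memory_bandwidth_gb_s(bus_width_bits, effective_clock_mhz):
    """Peak memory bandwidth: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_clock_mhz / 1000


def fill_rate_gpixels_s(blending_units, core_clock_mhz):
    """Peak fill rate: one pixel per blending unit per core clock."""
    return blending_units * core_clock_mhz / 1000


def texel_rate_gtexels_s(texture_units, core_clock_mhz):
    """Peak texture sampling rate: one texel per texture unit per core clock."""
    return texture_units * core_clock_mhz / 1000


if __name__ == "__main__":
    # 256-bit bus, 2200 MHz effective GDDR3, 16 blending units,
    # 64 texture units, 675 MHz core: all from the lists above.
    print(f"{memory_bandwidth_gb_s(256, 2200):.1f} GB/s")
    print(f"{fill_rate_gpixels_s(16, 675):.1f} Gpixels/s")
    print(f"{texel_rate_gtexels_s(64, 675):.1f} Gtexels/s")
```

The results match the 70.4 GB/s, 10.8 gigapixels, and 43.2 gigatexels per second given in the specifications.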

So, there is nothing interesting again. As the specifications show, the "new" G92-based card differs from GeForce 8800 GTS 512MB in the frequencies of the GPU and shader units. Even though the new solution has higher memory bandwidth, it's still lower than that of the old GeForce 8800 GTX. Besides, its memory size is smaller. That may be the reason why GeForce 9800 GTX is slower than the old GTX in some modern games under certain conditions.

I repeat that 512 MB of video memory is (according to our recent research) sufficient for most modern games at present. But judging by the latest games, such as Crysis, this memory size may still be insufficient for top graphics cards operating in high resolutions. In such cases GeForce 9800 GTX will be outperformed by GeForce 8800 GTX with its 768 MB of video memory and higher bandwidth.

We can understand why the dual-GPU graphics card does not use 2 x 1024 MB of video memory - it would have been too expensive. But why the new fastest single-GPU card is not equipped with 1 GB is a mystery. On the other hand, have a look at the recommended price - it's not a top one. It may be a trial product launched before the real top card with the new architecture, which should take up the top position in the series. As for now, we've got GeForce 9800 GX2 with 512 MB per GPU.

GeForce 9800 GTX has two 6-pin PCI-E power connectors. The graphics card won't work if you plug in only one power cable. The PSU must also have sufficient capacity. A single GeForce 9800 GTX should be used with a power supply unit of at least 450 W that can provide no less than 24 A on the 12 V lines. As for SLI, you should use a 750 W PSU, and 1000 W for 3-way SLI.
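A rough power budget helps put these requirements in context. The sketch below assumes the usual PCI Express limits of 75 W from the x16 slot and 75 W per 6-pin connector; those two limits and the function names are our assumptions, not figures from NVIDIA's requirements.

```python
# Assumed PCI Express power limits (not quoted in this article).
SLOT_POWER_W = 75
SIX_PIN_POWER_W = 75

# Figures from the article.
CARD_MAX_POWER_W = 156
RECOMMENDED_12V_AMPS = 24


def board_power_budget_w(six_pin_connectors):
    """Power available to the card from the slot plus its 6-pin cables."""
    return SLOT_POWER_W + SIX_PIN_POWER_W * six_pin_connectors


def rail_power_w(amps, volts=12.0):
    """Power delivered by a supply rail at the given current."""
    return amps * volts


if __name__ == "__main__":
    print("Budget with one cable:", board_power_budget_w(1), "W")
    print("Budget with two cables:", board_power_budget_w(2), "W")
    print("Card maximum:", CARD_MAX_POWER_W, "W")
    print("12 V capacity at 24 A:", rail_power_w(RECOMMENDED_12V_AMPS), "W")
```

Under these assumptions a single cable gives a budget of 150 W, below the card's 156 W maximum, while two cables give 225 W. The 24 A recommendation corresponds to 288 W on the 12 V lines, which leaves headroom for the CPU and other components.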

Architecture

We are forced to repeat ourselves again, because G92 hasn't changed. The G9x architecture was announced in Autumn last year, but since it's just a slightly modified G8x, it actually dates back to 2006. The main difference between G92 and the old G80 is the 65-nm fabrication process used in the former. It reduces the cost of complex GPUs, lowers their power consumption and heat release, and raises their operating frequencies. Both GPUs have the same number of ALUs and TMUs. Another significant difference is the 256-bit memory bus of the new GPU versus the 384-bit one in G80, as we have already mentioned.

As we wrote in articles about GeForce 8800 GT and 8800 GTS 512MB, G92 is a modified G80 GPU, upgraded to a new fabrication process with some architectural changes: fewer ROPs and improved TMUs, as well as a new compression algorithm in ROPs (more efficient by 15%). Everything has been described in detail in our previous articles. Here is the basic diagram of G92:

I'll repeat one more time that in most cases the 64 texture units in GeForce 9800 GTX won't be faster in real applications than the 32 units in GeForce 8800 GTX/Ultra. Applications usually use trilinear and/or anisotropic filtering, and in this case TMU performance will be the same, clock rates aside. But when non-filtered samples are used, G92-based cards will be faster.
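This point can be illustrated with a simplified per-clock model in which a TMU block is limited either by its address units or by its bilinear filtering units. The model and its names are ours; it assumes 32 address and 64 filtering units for G80 (the filtering unit count is not given in this article) and treats trilinear filtering as two bilinear passes per texel.

```python
def texels_per_clock(address_units, filter_units, bilinear_passes):
    """Texels delivered per clock by a TMU block in a simplified model.

    bilinear_passes: 0 for unfiltered samples, 1 for bilinear,
    2 for trilinear, more for anisotropic filtering.
    """
    if bilinear_passes == 0:
        # Unfiltered samples are limited by address units only.
        return address_units
    return min(address_units, filter_units // bilinear_passes)


# G92 figures come from the feature list; G80 figures are an assumption.
G92 = {"address_units": 64, "filter_units": 64}
G80 = {"address_units": 32, "filter_units": 64}

if __name__ == "__main__":
    for name, passes in [("unfiltered", 0), ("bilinear", 1), ("trilinear", 2)]:
        g92 = texels_per_clock(bilinear_passes=passes, **G92)
        g80 = texels_per_clock(bilinear_passes=passes, **G80)
        print(f"{name}: G92 {g92} vs G80 {g80} texels per clock")
```

In this model the two GPUs are equal from trilinear filtering upwards, and G92 is ahead only for unfiltered and bilinear samples. Clock rates are deliberately left out, as in the paragraph above.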

3-Way SLI

Unlike the dual-GPU GeForce 9800 GX2, two of which can work in Quad SLI mode, three GeForce 9800 GTX cards can form the so-called 3-Way SLI. This feature is supported by motherboards on NVIDIA nForce 680i, 780i, and 780a. For this purpose each graphics card has two SLI connectors, which are used to connect the cards with a special triple bridge:

The first implementations of 3-Way SLI used Alternate Frame Rendering (AFR), which is preferable to SFR in all modern games that use complex shaders, multi-pass rendering, and complex post processing. In this case three frames are processed simultaneously, and the frame rate grows almost linearly with geometry, texture, and shader performance. There are fewer problems with compatibility as well.

According to NVIDIA, 3-Way SLI built with three GeForce 9800 GTX cards increases the frame rate by 130-150% versus the single graphics card. It goes without saying that the company means high resolutions, maximum graphics quality settings with enabled antialiasing and anisotropic filtering. The difference will be smaller in other conditions. But it will be good for heavy applications, like Crysis.
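To put NVIDIA's numbers in perspective, a gain of 130-150% can be converted into a speedup factor and compared with the ideal threefold scaling. This is a small sketch of that arithmetic; the function names are illustrative.

```python
def speedup_from_gain(gain_percent):
    """Convert 'frame rate increased by X%' into a speedup factor."""
    return 1 + gain_percent / 100


def scaling_efficiency(speedup, gpu_count):
    """Share of ideal linear scaling actually achieved."""
    return speedup / gpu_count


if __name__ == "__main__":
    for gain in (130, 150):
        speedup = speedup_from_gain(gain)
        efficiency = scaling_efficiency(speedup, gpu_count=3)
        print(f"+{gain}%: {speedup:.1f}x a single card, "
              f"{efficiency:.0%} of ideal 3x scaling")
```

So even by the vendor's own figures, three cards deliver 2.3 to 2.5 times the performance of one, that is, roughly 77-83% of ideal scaling.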

There is no getting away from AFR problems, of course. We've already mentioned latencies introduced by AFR (to be more exact, they are not reduced as FPS seems to grow). They are practically unnoticeable in a dual-GPU system. However, you may already notice them in a 3-Way SLI system, because the frame rate grows, but the latencies are not reduced. Games seem to run smoother than on a single-GPU system. But it's almost just as uncomfortable to play, as when a single GPU fails to provide the average frame rate of at least 30 fps in the same conditions.
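The effect can be shown with a deliberately simplified model of AFR: each GPU still needs the full single-GPU time to render its frame, so adding GPUs raises the frame rate but leaves the time from frame start to display unchanged. The 20 fps baseline and the 80% scaling efficiency below are illustrative assumptions, not measurements.

```python
def afr_frame_rate(single_gpu_fps, gpu_count, efficiency=1.0):
    """Frame rate of an AFR system built from identical GPUs."""
    return single_gpu_fps * gpu_count * efficiency


def render_latency_ms(single_gpu_fps):
    """Time one GPU spends on one frame; AFR does not shorten it."""
    return 1000 / single_gpu_fps


if __name__ == "__main__":
    single_fps = 20  # hypothetical heavy scene on one card
    fps = afr_frame_rate(single_fps, gpu_count=3, efficiency=0.8)
    latency = render_latency_ms(single_fps)
    print(f"3-Way SLI: {fps:.0f} fps, but each frame still takes "
          f"{latency:.0f} ms to render")
```

In this example the counter shows 48 fps, yet every frame is still about 50 ms old when it appears, just as at 20 fps on a single GPU. The model ignores driver queuing, which can only add to the delay.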

HybridPower

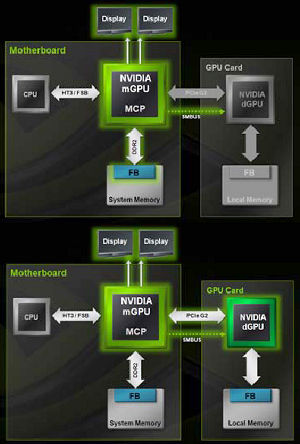

GeForce 9800 GTX also supports one of the two main features of the Hybrid SLI technology - HybridPower. This technology automatically switches rendering between the external graphics card and the GeForce core integrated into the chipset, depending on the load. This feature works only with motherboards supporting HybridPower. These are its two main modes:

The diagram shows the two HybridPower modes of a Hybrid SLI system. The first mode is intended for everyday usage and for hardware-assisted video playback. In this case, the system uses resources of the integrated graphics core, while the installed graphics card may be disabled. The second mode is used for 3D applications that actively use features of the installed graphics card. In this case the card works in high gear.

When HybridPower is enabled, video output from an external graphics card is routed to the integrated graphics core and fed to the video-out on the rear panel of the motherboard. So you can use integrated and discrete graphics with the same physical connector. In everyday applications HybridPower disables discrete graphics, conserving power and reducing noise generated by GPU coolers. But when you need the entire 3D capacity of the installed graphics card, it's powered up and it starts its usual hot and noisy job.
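The switching logic described here can be summarized in a few lines. This is a purely hypothetical model written for illustration: the names below are ours and do not correspond to any NVIDIA driver interface.

```python
from enum import Enum


class Renderer(Enum):
    INTEGRATED = "integrated graphics core"
    DISCRETE = "discrete graphics card"


def select_renderer(needs_3d_performance, hybrid_power_supported):
    """Pick the active renderer the way the article describes HybridPower."""
    if not hybrid_power_supported:
        # Without a HybridPower motherboard the discrete card does all the work.
        return Renderer.DISCRETE
    return Renderer.DISCRETE if needs_3d_performance else Renderer.INTEGRATED


def discrete_card_powered(renderer):
    """The discrete card is powered only while it is the active renderer."""
    return renderer is Renderer.DISCRETE


if __name__ == "__main__":
    for workload, heavy in [("video playback", False), ("3D game", True)]:
        active = select_renderer(heavy, hybrid_power_supported=True)
        print(f"{workload}: {active.value}, "
              f"discrete card powered: {discrete_card_powered(active)}")
```

In both modes the picture leaves through the motherboard's video output, so only the source of the rendered frames changes.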

PureVideo HD

Like all other G9x graphics cards, GeForce 9800 GTX benefits from all software improvements in PureVideo HD that appeared in new driver versions starting from ForceWare 174 and were first added for GeForce 9600 GT. The most important innovations in PureVideo HD include dual-stream decoding and dynamic adjustment of contrast and saturation.

Another interesting new feature in the latest version of PureVideo HD is support for Aero interface in Windows Vista during hardware accelerated video playback in a window. This was not possible in previous versions. We covered it all in our GeForce 9600 GT review.

Support for external interfaces

Reference GeForce 9800 GTX cards come with two Dual Link DVI outputs with HDCP support and one HDTV-Out. Instead of dedicated HDMI and DisplayPort connectors, the card relies on DVI-to-HDMI and DVI-to-DisplayPort adapters respectively.

So, we've covered theoretical aspects of the overhauled solution, GeForce 9800 GTX. The next part of this review will analyze synthetic tests to determine weaknesses and fortes of the graphics card. We'll find out how this card performs relative to other models, as well as its only competitor from AMD.
