
Once upon a time there lived... a user. A common user. OK, he might already have been a-d-v-a-n-c-e-d, because he was experienced with computers. He liked to play games, especially RTS. He scorned the appearance of 3D accelerators: "Who needs to pay extra money for game decorations?" Days turned to weeks and months. Glide was calling, post-processing was luring, texturing and raster units were bewitching... The user got used to it... so much so that he got addicted to 3D. He knew that if a game bore the 3dfx label, it must be cool. You could see another world behind the display; it was not flat anymore. And then came Unreal... The user got into it up to his neck... Deeper and deeper... 128×128 16-bit textures were not enough anymore...

A new philosophy came to replace the old one: Rivism! Yeah, only Riva... Onward to better graphics... You all know what happened later. Riva morphed into GeForce. Voodoo went bankrupt (no one came to its shrines anymore to leave money there). RAGE died of shame for its bilinear filtering and changed its name to RADEON, a star name no one had heard of. Savage was caught, tied up, and thrown into the VIA reservation, where it lives at VIA's expense... Matrox... That's a real tragedy. The Millennium was over, as was Voodoo, and no one was attracted by the famous name anymore... Matrox exerted itself to overcome its competitors... and gave birth to Parhelia. The two suns were dull and artificial; only people in Montreal liked them.

And then came shaders... Yep, at first programmers drew triangles on a white sheet, painted them, and told users about photorealism. Everything was simple before shaders: programmers used a slide rule (the work was done by transistors in GPUs; NVIDIA, ATI, and 3dfx had special departments to equip all transistors with slide rules) to multiply, divide, and take mean values. All of it served to get a color number to paint a pixel with. But the number of transistors had to be GREATER than the number of pixels. That's all. If it was the other way round, the transistors needed a second pass... With a break, of course, because even such realism was very slow. On the other hand, it still yielded 35fps, and all users were happy.

OK... shaders... Clever Microsoft said: enough! Enough of the stone age. 3D graphics should be processed in a different way. Transistors should learn to use a programmable calculator instead of a slide rule. Just enter several operations at once and get the result. It's much more flexible and easier for developers. The ball started rolling: SM 1.0, 1.1, 1.3, 1.4 (at this stage the Canadian company declared that SM 1.4 would be used only by itself, for nice demos). There was no stopping Microsoft: SM 2.0. That's where NVIDIA fizzled out, as it was carrying the dead body of 3dfx... It was too heavy... Before abandoning it, the company picked its pockets for valuable specialists, who successfully... flunked the launch of GeForce FX... Chasing after ATI, NVIDIA carelessly attached the old bus and equipped its product with a "vacuum cleaner" to avoid overheating... To make a long story short, for two years the Californian company generated cheerful reports about technological advances, while its engineers kept a low profile... They were designing SM 3.0! Microsoft was there too, of course. So the combined efforts (spurts from NVIDIA and a steady scattering of rakes from ATI) brought both companies to the same level... Neither the 6800 Ultra nor the RADEON X800 XT was to be found... They were hidden so well that gamers offered lots of money for such a miracle. A year and a half earlier they would have fainted at a $500 price tag. And now they were ready to part with $600, to turn their computers into ovens, ruin their PSUs, and make graphics stunning. FarCry was released, you know... True gamers will understand...

But that's nothing. Shaders were divided: they could either compute vertices or paint pixels. There was no flexibility. Yep, flexibility again; it's always in short supply. There is no such thing as too much memory in a system unit, and there is no such thing as too much flexibility for game developers. So... a new solution came from California, with unified shaders that could do everything, where each transistor was equipped with its own PDA, smartphone, and notebook. Besides, there were a great many of them... The core grew in size, but it still worked and remained manageable. Now the driver evaluates a game and distributes roles. But this miracle with 128 processors has already been reviewed: it's the GeForce 8800 GTX. But we know that the new series from NVIDIA consists of two cards now:

The second product is reviewed here. By the way, it's already available in stores, so we reviewed production-line cards from two manufacturers. I have already mentioned them, so let's proceed straight to the examination.

Video Cards

We can see that the main difference between the 8800GTS and the GTX is the power supply section of the board: the two differ in length, so the cards' sizes differ as well. The left parts of the PCBs are absolutely identical. In fact, the GTS PCB is laid out for the same 384-bit bus; it just lacks two memory chips, which accounts for the difference in bus width and local video memory. Pay attention to the PCB length: it's 220mm again instead of 270mm as in the GTX. The GTX could cause problems because of its length: that card has grown by 5cm, so it does not fit into some PC cases.

The photos also show that the GTS PCB requires ONE 6-pin PCI-E cable from a PSU instead of two. But the PSU requirements have not changed much: you need an (honest) 400W PSU whose 12V rail supports at least 20A. By the way, the card itself consumes approximately 130W under 3D load and about 90W in other cases.

The cards have TV-Out with a unique jack. You will need a special adapter (usually shipped with a card) to output video to a TV set via S-Video or RCA. You can read about TV-Out here.

The cards are equipped with a couple of DVI ports. They are dual-link DVI, which allows 1600×1200 or higher resolutions via the digital channel. Analog monitors with a D-Sub (VGA) interface are connected via special DVI-to-D-Sub adapters. Maximum resolutions and frequencies:

We should mention the core clock rates. We found out previously that raising the ROP frequency raises the frequency of the shader part proportionally, NOT BY THE SAME DELTA! That is:
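To make the distinction concrete, here is a minimal Python sketch contrasting the two scaling rules. The 500 MHz core and 1200 MHz shader base clocks are the reference 8800 GTS values as we understand them and are an assumption here, not figures taken from our measurements; the function names are made up for illustration, and real hardware may additionally snap the shader clock to discrete steps.

```python
# Assumed reference clocks of the 8800 GTS in MHz (not taken from this review).
BASE_CORE_MHZ = 500
BASE_SHADER_MHZ = 1200


def scale_proportionally(base_core, base_shader, new_core):
    """Shader clock keeps the same ratio to the core (ROP) clock."""
    return base_shader * new_core / base_core


def scale_by_delta(base_core, base_shader, new_core):
    """Shader clock rises by the same number of MHz as the core clock."""
    return base_shader + (new_core - base_core)


if __name__ == "__main__":
    for new_core in (550, 600, 640):
        prop = scale_proportionally(BASE_CORE_MHZ, BASE_SHADER_MHZ, new_core)
        delta = scale_by_delta(BASE_CORE_MHZ, BASE_SHADER_MHZ, new_core)
        print(f"core {new_core} MHz: proportional {prop:.0f} MHz, "
              f"same delta {delta} MHz")
```

Under these assumptions, a 640 MHz core clock gives a 1536 MHz shader clock with proportional scaling, against only 1340 MHz if the shader clock rose by the same delta.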

As for the products from BFG and XFX, they behave identically here. They differ only in their bundles and the stickers on their coolers. We have already mentioned the cooling system: the GTS cooler is similar to that of the 8800GTX, only a tad shorter. I'll just remind you of its peculiarities and quote a fragment of the 8800GTX review.

Now have a look at the processor.

8800 GTS: this G80 was manufactured in Week 37, 2006. A smear of paint on the cap probably marks a GPU with locked processors.

NVIDIA NVIO

You can see that there are practically no visual differences between the GTS and the GTX, which was to be expected. Remember that graphics output is now handled by a separate chip, NVIO. It's a special chip that incorporates the RAMDAC and TMDS transmitters. That is, the units responsible for outputting graphics to monitors or TVs were removed from the G80 and implemented as a separate chip. It's a reasonable solution, because the RAMDAC won't be affected by interference from the shader unit operating at a very high frequency. The same chip is also used for HDCP support.

Bundle.

Box.

Installation and Drivers

Testbed configuration:

VSync is disabled.

The latest RivaTuner beta, written by Alexei Nikolaychuk, now supports this accelerator.

We overclocked both cards, but the BFG card did better: 640/2000 MHz! It demonstrated superb results. By the way, the test results published below show that the GTS catches up with its top brother at these frequencies. I want to remind you again that overclocking results depend on the given sample; no one can guarantee that your sample will work at the same frequencies.
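For a sense of scale, the sketch below works out what 640/2000 MHz means relative to stock. It assumes that the pair reads as core clock / effective memory clock, that a stock GTS runs at 500/1600 MHz, and that the GTS memory bus is 320 bits wide; the helper names are hypothetical.

```python
def percent_gain(stock, overclocked):
    """Relative increase of a clock over its stock value, in percent."""
    return (overclocked - stock) * 100 / stock


def memory_bandwidth_gb_s(bus_width_bits, effective_mhz):
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * effective_mhz / 1000


if __name__ == "__main__":
    # Assumed stock clocks (MHz) and bus width (bits); see the text above.
    stock_core, stock_mem, bus_bits = 500, 1600, 320
    oc_core, oc_mem = 640, 2000

    print(f"core:   +{percent_gain(stock_core, oc_core):.0f}%")
    print(f"memory: +{percent_gain(stock_mem, oc_mem):.0f}%")
    print(f"bandwidth: {memory_bandwidth_gb_s(bus_bits, stock_mem):.1f} GB/s "
          f"-> {memory_bandwidth_gb_s(bus_bits, oc_mem):.1f} GB/s")
```

With those assumptions the overclock amounts to roughly +28% on the core and +25% on the memory, lifting peak memory bandwidth from 64 GB/s to 80 GB/s.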

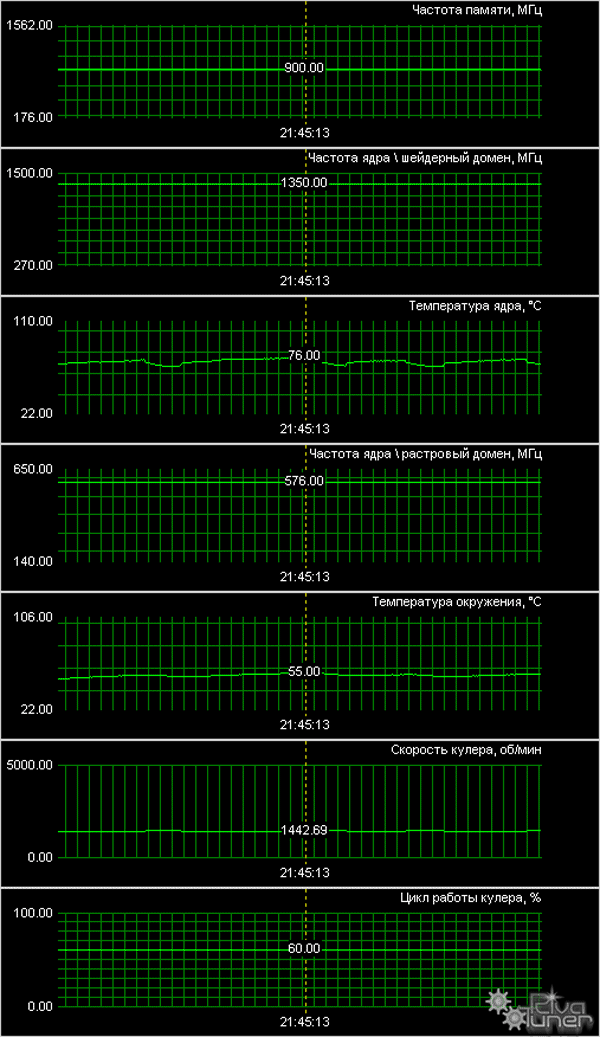

BFG GeForce 8800 GTS 640MB PCI-E

The first screenshot shows how the card performs at the standard frequencies. The second screenshot shows its performance at increased frequencies, equal to the operating frequencies of the 8800GTX.

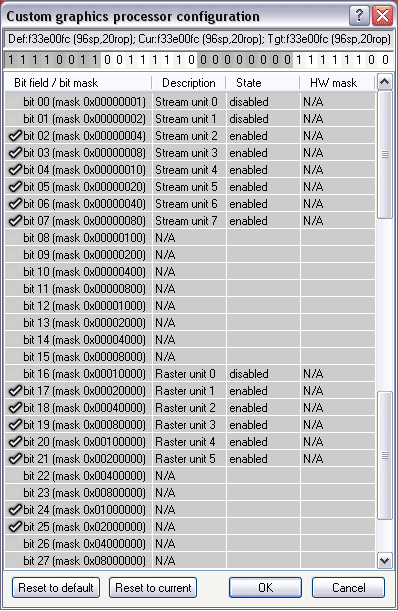

The new version of RivaTuner, to be released within the next few days, can disable GPU processors. Note that so far it can only DISABLE them. The work is in progress, and we cannot promise that it will be able to unlock anything. But we can already say that it can disable processors in the GTX card. You cannot expand the bus from 320-bit to 384-bit, of course: the GTS just doesn't have enough memory chips. I repeat that the work is in progress; you cannot expect the author to add a feature to unlock processors in the GTS card right away.
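The bus width and memory size follow directly from the number of memory chips on the board. Here is a minimal sketch, assuming each chip has a 32-bit interface and holds 64MB (values consistent with the 384-bit GTX and the 320-bit, 640MB GTS, but not stated in this review) and assuming 12 chips on the GTX; the function name is made up for illustration.

```python
# Assumed per-chip parameters (not taken from this review).
CHIP_INTERFACE_BITS = 32
CHIP_CAPACITY_MB = 64


def memory_config(chip_count):
    """Return (bus width in bits, capacity in MB) for a given chip count."""
    return chip_count * CHIP_INTERFACE_BITS, chip_count * CHIP_CAPACITY_MB


if __name__ == "__main__":
    gtx_chips = 12             # assumption: full set of chips on the GTX
    gts_chips = gtx_chips - 2  # the GTS lacks two memory chips
    for name, chips in (("GTX", gtx_chips), ("GTS", gts_chips)):
        bus_bits, capacity_mb = memory_config(chips)
        print(f"{name}: {chips} chips -> {bus_bits}-bit bus, {capacity_mb}MB")
```

This is why no software tool can widen the GTS bus: the two missing 32-bit channels have no chips behind them.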

Note that this AA mode was not selected at random. It's a compromise: on one hand, it's not much different from ATI AA6x; on the other hand, the GF 7xxx does not support AA above 8x. Besides, this AA mode is visually sufficient, to my mind, because you cannot see the differences with the naked eye. You should take into account that the 8800 cards will be compared in high resolutions.

The screenshots above demonstrate the HQ mode. And one more thing: we simply won't compare the cards or analyze their performance WITHOUT AA+AF; these numbers are published for your information only. You should understand that such cards should be loaded with at least AA4x+AF16x! We also publish data on the GTS card operating at the GTX frequencies, so that you can evaluate the performance drop when a couple of processors and a rasterizer are disabled.

Test results: performance comparison

You can look up the testbed configuration here.

We used the following test applications:

You can download test results in EXCEL format: RAR and ZIP

FarCry, Research (No HDR)

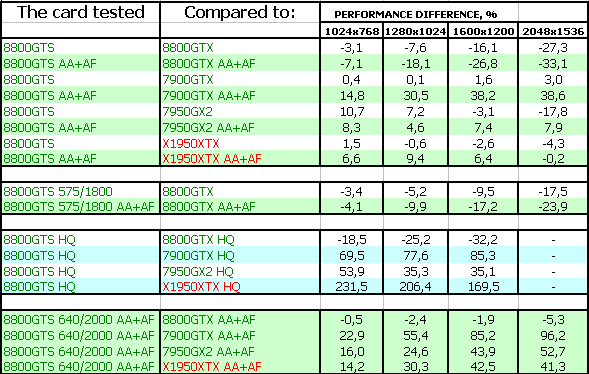

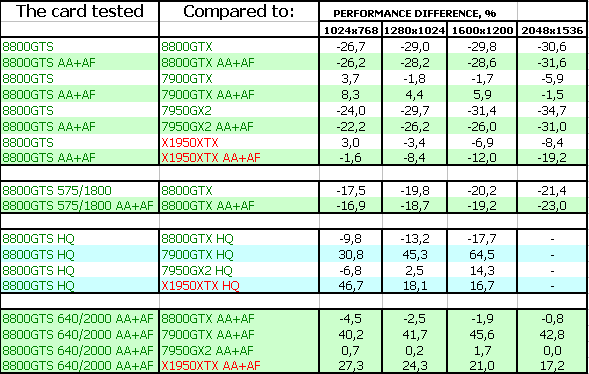

Test results: FarCry Research (No HDR)

We can see that the 8800GTS in AA+AF mode offers performance on the level of the X1950, or a tad higher. But HQ mode (really justified in this game) makes the new card a winner. The mid-range product from the new NVIDIA series outperforms all previous leaders.

FarCry, Research (HDR)

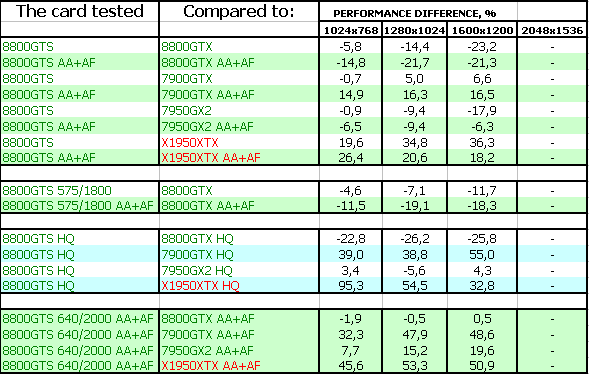

Test results: FarCry Research (HDR)

HDR adds problems: the 8800 GTS is outperformed by the ATI card. However, HQ mode puts everything in its place, and the new card is victorious again.

F.E.A.R.

There are no surprises here, the 8800GTX is the ultimate winner.

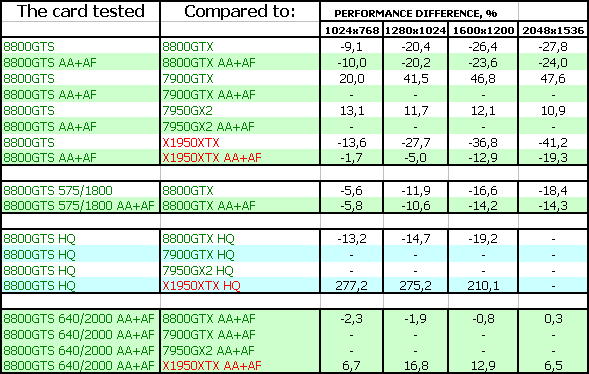

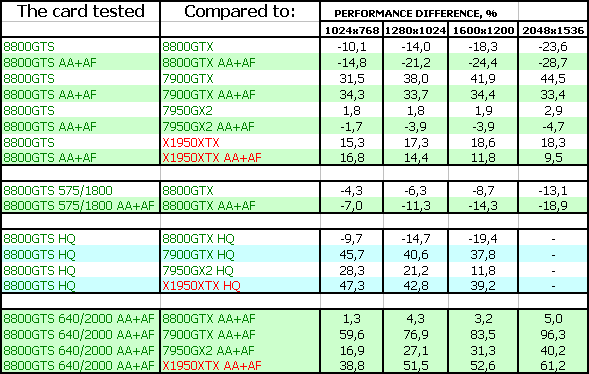

Splinter Cell Chaos Theory (No HDR)

Our conclusions are similar to those for FarCry: the GTS is outperformed in AA+AF mode, but it shoots forward in HQ mode.

Splinter Cell Chaos Theory (HDR)

The same thing.

Call Of Duty 2

The situation is the same here, except that the new card outperforms the X1950XTX in AA4x+AF16x mode. The 7950GX2 is still faster.

Half-Life2: ixbt01 demo

Test results: Half-Life2, ixbt01

We shall analyze only HQ mode here. The 8800 GTS is victorious, no comments are necessary.

DOOM III High mode

Chronicles of Riddick, demo 44

Test results: Chronicles of Riddick, demo 44

Experienced readers know well that OpenGL games favour all NVIDIA products. According to our tests, this applies not only to games based on id Software engines.

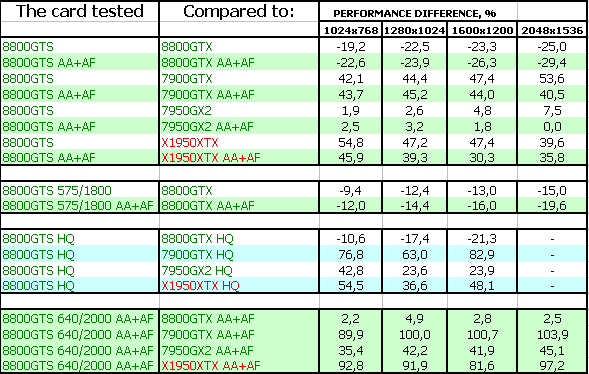

3DMark05: MARKS

In this case the large number of processors and the high frequency of the shader unit produce the expected result: victory everywhere; only the 7950GX2 with its two cores is out of reach.

3DMark06: SHADER 2.0 MARKS

Test results: 3DMark06 SM2.0 MARKS

We have the same conclusions here; even the 7950GX2 failed to outscore the new product.

3DMark06: SHADER 3.0 MARKS

Test results: 3DMark06 SM3.0 MARKS

The 8800GTS is evidently victorious.

Conclusions

XFX GeForce 8800 GTS 640MB PCI-E - I'll describe this card as the GeForce 8800 GTS on the whole. This accelerator, with its recommended price of $449, evidently displaces the 7900GTX and the 7950GX2, which must get cheaper or perish. Besides, our tests show that the GTS successfully competes with the X1950 XTX, because it now possesses all the advantages of ATI cards (higher-quality anisotropic filtering and support for HDR+AA). So if the 8800GTS is priced like the X1950, you should choose the GTS card. You'll have to think hard, though, if you need VIVO: it's available in ATI cards, but the latest top cards from NVIDIA lack it. But this minor detail shouldn't be a problem, I think.

As for the card from XFX, it's just a reference card, so XFX adds only the bundle and the box. The shorter PCB will make the card very popular. All GeForce 8800 GTX/GTS cards are manufactured at Flextronics and Foxconn plants. Their quality is controlled by NVIDIA, which then simply sells them to its partners. None of NVIDIA's partners manufactures these cards on its own! Just memorize it as an axiom. When you buy an 8800GTX/GTS card, different vendors mean just different bundles and boxes. That's it!

BFG GeForce 8800 GTS 640MB PCI-E is practically a copy of the previous product: the same reference card with a different sticker. It has a very good bundle.

On the whole: GeForce 8800 GTS is a very good card for its price. We can recommend it, if the prices are within promised limits. You should also keep in mind that GTS cards can be easily overclocked. If you overclock it to the level we did, the card may yield the GTX performance! It certainly cannot be done with the X1950XTX.

You can find more detailed comparisons of various video cards in our 3Digest.

BFG GeForce 8800 GTS 640MB PCI-E gets the Excellent Package award (November).

We also decided to give our Original Design award to NVIDIA for its excellent GeForce 8800 GTS.

ATI RADEON X1300-1600-1800-1900 Reference

NVIDIA GeForce 7300-7600-7800-7900 Reference

We express our thanks to

BFG Russia and Mikhail Proshletsov personally for the provided BFG video card

Andrey Vorobiev (anvakams@ixbt.com)

November 25, 2006

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.