MSI NX6800-TD128, Galaxy Glacier GeForce 6800 based on NVIDIA GeForce 6800


Contents

- Introduction

- Video

cards' features

- Testbed

configurations, benchmarks

- Test

results: Serious Sam: The Second Encounter

- Test

results: Code Creatures DEMO

- Test

results: Unreal Tournament 2003

- Test

results: Unreal II: The Awakening

- Test

results: RightMark 3D

- Test

results: TRAOD

- Test

results: FarCry

- Test

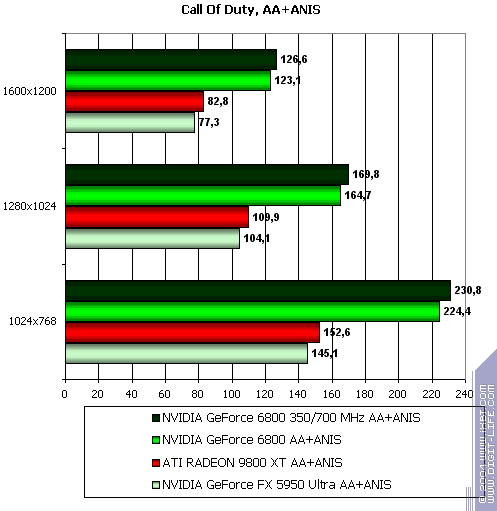

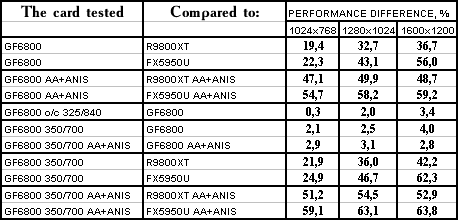

results: Call Of Duty

- Test

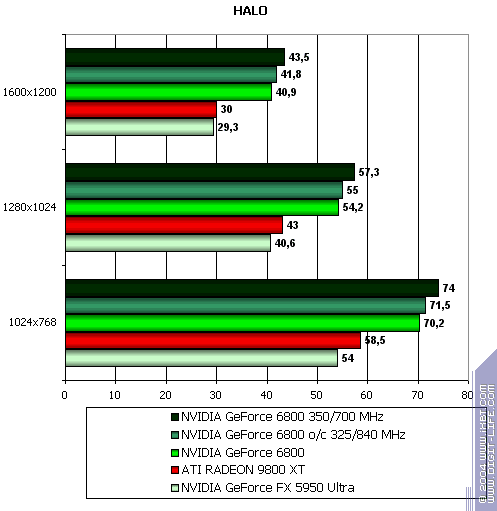

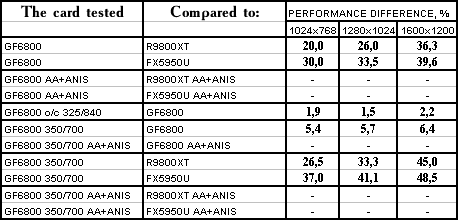

results: HALO: Combat Evolved

- Test

results: Half-Life2(beta)

- Test

results: Splinter Cell

- Test

results: Quake3 Arena

- Test

results: Return to Castle Wolfenstein

- Test

results: AquaMark3

- Test

results: DOOM3 (Alpha)

- Test

results: 3DMark03 (marks)

- Conclusions

We proceed with our tests of video cards based on NVIDIA GeForce 6800, which are gradually appearing on the market. It is the junior model of the GeForce 6800 family, intended to replace such former High End cards as RADEON 9800 XT and GeForce FX 5950 Ultra. ATI does not yet have anything in this segment that could push RADEON 9800 XT off the market (or at least drive its prices down sharply, which is hardly possible anyway: these cards have long been out of production, and stores are selling stock that dealers bought long ago at high prices).

But NVIDIA already has such products on sale at prices even lower than those of GeForce FX 5950 Ultra or RADEON 9800 XT, which is very pleasing.

So, what is 6800? It's a 12-pipeline chip paired with narrow memory bandwidth. Indeed, GeForce 6800 uses the same memory, running at 350 (700) MHz, as GeForce FX 5900 XT. But FX 5900 XT has only 4 pipelines and 8 texture units (compare with 12 pipelines and 12 texture units). The NV40 core itself does not differ in features from the one in GeForce 6800 Ultra or GT; it only has fewer pipelines.

Theoretical materials and reviews of video cards covering the functional properties of the ATI RADEON X800 (R420) and NVIDIA GeForce 6800 (NV40) GPUs

So in this material it will be interesting to learn how strongly memory bandwidth affects 6800 (in my tests I overclocked the card's memory from 700 to 840 MHz and left the core untouched). And since the core of the Galaxy card operates at 350 MHz instead of 325 MHz, we shall be able to learn how much a higher GPU frequency helps at the same memory bandwidth (it's really interesting how effective the memory bandwidth saving technologies are with this relatively low bandwidth).
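The two experiments are not the same size. As a rough guide, peak memory bandwidth is the bus width in bytes multiplied by the effective (DDR) data rate. The sketch below assumes this idealized formula (real throughput is always lower) and uses the 256-bit bus listed in the card specifications and the clocks quoted above; the function names are illustrative, not from any tool used in this review.

```python
# Rough peak-bandwidth arithmetic for the two overclocking experiments.
# Assumption: ideal peak = bus width (bytes) x effective data rate;
# real cards never sustain this peak.

def memory_bandwidth_gb_s(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Peak memory bandwidth in decimal GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = effective_clock_mhz * 1e6
    return bytes_per_transfer * transfers_per_second / 1e9

def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new / old - 1.0) * 100.0

stock_bw = memory_bandwidth_gb_s(256, 700)  # memory at 350 (700) MHz
oc_bw = memory_bandwidth_gb_s(256, 840)     # memory overclocked to 840 MHz

print(f"memory: {stock_bw:.2f} -> {oc_bw:.2f} GB/s (+{percent_increase(700, 840):.1f}%)")
print(f"core:   325 -> 350 MHz (+{percent_increase(325, 350):.1f}%)")
```

So the memory overclock raises peak bandwidth by about 20% (22.4 to 26.88 GB/s), while the Galaxy card's factory core overclock is only about 7.7%, which is worth keeping in mind when comparing the gains below.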

I have mentioned the Galaxy card above. This company is almost unknown in our country; its products can be found on store shelves, but very often as noname ones. Yet it even has High End products, with original designs at that. The powerful cooling system allowed the company's engineers to raise the core frequency by 25 MHz, deliberately making their product a tad faster than other cards. Our tests will show exactly how much faster it is.

I think there is no need to tell you about MSI: it's the oldest brand on our market, and its video cards have been known since 1998.

MSI products stand out because the MSI plant produces not only copies of reference cards but also cards of its own design, especially when it comes to cooling systems. Nor should you forget that MSI offers almost the best video card bundle we have ever seen.

So, in our laboratory we have tested two video cards based on GeForce 6800, which, according to our research, is the best buy for those who have 350-370 USD for a powerful state-of-the-art accelerator.

Video Cards

Galaxy Glacier GeForce 6800

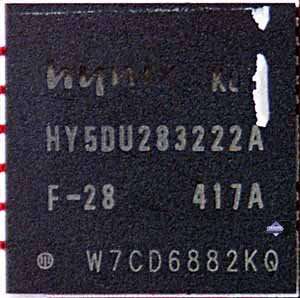

The card has an AGP x8/x4 interface and 128 MB of DDR SDRAM in 8 chips on the front side of the PCB. Hynix memory chips with 2.2 ns access time, which corresponds to 450 (900) MHz. The memory operates at 350 (700) MHz. The GPU runs at 350 MHz (325 MHz in MSI NX6800-TD128). 256-bit memory bus. 12 (3x4) pixel pipelines.

MSI NX6800-TD128

The card has an AGP x8/x4 interface and 128 MB of DDR SDRAM in 8 chips on the front side of the PCB. Hynix memory chips with 2.8 ns access time, which corresponds to 350 (700) MHz, the frequency at which the memory operates. The GPU runs at 325 MHz (350 MHz in Galaxy Glacier GeForce 6800). 256-bit memory bus. 12 (3x4) pixel pipelines.

Later we found out that this video card actually has 16 pipelines (4x4) instead of 12. We don't know why this GeForce 6800 got such a chip, or whether all such cards will be equipped with a 16-pipeline GPU.
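The mapping from access time to rated clock in the specs above can be reproduced by treating the nanosecond rating as the minimum clock period: the rated frequency is its reciprocal, doubled for the DDR effective rate. This is a simplification (a speed-grade label, not a full timing model), and the helper name is illustrative. Chip makers round the result down to a standard grade, hence 450 rather than 455 MHz.

```python
# Assumption: the "x.x ns" speed grade is read as the minimum clock period,
# so the rated clock is 1 / period; DDR transfers data twice per clock.

def rated_clock_mhz(access_time_ns: float) -> float:
    """Highest nominal clock (MHz) implied by a speed grade in nanoseconds."""
    return 1000.0 / access_time_ns

chips = [
    ("Galaxy, 2.2 ns", 2.2, 350),  # (label, speed grade in ns, actual clock in MHz)
    ("MSI, 2.8 ns", 2.8, 350),
]

for label, t_ns, actual_mhz in chips:
    rated = rated_clock_mhz(t_ns)
    headroom = rated / actual_mhz - 1.0
    print(f"{label}: rated ~{rated:.0f} ({2 * rated:.0f}) MHz, "
          f"runs at {actual_mhz} ({2 * actual_mhz}) MHz, headroom {headroom:.0%}")
```

The headroom printed here (about 30% versus about 2%) is a nominal figure only; actual overclocking results depend on the individual card sample.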

Comparison with the reference design, front view: Galaxy Glacier GeForce 6800, reference NVIDIA GeForce 6800, MSI NX6800-TD128.

Comparison with the reference design, back view: Galaxy Glacier GeForce 6800, reference NVIDIA GeForce 6800, MSI NX6800-TD128.

Both cards obviously share the same design, based on the reference one; in fact the PCBs differ only in lacquer color. The memory chip layout is similar to GeForce FX 5900, and the designs as a whole are similar too. The only external power connector is located in the upper part of the card. A 300 W power supply unit will be sufficient for the card in an average system. I want to note that the memory on the Galaxy card offers great overclocking potential, so overclockers are sure to like this card.

But the cooling systems of these cards differ.

MSI NX6800-TD128

Look at this very effective cooling system from ARX (a well-known cooler manufacturer), based on a narrow copper heatsink spread across the PCB. The heatsink is narrow enough not to cover the slot next to AGP. A powerful fan sits on the left; it can spin at 5000 rpm, but this speed is either enabled by the driver when the graphics processor overheats or set with a slider on top of the heatsink. The cooler is similar to those we have previously seen on ATI RADEON 9800 XT (X800), see the bottom photo on the right; at least it works on the same principle.

Galaxy Glacier GeForce 6800

The Galaxy card uses a cooling system from Arctic Cooling. We have already come across the same system on HIS video cards based on RADEON X800; Galaxy Glacier GeForce 6800 just has a different plastic color (blue instead of colorless/transparent). It's a very effective cooling system which, despite its high rpm, has a quiet turbine fan that blows the hot air out of the system case, unlike the previous one. Naturally, because of its huge size the card blocks the slot next to AGP.

Let's return to the cooler on the MSI card. Looking at this strikingly effective single-slot cooling system, we decided to check (1) what happens to the temperature if we set the fan speed slider to minimum; (2) how this cooler will perform on GeForce 6800 Ultra, given that the PCB mounting holes are identical.

The device is firmly attached to the card with 8 screws (4 at the chip corners and 4 between the memory chips).

Thus,

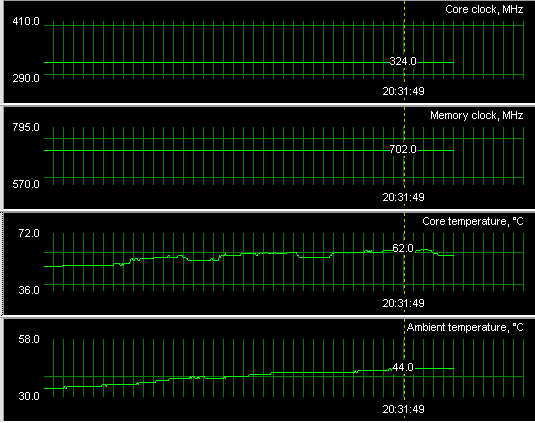

with maximum fan rpms in GeForce 6800 we got the following temperature curve (we

used RivaTuner to take temperature readings (written by Alexei Nikolaichuk AKA Unwinder)):

We

can see that the maximum temperature (in 3D, FarCry) was 62 degrees centigrade at

GPU. This is very good and there is much in reserve. And now let's set the slider

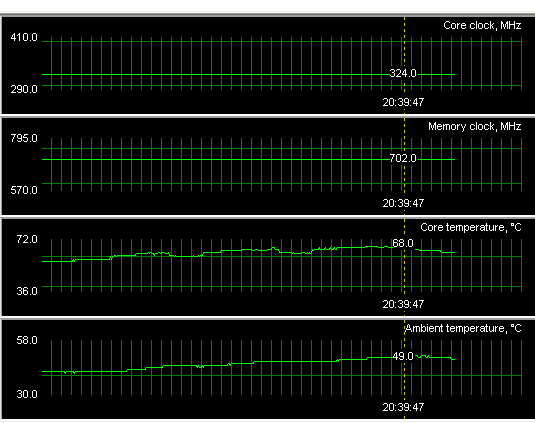

to minimum:

The temperature rose to 68°C. This still leaves plenty in reserve, so we can safely recommend the minimum slider position; in this case you will not hear the fan at all.

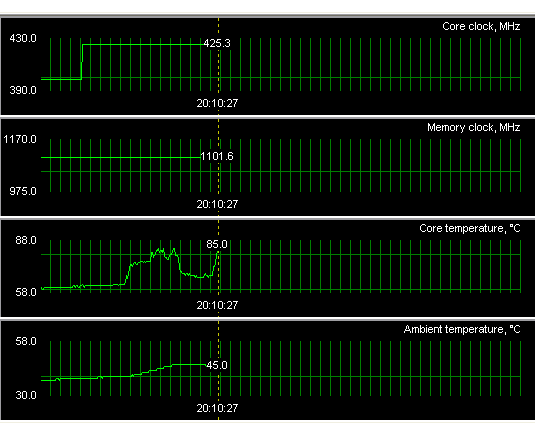

Now

let's install this cooling system to 6800 Ultra (slider to High, that is to maximum

rpm):

You can see the temperature quickly rise to a critical 85°C, and... the card froze (note the break in the readings).

Alas, this cooler design does not really suit 6800 Ultra; it is obviously insufficient. Perhaps we should have increased the rpm, but this cooler can hardly handle that. Besides, for sufficient cooling 6800 Ultra needs separate heatsinks for the memory and the chip. Why? It's very simple: the slightest mismatch between the heatsink and the chip heights leaves a microgap between the chip and the heatsink. On 6800 this gap can be compensated by the viscosity of the thermal paste, but on 6800 Ultra it cannot.

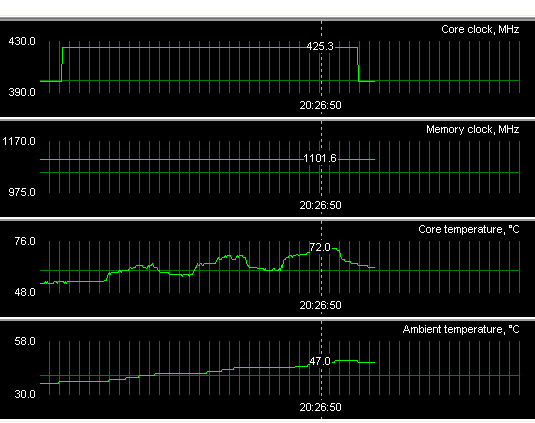

Below

is a temperature curve for 6800 Ultra with an original cooler:

Here the temperature does not rise above 72°C. It should be specially noted that all the experiments were conducted inside the case with the case fans disabled.

You can see the chip under the coolers. It has an FCPGA package, and the die is impressively large (we have already talked about it before). The die clearly contains 16 (not 12) pipelines physically, 4 of which are disabled. Logically, dies should be sorted by defective pipelines, but they may also be sorted this way for marketing reasons; we are still looking for a way to enable these pipelines purely in software.

Let's examine the package contents.

MSI NX6800-TD128

The box contains a user's guide, a lot of CDs (14 items!) with various software (see the photo on the right), TV extension cords, DVI-to-D-Sub and S-Video-to-RCA adapters, and an external power supply splitter. The bundle is fit for a king!

Galaxy Glacier GeForce 6800

The box contains a user's guide, a CD with drivers, 3 CDs with games (Chaser, MotoGP, game demos), PowerDVD, TV extension cords, DVI-to-D-Sub and S-Video-to-RCA adapters, and a power supply cable. Note that the bundle contains two modern games, Prince of Persia and SCPT (the latter on DVD!), which is a good thing.

And now look at the boxes:

Now about overclocking the MSI card. For our tests it was quite enough to overclock the memory to 840 MHz. I did not specifically overclock the core (in fact, I'm not keen on overclocking; this data is provided only for reference, because you cannot draw conclusions from the overclocking potential of a SPECIFIC card sample).

As for the Galaxy card, its memory managed to run even at 500 (1000) MHz! This is obviously possible thanks to the 2.2 ns memory chips. So I repeat, this video card is a real boon for overclockers.

Installation and Drivers

Testbed configurations:

- Athlon 64 3200+ based computer
- AMD Athlon 64 3200+ (L2=1024K) CPU
- ASUS K8V SE Deluxe mainboard on VIA K8T800
- 1 GB DDR SDRAM PC3200
- Seagate Barracuda 7200.7 80GB SATA HDD
- Operating system: Windows XP SP1; DirectX 9.0b
- Monitors: ViewSonic P810 (21") and Mitsubishi Diamond Pro 2070sb (21")
- ATI drivers v6.458 (CATALYST 4.7); NVIDIA v61.76

VSync is disabled.

Test results: performance comparison

We

used the following test applications:

- Serious

Sam: The Second Encounter v.1.05 (Croteam/GodGames) - OpenGL, multitexturing, ixbt0703-demo,

test settings: quality, S3TC

OFF

- Unreal

Tournament 2003 v.2225 (Digital Extreme/Epic Games) - Direct3D, Vertex Shaders, Hardware

T&L, Dot3, cube texturing, default quality

- Code

Creatures Benchmark Pro (CodeCult) - game test demonstrating how the card operates

with DirectX 8.1, Shaders, HW T&L.

- Unreal

II: The Awakening (Legend Ent./Epic Games) - Direct3D, Vertex Shaders, Hardware T&L,

Dot3, cube texturing, default quality

- RightMark

3D v.0.4 (one

of the game scenes) - DirectX 8.1, Dot3, cube texturing, shadow buffers, vertex and

pixel shaders (1.1, 1.4).

- Tomb

Raider: Angel of Darkness v.49 (Core Design/Eldos Software) - DirectX 9.0, Paris5_4

demo. Maximum quality, we only disabled Depth of Fields PS20.

- HALO:

Combat Evolved (Microsoft) - Direct3D, Vertex/Pixel Shaders 1.1/2.0, Hardware T&L,

maximum quality

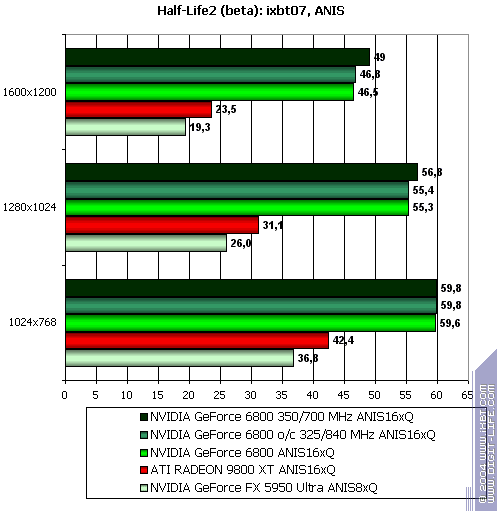

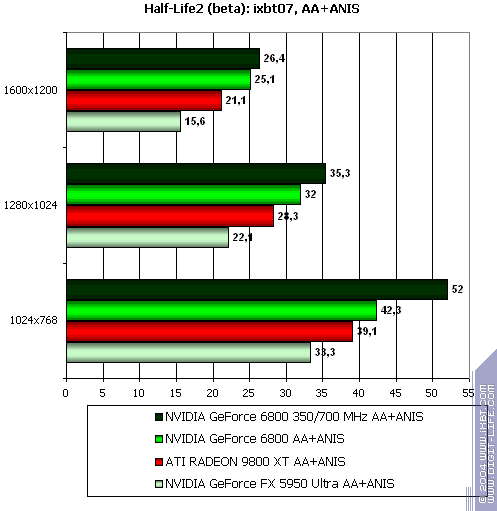

- Half-Life2

(Valve/Sierra) - DirectX 9.0, demo (ixbt07.

Tested with anisotropic filtering disabled, and also in a heavy mode with AA and

anisotropy.

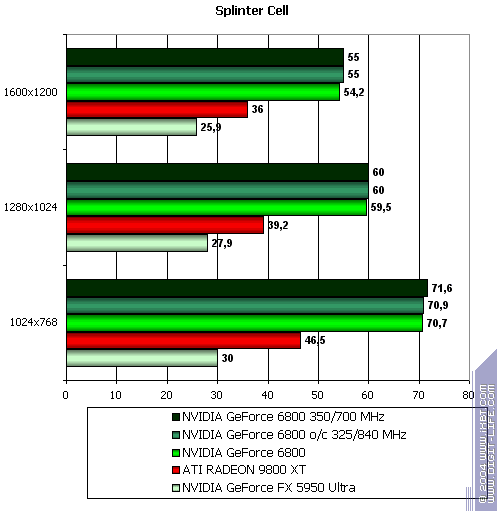

- Tom

Clancy's Splinter Cell v.1.2b (UbiSoft) - Direct3D, Vertex/Pixel Shaders 1.1/2.0,

Hardware T&L, maximum quality (Very High); demo 1_1_2_Tbilisi

- Call

of Duty (MultiPlayer) (Infinity Ward/Activision) - OpenGL, multitexturing, ixbt0104demo,

the test settings - Very High, S3TC

ON

- FarCry

1.1 (Crytek/UbiSoft), DirectX 9.0, multitexturing, demo01 (research) (the game is

started with the key -DEVMODE), the test settings - Very High.

- 3DMark03

v.3.40 (FutureMark) - DirectX 9.0, multitexturing, maximum possible test settings.

- AquaMark3

(Massive Development) - DirectX 9.0, multitexturing, maximum possible test settings.

- Quake3

Arena v.1.17 (id Software/Activision) - OpenGL, multitexturing, ixbt0703-demo, maximum

possible test settings: detailing

- High,

texture details

- T70;4, S3TC

OFFcurved

surfaces are smoothed by the variables r_subdivisions

"1" and r_lodCurveError

"30000" (note

that the default value of r_lodCurveError is "250"!)

- Return

to Castle Wolfenstein (MultiPlayer) (id Software/Activision) - OpenGL, multitexturing,

ixbt0703-demo, maximum possible test settings, S3TC

OFF

- DOOM

III (alpha version) (id Software/Activision) - OpenGL, multitexturing, maximum possible

test settings, S3TC

OFF

If you want to get the demo benchmarks we use, contact me by e-mail.

ANIS mode on the diagrams means Anisotropic 16x Quality (FX5950: 8x Quality); AA means Anti-Aliasing 4x Quality.

I want to note that the diagrams below show the data obtained with a "regular" GeForce 6800 (12 pipelines).

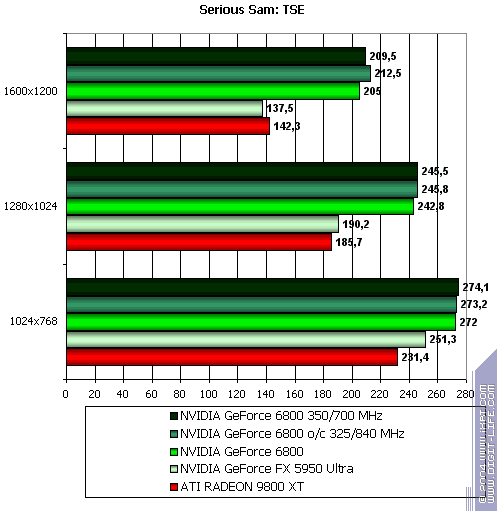

Serious Sam: The Second Encounter

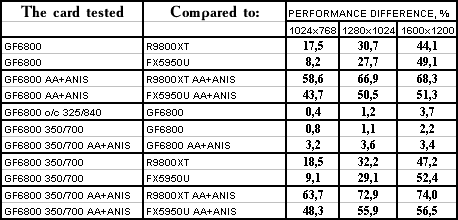

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - up to 66% advantage!
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - up to 51% advantage
- NVIDIA GeForce 6800 with memory overclocked to 840 MHz - the effect is insignificant, up to 3.7%, which is within the measurement error. Interestingly, this game is demanding on memory bandwidth, yet HSR works well on 6800.
- NVIDIA GeForce 6800 with the core overclocked to 350 MHz - almost no gain even under heavy load. Compared with the previous result, this looks somewhat contradictory. Perhaps even under heavy load everything depends on system resources, so the gain from a faster video card is minimal.
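The percentages in these summaries compare frame rates. A minimal sketch, assuming "X% advantage" means card A's frame rate divided by card B's, minus one; the frame rates in the example are made up for illustration and are not measurements from this review. The 4% noise threshold is also an assumption, chosen only so that the 3.7% memory-overclock result above counts as measurement error.

```python
# Hypothetical helpers: how "X% advantage" figures can be computed from frame rates.

def advantage_percent(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, in percent: (A / B - 1) * 100."""
    if fps_b <= 0:
        raise ValueError("reference frame rate must be positive")
    return (fps_a / fps_b - 1.0) * 100.0

def within_noise(gain_percent: float, noise_percent: float = 4.0) -> bool:
    """True if a gain is no larger than the assumed run-to-run measurement error."""
    return abs(gain_percent) <= noise_percent

# Made-up frame rates, NOT results from this review.
fps_6800, fps_9800xt = 83.0, 50.0
print(f"advantage: {advantage_percent(fps_6800, fps_9800xt):.1f}%")  # advantage: 66.0%
print(within_noise(3.7))   # True: treated as measurement error
print(within_noise(14.0))  # False: a real effect
```

The measure is asymmetric: if A is 66% faster than B, then B is only about 40% slower than A (1 - 50/83 is roughly 0.40), so the direction of the comparison matters when reading these figures.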

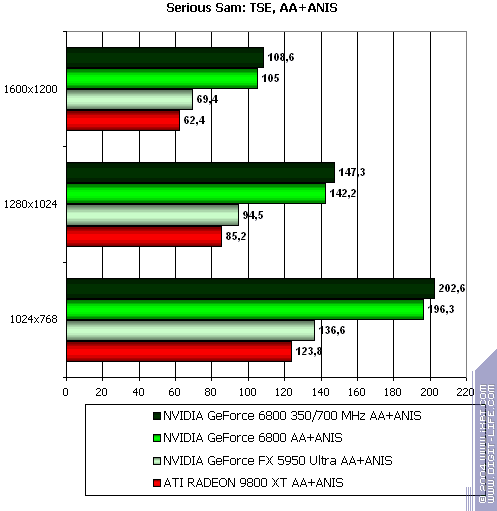

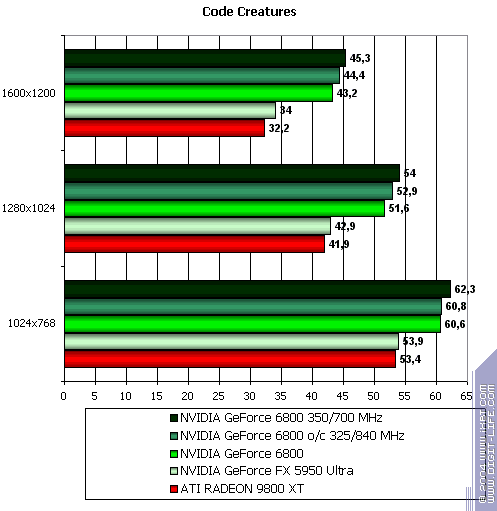

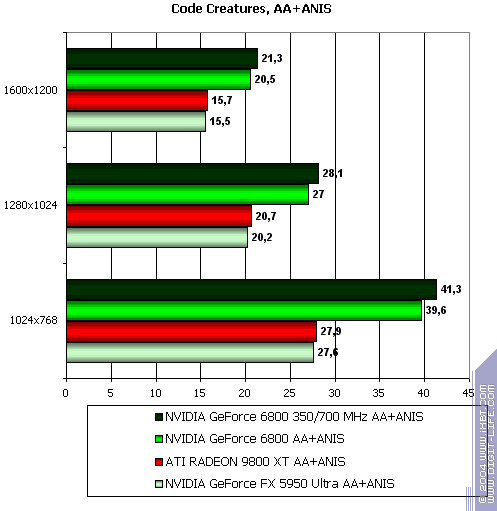

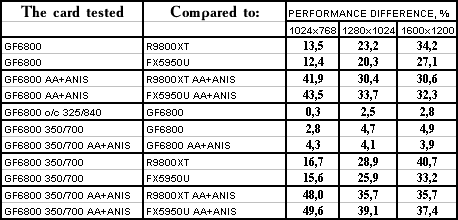

Code Creatures

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - 34% advantage
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - much the same, the advantage is up to 44%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - as in the previous test, the extra memory bandwidth gave nothing; moreover, in this case a larger part of the load falls on the core.
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - confirms the above: the effect is almost twice as large, although in percentage terms the core was overclocked less than the memory.
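One way to compare the two overclocking experiments despite their different sizes is a scaling efficiency: the relative frame-rate gain divided by the relative clock gain. A minimal sketch, assuming that definition (it is our illustration, not a metric the review itself uses). The example uses the one gain the review quantifies for a memory overclock, the 14% AquaMark3 result for 700 to 840 MHz, with the frame rate normalized to 100.

```python
# Hypothetical metric: how much of a clock increase turns into extra frame rate.
# 1.0 = performance scaled fully with the clock; near 0 = the clock was not the bottleneck.

def scaling_efficiency(clock_old: float, clock_new: float,
                       fps_old: float, fps_new: float) -> float:
    clock_gain = clock_new / clock_old - 1.0
    if clock_gain == 0:
        raise ValueError("clocks are equal; efficiency is undefined")
    fps_gain = fps_new / fps_old - 1.0
    return fps_gain / clock_gain

# AquaMark3: +14% from the 700 -> 840 MHz memory overclock (fps normalized to 100).
print(f"{scaling_efficiency(700, 840, 100, 114):.2f}")  # 0.70
```

By this measure, a +7.7% core overclock that gives roughly twice the gain of a +20% memory overclock, as in Code Creatures, is several times more efficient, which is consistent with the core being the main limit in that test.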

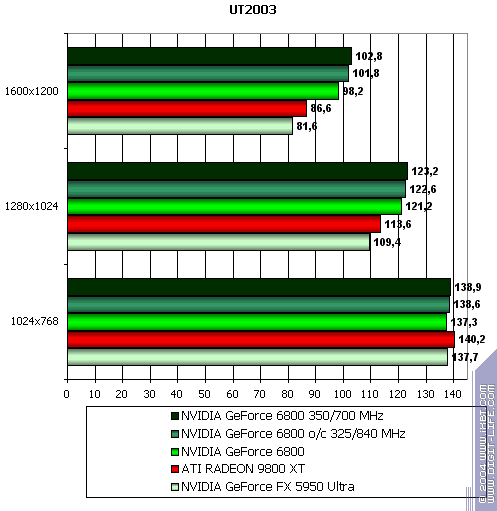

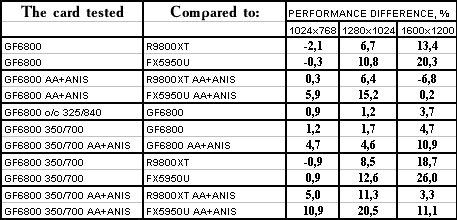

Unreal Tournament 2003

The easiest modes without AA and anisotropy: on the whole 6800 is the leader, apart from the parity at 1024x768. But here the game is limited by system resources; everything depends on software, not hardware.

The heaviest mode with AA and anisotropy: very much the same. The only discord is a performance drop of 6800 at 1600x1200.

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - overall victory, but in heavy modes there are cases of parity
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - convincing victory, with an advantage of up to 20%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - still no effect (HSR "rules")
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the gain is more noticeable, though still insignificant in light modes and much more noticeable with AA and AF (which again shows that in light modes the game depends on the CPU etc.).

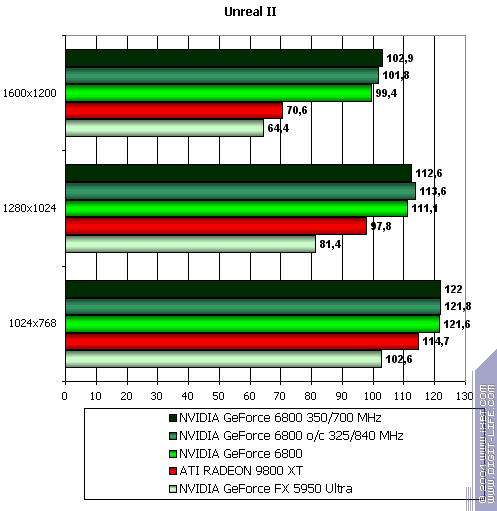

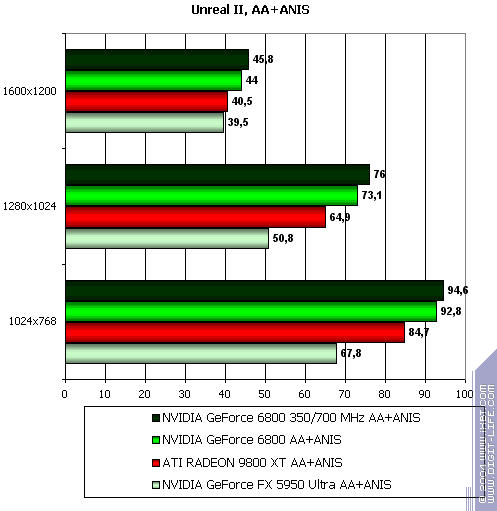

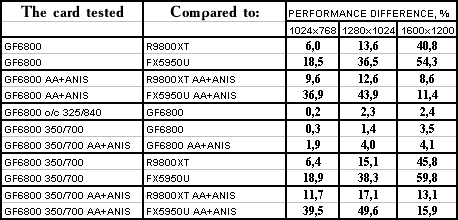

Unreal II: The Awakening

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - victory, 6800 is ahead by 12-40% depending on the resolution
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture, but the advantage reaches 54%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - minimal effect.

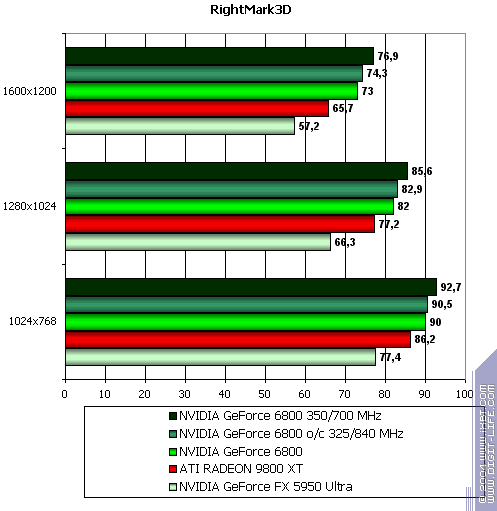

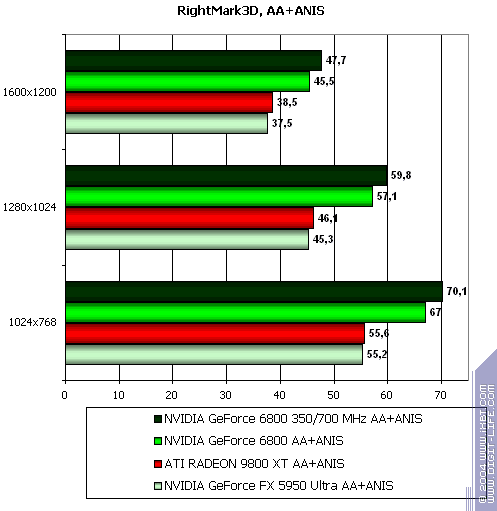

RightMark 3D

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - 6800 is the champion, and its advantage grows with the load
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - also a victory, with an advantage of up to 27%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - zero effect (quite understandable, the core is the bottleneck)
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - there is a little gain, which is quite logical.

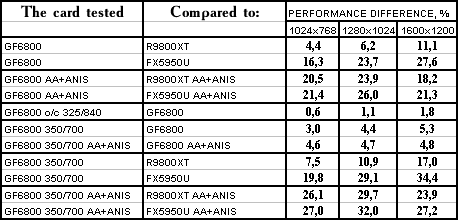

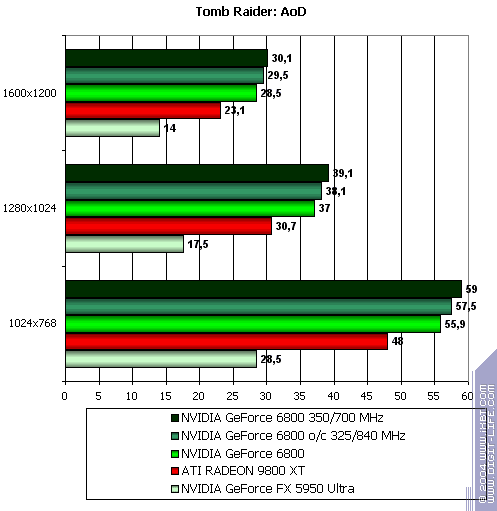

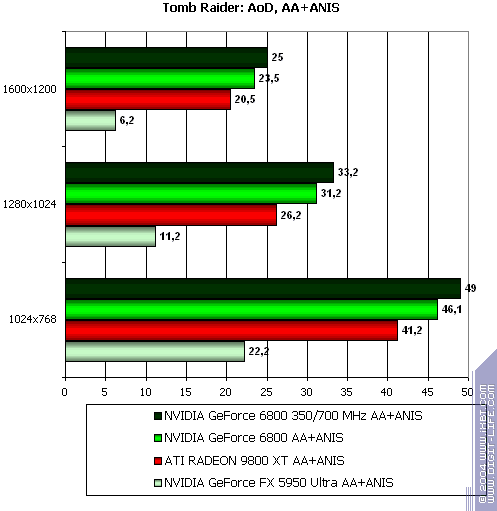

TR:AoD, Paris5_4 DEMO

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - a decent gain of 12-23%
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - phenomenal performance gain. It's all about shaders, and we all know how slow they were in the GeForce FX series.
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - minimal effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the gain is a little more noticeable, but the low memory bandwidth is still the bottleneck.

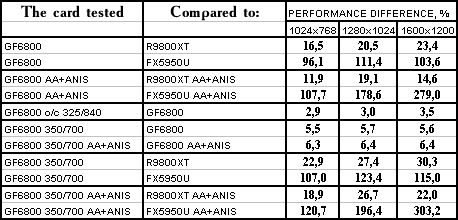

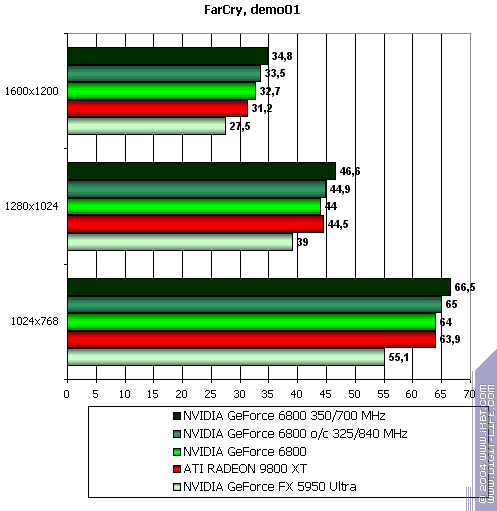

FarCry, demo01

The easiest modes without AA and anisotropy: a small gain.

The heaviest mode with AA and anisotropy: here the performance gain leaps.

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - from near parity without AA+AF (everything was limited by system resources) to a 41% performance gain for 6800!
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - a holiday for 6800: a huge advantage!
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - almost no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the game loads the core heavily, which is why core overclocking gives a bigger gain.

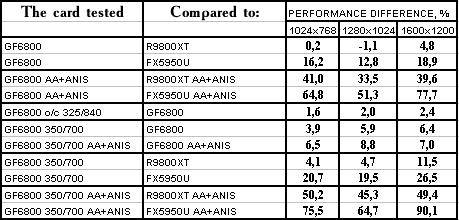

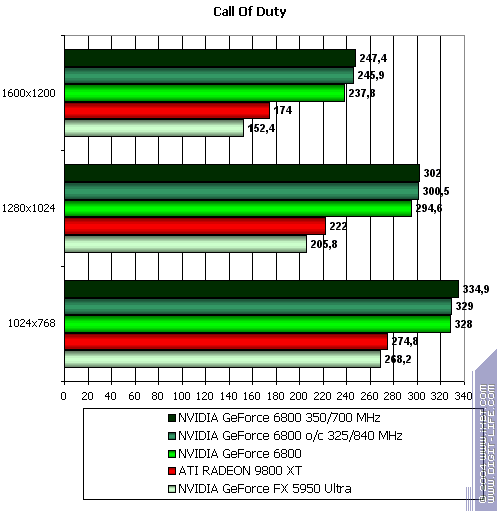

Call of Duty, ixbt04

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - victory! With a 50% margin!
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture, but the advantage already reaches 60% (just imagine the game running 60% faster!)
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - almost no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the same result.

HALO: Combat Evolved

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - 20-36% advantage
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture, but the figures reach 30-40%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - a little gain; the game depends mostly on the core potential.

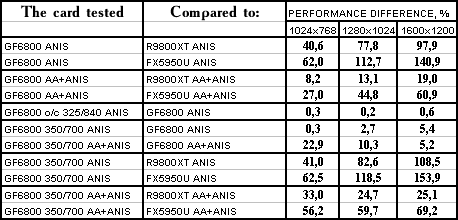

Half-Life2 (beta): ixbt07 demo, ANISO 16xQ

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - interestingly, the picture is reversed here. No, 6800 has not lost! But as a rule the gain is higher with AA+AF than in easy modes, while here it is the other way round. That's very strange; it is most likely the drivers' fault (they must be overloading the card in heavy modes).
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - almost the same picture.
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - nothing new
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - there is some gain, which is typical of this game.

Splinter Cell

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - huge gain!
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the huge becomes gigantic!
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - nothing!
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - much the same; everything depends on system resources.

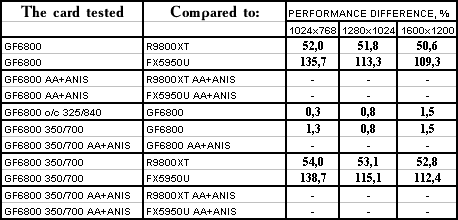

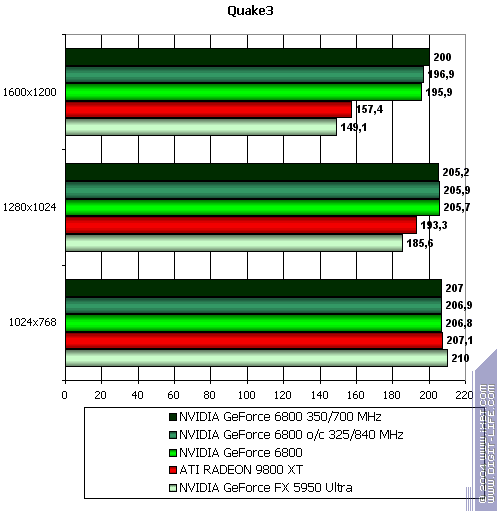

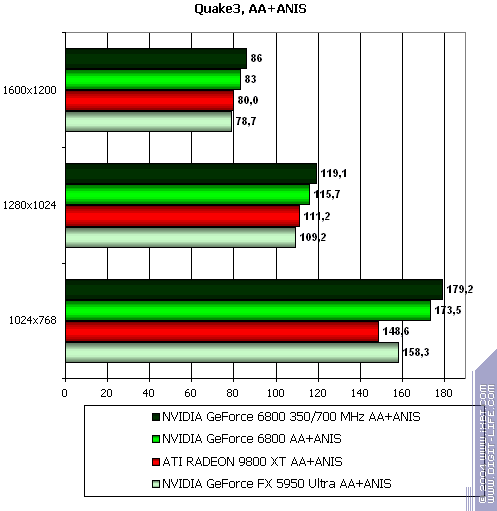

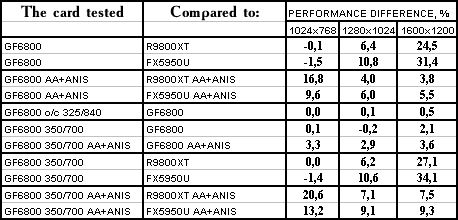

Quake3 Arena

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - the performance gain fluctuates depending on the resolution (with powerful video cards this game has always been limited by system resources)
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - a victory, but a very strange one; the driver is acting up again.
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - zero
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - almost nothing.

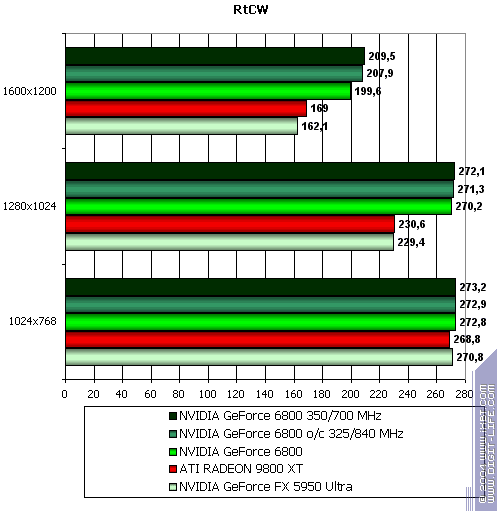

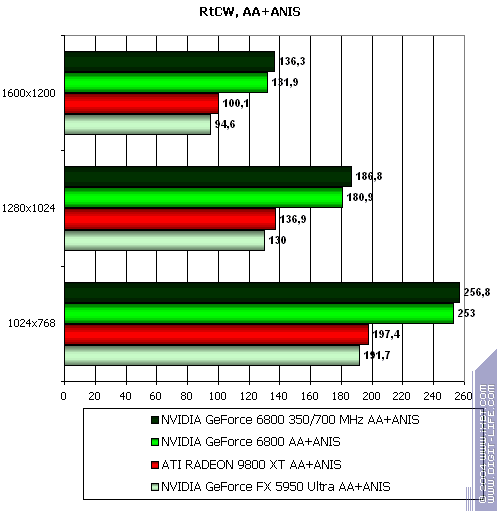

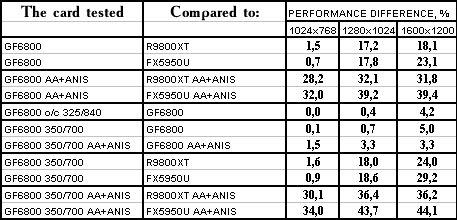

Return to Castle Wolfenstein (Multiplayer)

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - victory by 17-32%
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - a small gain at 1600x1200
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the same result

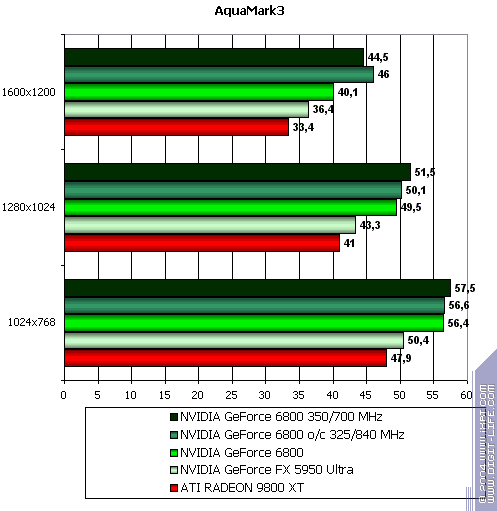

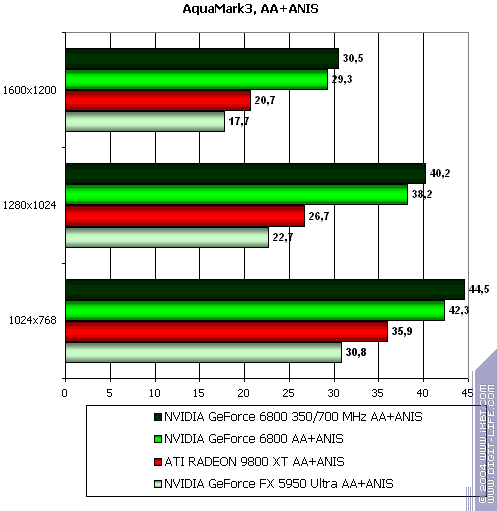

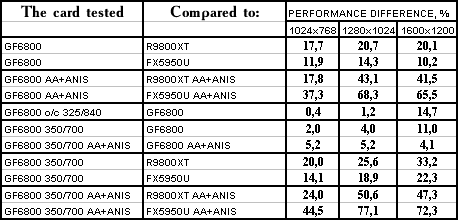

AquaMark3

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - confident victory by 18-41%
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture, the advantage exceeds 65%
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - for the first time the extra memory bandwidth produced a positive result, a 14% gain (naturally, at the highest resolution). I would never have thought that this test (which loads the core very heavily) would depend on the memory clock.
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - the effect is even smaller than that of the memory overclock.

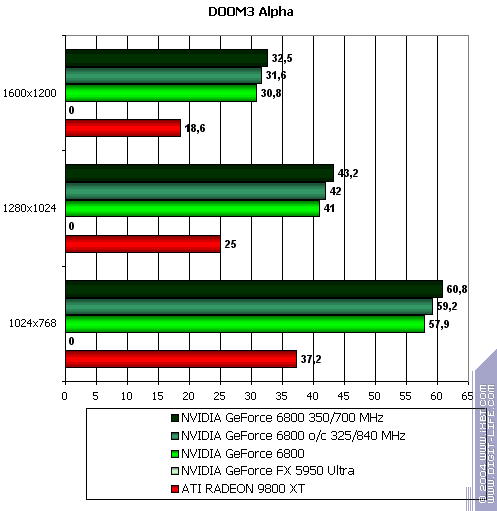

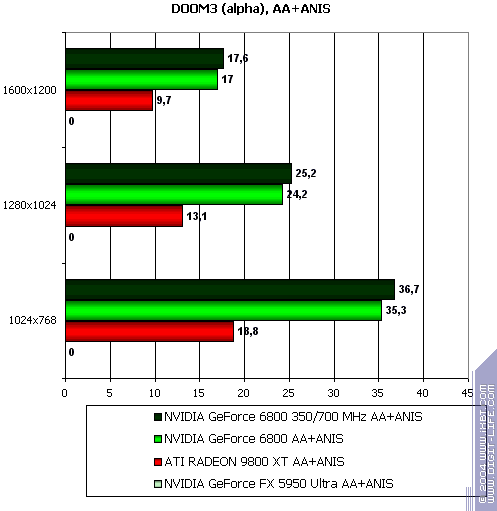

DOOM III (alpha)

Unfortunately, this test couldn't be run on FX 5950 (a driver issue), so we compare only with 9800 XT.

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - excellent results, an 88% gain!
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - almost no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - a little gain

3DMark03 (marks)

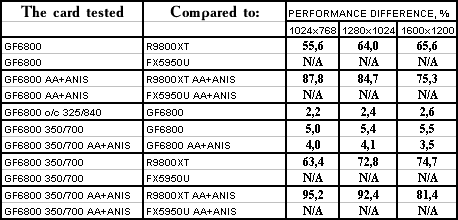

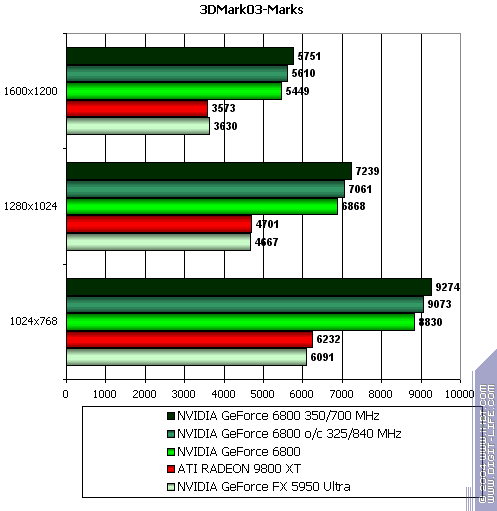

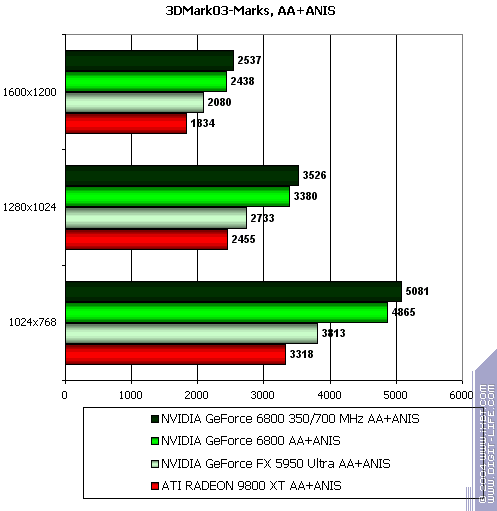

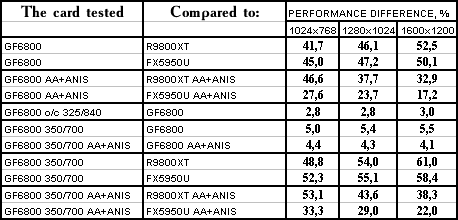

Thus, on the whole:

- NVIDIA GeForce 6800 versus ATI RADEON 9800 XT - the usual victory, by 50%
- NVIDIA GeForce 6800 versus NVIDIA GeForce FX 5950 Ultra - the same picture
- NVIDIA GeForce 6800 with its memory overclocked to 840 MHz - almost no effect
- NVIDIA GeForce 6800 with its core overclocked to 350 MHz - there is some gain, but...

Conclusions

First of all, I want to note that, strangely enough, with its 12 pipelines and 325 MHz core clock (3.9 billion texels/s) GeForce 6800 is a well-balanced video card even with the memory bandwidth provided by memory at 350 (700) MHz. Given that RADEON 9800 XT has a fill rate of 3.3 billion texels/s combined with a 730 MHz memory clock, the solid performance advantage of GeForce 6800 over 9800 XT (up to 50-60%) speaks for itself: every single MHz of this chip is much more effective, even though the memory bandwidth of 9800 XT is practically identical.
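The fill-rate figures above follow from pipelines × texture units per pipeline × core clock. A minimal sketch under that idealized assumption; the RADEON 9800 XT configuration (8 pipelines with one texture unit each at 412 MHz) is not stated in this article and is taken from its public specifications, which give the 3.3 billion texels/s quoted above.

```python
# Idealized peak texel fill rate: pipelines x texture units per pipeline x core clock.

def texel_fill_rate_mtexels(pipelines: int, tmus_per_pipe: int, core_mhz: float) -> float:
    """Peak texel fill rate in millions of texels per second."""
    return pipelines * tmus_per_pipe * core_mhz

# Assumption: RADEON 9800 XT = 8 pipelines x 1 TMU at 412 MHz (public spec, not from this review).
baseline = texel_fill_rate_mtexels(8, 1, 412)

cards = {
    "GeForce 6800, 12 pipes @ 325 MHz": texel_fill_rate_mtexels(12, 1, 325),
    "Galaxy, 12 pipes @ 350 MHz": texel_fill_rate_mtexels(12, 1, 350),
    "MSI sample, 16 pipes @ 325 MHz": texel_fill_rate_mtexels(16, 1, 325),
}

print(f"RADEON 9800 XT: {baseline:.0f} Mtexels/s")
for name, rate in cards.items():
    print(f"{name}: {rate:.0f} Mtexels/s ({rate / baseline - 1:+.0%} vs 9800 XT)")
```

On paper GeForce 6800 has only about 18% more fill rate than RADEON 9800 XT, so the much larger gains measured in many games point to per-clock efficiency rather than raw fill rate.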

Our tests proved that the card does not really need a higher memory clock, which allows NVIDIA and its partners to considerably reduce the production cost of these cards compared with GeForce 6800 GT (high-performance GDDR3 is very expensive now and makes up a large part of a video card's production cost).

Now about the cards. Galaxy Glacier GeForce 6800 comes first.

As you can see from the test results, a 25 MHz frequency increase has little effect, but it's still pleasant and gives you moral satisfaction :). But I repeat that this card is equipped with 2.2 ns memory instead of the 2.8 ns memory standard for such cards, which is why it can be considerably overclocked. However, as we have seen above, this will not have a brilliant effect.

The card looks wonderful and works just as well. Besides, the Arctic Cooling cooler is almost noiseless, and overheating is out of the question with 6800. As for 2D quality, our sample was quite all right: it was comfortable to work at 1600x1200 at 85 Hz.

MSI NX6800-TD128.

This video card has a nice design; the cooler perfectly matches the red PCB. It can also be made almost noiseless: our research showed that cooling remains adequate with the fan at minimum speed.

The astounding bundle makes this video card a cherished purchase for those who have 350-370 USD for a state-of-the-art 3D accelerator (retail price in Moscow in early August).

I note once again that this sample is equipped with 16 pipelines instead of 12.

Both cards are stable; we have no complaints, and the quality is good.

In our 3DiGest you can find more detailed comparisons of various video cards.

According to the test results, MSI NX6800-TD128 earns the Excellent Package award (July).

This card (MSI NX6800-TD128) also earns the Original Design award (July).
