Contents

- Introduction

- Graphics card features

- Testbed configuration, benchmark list

- Test results

- Conclusions

Graphics card performance

Readers familiar with 3D graphics will be able to interpret the charts on their own. Newcomers and those who have just started choosing a graphics card can rely on our comments.

First of all, you should browse our brief reference articles on modern graphics card series and the GPUs they are based on. Pay attention to clock rates, shader model support, and pipeline architecture. Keep in mind that the GeForce 8800 series has made the term "pipeline" obsolete. Shader instructions from games are now executed by stream processors, while texture processors handle texturing and filtering; in other words, these functions are now separate. Only after texture operations are complete is the data written to the frame buffer in memory, as before. Previously, the flow was fixed: take a triangle, run the shader program on it, texture it, filter it, and pass it on. That approach is gone. Now the driver, together with dedicated thread processors, decides which stream processor does what and when, and the same applies to texture processors.
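To make the difference between a fixed pipeline split and a unified pool of stream processors more concrete, here is a toy scheduling model. It is only an illustration under simplifying assumptions: every piece of shader work is a job with an abstract cost, jobs go to whichever unit becomes free first, and the unit counts in the usage example are made up. It does not describe how NVIDIA's drivers or the 8800's thread processors actually schedule work, and all function names are hypothetical.

```python
import heapq

def greedy_makespan(costs, num_units):
    """Assign each job to the unit that becomes free first.

    Returns the time at which the last unit finishes (the makespan).
    Costs are abstract time units (an assumption of this toy model).
    """
    if num_units <= 0:
        raise ValueError("need at least one unit")
    free_at = [0] * num_units  # a list of zeros is already a valid min-heap
    for cost in costs:
        earliest = heapq.heappop(free_at)   # unit that frees up soonest
        heapq.heappush(free_at, earliest + cost)
    return max(free_at)

def unified_makespan(vertex_jobs, pixel_jobs, num_units):
    # Unified design: any unit may run any kind of shader work.
    return greedy_makespan(vertex_jobs + pixel_jobs, num_units)

def split_makespan(vertex_jobs, pixel_jobs, vertex_units, pixel_units):
    # Fixed design: each kind of work is confined to its own pool of units,
    # so the frame is done only when the slower pool finishes.
    return max(greedy_makespan(vertex_jobs, vertex_units),
               greedy_makespan(pixel_jobs, pixel_units))

if __name__ == "__main__":
    # Hypothetical pixel-heavy workload: 4 vertex jobs, 28 pixel jobs, 8 units.
    vertex, pixel = [1] * 4, [1] * 28
    print("unified:", unified_makespan(vertex, pixel, 8))   # 4
    print("fixed 2+6:", split_makespan(vertex, pixel, 2, 6))  # 5
```

With this made-up pixel-heavy load, the unified pool finishes in 4 time units while the fixed 2 + 6 split needs 5, because the vertex units sit idle while the pixel units are still busy. That idle-hardware effect is the intuition behind moving away from fixed pipelines.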

Second, you can browse our 3D graphics section for the basics of 3D and reviews of new products. Essentially only two companies develop graphics processors: ATI (recently acquired by AMD) and NVIDIA, so the material is generally split into two corresponding parts. You can also read our monthly 3Digest, which summarizes the performance charts of all graphics cards.

Third, let's see how the card under review performs in benchmarks.

Remember that many current games are limited by the CPU and by the system as a whole, so even the most powerful graphics card may not be able to show its full potential at AA 4x and AF 16x. That is why, starting with our reviews of previous top-end products, we added an HQ (High Quality) mode, defined as follows:

- ATI RADEON X1xxx: AA 6x + adaptive AA + AF 16x High Quality

- NVIDIA GeForce 7xxx/8xxx: AA 8x + TAA (multisampling with gamma correction) + AF 16x

This choice is deliberate and reflects a compromise: on the one hand, it stays close to ATI's AA 6x; on the other hand, the GeForce 7xxx does not support AA above 8x. Besides, I believe this AA level is visually sufficient: since we compare 8800-series cards at high resolutions, the naked eye is unlikely to notice any further improvement.

Above you can see screenshots demonstrating the HQ mode.

One more thing: we do not compare these cards or analyze their performance without AA and AF. Those results are provided for reference only. Top-end products like these should be tested with at least AA 4x + AF 16x.

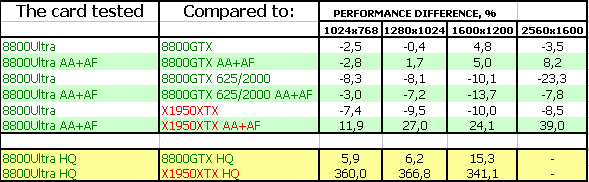

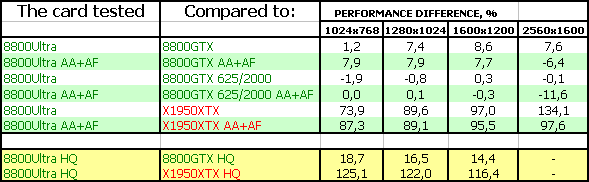

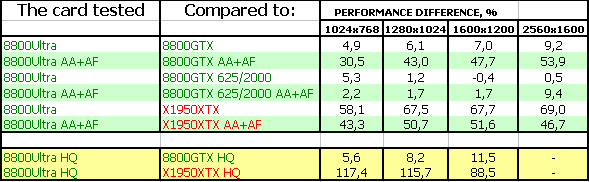

FarCry, Research (No HDR)

Test results: FarCry Research (No HDR)

Among other cards, our comparison includes an EVGA 8800 GTX with a 625 MHz core clock, i.e. higher than that of the 8800 Ultra (612 MHz). Its shader units, however, run at 1450 MHz versus the Ultra's 1512 MHz. Even so, FarCry evidently benefits more from higher ROP and texture processor clock rates, so the 8800 Ultra falls behind the cheaper EVGA card.
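To put these clock rates in perspective, it helps to compute the relative differences. The snippet below does only that arithmetic on the figures quoted in this review (625 vs. 612 MHz core, 1450 vs. 1512 MHz shader); the helper name `percent_difference` is our own, and treating the core clock as the ROP/texture domain follows the reasoning in the text above.

```python
def percent_difference(a_mhz, b_mhz):
    """Return how much higher clock a is than clock b, in percent."""
    return (a_mhz / b_mhz - 1.0) * 100.0

# Clock rates quoted in this review.
EVGA_CORE, ULTRA_CORE = 625, 612        # MHz, core clock (ROPs, texture processors)
EVGA_SHADER, ULTRA_SHADER = 1450, 1512  # MHz, shader (stream processor) clock

core_gain = percent_difference(EVGA_CORE, ULTRA_CORE)        # EVGA ahead
shader_gain = percent_difference(ULTRA_SHADER, EVGA_SHADER)  # Ultra ahead

print(f"EVGA core clock advantage:    {core_gain:.1f}%")
print(f"Ultra shader clock advantage: {shader_gain:.1f}%")
```

It prints about 2.1% for the EVGA card's core clock advantage and about 4.3% for the Ultra's shader clock advantage. Both margins are small and lie in different clock domains, which is consistent with the two cards trading places from game to game depending on what each title stresses.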

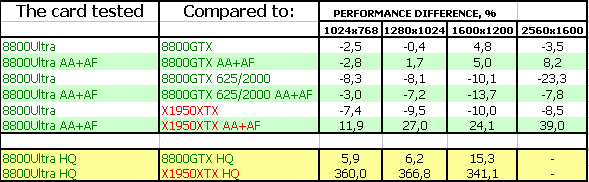

FarCry, Research (HDR)

Test results: FarCry Research (HDR)

The picture is similar.

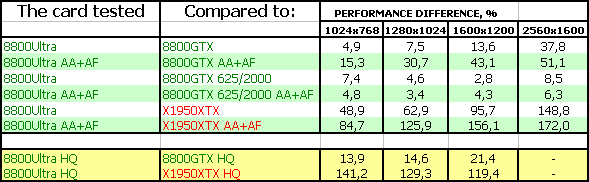

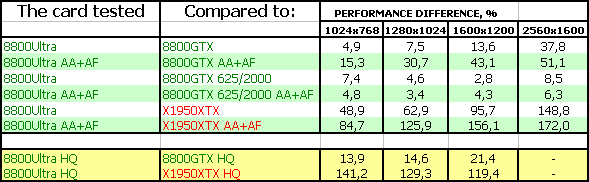

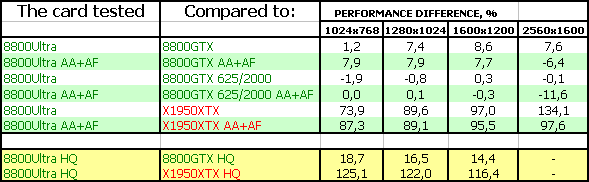

F.E.A.R.

Test results: F.E.A.R.

This game, by contrast, is very sensitive to shader clock rate, since it puts a heavy shader load on the graphics card. So the 8800 Ultra (1512 MHz shader clock) outperforms the EVGA card (1450 MHz).

In HQ mode, the Ultra's advantage over a standard 8800 GTX reaches 20%. Its higher memory bandwidth clearly helps here as well.

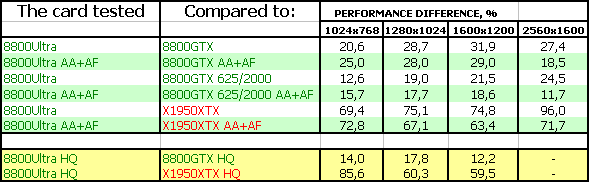

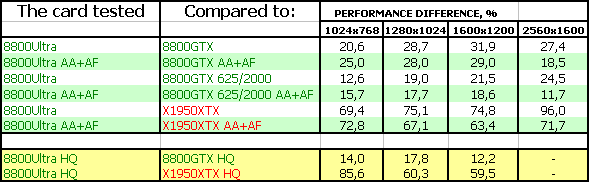

Splinter Cell Chaos Theory (No HDR)

Test results: SCCT (No HDR)

This game is even more shader-heavy, so the 8800 Ultra, with the highest shader clock rate, leaves all competitors far behind.

Splinter Cell Chaos Theory (HDR)

Test results: SCCT (HDR)

The picture is similar.

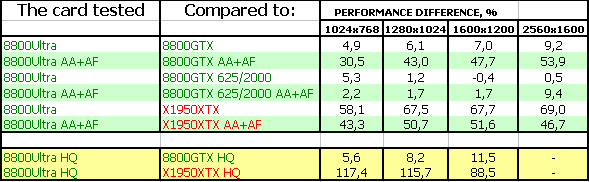

Call Of Juarez

Test results: CoJ

Surprisingly, this game does not load the shaders as heavily, so the 8800 Ultra fails to take the lead this time. Instead, the EVGA card, with its higher ROP and texture processor clock rates, wins.

Company Of Heroes

Test results: CoH

This game falls between SCCT and CoJ, with shader performance being slightly more critical. As a result, the 8800 Ultra performs close to the overclocked EVGA 8800 GTX and even edges slightly ahead of it.

It is unclear why the gap between the standard 8800 GTX and the 8800 Ultra reaches nearly 50% in AA+AF mode. This looks like a driver issue.

Serious Sam II (No HDR)

Test results: SS2 (No HDR)

This game again favors ROP and texture processor clock rates, so the EVGA card takes the lead. Still, in HQ mode the 8800 Ultra outperforms a stock 8800 GTX by 15%.

Serious Sam II (HDR)

Test results: SS2 (HDR)

Nearly the same picture, though the advantage over the 8800 GTX is somewhat smaller.

Prey

Test results: Prey

The same picture here.

3DMark05: MARKS

Test results: 3DMark05 MARKS

3DMark06: SHADER 2.0 MARKS

Test results: 3DMark06 SM2.0 MARKS

3DMark06: SHADER 3.0 MARKS

Test results: 3DMark06 SM3.0 MARKS

The picture is mixed even in synthetic benchmarks, where one would expect shader performance to dominate. The results show that other factors matter as well: the 8800 GTX overclocked to a 625 MHz core clock sometimes outperforms the 8800 Ultra (612 MHz core) despite its lower shader clock rate, which speaks for itself.
