Battle of ATI RADEON X800 XT and NVIDIA GeForce 6800 Ultra

Fragment Two: 450 MHz NV40 and New Tests of Both Cards


CONTENTS

- Introduction

- Features of the videocards

- Configurations of testbeds, test tools

- Synthetic tests in D3D RightMark

- Test results: Quake3 ARENA

- Test results: Serious Sam: The Second Encounter

- Test results: Return to Castle Wolfenstein

- Test results: Code Creatures DEMO

- Test results: Unreal Tournament 2003

- Test results: Unreal II: The Awakening

- Test results: RightMark 3D

- Test results: TRAOD

- Test results: FarCry (performance and quality)

- Test results: Call Of Duty

- Test results: HALO: Combat Evolved

- Test results: Half-Life2(beta)

- Test results: Splinter Cell

- Test results: Colin McRae Rally 04 (performance and quality)

- Test results: Max Payne-2 (performance and quality)

- Test results: Painkiller (performance and quality)

- Test results: Need For Speed: Underground (performance and quality)

- Test results: Pirates of the Caribbean (quality)

- Test results: Vietcong (quality)

- Test results: 3DMark03-Game1

- Test results: 3DMark03-Game2

- Test results: 3DMark03-Game3

- Test results: 3DMark03-Game4

- Test results: 3DMark03-MARKS

- Test results: AquaMark3

- NVIDIA driver version 61.11

- Conclusions

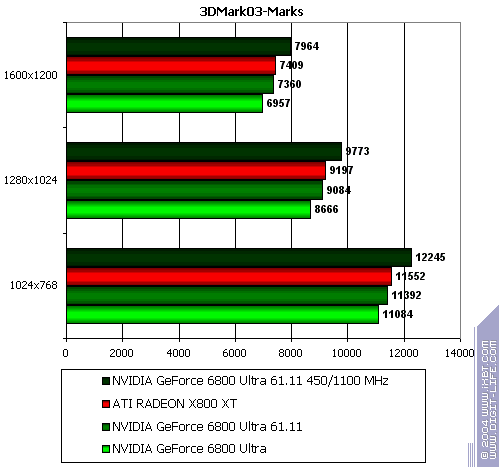

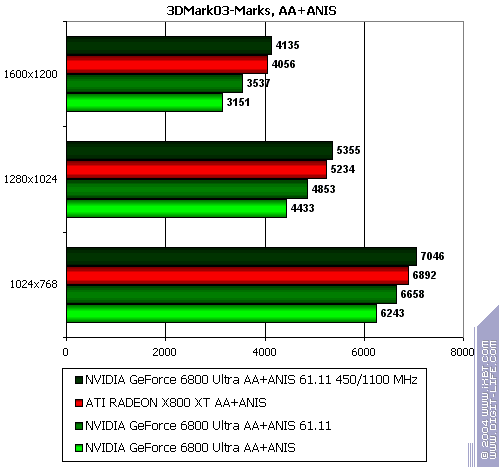

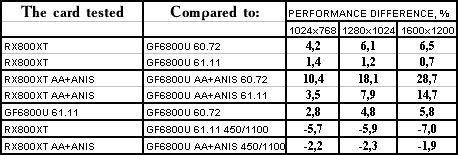

3DMark03 - MARKS

The lightest mode (no AA or anisotropy): X800 XT wins over a 400 MHz NV40 and loses to a 450 MHz one.

The heaviest mode (both AA and anisotropy): the same thing.

Thus:

- NVIDIA GeForce 6800 Ultra 450 MHz vs. ATI RADEON X800 XT: no overall verdict, as neither card scores a clear-cut victory.

- NVIDIA 61.11 vs. 60.72: an expected 5-percent increase on average.

If we consider 3DMark03 a DX9 test, then Game4 should carry the most weight, and the X800 XT's victory becomes obvious. But if we take the formula that the benchmark authors suggested, then this is mostly a DX8 test, and thus we should give the victory to the 450 MHz NV40. In other words, there are no winners and no losers.
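The dependence of the verdict on the weighting can be sketched in a few lines. This is only an illustration: the frame rates, the weights, and the names `weighted_score`, `card_a` and `card_b` are hypothetical placeholders, not measured results and not the benchmark's real formula.

```python
from typing import Dict


def weighted_score(fps: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted sum of per-test frame rates."""
    return sum(weights[name] * fps[name] for name in weights)


# Hypothetical frame rates for two cards in the four game tests.
card_a = {"game1": 300.0, "game2": 90.0, "game3": 75.0, "game4": 60.0}
card_b = {"game1": 280.0, "game2": 85.0, "game3": 72.0, "game4": 68.0}

# Hypothetical weighting that counts every test equally per frame.
all_tests = {"game1": 1.0, "game2": 1.0, "game3": 1.0, "game4": 1.0}
# Hypothetical weighting that counts only the DX9 test.
dx9_only = {"game4": 1.0}

for label, weights in (("all tests", all_tests), ("DX9 only", dx9_only)):
    a = weighted_score(card_a, weights)
    b = weighted_score(card_b, weights)
    winner = "card A" if a > b else "card B"
    print(f"{label}: A={a:.1f} B={b:.1f} -> {winner}")
```

With these made-up numbers the same two cards swap places depending on which tests are counted, which is the situation described above.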

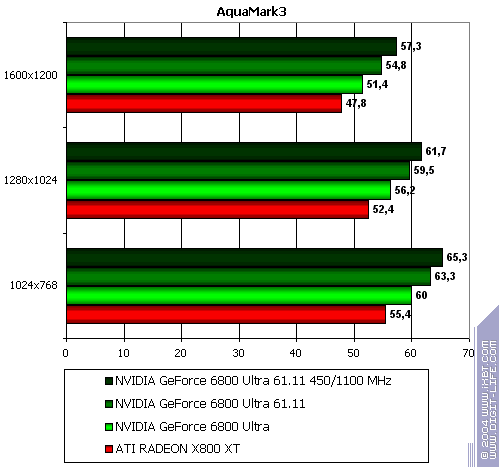

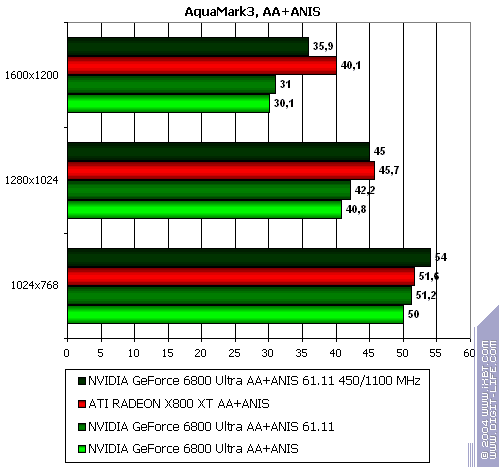

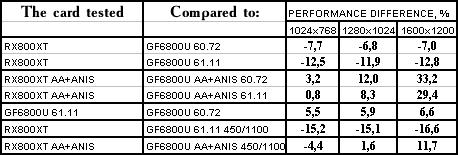

AquaMark3

The lightest mode (no AA or anisotropy): NV40 clearly wins.

The heaviest mode (both AA and anisotropy): luck smiled on ATI here.

Thus:

- NVIDIA GeForce 6800 Ultra 450 MHz vs. ATI RADEON X800 XT: no definite verdict, as each rival has both wins and losses.

- NVIDIA 61.11 vs. 60.72: a good 6-percent increase.

Another synthetic test, and once again it brings uncertainty. Of course, if we add all the pluses to all the minuses, we'll get parity, but is that really a viable method? I don't think so.
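For readers who want to reproduce driver-to-driver percentages like the ones quoted in these summaries, here is a minimal sketch of the arithmetic. The frame rates and the names `percent_gain`, `mean_gain` and `hypothetical_runs` are assumptions made for illustration; they are not our measured data.

```python
from typing import List, Tuple


def percent_gain(old_fps: float, new_fps: float) -> float:
    """Relative gain of the new driver over the old one, in percent."""
    return (new_fps - old_fps) / old_fps * 100.0


def mean_gain(runs: List[Tuple[float, float]]) -> float:
    """Arithmetic mean of the per-mode percentage gains."""
    gains = [percent_gain(old, new) for old, new in runs]
    return sum(gains) / len(gains)


# Hypothetical (old driver fps, new driver fps) pairs, one per test mode.
hypothetical_runs = [(100.0, 106.0), (50.0, 53.0), (80.0, 84.8)]

print(f"average gain: {mean_gain(hypothetical_runs):.1f}%")
```

Averaging per-mode gains rather than raw frame rates keeps a single high-fps mode from dominating the figure, but it still hides which modes improved and which did not.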

NVIDIA driver 61.11

- It should be installed either on a clean OS or after the previous version has been uninstalled and the registry cleaned, but not OVER older versions.

If these conditions are fulfilled, 61.11 works all right.

- There are problems with the control panel. You can increase frequencies, but you can't bring them back to the default level. Despite our repeated attempts to remove the overclocking, the driver kept setting the increased frequencies. The only way to get back to the defaults is to remove the driver and reinstall it.

- Our research revealed no visible cheats in 61.11, although we can't guarantee that this will be the case in all games. Thus, all performance increases were the result of real and useful optimisations.

To repeat: by "harmful cheats" we mean an artificial performance gain achieved at the expense of image quality.

- As for FarCry and its image quality, it is patch 1.1 that is to blame (we won't discuss who was behind all this, as we have no evidence). The picture looks very much like the result of lowered shader execution precision.

- Concerning 3DMark03, the benchmark authors themselves admitted that, from their point of view, 60.72 is a good driver version for NV40, and 61.11 differs little from it in this respect.

Conclusions

- Raising the core frequency to 450 MHz doesn't guarantee victory for the GeForce 6800 Ultra. Many tests (especially those based on recent games) showed that the RADEON X800 XT is still better than the overclocked NV40.

- Two questions arise wherever we see the 450 MHz GeForce 6800 Ultra overtaking its rival:

- Will all production cards have this frequency? -- The answer is likely to be "no", considering that these cards are already appearing on the North American market, and it is the 400 MHz variants that are on sale. Thus, this frequency is likely to be used only in "special chips" for cards made by Gainward and other companies that like to issue "special" products with increased performance.

- But even in this case, will 450 MHz cards be priced within USD 499? Word has it that they will cost about USD 599.

- We don't know how many X800 XT cards will be produced, and we fear that the chips will be almost "hand-selected" to work at such frequencies and with 16 pipelines. Nor are we sure that they can prove really competitive with NVIDIA products.

- Nor do we know if the 525/1150 MHz frequencies will be kept in the X800 XT Platinum Edition, which has an announced price of USD 499.

- We can't predict the pricing once both companies' products appear on the market. Much will depend on which company starts lowering its price first to make its product more attractive.

- What we see right now is that the X800 XT is preferable for new shader-heavy games, considering, among other things, its lower power consumption and the fact that it doesn't need a super-powerful PSU. On the other hand, we haven't yet seen the new NV40 revision that is said to be less demanding in terms of power. So it's too soon to draw conclusions.

- The new 61.11 version was very useful for GeForce 6800 Ultra and ensured some gains for the accelerator without using "harmful cheats". Hopefully, FarCry developers will improve the terrible NVxx quality in patch 1.2. Then the comparison will be more objective.

I would also like to mention NVIDIA's love of keeping trump cards (i.e. more optimised drivers) up its sleeve until the right moment. So who knows what NV40 will show in a while. I admit that this can be done without using "harmful cheats".

Concerning the so-called optimisations for benchmarks, even the benchmark authors themselves admit that NVIDIA (or ATI) can optimise something at their own convenience and even replace shaders. We're ordinary testers, and there's nothing we can do about it. And most media seem unwilling to do anything. Can you remember any of them discussing quality, cheats and that sort of thing? I can't. :-(

Positive optimisations that don't reduce quality deserve praise. And if this is the case with 61.11, then loud applause to NVIDIA programmers and fie upon the authors of FarCry patch 1.1 (or upon those who made the developers do it).

I would also like to praise ATI developers: their X800 XT is quite up to the mark. Time will show which product is better, of course, but at the very least this is a genuine claim to the championship. We'll also see whether they were right to ignore shader version 3.0 in this product.

Speaking of which, the Internet is full of heated debate about which is better, 3.0 or 3Dc. At the moment, we have neither DX 9.0c nor NVIDIA drivers supporting 3.0, and thus we can't assess NV40 capabilities in this respect. If shaders 3.0 show really good performance (no worse than 2.0), if the techniques give developers additional flexibility, and if 3.0 is implemented in mass-produced mid-range and low-end cards (so many if's), the technology will catch on. And then ATI will have no choice but to introduce 3.0 into their future products. But if 3.0 has problems and isn't implemented in an optimal way, chances are it will die without having evolved. Because in two years, Longhorn and shaders 4.0 will appear.

As for 3Dc, much will depend on ATI itself. It is hardly a secret that the technology's main trump card -- easy introduction into games -- may not reach many people, especially small-time developers.

Well, that brings me to the end of the article. Our next material will deal with quality -- namely, the quality of filtering implementations, and trilinear filtering in the first place.

You can see more detailed comparisons of various videocards in our 3Digests.
