
At the end of January we published our first article that reviewed a video card and a motherboard together. We had to, because the Gigabyte 3D1 could operate only with a motherboard from the same manufacturer, the K8NXP-SLI. Now I have decided to carry on the tradition and review another kit, so to speak. It is a kit only loosely, though, because Extreme N6800 video cards work with any motherboard that supports PCI-Express. So today's article covers this video card on its own and two such cards in SLI mode on the ASUS A8N-SLI-Deluxe motherboard; you will also get a glimpse of this motherboard, which is based on NVIDIA nForce4 SLI.

At the end of the review you will find a link to the list of our articles devoted to the latest generation of products, where you can see what we have already written about the new NV41. It is a reworked NV40 core that lacks 4 pixel and 1 vertex pipelines, and its AGP support has been replaced with a PCI-E module, so the chip supports the new interface natively, without the HSI bridge. With its 12 pixel and 5 vertex pipelines, it has become the performance counterpart of the GeForce 6800 AGP. Our readers will learn about the features of this ASUSTeK product as well as about SLI operation and the motherboard on nForce4 SLI.

In the article about NV41 and the cards based on it, I noted that the PCI-E segment was still small while the products for it were so numerous that positioning any given product was very difficult: prices could change from day to day depending on novelty and demand. That is why the new GeForce 6800 PCI-E is hard to weigh against a direct rival from ATI. The closest counterpart, the X800 XL, has appeared on sale but is too pricey and obviously more expensive than NV41-based cards. The cheaper X800 is on sale only as an unannounced 256 MB product, and it is not clear how long that will last, because a 128 MB version is due to be released at a much lower price. It's a complete muddle, so I will compare the card with both the X800 XL and the X800 256MB.

In total, a GeForce 6800 SLI pair will cost much more than even the GeForce 6800 Ultra and RADEON X850 XT PE flagships, but we will compare it with them anyway. So, let's start with the GeForce 6800 PCI-E from ASUSTeK.

Video card

The card obviously copies the reference design. It consumes less than 75 W, so it does not need an external power supply. Perhaps this is one of the reasons why the memory frequencies are considerably lower than the nominal ones.

Let's have a look at the graphics processor.

I have already written that gaining the PCI-E interface and losing some pipelines gave the die a vertically elongated shape, unlike NV40. I repeat that this chip is manufactured in Taiwan, so NV41 is quite possibly made by TSMC rather than IBM.

Let's get on with the examination.

Bundle

Package

ASUS A8N-SLI-Deluxe Motherboard

To avoid overloading this article, a brief description of this product has been moved to a separate page.

Installation and Drivers

Testbed configurations:

VSync is disabled. Both companies have enabled trilinear filtering optimizations in their drivers by default.

Test results

Before giving a brief evaluation of 2D, I will repeat that at present there is NO valid method for objective evaluation of this parameter due to the following reasons:

As for our samples under review, together with the Mitsubishi Diamond Pro 2070sb monitor these cards demonstrated identically excellent quality in the following resolutions and frequencies:

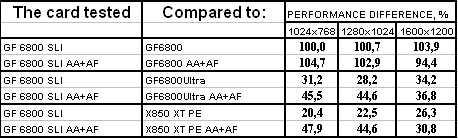

Test results: performance comparison

I will note right away that we have already tested a single 6800 before. Besides, it will soon appear in our 3Digest. That's why we publish only the 6800 SLI test results here. We used the following test applications:

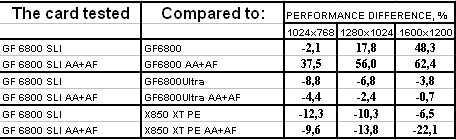

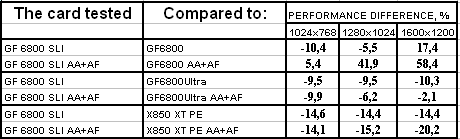

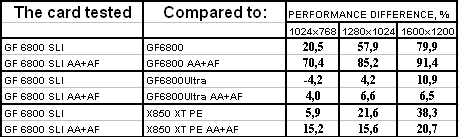

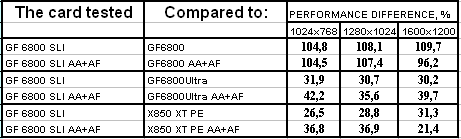

TR:AoD, Paris5_4 DEMO

This test demonstrates GPU power in shader calculations, so it is hardly surprising that the 6800 SLI outscored a single card nearly twofold. Is this 10-15% advantage over the top products enough to justify the expense? It all depends on the prices. If two 6800 cards cost about the same as a single 6800 Ultra or X850 XT PE, then the answer is yes.
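The price question raised for TR:AoD can be put into numbers. The following is only a rough sketch: the function names are made up for illustration, the flagship is normalized to a relative performance of 1.0, and the prices in the usage note are hypothetical placeholders rather than measured or quoted figures. Only the 10-15% advantage comes from the test above.

```python
def perf_per_dollar(relative_perf, price_usd):
    """Relative performance delivered per dollar spent."""
    return relative_perf / price_usd


def sli_is_worth_it(sli_advantage, pair_price_usd, flagship_price_usd):
    """True if the SLI pair gives at least as much performance per dollar
    as a single flagship card (flagship performance is taken as 1.0)."""
    flagship = perf_per_dollar(1.0, flagship_price_usd)
    pair = perf_per_dollar(1.0 + sli_advantage, pair_price_usd)
    return pair >= flagship


def break_even_pair_price(sli_advantage, flagship_price_usd):
    """Highest total price of the pair at which it still matches
    the flagship in performance per dollar."""
    return flagship_price_usd * (1.0 + sli_advantage)


if __name__ == "__main__":
    # Hypothetical prices: flagship at 500 USD, pair at 640 USD (2 x 320 USD).
    print(sli_is_worth_it(0.10, 640.0, 500.0))
    print(break_even_pair_price(0.10, 500.0))
```

With these placeholder prices, a 10% lead only pays off if the pair costs no more than about 10% above the flagship, which is the same conclusion as above: it all depends on the prices.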

FarCry, Research

The 6800 SLI didn't manage a twofold advantage here, because the game is CPU-bound: performance is just 1.5 times that of a single card. And our tandem lost to the top products, especially to the X850 XT PE, which was a full 20-25% faster.

FarCry, Regulator

The same picture.

FarCry, Pier

The 6800 SLI tandem has demonstrated its cost-ineffectiveness here as well.
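Why a CPU-bound game like FarCry gains only about 1.5 times from a second card can be illustrated with a very simplified model. This is a sketch under stated assumptions, not a description of how the driver actually schedules work: it assumes CPU and GPU work overlap fully, so the slower of the two sets the frame time, and that a second card divides the GPU time with some efficiency. The function names and the millisecond figures are hypothetical.

```python
def frame_time_ms(cpu_ms, gpu_ms, num_gpus=1, sli_efficiency=1.0):
    """Frame time when CPU and GPU work overlap: the slower side sets the pace.

    sli_efficiency = 1.0 means each extra card adds a full card's worth
    of throughput (an idealized assumption).
    """
    effective_gpu_ms = gpu_ms / (1 + (num_gpus - 1) * sli_efficiency)
    return max(cpu_ms, effective_gpu_ms)


def sli_speedup(cpu_ms, gpu_ms, sli_efficiency=1.0):
    """Speedup of two cards over one for a given CPU and GPU cost per frame."""
    single = frame_time_ms(cpu_ms, gpu_ms, 1)
    dual = frame_time_ms(cpu_ms, gpu_ms, 2, sli_efficiency)
    return single / dual


if __name__ == "__main__":
    print(sli_speedup(5.0, 20.0))   # GPU-bound scene
    print(sli_speedup(12.0, 18.0))  # partly CPU-bound scene
    print(sli_speedup(20.0, 10.0))  # fully CPU-bound scene
```

In this model a scene that needs 12 ms of CPU time and 18 ms of GPU time per frame speeds up by exactly 1.5 times with a second card, because the CPU becomes the limit as soon as the GPU time drops below 12 ms. A scene where the CPU already dominates gains nothing at all.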

Half-Life2: ixbt01 demo

And here, considering only AA+AF modes (it's stupid to buy two cards at 300-400 USD each and then disable these functions), the 6800 SLI fares quite well, provided, of course, that the occasional quality issues in the drivers are fixed.

Half-Life2: ixbt02 demo

Fiasco again. Yes, this scene depends much more on the CPU, so the payoff from the 6800 SLI is minimal.

Half-Life2: ixbt03 demo

The situation is similar to the previous one, so we can say nothing good about the 6800 SLI so far.

DOOM III

I guess the developers fine-tuned the drivers for this game first of all, hence the brilliant results of the 6800 SLI tandem. It's not only down to the drivers, though: the game itself loads the GPU heavily, so the dividends from SLI are considerable.

3DMark05: Game1

3DMark05: Game2

3DMark05: Game3

3DMark05: MARKS

The situation is similar to TR:AoD: this test loads the GPU with shader calculations, and 3DMark clearly has no gameplay. That's why SLI has demonstrated brilliant results. But are a couple of games and 3DMark worth buying two video cards at a total price higher than that of any top accelerator?

Conclusions

So, we have reviewed the GeForce 6800 PCI-E from ASUSTeK (a single card and two such cards in SLI mode) plus the motherboard based on nForce4 SLI, which took part in all these tests.

The ASUS Extreme N6800 256MB is practically a copy of the reference design. It's more expensive than its AGP counterpart, first of all because it carries 256 MB of memory instead of 128. It should be noted, though, that the memory is DDR1, so the price difference should not be very large. I repeat that the memory operating frequencies are lower than the nominal frequencies of the chips, and I have only one explanation for it: to stay within the 75 W power consumption limit and avoid installing an external power connector (and the corresponding componentry).

This card is positioned right between the GeForce 6600GT and GeForce 6800GT, sometimes dropping to the performance level of the former. But you shouldn't forget that the star feature of all 256-bit video cards is performance with AA enabled: in that mode the 6600GT is left far behind. I hope prices will settle down and you'll be able to buy such cards for 300-320 USD. In that case the 6800 SLI tandem will get its share of demand, because its total price will not be much higher than the price of a single 6800 Ultra or X850 XT PE. Sometimes this "sweet couple" outscores these top accelerators in performance. Not everywhere, not always. That's why it's too early to speak about SLI popularity with such cards.

We should also take into account that there are some driver glitches resulting in occasional quality issues. Besides, not all new games support SLI; for example, this feature does not work in Chronicles of Riddick. Here is an illustrative example from FarCry (1.9MB, DivX 5.1) with the marker enabled that shows which part of the scene is processed by which card: SLI in action. And here (3.3MB, DivX 5.1) you can see the situation in Chronicles of Riddick, where SLI simply doesn't work, which is why there is no such marker even though it's enabled in the drivers.

The modern incarnation of SLI is not just a simple coupling of two video cards that works in all applications a priori, as it was in the days of Voodoo2. Now it depends on driver support: the driver must detect a game and enable a certain SLI optimization mode for it. You should always bear in mind that a fresh game will not necessarily show its full potential in SLI mode. It will simply fail to work until NVIDIA programmers release a new driver version with added support for this game.

As for the ASUS A8N-SLI-Deluxe motherboard, this product is good in all respects: convenient assembly, PCI-E x16 slots spaced far enough apart to install even two-slot video cards, BIOS settings, excellent operation. But the cooler noise, which is difficult to get rid of (not because the cooler cannot be replaced, but because the BIOS requires a speed of 8000 rpm; have a look at the utility screenshots, which show this rotational speed), makes this excellent product very disappointing. Just imagine: constantly listening to that bee-like buzz, accompanied by howling whenever the rpm changes, is not acceptable for a High-End product. nForce4 SLI runs very hot: even this cooler heats up to 50-60 degrees, to say nothing of a lower rotational speed. The designers should have thought about it when they planned the layout and left more space around the chipset for a large flat heatsink with a low-noise fan instead of the puny thing that looks good but yells like a siren (3MB, AVI DivX 5.1).

In our 3Digest you can find more detailed comparisons of various video cards.

Theoretical materials and reviews of video cards, which concern functional properties of the GPU ATI RADEON X800 (R420)/X850 (R480)/asus-6800pcie (nv44)/X700 (RV410) and NVIDIA GeForce 6800 (NV40/45)/6600 (NV43)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.