
Today we have an unusual review: it is devoted not to a single video card, but to an entire kit consisting of a unique accelerator that carries two GeForce 6600GT processors and a motherboard based on NVIDIA nForce4 SLI. This approach is dictated by Gigabyte's policy towards the new 3D1 video card, which can operate only with the Gigabyte K8NXP-SLI motherboard. There is no point in writing a separate review of a video card that is tied to one motherboard. We don't know whether the 3D1 will be sold separately or only as part of the kit; Gigabyte has not yet decided. The video card will be issued in a limited edition. The K8NXP-SLI without the 3D1 will certainly appear on the market. As usual, it will be a "no XP" modification, that is, one with fewer bells and whistles in the bundle.

What prompted the idea of combining two GeForce 6600GT processors on a single PCB? The product requires a motherboard with SLI support anyway! I have thought hard about the point of such a solution, and nothing but marketing and advertising considerations came to mind. Of course, the 3D1 guarantees working SLI, because the two video cards on one PCB are certainly identical. According to our tests, SLI is a capricious thing: not every pair of video cards forms it easily, because the driver meticulously checks each card. But it is also clear that buying two GeForce 6600GT cards from the same manufacturer gives a 99% guarantee that SLI will work, especially if you buy them together.

Price difference? It goes without saying that two video cards cost more than one, even such a top-notch one. But you lose one of the advantages of SLI, the flexible upgrade path: you buy a single accelerator now and, as soon as you have spare money, buy another one to build up 3D performance. In this case you have to buy two accelerators at once, even though they sit on a single PCB.
This purchase is reasonable only if its price is considerably lower than the total price of two video cards. Can this video card theoretically operate not only on an nForce4 SLI motherboard but also, say, on one based on the iE7525? Besides, Gigabyte has announced an i915 motherboard with SLI support. Theoretically it's possible, but I repeat that the 3D1 can operate only on the K8NXP-SLI, at least for now. Perhaps it will later be supported by the i915 motherboard. The manufacturer has announced that the 3D1 outperforms GeForce 6600GT SLI (that is, two separate video cards). This is not surprising, and I'll give the reasons below. Now it's high time to examine our main hero.

Video card

It looks like the card was designed by people who decided to decorate it with an unusual memory chip pattern for New Year's Eve: at first it's impossible to understand why the chips are rotated by 45 degrees, all the more so as the processors are not. A little later I got the following explanation from Gigabyte: this layout places the memory chips at an equal distance from each processor, which, according to the designers, saves PCB room and makes the card more compact. Naturally, the combined power consumption of both GPUs and the doubled number of memory chips exceeds the 75 W that a PCI-E slot can supply. That's why the card is equipped with an external power connector, as on higher-end models. Let's have a look at the graphics processor.

These two chips are obviously no different from the regular 6600GT processors installed on separate video cards. The card itself relies on a trick: its PCI-E x16 interface is in fact not a single x16 link but two x8 links. A single PCB simply accommodates two independent video cards. The only connection between them is an on-board counterpart of the bridge adapter that we install on two SLI video cards. The system still sees TWO VIDEO CARDS. So this video card will be supported only by motherboards that can route two x8 links to one PCI-E x16 slot. Our conclusion: this product is doomed to be tied to Gigabyte motherboards. Although all nForce4 SLI motherboards can switch a slot from x16 to x8, they can hardly route 2x8 to the first slot. By the way, the first thing I did when I got the 3D1 was to install it into the ASUS A8N SLI, which had been in the testbed until then. The system booted, I installed the video drivers, and then the system froze during initialization. Q.E.D.
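To put the "doubled x8" arrangement into numbers, here is a minimal sketch of the link arithmetic. It assumes first-generation PCI Express signalling (2.5 GT/s per lane with 8b/10b encoding, which gives 250 MB/s per lane in each direction); the function names are illustrative and do not come from any driver or tool.

```python
# Assumption: PCI Express 1.x, 2.5 GT/s per lane, 8b/10b line encoding.
RAW_TRANSFERS_PER_S = 2_500_000_000  # raw bits per second on one lane
PAYLOAD_BITS = 8                     # of every 10 transmitted bits, 8 carry data
ENCODED_BITS = 10


def lane_bandwidth_mb_s() -> int:
    """Usable bandwidth of one lane, one direction, in MB/s."""
    payload_bits_per_s = RAW_TRANSFERS_PER_S * PAYLOAD_BITS // ENCODED_BITS
    return payload_bits_per_s // 8 // 1_000_000


def link_bandwidth_mb_s(lanes: int) -> int:
    """Usable one-direction bandwidth of a link with the given lane count."""
    return lanes * lane_bandwidth_mb_s()


def split_slot(total_lanes: int, devices: int) -> list[int]:
    """Divide the lanes of one slot evenly between several devices."""
    if devices <= 0 or total_lanes % devices != 0:
        raise ValueError("lanes must divide evenly between devices")
    return [total_lanes // devices] * devices


if __name__ == "__main__":
    for lanes in split_slot(16, 2):
        print(f"x{lanes} link: {link_bandwidth_mb_s(lanes)} MB/s per direction")
```

Under these assumptions each GPU on the 3D1 gets the same x8 link it would get as a separate card in an SLI motherboard, so the single-slot design by itself neither adds nor removes bus bandwidth.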

Let's get on with the examination. We are not going to review the bundle separately, because the card and the motherboard share the same package (that's why you are advised to read the next chapter, on the K8NXP-SLI).

Gigabyte K8NXP-SLI motherboard

So as not to overload this article, a brief description of this product has been moved to a separate page.

Installation and Drivers

Testbed configurations:

VSync is disabled. Both companies have enabled trilinear filtering optimizations in their drivers by default. It should be noted that the 3D1 is detected by RivaTuner, so there are no problems with overclocking. As for the frequencies, we managed to raise them only to 540/1250 MHz (not much of an overclock for this GPU). There are severe limitations here due to the PCB layout and to the stability of both processors when they heat up.
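The size of that core overclock is easy to check. The sketch below assumes a stock core clock of 500 MHz, taken from the "two 500 MHz processors" mentioned in the 3DMark05 section; the stock memory clock of the 3D1 is not stated in this review, so the memory gain is deliberately left out. The function name is illustrative.

```python
def overclock_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Relative frequency increase over the stock clock, in percent."""
    if stock_mhz <= 0:
        raise ValueError("stock frequency must be positive")
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100.0


if __name__ == "__main__":
    # 500 MHz stock core (assumed from the text), 540 MHz reached in our test.
    print(f"core overclock: {overclock_percent(500, 540):.1f}%")
```

A single-digit percentage is consistent with the remark that this is a modest result for this GPU.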

Test results

Before giving a brief evaluation of 2D quality, I will repeat that at present there is NO valid method for objective evaluation of this parameter, for the following reasons:

As for our samples under review, together with the Mitsubishi Diamond Pro 2070sb these cards demonstrated identically excellent quality in the following resolutions and frequencies:

Test results: performance comparison

We used the following test applications:

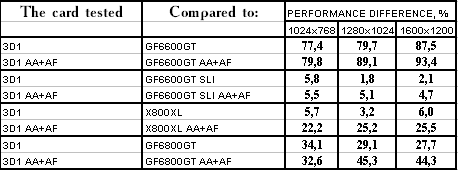

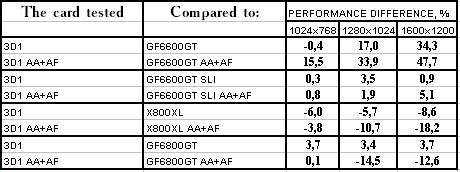

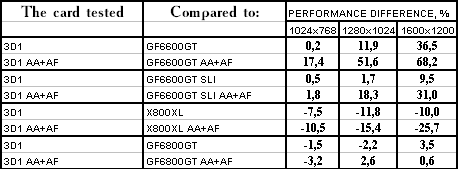

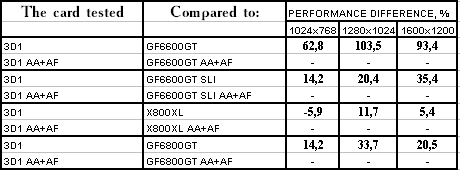

TR:AoD, Paris5_4 DEMO

In fact, this test measures the capacity of the shader units. That's why the 3D1 is an obvious leader here: its advantage over the regular 6600GT nearly reaches 100%. But don't forget that the memory on this card operates at increased frequencies.
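The percentage advantages quoted in this and the following tests are relative gains over a single 6600GT. A minimal sketch of that calculation is given below; the frame rates in the usage example are hypothetical placeholders, not measured results from our charts.

```python
def gain_percent(baseline_fps: float, tested_fps: float) -> float:
    """Advantage of the tested card over the baseline card, in percent."""
    if baseline_fps <= 0:
        raise ValueError("baseline frame rate must be positive")
    return (tested_fps - baseline_fps) / baseline_fps * 100.0


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    single_card_fps = 40.0
    dual_gpu_fps = 60.0
    print(f"gain: {gain_percent(single_card_fps, dual_gpu_fps):.0f}%")
```

A gain close to 100% means the second GPU is almost fully used. A much smaller gain usually indicates that something other than the video card, typically the CPU, limits the frame rate.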

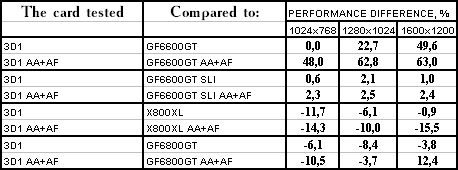

FarCry, Research

The advantage over the 6600GT does not exceed 63%. This is explained by the game being CPU-bound: even the maximum load on the video card cannot exhaust its resources. By the way, there is practically no difference from SLI either. As for the competition with ATI, the 3D1 loses both to the X800 XT (a more expensive card, so that's OK) and to the X800 XL (and that does not look good for the 3D1).

FarCry, Regulator

The same picture.

FarCry, Pier

No comments, the same alignment of forces. This game has been bad luck for NVIDIA products for a long time :).

Half-Life2: ixbt01 demo

This game depends on the CPU even more, which is why the gain from the 3D1 (as from SLI in general) does not reach even 50%. And again it is defeated by the ATI products. It should be said, though, that the GeForce 6800GT cards did not do that badly.

Half-Life2: ixbt02 demo

The picture is almost the same, with the only difference that the 3D1 lost more ground and fell further behind the ATI products and its bigger brother, the 6800GT.

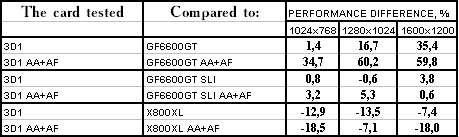

Half-Life2: ixbt03 demo

An interesting result. The X800 XL is the obvious winner in this test, but our card demonstrates a noticeable gain relative to the 6600GT SLI, and precisely in the AA+AF modes (is the memory playing its role?). Another thing: it is almost on a par with the 6800GT, which is obviously more expensive.

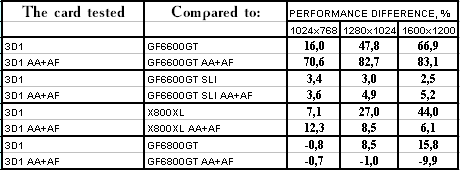

DOOM III

This game again exploits the technological advantages of the NV4x architecture, and you can see how much gain SLI provides. The increased memory frequency brought a 5% advantage over the 6600GT SLI. The competition with the 6800GT also looks good (to say nothing of the defeat of the ATI X800).

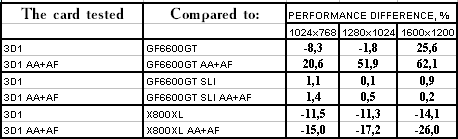

3DMark05: Game1

3DMark05: Game2

3DMark05: Game3

3DMark05: MARKS

In fact, the result could have been predicted after the TR:AoD tests, because 3DMark05 lays special stress on shader performance. And here we have two 500 MHz processors with 16 pipelines in total; the power speaks for itself. That's why the 3D1 is certainly a leader.
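The "two 500 MHz processors, 16 pipelines in total" remark can be turned into a rough theoretical figure. The sketch below multiplies clock by pipeline count and sums over both GPUs. It assumes the 16 pipelines are split evenly, 8 per GPU, and it gives only an upper bound: it ignores memory bandwidth, SLI overhead and any other limit inside the chip. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    core_mhz: int         # core clock in MHz
    pixel_pipelines: int  # number of pixel pipelines


def peak_mpixels_per_s(gpus: list[Gpu]) -> int:
    """Theoretical peak: one pixel per pipeline per clock, summed over all GPUs."""
    return sum(g.core_mhz * g.pixel_pipelines for g in gpus)


if __name__ == "__main__":
    # Assumption: 16 pipelines in total means 8 per GPU at 500 MHz each.
    card = [Gpu(core_mhz=500, pixel_pipelines=8)] * 2
    print(f"theoretical peak: {peak_mpixels_per_s(card)} Mpixels/s")
```

Real shader-heavy tests will not reach this bound, but it shows why a pair of mid-range chips can look strong in benchmarks dominated by shader work.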

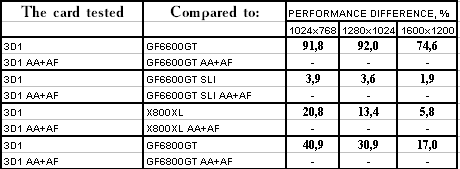

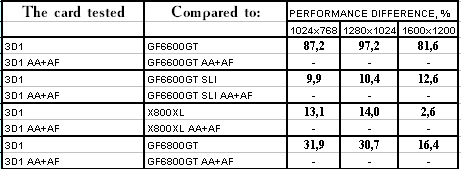

At the end of the review I'd like to say that all the problems we previously had with quality on SLI cards were fixed in the 71.20 drivers. That's very good.

Conclusions

One can say that the success of this KIT depends on the price! If it's adequate, we'll have:

- an excellent motherboard with a full set of features for the AMD Athlon 64/FX platform (Socket 939). It has disadvantages as well (so far we are reviewing only the design peculiarities of the board); they are described on the separate page devoted to the board.
- a very powerful solution, the 3D1, whose performance nearly reaches that of the 6800GT, but which is cheaper. It's clear that the more games rely heavily on shaders, the more advantageous the 3D1 will be.

But the 3D1 also has negative points. Firstly, it is actually outscored by the cheaper X800 XL in popular games, except for DOOM III. Secondly, there are the power supply requirements (an external power connection is mandatory, while the X800 XL does not need one). Thirdly, the 3D1 is large. As this card can be used only with the K8NXP-SLI, the SATA connectors located right under the video card become unavailable. Fourthly, the second PCI-E slot is disabled when the 3D1 is installed, so you cannot use it for a second video card to drive 4 monitors.

The main issue is the necessity of this solution in general. I repeat: if the price is OK, that is, lower than the price of a similar motherboard plus two 6600GT cards, then this purchase is justified. If it's cheaper than a motherboard plus an X800 XL, its success is guaranteed.

Now have a look at the calendar: 2005 has begun, and the market is split 95% to 5% between the AGP and PCI-E sectors. The question is whether this kit or the separate K8NXP-SLI will become the engine that pulls PCI-E out of the low-demand mire. Time will tell.

In our 3Digest you can find more detailed comparisons of various video cards.

According to the test results, Gigabyte 3D1 (2xGeForce 6600GT) gets the Original Design award (January).

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.