
Characters:

Popular synthetic actors from finished and upcoming full-length 3D movies also take part in this movie.

Around the scenario: "Run, Industry, run"!

NVIDIA's GPU codenamed NV30, long awaited and one of the most controversial GPUs, was announced on November 18, 2002. The announcement was coupled with a new marketing name, GeForce FX. At first NVIDIA was going to give the new product a new trademark to emphasize its importance, but it turned out to be impossible to give up the existing brand. According to public-opinion polls, the GeForce brand is known to far more people than the company name NVIDIA. It's like with Pentium. The parallel with Intel, a recognized locomotive of the IT industry, fits well here, as at the moment NVIDIA is the flagship on the 3D graphics ocean, as 3dfx, the creator of the first really successful hardware 3D solution, was in its time. Symbolically, while working on the NV30 the developers used a lot of ideas from the 3dfx project codenamed Mojo, which was never completed.

It's no secret that the GeForce FX is an incarnation of a so-called flexibly programmable graphics architecture, i.e. a graphics processor. This chip should therefore be called a GPU, but this term was used earlier for the less flexible solutions of the previous generation of accelerators (let's call it the DX8 generation: NV2x, R200 etc.). Let's glance at the transition from a fixed architecture to flexibly programmable ones:

This is an "evolutionary revolution" whose current stage is not yet complete. One or maybe several more stages are coming in the near future:

Well, we'll see what we will see. But the two latter steps can take up many more than two DX versions, both because of Microsoft and because of the guileful intent of graphics chip makers. On the other hand, while in such an evolutionary layout the current stage looks expected, users and programmers may well take it as a revolutionary period, as it provokes a switchover to using the flexible programming capabilities of accelerators. Big flexible pixel shaders, even without control over the command stream, can bring to the PC a previously unreachable visual level, making a much greater jump than the first attempts of the previous generation, which were clamped by the awkward assembler code of shaders and a limited number of pixel instructions. Quality, rather than quantity, can win this time, and the epoch of DX9 accelerators can become as significant as the arrival of the 3dfx Voodoo. If you remember, the Voodoo wasn't conceptually the first. But it did provoke a quantitative jump in accelerators, which then turned into a qualitative jump in the development of games for them. I hope this time the industry will be given a powerful spur by the possibility of writing complex vertex and pixel shaders in higher-level languages.

Let's leave aside the issue of the revolutionary nature of DX9 solutions, which sales managers speak about so much. I'll just say that while the revolutionary character of the solution as a whole is yet to be proved, the revolutionary character of the separate technologies of the GeForce FX is beyond doubt. The accelerators are gradually approaching common general-purpose processors in several aspects:

The accelerators are striding toward CPUs, and they have already outpaced average general-purpose processors in the number of transistors or peak computational power. Convergence is only a matter of time and flexibility. CPUs are also drawing nearer by increasing their performance, especially in vector operations, and soon they will be able to handle yesterday's graphics acceleration tasks. Moreover, the degree of brute-force parallelism of CPUs is growing as well - just remember HT or multicore CPUs. The direct confrontation is not close, but it will definitely take place, and primarily between the trendsetters of each sphere rather than between the classes of devices (the outcome will be called a CPU or a CGPU) :). For now the fight is for users' wallets, and here graphics doesn't lose to CPUs.

Before going further I recommend that you read (if you haven't yet) the following key theoretical materials:

Now we are finishing the digression and turning to our main hero - the GeForce FX.

GeForce FX: leading role in focus

Straight away: the key specifications of the new GPU:

And now look at the block diagram of the GeForce FX:

Functions of the blocks:

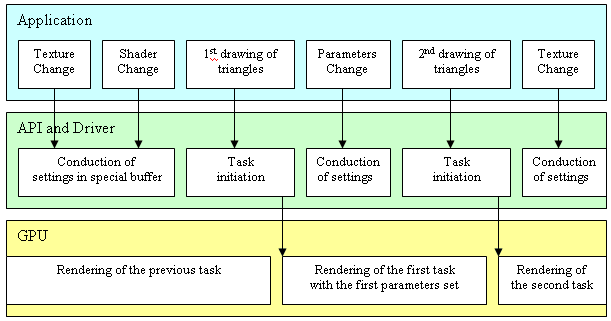

Let's go through the main units of the new chip in approximately the same order as the usual data flow. Along the way we will comment on their functions and architecture (both the basic one required by DirectX 9.0 and the additional capabilities of the GeForce FX) and make small lyrical digressions.

System interface

Obviously, the data the chip works with, and the programs and commands it processes, are formed by the CPU with the help of the drivers and then get into the graphics chip via a special graphics bus (AGP), which acts as the system interface. Take a close look at it:

As you can see, the program addresses the API quite often, changing different parameters (in modern applications this primarily means setting new textures and shaders), and then sends a buffer of geometrical data to be drawn by the accelerator using the previously defined shaders and textures. But API requests are not sent directly to the accelerator - that would be too wasteful. The driver accumulates the settings in a special buffer in system memory, and only when the time comes to draw certain geometry with them is the accelerator given that buffer with the saved settings (commands), the shader code and links to the geometrical data to be drawn. From this point on the accelerator interprets the buffer itself, adjusting its internal units, and draws the geometry it was given, fetching data from local and/or system memory via the system interface (the geometrical data are often located, entirely or partially, in its memory). The driver does not wait for the accelerator to complete its work; it returns control to the program and keeps on collecting settings and commands in a new buffer. It has to wait only if a drawing request comes before the accelerator has finished the previous work.

By the way, it isn't quite correct to call the data geometrical, because each vertex can have a flexible record format set by the program. The record consists of a set of basic data types such as floating-point and integer values and vectors, color values etc. How these parameters are to be interpreted - which of them define the geometrical coordinates of a point in space, which are the numbers of combined textures, and which are texture coordinates, color values or other parameters of pixel shaders - is decided by the code of the vertex shader, i.e. by the programmer.
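To make the idea of a program-defined vertex record more concrete, here is a minimal sketch in Python. The attribute names, their order and the byte layout are purely illustrative assumptions, not the format of any particular API or driver; the point is only that the application declares the layout and both sides interpret the raw bytes according to that declaration.

```python
import struct

# Hypothetical per-vertex record layout chosen by the application.
# Each entry is (attribute name, struct format code); names are illustrative only.
VERTEX_LAYOUT = [
    ("position", "3f"),  # three 32-bit floats
    ("normal", "3f"),    # three 32-bit floats
    ("uv0", "2f"),       # one set of texture coordinates
    ("color", "4B"),     # four unsigned bytes (RGBA)
]

# Little-endian, no padding: the record is exactly the sum of its fields.
RECORD = struct.Struct("<" + "".join(code for _, code in VERTEX_LAYOUT))


def pack_vertex(position, normal, uv0, color):
    """Serialize one vertex into the byte record described by VERTEX_LAYOUT."""
    return RECORD.pack(*position, *normal, *uv0, *color)


def unpack_vertex(blob):
    """Interpret a byte record according to VERTEX_LAYOUT (the 'shader side')."""
    values = RECORD.unpack(blob)
    vertex, index = {}, 0
    for name, code in VERTEX_LAYOUT:
        count = int(code[:-1])  # "3f" -> 3 components
        vertex[name] = values[index:index + count]
        index += count
    return vertex


blob = pack_vertex((1.0, 2.0, 3.0), (0.0, 1.0, 0.0), (0.5, 0.25), (255, 128, 0, 255))
print(RECORD.size, unpack_vertex(blob)["uv0"])
```

Changing `VERTEX_LAYOUT` changes what every byte means, which is exactly why the interpretation has to live in the vertex shader rather than in fixed hardware.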
You thus describe your internal formats of object representation and program the accelerator so that it can interpret them correctly. Functions earlier performed by the CPU are now put on the shoulders of a flexibly programmable processor.

In the GeForce FX the system interface is implemented via the AGP bus, in particular its third version. Let me sum up the differences and new features of the AGP 3.0 standard, also known as AGP 8x, in comparison with AGP 4x:

Will AGP 3.0 bring advantages for accelerators of the latest generation? First of all, it depends on the programs. New complex pixel shaders and nontrivial rendering and lighting models do increase the number and size of the textures used, as well as the number of parameters transferred with each vertex to describe the properties of drawn materials. Besides, the geometrical performance of the new chip has markedly increased compared to the previous generation, which is also going to raise the requirements for system bus bandwidth. But most modern applications use scenes of moderate complexity whose textures fit entirely into the accelerator's memory, and geometrical data do not take more than a third of the AGP 4x bandwidth.

Cinematographic quality, which is so much discussed these days in connection with the GeForce FX, will certainly increase the demands on the system bus, as such highly detailed scenes can take gigabytes. The question is how soon it is going to arrive. Most likely, more than one generation will pass before we see a scene of quality and size comparable to today's popular synthetic movies rendered in hardware. Be that as it may, AGP 8x support is necessary, as the bandwidth of AGP 4x is no longer sufficient. It is also necessary for complying with modern standards and for reducing the delays in addressing the accelerator, which are becoming a real enemy today. There is nothing to worry about - the new AGP version will be enough for the GeForce FX. It is probably the last classical AGP in its present form - the next general-purpose graphics bus will probably be based on the serial technologies developed within the 3GIO project.

Memory controller and local buffer

NVIDIA's unexpected decision to use a 128 bit memory bus coupled with high-speed DDR II memory caused a lot of disputes. On the one hand:

On the other hand:

Nevertheless, I have no doubt that the experts at NVIDIA thought their decision over well. They most likely had definite figures obtained in the course of emulation or from test chips. The strongest argument is the memory optimization technologies. With such technologies it is easier to reach an effective memory throughput comparable to or even greater than that of 256 bit solutions. We will look into this question in detail further on. The memory controller is based on the 4-channel scheme (4x32) typical of NVIDIA products, which showed excellent results in the chips of the previous generation. The internal caches of the chip are connected to the 4 memory channels and the system bus by a full crossbar, as in the previous generation.
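The bus-width argument comes down to simple arithmetic, sketched below in Python. The 4x32 bit channel layout and the 1 GHz effective data rate are the figures quoted in this article for the GeForce FX; the 256 bit competitor at 600 MHz effective is a purely hypothetical data point chosen for illustration, and the function names are my own.

```python
def peak_bandwidth_gb_s(bus_width_bits, effective_rate_mhz):
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_mhz * 1e6 / 1e9


def required_efficiency(narrow_peak, wide_peak):
    """How much more efficiently the narrow bus must be used to break even."""
    return wide_peak / narrow_peak


# GeForce FX as described in the article: 4 channels x 32 bit, 1 GHz effective.
CHANNELS = 4
CHANNEL_WIDTH_BITS = 32
nv30_peak = peak_bandwidth_gb_s(CHANNELS * CHANNEL_WIDTH_BITS, 1000)

# Hypothetical 256 bit design at 600 MHz effective (an assumption, not a spec).
wide_peak = peak_bandwidth_gb_s(256, 600)

print(nv30_peak, wide_peak, required_efficiency(nv30_peak, wide_peak))
```

Under these assumptions the narrow bus only has to be used about 1.2 times more efficiently than the wide one to deliver the same effective throughput, which is the kind of margin the memory optimization technologies are supposed to cover.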

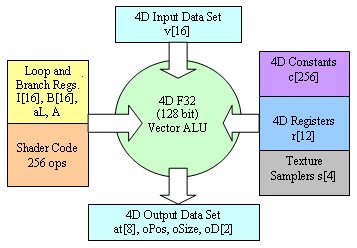

Four main data streams are formed when the chip is working - three for reading (textures, frame buffer, geometrical information) and one for writing (frame buffer). Besides, part or all of the geometrical information can come from outside via the system bus. A 4-channel memory controller suits such a scenario best - with two channels the performance would drop significantly, and with more than 4 channels it wouldn't rise much, though the number of chip pins and the complexity of the board would be much greater. In this respect it's interesting to take a look at the RADEON 9500/9500 PRO - in this configuration the R300 has only two working memory controllers, and it's quite possible that this factor determines its inferior performance. Cheaper versions of the GeForce FX will be able to use slower memory while still keeping 4 channels in the 128 bit configuration.

From the system interface and the local memory interface the data proceed to the chip's internal caches - a geometry cache, a frame buffer cache and a texture cache. Then they go to the respective units. Let's start with the geometry:

Vertex processors

The GeForce FX has three (!) independent vertex processors which fully comply with (and even exceed) the DX9 specification for vertex shaders 2.0. Even in current applications the geometrical performance of such a trio must be 2..3 times higher than that of the geometry unit of the NV25, thanks both to the greater number of processors and to the higher core clock speed. But that's not all. Here is a general block diagram of the DX9 vertex processor:

So, intermediate data are processed and stored in the F32 floating-point format. At the input the shader program has up to 16 4D vectors - the source geometrical data we were speaking about above. At the output we have a vertex position in screen coordinates, a point size if sprites are involved, 8 vectors of texture coordinates and 2 vectors of color values, which are then interpolated for each pixel while triangles are rendered. After interpolation the values of these vectors get into the pixel shader as input parameters. Besides, we have 256 constant vectors assigned from outside and 12 temporary general-purpose registers for storing intermediate data. There are also 4 special registers - samplers, which let the vertex shader fetch values from textures in order to use textures as displacement maps and for other similar effects.

From this point of view a vertex processor resembles any other general-purpose processor. But what about programmability? A shader is a program which controls a vector ALU processing 4D vectors. A shader program can be up to 256 ops long, but it can contain loops and jumps. For organizing loops there are 16 integer counter registers I, which are accessible from the shader only for reading, i.e. they are constants assigned from outside, in the application. For conditional jumps there are 16 logic (one-bit) registers B. Again, they can't be changed from the shader. As a result, all jumps and loops are predetermined and can be controlled only from outside, from the application. Remember that this is the basic model declared by DX9. Besides, the overall number of instructions that can be executed within the shader, with all loops and branches taken into account, is limited to 65536. Why such strict limitations? Actually, to meet these requirements the chip can do without any logic controlling the execution of loops and jumps. It's enough to provide sequential execution of shaders up to 65536 instructions long and to unroll all conditions and loops in advance in the driver. In effect, every time the program changes the constants controlling branch and loop parameters, a new shader has to be loaded into the chip. The R300 uses exactly this approach. It makes it possible to have only one set of control logic and one copy of the vertex program shared by all vertex processors. But this approach doesn't make the vertex processor a normal processor - we can't make on-the-fly decisions unique to each vertex based on criteria calculated right in the shader. Moreover, such unrolling of jumps and loops can make replacing the shader, or the parameters controlling its jumps, quite demanding in terms of CPU resources. That is why ATI recommends changing vertex shaders as seldom as possible - the cost of such a replacement is comparable to a change of the active texture.
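The driver-side unrolling described above can be sketched in a few lines of Python. This is only a toy model under my own assumptions: the nested-tuple program representation and the instruction names are hypothetical, and a real driver works on compiled shader bytecode. What it illustrates is that with read-only loop counters and boolean registers the whole control flow can be flattened before the chip ever sees the program.

```python
MAX_EXECUTED = 65536  # execution limit quoted in the text


def unroll(program, loop_counts, flags):
    """Flatten static loops and branches into a linear instruction list.

    program     -- list of opcodes (str), ("loop", i_reg, body) or ("if", b_reg, body)
    loop_counts -- values of the 16 integer counter registers (set by the application)
    flags       -- values of the 16 boolean registers (set by the application)
    """
    flat = []
    for op in program:
        if isinstance(op, str):
            flat.append(op)
        elif op[0] == "loop":
            _, reg, body = op
            flat.extend(unroll(body, loop_counts, flags) * loop_counts[reg])
        elif op[0] == "if":
            _, reg, body = op
            if flags[reg]:
                flat.extend(unroll(body, loop_counts, flags))
        else:
            raise ValueError("unknown construct: %r" % (op,))
    if len(flat) > MAX_EXECUTED:
        raise ValueError("shader exceeds the executed-instruction limit")
    return flat


# Hypothetical shader: a per-light loop and an optional fog branch.
shader = ["mov", ("loop", 0, ["mad", "add"]), ("if", 1, ["fog"]), "out"]

three_lights_fog = unroll(shader, [3] + [0] * 15, [False, True] + [False] * 14)
one_light_no_fog = unroll(shader, [1] + [0] * 15, [False] * 16)
print(len(three_lights_fog), len(one_light_no_fog))
```

The two calls produce different linear programs from the same source, which is why changing a controlling constant effectively means uploading a new shader.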

NVIDIA went a step further and made the vertex processors dynamically controlled. Each vertex processor of the GeForce FX has its own pointer to the current command and its own set of logic controlling jumps and loops. Such a processor is more complicated but more flexible in programming. We can use newly obtained computational results as the determining parameters in loops and conditions, as is done in usual programming languages for usual general-purpose CPUs. We can say that the vertex processors of the GeForce FX advocate an individual approach to each vertex. :) Such a solution makes the chip more complicated, but it also simplifies the work of programmers. For example, it's not necessary to divide an original model into several parts, at loading or at modelling time, in order to process its different parts using different methods - one can implement the necessary selection inside the vertex shader and use just one shader instead of three or four different ones. NVIDIA gives a good example in its documentation for developers. Moreover, it takes quite a lot of time to replace a vertex shader, both because of checking and compilation and because of the significant latency of the AGP system bus. It may well turn out to be much more advantageous to use one big shader consisting of several dynamically switched algorithms than to change small specialized shaders frequently.
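The difference between splitting a model on the CPU and selecting the method inside one shader can be illustrated with a small Python sketch. Everything here is hypothetical: the two "methods", the per-vertex `weight` attribute and the way shader switches are counted are my own simplifications, not NVIDIA's example.

```python
def transform_rigid(vertex):
    """Hypothetical method A: pass the position through unchanged."""
    return vertex["pos"]


def transform_swaying(vertex):
    """Hypothetical method B: lift the vertex in proportion to its weight."""
    x, y, z = vertex["pos"]
    return (x, y + vertex["weight"], z)


def draw_static(model):
    """Static control flow: the CPU splits the model and switches shaders."""
    batches = {"rigid": [], "swaying": []}
    for vertex in model:
        key = "swaying" if vertex["weight"] > 0.0 else "rigid"
        batches[key].append(vertex)
    out, shader_switches = [], 0
    for key, shader in (("rigid", transform_rigid), ("swaying", transform_swaying)):
        if batches[key]:
            shader_switches += 1  # one shader upload per non-empty batch
            out.extend(shader(v) for v in batches[key])
    return out, shader_switches


def combined_shader(vertex):
    """Dynamic control flow: the branch is taken per vertex, inside the shader."""
    if vertex["weight"] > 0.0:
        return transform_swaying(vertex)
    return transform_rigid(vertex)


def draw_dynamic(model):
    """The whole model goes through one shader in one batch."""
    return [combined_shader(v) for v in model], 1


model = [
    {"pos": (0.0, 0.0, 0.0), "weight": 0.0},
    {"pos": (1.0, 0.0, 0.0), "weight": 0.5},
    {"pos": (2.0, 0.0, 0.0), "weight": 0.0},
]
static_out, static_switches = draw_static(model)
dynamic_out, dynamic_switches = draw_dynamic(model)
print(static_switches, dynamic_switches)
```

Both paths produce the same set of transformed vertices; the dynamic one simply does it without reordering the model and with a single shader upload.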

Besides, it's possible to use various optimizations, for example, to calculate light from a close source by one algorithm and from a far source by another - this will also save performance. We can also interrupt execution of a shader on a certain condition to make calculations more efficient.

It's interesting that the GeForce FX incorporates three vertex processors, matching the number of vertices in a triangle, instead of four as in ATI's product. It's clear that dynamic jumps cost NVIDIA additional transistors. Three processors can turn out to be either a weak point or a quite balanced solution - we still don't have enough information on the performance of a single vertex processor per unit of clock speed. As with usual CPUs, it can differ a lot between solutions. Previous tests with synthetic applications showed that NVIDIA can make perfectly balanced products. Besides, the core frequency of the GeForce FX is noticeably higher than that of the RADEON 9700 PRO. In practical testing we will see how balanced the new chip is and compare it with ATI's products. And right now let's take a glance at the comparison table of the vertex processors' capabilities:

The parameters in bold type allow NVIDIA to speak about support of shaders on the 2.0+ level. Of course, dynamic execution is desirable. Sooner or later it will become an obligatory requirement for all shaders, both pixel and vertex. But for now, within this generation of accelerators and API, it's not clear how popular it's going to be with programmers. What if they use the least common denominator (as happened with pixel shaders 1.4), i.e. the base version 2.0? I think this will be the case in the near future. But NVIDIA's new solution can be supported more widely in OpenGL, where programmers quite often have to write two versions of the code anyway. Also remember that the instruction set of vertex shaders in DX9 was extended compared to DX8 with normal trigonometric functions and conditional write and reorder instructions. It's interesting that the implementation of trigonometric functions in the GeForce FX is very quick - it takes the same time to calculate SIN or COS as a couple of additions (!). It seems that it relies on special matrix execution units together with big tables of constants. Here are the commands supported by the vertex processors of the GeForce FX:

One more curious peculiarity of the GeForce FX is its implementation of displacement maps. Actually, the chip doesn't have a separate hardware unit controlling tessellation of N-Patches and displacement maps - there is just a possibility (which is beyond vertex shaders 2.0) not only to use data fetched from a texture in the shader but also to write the computational results of a vertex shader into a certain memory area which can be interpreted as a texture or a vertex buffer. This won't be open to developers and is meant only for internal use, in particular for implementing DM without a separate hardware unit. But in future DX versions it will be possible to write calculated data from a vertex shader into the accelerator's memory, up to the generation of an arbitrary number of new vertices, which is necessary for supporting arbitrary HOS on the shader level.

Pixel processors and texture units

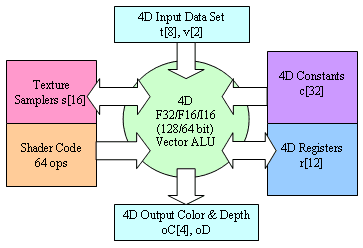

First of all, let's again look at the logic diagram of a pixel processor in terms of the basic requirements of PS 2.0 in DX9:

So, at the input we have 8 texture coordinates interpolated across the triangle surface (with perspective correction) and two interpolated color vectors. Originally these vectors were calculated for each triangle vertex, but to obtain their values for each pixel rendered by the pixel shader we have to interpolate them depending on the position of the given pixel relative to the vertices. From the programmer's point of view these vectors can contain anything, not just texture coordinates and color. The pixel shader defines how to use them. At the output we have up to 4 different color values (each a 4D vector) written into different output frame buffers and one depth value, which we can change and write. We have at our disposal 32 constant vectors and 12 temporary vector registers. This is how pixel shaders are arranged in the R300, according to the letter of the DX9 specification, except for one extension used both in the R300 and in the NV30 but still not mentioned in the DX9 documentation - in the shader we can use the calculated depth buffer value and the original color value of the given pixel to implement our own blending or to make certain decisions.

In the course of executing a shader we can perform texture fetches; up to 16 different textures are available (the nesting depth of dependent fetches mustn't exceed 4). For texture fetching there is a special command which indicates where to send the result, from which texture (one of the 16 sampler registers) and at which coordinates the data are to be fetched. Contrary to the previous generation, this is a normal shader command and can be used in any place and in any order. But the number of fetch commands is limited - although the total length of a shader can reach 96 instructions, the number of texture requests mustn't exceed 32 and the number of other instructions mustn't exceed 64.
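The limits listed above are easy to collect into a small checker. The Python sketch below only restates the numbers given in this article for the base PS 2.0 model; the function name and the way a shader is summarized by four counts are my own assumptions, and the real DirectX runtime validates shaders quite differently.

```python
# Base PS 2.0 limits as quoted in the text above.
MAX_TEXTURE_OPS = 32
MAX_ARITHMETIC_OPS = 64
MAX_DEPENDENT_DEPTH = 4
MAX_SAMPLERS = 16


def check_ps20_limits(texture_ops, arithmetic_ops, dependent_depth, samplers_used):
    """Return a list of human-readable violations (empty if the shader fits)."""
    problems = []
    if texture_ops > MAX_TEXTURE_OPS:
        problems.append("too many texture fetches: %d > %d" % (texture_ops, MAX_TEXTURE_OPS))
    if arithmetic_ops > MAX_ARITHMETIC_OPS:
        problems.append("too many arithmetic ops: %d > %d" % (arithmetic_ops, MAX_ARITHMETIC_OPS))
    if dependent_depth > MAX_DEPENDENT_DEPTH:
        problems.append("dependent fetches nested too deep: %d > %d"
                        % (dependent_depth, MAX_DEPENDENT_DEPTH))
    if samplers_used > MAX_SAMPLERS:
        problems.append("too many samplers: %d > %d" % (samplers_used, MAX_SAMPLERS))
    return problems


# A shader exactly at the limits (32 + 64 = 96 instructions) passes.
print(check_ps20_limits(32, 64, 4, 16))
# One extra texture fetch does not, even though the total is far below 96.
print(check_ps20_limits(33, 10, 1, 2))
```

Note that the two budgets are separate: unused arithmetic slots cannot be spent on extra texture fetches.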
As we have only 8 coordinates interpolated across triangles, we can't call these 16 textures normal in terms of the old accelerators. Earlier a TMU was a unit including both a mechanism for texture fetching and filtering and an interpolator of texture coordinates; now there are no such TMUs. Instead we have two separate units - a set of interpolators and a set of units performing texture fetches at the request of a shader. Many effects need access to different textures at the same coordinates or, for example, multiple fetches from one texture at dynamically calculated coordinates, which is why such an approach seems justified. Contrary to the vertex processor, which always works with the F32 data format, the pixel processor (both in the R300 and in the NV30) supports three formats - F32, F16 and integer I16 (R300) / I12 (NV30). The latter two formats are not just useful for compatibility with old shaders 1.x but also provide speed gains in calculations. In DX9 the command set of the pixel processor is similar to that of the vertex one:

There are separate texture access (fetch) commands and pack/unpack commands for storing nonstandard sets of scalar and floating-point variables in the standard formats of textures and the frame buffer. This is a good way to save memory throughput and the space taken by source data and shader results when implementing complex effects. Note that writing (modifying) depth values defeats all HSR optimizations and attempts at early visibility determination, which boost the performance of modern accelerators. So, if you use or modify depth data in the shader, the hardware early HSR will be disabled and the overall rendering efficiency will fall.

In the sphere of pixel shaders the GeForce FX again exceeds the requirements of DX9:

(*) 16 F32 registers or 32 F16 registers

(**) Each used F32 constant is worth two commands, i.e. the number of constants * 2 + the number of commands must not exceed 1024. Each used F16 or I12 constant is worth one command.

The GeForce FX is certainly very flexible here. But will programmers need that for anything besides NVIDIA's demo programs? It is exactly for the pixel shaders that we have several "buts". First of all, the performance of commands drops at least by half when processing floating-point data compared to integer data (this is pure computational performance, without accounting for the losses caused by increased data volumes). A pixel processor of the GeForce FX can execute up to two integer and one floating-point command per clock, i.e. it acts as a superscalar processor in the case of integer operations. This maintains an acceptable speed, comparable to that of solutions using stages, when executing shaders 1.x. Secondly, floating-point textures cannot be filtered (!). If we want to fetch bi- or trilinearly filtered values from a texture stored in a floating-point format, we must program the filtering in the pixel shader, spending 4 or 8 texture fetches and a dozen computational instructions on it. This must cool our striving for increased data precision (with calculations the drop is only twofold). Well, the new capabilities are there, but we must wait for a new edition of the chip (NV35) or the next generation (NV40) to use them.

It's interesting that NVIDIA managed to implement texture fetching commands without any delays. Each texture fetch can be performed in one clock even if neighboring fetches are dependent and located next to each other. This can give the GeForce FX a considerable advantage over the R300 in the case of complex shaders. Actually, the chip has a pool of 8 units for unpacking, fetching and filtering textures.
However, each pixel processor can perform only one texture fetch command per clock, so we can get up to 8 textures per clock. But the units can fetch 8 discrete samples per clock, i.e. implement trilinear filtering without slowing down (as in the R300). With bilinear textures the GeForce FX unit gets an advantage - such a scheme lets it fetch values of two different bilinear textures in one clock, one of which is sent on directly while the other is saved and delivered at the next stage. What are such tricks for? In a complicated scenario, when fetches of simple bilinear textures are followed by lengthy anisotropic filtering, this can increase the overall efficiency of shader execution by saving one clock. Time will show whether it's worth doing, but many scenes contain one or two textures filtered with quality anisotropy plus several additional bilinear ones. That is why such a saving scenario shouldn't be unusual. In simple cases we get a similar picture:

But first, the clock speed of the GeForce FX is higher, and second, in some combined scenarios, when bilinear, anisotropic and trilinear textures take turns in one shader, the GeForce FX can (potentially) have a certain advantage over the RADEON 9700 at equal frequencies. But the real effectiveness of a dynamically configurable pool of texture units is yet to be studied. The main advantage will be the above-mentioned lack of delays when fetching dependent textures. And here is a sample situation in which the GeForce FX fetches two bilinear textures and one anisotropic (16x) texture in 3 clocks, while the R300 needs 4 clocks:

It's interesting that when working with traditional integer textures (8 bits per component) the values are processed by an exponential function (gamma correction). The exponent value corresponds to the sRGB standard. This allows saving and editing an image with a wider dynamic range correctly, despite the 8 bit data representation. This feature is standard for DX9 chips and is integrated into both the R300 and the NV30. Below is an example from ATI :) of using such gamma correction when rendering an image:

The right picture shows more typical lighting in a cathedral. And this picture was calculated using gamma correction.

The speed of trilinear filtering is crucial for modern chips. NVIDIA did nothing radically new here - the NV30 keeps using the adaptive algorithms of full anisotropy inherited from the NV25. The developers say, however, that they made slight optimizations which can be enabled in the driver settings and give a certain performance boost without noticeable quality losses. Be that as it may, 16 texture units and a high core clock speed must tell markedly upon the performance. We'll see how frightful this can be for ATI products with their traditionally efficient anisotropy. NVIDIA's approach to anisotropy depends primarily on the computational resources of the chip and only then on the memory bandwidth. ATI puts most of the load on the memory, using an algorithm based on RIP maps. This was one more argument for a 256 bit bus for ATI, and for a 128 bit bus with a higher chip clock speed for NVIDIA.

Full-screen anti-aliasing and frame buffer compression

The most interesting ability of the GeForce FX is frame buffer compression, in which both depth values and color values can be compressed. According to NVIDIA, the compression algorithm is lossless, but this should be verified - how can one speak about a constant compression ratio of 4:1 in the case of lossless compression? Either the compression ratio varies (and then they specified its typical value) or there are losses. However, with a good algorithm the losses can be pretty small. We'll prepare some tricky synthetic tests to find that out. The buffer compression has a lot of advantages:

By the way, the R300 also uses compression algorithms for color information in the frame buffer, but they are not so effective.

But the main advantages of a good frame buffer compression implementation will come with full-screen anti-aliasing! Remember MSAA? No additional calculations are needed - all samples within an AA block are formed from one result calculated by the pixel shader. The only factor that causes performance drops is the necessity to transfer back and forth a frame buffer which at 4x AA is four times bigger. At the same time, in the 4x mode most AA blocks have only two or even one unique color value. We certainly must use this opportunity and encode the information of such an MSAA buffer efficiently by recording only the colors present and a bit mask of their arrangement. With MSAA we can thus make up for the increased size of the buffer (maybe just a couple of percent of additional data will remain). Besides, the buffer will also be compressed by the general compression algorithm. So, the moderate MSAA modes (for example, 4x and 4xS) are going to be almost free, and higher settings are going to be very cheap in the case of the GeForce FX. The advantage can be so considerable that it can close the gap between NVIDIA and ATI products in the combined modes, which are the most interesting ones for current and upcoming applications.

The GeForce FX also includes a new 8x MSAA mode and a new hybrid SS/MSAA mode 6xS (the latter for DirectX only). This is why the chip can record up to 8 MSAA samples from one value calculated by the pixel shader. So, the developers of the GeForce FX counted mostly on frame buffer compression, especially in the FSAA modes. In this respect, the use of the 128 bit bus may prove to be justified.

Dressed-up hero: GeForce FX based reference card.

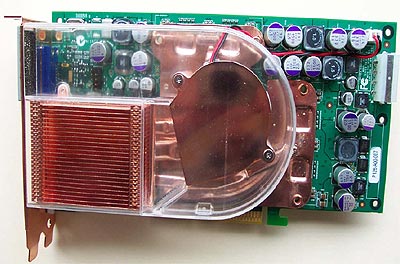

Here is a reference design of a card based on the GeForce FX:

It houses a connector for additional external power, one DVI, one TV-Out and one VGA connector. A Silicon Image chip controls the DVI interface, while a Philips chip works with TV-Out. Although TV-Out and DVI interfaces are integrated into the GeForce FX, this card uses a proven solution based on external interface chips. According to NVIDIA, the external interface chips will be removed once the integrated interfaces and the software are debugged. The card carries 8 DDR II memory chips from Samsung (128 MB, 128 bit bus) with an effective data rate of 1 GHz. The memory chips are located quite far from the GPU - it seems that the 128 bit bus and the improved interface characteristics of DDR II allowed for a simpler layout. The chip is officially clocked at 500 MHz, but its future versions can have different frequencies (maybe even higher). The card can use a cooling system with external air intake and exhaust, of the kind advertised as OTES:

Distribution and return: conclusion by a film critic

I'm looking forward to the final scene, which will bring the RADEON 9700 PRO and the NVIDIA GeForce FX face to face. The battle will take place in the arena of synthetic DX9 tests and real DX8/DX9/OpenGL tests. I think the most interesting scene is going to be in the extremely popular, though not yet finished, Doom III. This game is able to influence the life of several generations of video cards of one camp or the other. NVIDIA has traditionally strong OpenGL drivers and a high chip clock speed, while ATI comes with a wide memory bus and a product line that is ahead of its time. However, preliminary data indicate that the game will be computation-bound, which suits NVIDIA well. And here are some figures:

Note that the claimed effective memory bandwidths are equal! Well, we can't verify this, as the memory optimization techniques can't be disabled. But we estimate the real efficiency of these algorithms in typical scenarios at 1.5 times the physical bandwidth for ATI and 2.0 times for NVIDIA. That is why the effective bandwidth is probably about 28 GB/s for ATI and 32 GB/s for NVIDIA, which can provide at least a 30% gain for NVIDIA in typical applications.

Armed with these figures, ATI plans to start production in the first half of 2003 of a chip clocked at 400..500 MHz, which will be more affordable and no less efficient - the RV350 - and in the second half of 2003 we will see a 0.13 micron chip running at 500-600 MHz (the R400), a future competitor to an optimized version of the NV30 (let's call it the NV35). In the first half of 2003 NVIDIA will introduce two new players - the NV31 and NV34, whose niche experienced users can guess. The chips will be based on the mastered 0.13 micron process and use units developed for the NV30. Besides, NVIDIA may release NV30 versions working at a higher core speed - 550 or even 600 MHz - in NVIDIA's lab the first chips ran error-free at such frequencies.

From a user's point of view, the RADEON 9700 (not PRO) can be a very strong rival to NVIDIA's solutions, as it offers an attractive price/performance ratio for users who are not totally indifferent to games. In the sphere of mid-range and budget DX9 accelerators - the RADEON 9500/9500 PRO - there are no products from NVIDIA yet, but a bit later we will see the NV3X solutions.

The higher-level language for shaders in DX9 will have a positive effect on the development of products supporting the new technologies. Many programmers didn't want to deal with shaders because developing and debugging assembler code was too complicated.
NVIDIA's initiative to create and maintain an open compiler of a higher-level shader language (Cg) and an interpreter of files similar to DX9 effects (CgFX) will have a positive effect as well. Both products have their source code available and support both APIs (DirectX and OpenGL), which will make the work of OpenGL programmers much simpler. There are plugins for popular 3D graphics packages which export models and materials in a form comprehensible to CgFX.

The first samples of GeForce FX based cards will be available in December, mass deliveries of the chips will start in January, and in February the cards will be widely available to users. The starting price is not fixed yet, but in the first weeks it will be within the range from $399 to $499 depending on the situation on the memory market and on the region.

Now, take your seats in the cinema hall - the blockbuster promises to be thrilling! Moreover, I think that it will remain unpredictable till the end...

I wonder when we can have such graphics in real time on our screens...

Aleksander Medvedev (unclesam@ixbt.com)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.