
Part 2: Video cards' features, synthetic tests


ATI Technologies' counter-offensive: RADEON X1800 (R520), X1600 (RV530), and X1300 (RV515) series: Part 1. Theoretical materials

When you are into 3D graphics (not programming, but reviewing finished products), the borderline between real life and what we see in games and demos gradually disappears, like it or not. Sometimes you walk along and see a nice tree. Where you would once have just admired it or wondered what kind of tree it was, now you also think about how many polygons an "honest" model would take, that is, one with each leaf as a separate object (or even segmented, as leaves bend in the wind). How many objects would there be in such a tree, and what processors would it take to calculate the motion of each leaf and branch in the wind? Of course, even one such tree would be too heavy a task for the most powerful desktop computers, equipped with the latest that IT has to offer. At Ibiza, where we spent two days at ATI Technology Days, "3D" thoughts also came into our heads from time to time:

It's a pity that the calls for photorealism, cried out by marketing departments six years ago, are still nothing more than dreams. Thankfully, games are starting to catch up with the progress of 3D graphics hardware. The super speeds above 150 fps that popular games showed two or three years ago are almost extinct now. Graphics and game settings are getting increasingly complex. Publishers still require developers to make games compatible with old low-end cards as well as new ones, but a lot has changed here: now that DirectX 9.0 compatible video cards have conquered the Low-End sector too, publishers have eased the pressure on developers "to make it run on MX cards", so developers don't need to adapt a DX9 game to DX7.

Nearly a year and a half ago NVIDIA entered the market with new products featuring almost everything Microsoft provided in DirectX 9.0c: SM 3.0 support, dynamic branching, texture fetch in vertex processors, etc. Canadian ATI Technologies, competing with the Californian company, made a different decision: why hurry and spend transistors on these new units when it could instead increase the number of pipelines using a new process technology and raise frequencies to an unprecedented level? And slightly reinforce the shader units, upgrading them to 2.0b (in fact, just adding support for long shaders). And, as is traditional, introduce a new technique that no one would use later on: we mean 3Dc (remember TruForm, which is currently disabled by default even in ATI drivers, as it's not used).

Was it the right decision to ignore SM 3.0 at that time, leaving NVIDIA to develop it and introduce it to game developers? That's a vexed question. On the one hand, once the engine of technologies (NVIDIA) had introduced it, the history of its interaction with developers hinted that it would win recognition for the new ideas by hook or by crook. Even large game developers would start using SM 3.0 in their engines. The Canadians would then have to pick up the trend and add SM 3.0 support to their products. It's the right approach in business terms, but it looks very bad from the ethical point of view (why should some people push new things forward, getting the bumps and black eyes, while others arrive only when the road is clear and ready?). On the other hand, no matter how all-powerful NVIDIA is, its resources are limited. And when 55% of the desktop graphics market belongs to ATI, game developers (and publishers in the first place!) have to take that into account and slow down the arrival of SM 3.0 in real engines and games. So the fact that SM 3.0 is only now appearing in the red-white camp has done its damage. Both companies should have introduced the innovations together, so as not to mislead developers. But the fact remains: it happened, it is written on the scroll of history, and it cannot be undone.

Now that the Canadians have switched to the new process technology and decided to spare some transistors for SM 3.0 units, the time is close when full DX9.0c will be introduced to new games on a large scale. ATI has embarked upon a new course and considers it the only true way! (The proof is in the photo below: Nikolay Radovsky, ATI representative in Russia, pointing at the new development horizons :-), just like a local Lenin :-))

As for us... we can only study new test results, testbeds, video cards, and what not, especially if something glitches or fails... (Pavel Pilarchik from PCLab.pl (Poland) is wrestling with R520 tests at Ibiza).

To sum up the above, I repeat that the Canadian company has put forward new solutions from Low- to High-End that support the same new technologies, based on SM 3.0, in a unified way (and AVIVO, which we shall talk about later). All the details were already covered by Alexander Medvedev in the first part of the article.

At ATI Technology Days we received a set of samples including four video cards aimed at most price segments on the market.

Here are movies, which briefly illustrate the new products.

Video Cards

We can clearly see that the Canadian engineers used a brand new design for the X1800 series. Up to now we have dealt with video cards based on modifications of the RADEON 9700 PRO design (4 chips on each side of the PCB, 8 chips in total; short cards; power circuits spread along the PCB). The design of NVIDIA products, by contrast, was radically different and had been evolving since the GeForce4 Ti (the power supply unit is at the end of the PCB). From NV35 until recently, the USA engineers used a circular layout of memory chips around the core, trying to fit them on a single side of the PCB. Only the 7800GTX has broken the tradition, installing memory chips in every other seat on both sides of the PCB in 256 MB cards, even though there are 16 seats for memory chips, enough for 512 MB of RAM (in the FX5900 series, 128 MB cards have memory chips only on the front side of the PCB, while 256 MB modifications carry memory chips on both sides).

And now we can see that ATI engineers have arrived at a similar design. The card has become longer (by the way, it matches the GeForce 7800GTX dimensions; you can see it in our movie, the links are at the beginning of the article). Memory chips are arranged in a semicircle around the core, and the power circuit is at the end of the card. I repeat that the X1800XT and the X1800XL have identical PCBs, that is, they are identical in design. Besides, they have the same core, which just operates at different frequencies (by the way, note the large gap between 500 MHz and 625 MHz; I'm sure an intermediate product will appear as required).

It should also be mentioned that three of the four cards are equipped with a pair of DVI jacks. Moreover, they are dual-link DVI, which allows resolutions higher than 1600x1200 over a digital channel (owners of huge LCD monitors have complained about this limitation several times).

Now let's review the cooling systems, starting with the X1800XT.
The cooler is evidently borrowed from the X850XT. It's essentially the same, but the heatsink inside is now made of copper and is larger. The turbine, which drives the air outside, is exactly the same. You will have to put up with an irritatingly loud roar for 10-15 seconds after startup, but then the rotational speed drops and the device becomes almost noiseless. I have never seen the cooler raise its rotational speed, even under the heaviest load. There are two disadvantages: dimensions (the video card takes up two slots because of the cooler) and startup noise. There is only one major advantage: the hot air is driven out of the PC case, which is very important given the hot components inside. And the card gets very hot! I repeat, our readings demonstrate that the X1800XT has higher power consumption than the 7800GTX (about 100-110 W versus 80 W), though I did see this card operate in a barebone kit with a 350 W PSU.

Now let's proceed to the cooling system of the X1800XL. Even though the device is obviously smaller and much thinner, this solution is not a good one: the fan periodically changes its rotational speed under 3D load (an effect of overheating), which is not at all comfortable for your ears. The cooler also has a copper heatsink in a housing, but in this case the turbine drives the air in the opposite direction, into the system unit. That's all right for a low-clocked core, but I think the overall temperature of the card is still rather high.

Now about the cooling system of the X1600XT and the X1300PRO. Both cards have the same cooler. Though small, this device is quite noisy. It resembles the cooler of the X700 XT, which ran at frenzied rpm and, despite its copper heatsink, generated noise in the narrow air gaps of the heatsink. The situation repeats itself here. I hope ATI partners will find an opportunity to install their own cooling systems, quieter and no less efficient.

Now let's have a look at the processors. When the R520 is compared with the G70, you can see right away that the products are manufactured using different process technologies. Despite the approximate parity in the number of transistors, the G70 has a noticeably larger die area. The RV530, though it has many more transistors, has the same die area as the X700 XT. Likewise, the RV515 is approximately equal to the RV380 in die area, though it has many more transistors.

Installation and Drivers

Testbed configurations:

VSync is disabled.


In fact, the driver control panel has not changed much. Only an AVIVO menu was added (it will be covered in the fifth part of this article), and new Adaptive AA and HQ Aniso options appeared in the 3D settings. It should be noted that the cards work much better with TV-out: there is an option to output the full picture of a movie to a TV (or a second receiver) regardless of its resolution. You probably remember that the monitor should be connected to the lower jack if you intend to use an X1800 card with a TV and a monitor simultaneously.

Synthetic tests

D3D RightMark Beta 4 (1050), which we used in our tests, and its description are available at http://3d.rightmark.org

You can look up and compare D3D parameters here: D3D RightMark: X1800 XT, G70

We carried out our tests with the following cards:

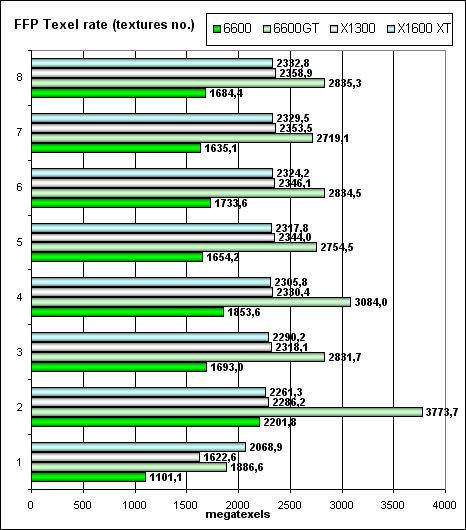

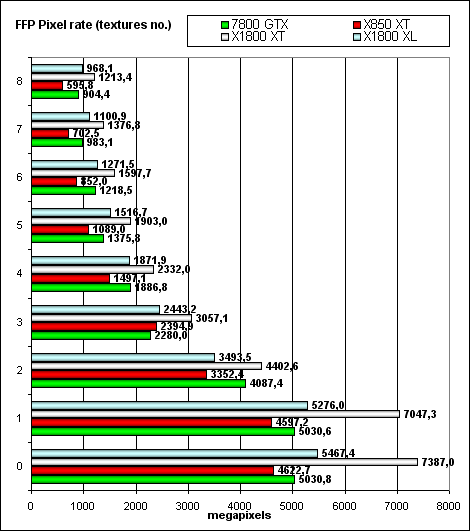

Pixel Filling test

Peak texelrate, FFP mode, various numbers of textures applied to a single pixel:

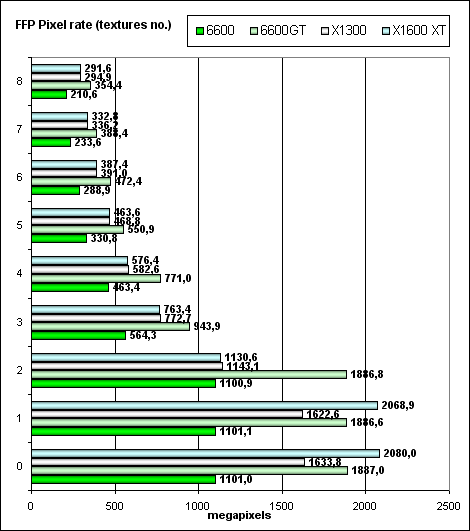

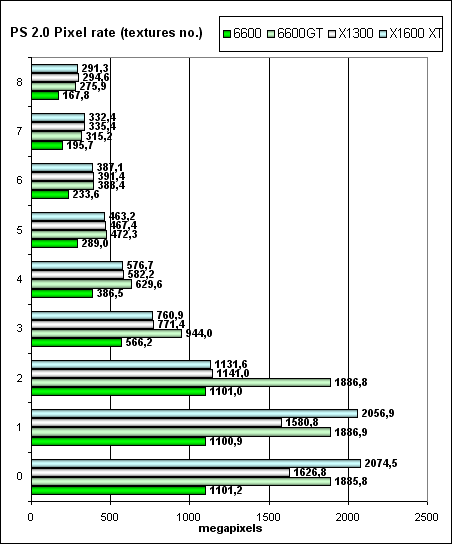

Among the senior cards (the first diagram), ATI is definitely victorious. That's the effect of more efficient memory operations, including memory speed and data compression before caching. Even its greater number of pipelines does not help the 7800 GTX (though the defeat is not severe enough to speak of NVIDIA's inferiority). But in the middle end segment the picture is not so advantageous for ATI. That's the effect of the number of texture units in the RV530: only 4, obviously insufficient in this case. This puts the RV515 level with the RV530 in this test, which is not normal, as prices for these solutions differ almost twofold. I suspect that the 4 texture units in the RV530 are a forced compromise and that more such units must have been planned initially. In real applications this nasty effect will be smoothed out, but, as you will see later, not enough to forget about it or consider it justified.

And now for the fillrate (pixelrate) of a frame buffer, FFP mode, for various numbers of textures applied to a single pixel:

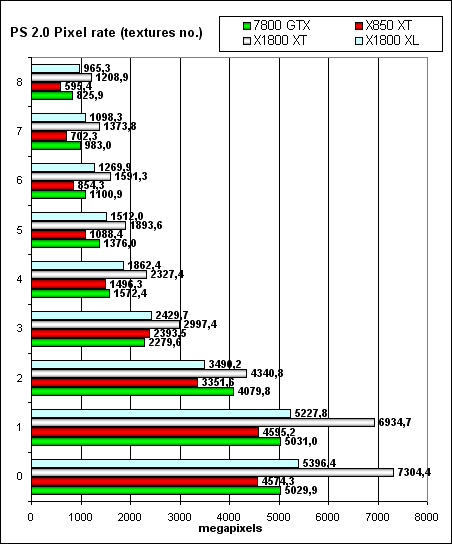
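The role of texture units in these fillrate figures can be illustrated with a back-of-the-envelope model. The sketch below is a simplified estimate, not part of D3D RightMark: it assumes one texel per texture unit per clock and ignores memory bandwidth, caching, and compression, which the measured results clearly depend on. The 625 MHz clock and 16 pipelines of the X1800 XT, the 4 texture units of the RV530, and the 24 pipelines of the 7800 GTX come from this article; the remaining clocks and ROP counts are assumptions taken from commonly quoted specifications, and the `Gpu` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    core_mhz: float     # core clock, MHz
    texture_units: int  # texels fetched per clock (idealized)
    rops: int           # pixels written to the frame buffer per clock (idealized)


def peak_texel_rate(gpu: Gpu) -> float:
    """Theoretical texel rate in Mtexels/s: one texel per texture unit per clock."""
    return gpu.core_mhz * gpu.texture_units


def peak_pixel_rate(gpu: Gpu, textures_per_pixel: int) -> float:
    """Theoretical fillrate in Mpixels/s for a given number of textures per pixel."""
    if textures_per_pixel < 1:
        # No texturing at all: only the ROPs limit the rate.
        return gpu.core_mhz * gpu.rops
    # Each pixel needs `textures_per_pixel` texel fetches, so the texture units
    # can feed at most texture_units / textures_per_pixel pixels per clock.
    pixels_per_clock = min(gpu.rops, gpu.texture_units / textures_per_pixel)
    return gpu.core_mhz * pixels_per_clock


# Clocks and ROP counts other than the X1800 XT clock are ASSUMPTIONS,
# not figures from this article.
CARDS = [
    Gpu("RADEON X1800 XT (R520)", 625, 16, 16),
    Gpu("GeForce 7800 GTX (G70)", 430, 24, 16),
    Gpu("RADEON X1600 XT (RV530)", 590, 4, 4),
    Gpu("GeForce 6600 GT", 500, 8, 4),
]

if __name__ == "__main__":
    for card in CARDS:
        rates = [round(peak_pixel_rate(card, n)) for n in range(1, 5)]
        print(f"{card.name}: {peak_texel_rate(card):.0f} Mtexels/s, "
              f"Mpixels/s for 1-4 textures: {rates}")
```

A model like this only captures the trend: the more textures are applied per pixel, the more a chip with few texture units falls behind, whatever its clock. It cannot account for the measured lead of one chip over another at similar theoretical rates, which depends on how efficiently each works with memory.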

ATI leads in the senior segment with one texture (with fixed color). The lead is retained further on, but it's no longer that large. Still, considering that the R520 has only 16 pipelines, we can speak of a better (in this case) architecture and forecast an even larger lead for the future R580. The sad effect of the four texture units takes its toll in the middle end sector again: ATI outperforms the opposing NVIDIA cards only with 1-2 textures. As the number of textures grows, the RV530 noticeably loses ground. A twofold difference in the number of texture units is no trifle.

Let's see how the fillrate depends on shaders (2.0):

The same picture for the middle end:

So we can establish that nothing has changed: FFP and shaders operate in the same way in terms of hardware (FFP is emulated by shaders) and demonstrate the same results. There is the same noticeable problem with too few texture units in the RV530 and the same fillrate advantage of the R520, despite its fewer pixel pipelines.

Geometry Processing Speed test

The simplest shader, maximum throughput in terms of triangles:

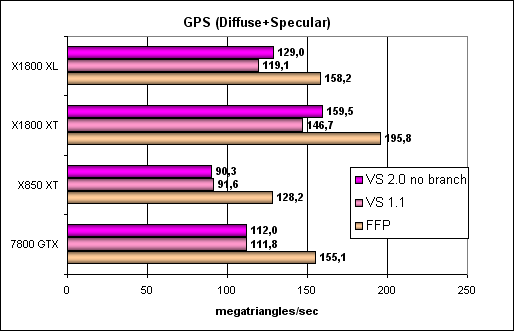

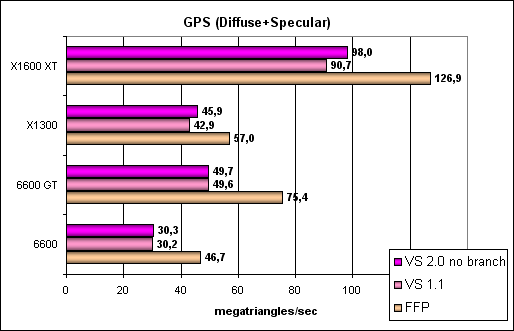

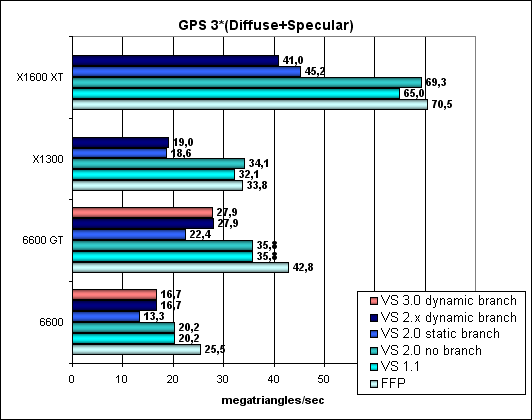

So, the G70 is still the absolute leader, while the X1800 has not moved far from the X850 XT in terms of peak geometry performance. ATI kicks ass in the middle end sector, where NVIDIA is noticeably inferior. It means that ATI features a more effective and wider unit for setting up and culling triangles.

A more complex shader, with a single mixed-light source:

Let's make the task more difficult for the middle end sector as well:

As you can see, FFP is a tad faster everywhere. That's the effect of special hardware units for its emulation (there was a time when ATI neglected this opportunity, but that's not the case now).

And now the most difficult task: three light sources. We also included options without branching, with static branching, and with dynamic branching:

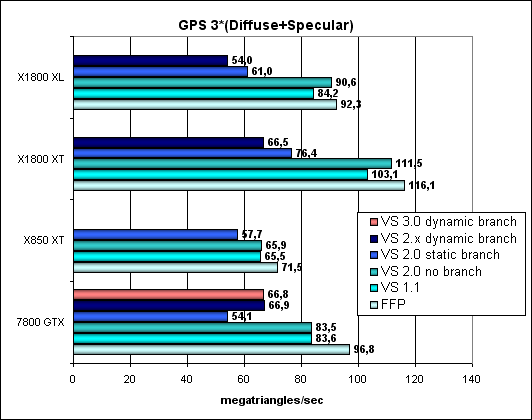
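The three variants in this test differ in how the shader decides which light sources to process. The sketch below is a conceptual illustration in Python, not the RightMark shader code: the function names, the per-light masks and flags, and the "facing" check used as the dynamic condition are all assumptions made for the example.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3-component vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade_no_branching(normal: Vec3, lights: Sequence[Vec3],
                       masks: Sequence[float]) -> float:
    """Every light is always evaluated; unused ones are zeroed by a 0.0 mask."""
    total = 0.0
    for light, mask in zip(lights, masks):
        total += max(0.0, dot(normal, light)) * mask
    return total


def shade_static_branching(normal: Vec3, lights: Sequence[Vec3],
                           enabled: Sequence[bool]) -> float:
    """The flags are fixed for the whole draw call, so every vertex
    takes the same path and disabled lights cost nothing."""
    total = 0.0
    for light, on in zip(lights, enabled):
        if on:
            total += max(0.0, dot(normal, light))
    return total


def shade_dynamic_branching(normal: Vec3, lights: Sequence[Vec3]) -> float:
    """The condition is computed from per-vertex data, so different
    vertices may take different paths through the shader."""
    total = 0.0
    for light in lights:
        facing = dot(normal, light)
        if facing > 0.0:  # skip lights that are behind this vertex
            total += facing
    return total
```

All three functions return the same lighting value when every light is enabled; what differs is how much work can be skipped and when the decision is made. That is why dynamic branching carries some overhead compared to the static version: the hardware has to evaluate the condition for each vertex.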

Here ATI is first in all cases. As the task grows more complex, the 7800's advantage in processing simple triangles disappears, and the X1800 XT core clock lets its geometry capabilities show themselves. Interestingly, while ATI products can now execute shaders with dynamic branches, for some reason the same shaders (specified as 3.0) are executed incorrectly. To all appearances, we've found an error in the drivers or a glitch in their interaction with our test. Anyway, we have the same branches in the 2.X dynamic profile as in 3.0, so we can evaluate the efficiency of dynamic branching on ATI. It's lower than that of the static version, as with NVIDIA, and the overheads are a tad higher. But performance is higher in absolute terms due to the more powerful geometry unit. Unfortunately, the new products from ATI do not support texture access from vertex shaders. So, except for the lack of texture access and the glitches in compiling and executing Shaders 3.0, the vertex units from ATI are praiseworthy: everything is up to the mark!

Pixel Shaders Test

The first group of shaders, which are rather simple to execute in real time: 1.1, 1.4, and 2.0:

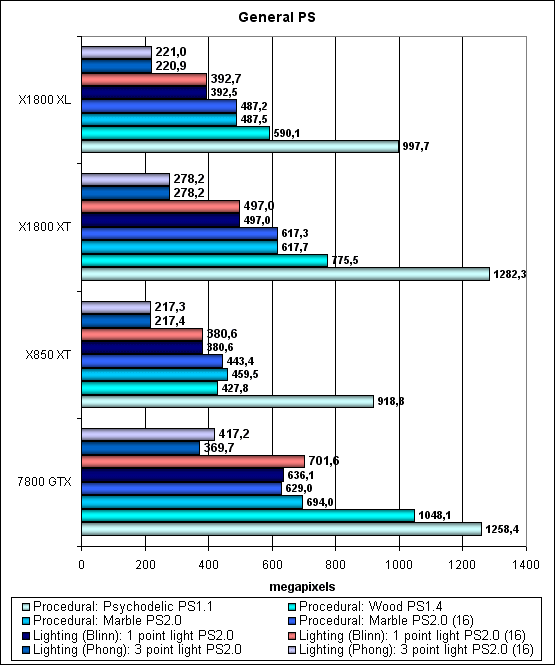

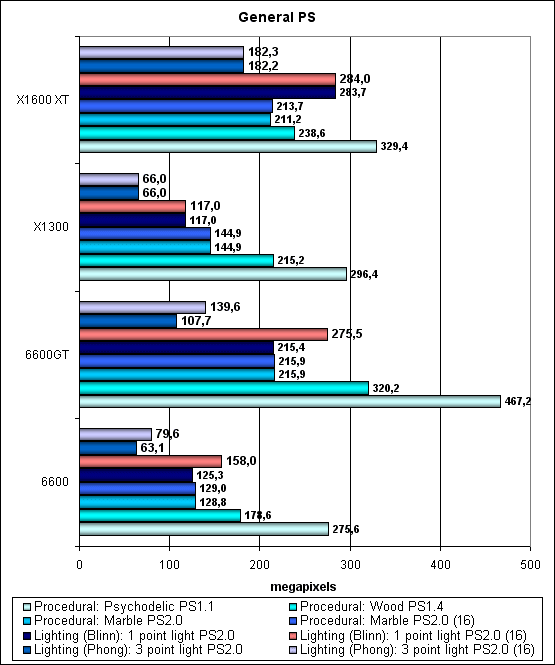

The top group demonstrates approximate parity between the 7800 and the X1800 XT: efficient pipelines and a high clock on the one hand, and 24 pixel pipelines on the other. But in the middle end group we again see the annoying behavior of the RV530, which is not good. The 4 texture units spoil everything again. Even 12 pixel pipelines do not help the X1600. It is NOTICEABLY outperformed by the 6600 GT. Too bad! Interestingly, due to its merry-go-round of quads and its sensitivity to temporary registers, NVIDIA still gains from 16-bit registers when processing temporary data in a shader! The ATI architecture is free from this dependence: it's based on a different principle and does not depend that much on the number and size of temporary variables when a shader is compiled. That's laudable! It should produce a noticeable effect on the efficiency of complex shaders with calculations. Let's see what changes with more complex shaders:

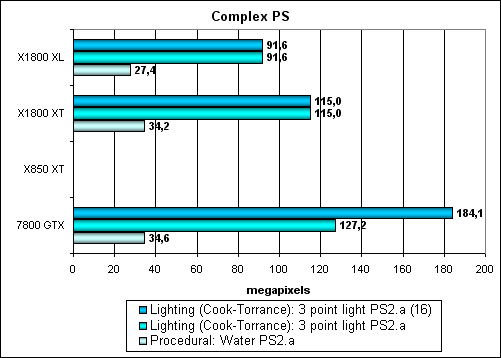
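The 16-bit and FP32 modes in these tests refer to the precision of shader registers. As a rough illustration of what is lost at 16 bits, the sketch below rounds values to half and single precision using Python's `struct` module. It assumes the 16-bit mode behaves like the IEEE 754 half-precision format; the helper names are hypothetical, and the example says nothing about how either GPU implements its registers.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (32-bit) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def rounding_error(x: float, rounder) -> float:
    """Absolute error introduced by storing x with the given rounding function."""
    return abs(rounder(x) - x)


if __name__ == "__main__":
    for value in (0.1, 1.001, 2049.0):
        print(f"{value}: fp16 error {rounding_error(value, to_fp16):.3g}, "
              f"fp32 error {rounding_error(value, to_fp32):.3g}")
```

For example, `to_fp16(2049.0)` returns `2048.0`, because half precision keeps only 11 significant bits, while `to_fp32(2049.0)` is exact. Errors of this kind in intermediate shader values are one possible source of the artifacts associated with 16-bit modes.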

In the senior group, the 7800 is victorious in the 16-bit mode. But that's 16 bit with all its potential artifacts. We can see parity in the FP32 mode. Interestingly, ATI is the absolute leader in the middle end sector, even despite its 4 texture units. That's right: a lot of calculations, fewer texture accesses. But don't forget that such shaders are rarely used in real games. I wish there had been 12 texture units: this chip would have shone in the other tests as well. The 6600 GT would have been simply smashed by the X1600 XT, but that's not the case. A disappointing blunder or unfortunate circumstances...

HSR test

Peak efficiency (with and without textures) depending on geometry complexity:

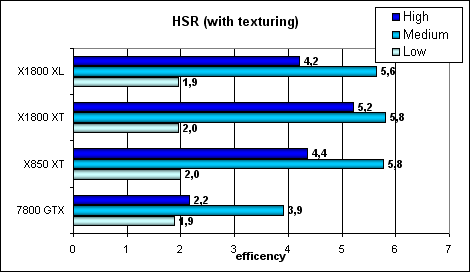

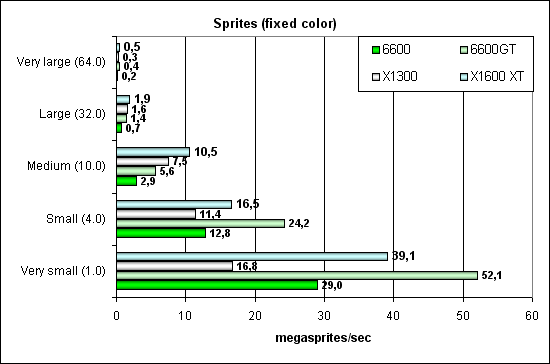

HSR efficiency of the top solution from ATI is traditionally higher. But due to lower latencies in texture operations, NVIDIA looks noticeably better when we test HSR together with texturing. The middle end situation is not that simple: with textures, ATI looks even a tad worse than the 6600/GT, especially the RV515, whose HyperZ is obviously weaker than in the top ATI chips.

Point Sprites test

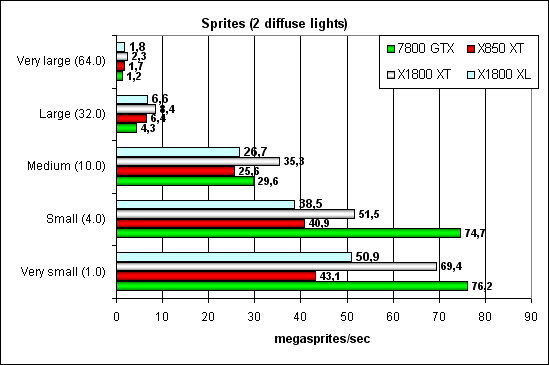

Everything is the same as before: NVIDIA copes better with small sprites, while ATI is better at large sprites. With lighting, ATI gains slightly more advantage, as its vertex units are better.

Conclusions on the synthetic tests

Wait for game tests and the AVIVO review in future parts of this article. Stay with us!
