
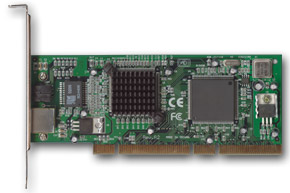

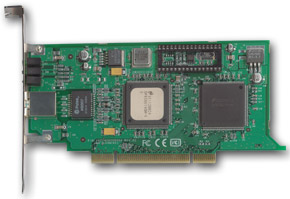

We continue testing Gigabit network adapters. Today's lineup includes cards designed for a 32-bit PCI bus as well as boards with a 64-bit PCI interface that are backward compatible with a 32-bit bus. The 32-bit bus is exactly what the mainboards of ordinary computers are equipped with. A 64-bit PCI bus is still a privilege of server mainboards, which are used for other tasks, carry several processors, and are much more expensive. The theory of Gigabit Ethernet was given last time. Today's article extends the previous one; in addition, we have altered the testing technique.

Boards

Five out of seven adapters are built on a microcontroller from National Semiconductor Corporation. They have two chips, one of which is a physical-layer transceiver. The DP83861VQM-3 transceiver is identical on all five boards. Some transceivers carry a heatsink. It is up to the manufacturer to decide whether a heatsink is necessary, but I must say that the transceivers get very hot: when swapping cards right after switching off the computer, you can burn yourself on the chip or heatsink. The transceiver can work at 10/100/1000 Mbit/s in full- or half-duplex mode and supports auto-negotiation for the speeds and modes listed above.

The microcontrollers installed on these boards are 10/100/1000 Mbit Ethernet solutions that connect the network card to the PCI bus; they differ in the width of the PCI bus. The 64-bit adapters have DP83820BVUW controllers, while the 32-bit ones have DP83821BVM controllers. The only key difference is the width of the PCI bus they work with. The other characteristics are identical:

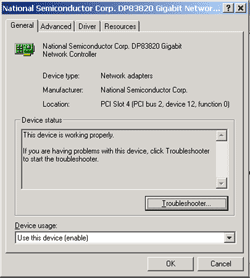

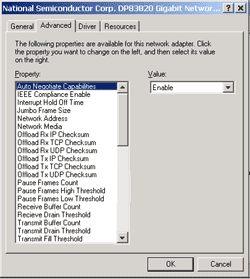

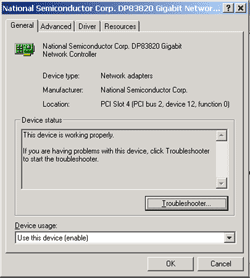

During installation under Windows all five cards showed a funny bug: after rebooting and searching for new devices, the computer found an additional unknown PCI device which could not be identified and which refused the driver I offered. Nevertheless, the adapters worked smoothly and no other bugs were noticed.

The first card, the TRENDnet TEG-PCITX, has a 32-bit PCI interface. There are six LEDs at the back: three indicate the connection speed (10/100/1000 Mbit), and the rest show collisions, full duplex and data transfer. The transceiver comes with a heatsink. To install the network adapter under Windows 2000 we used the latest driver version downloaded from the company's site, 5.01.24.

The driver offers rich settings for adjusting and tuning the card. The Jumbo Frame size can be set manually in steps of 1; the maximum size is unknown, but the adapter worked flawlessly with a frame of 16128. For Linux there are drivers only for the 2.2.x kernel and none for the 2.4.x kernels, which is why we used the "National Semiconductor DP83820" driver v.0.18 integrated into the OS. Here the maximum Jumbo Frame size is specified: 8192. Note that without modifying the original driver code it is impossible to set a packet size over 1500.

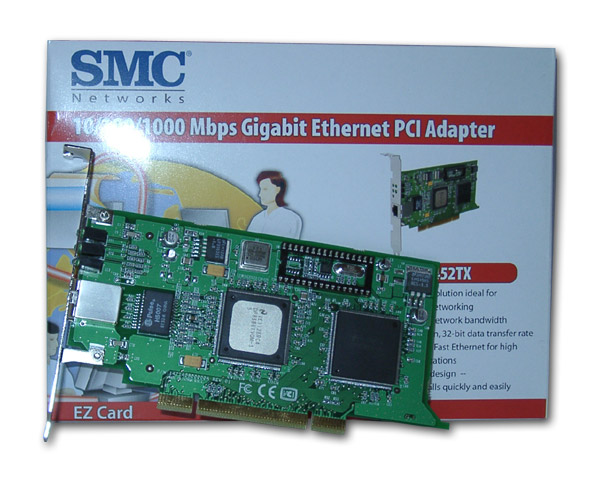

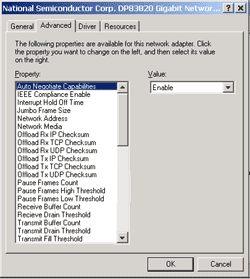

The second card is the TRENDnet TEG-PCITX2 from the same firm. It has a 64-bit PCI interface and an older revision of the microcontroller, the DP83820BVUW. The transceiver is also equipped with a heatsink. The drivers for Windows and Linux are the same as for the 32-bit model.

The next two cards come from SMC Networks. They both have two chips and are based on the above-mentioned microcontrollers. There are no heatsinks.

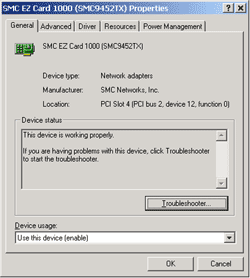

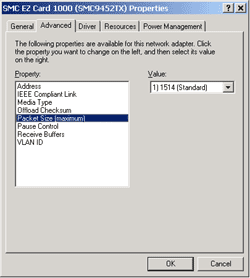

The SMC9452TX card has a 32-bit PCI interface and five LEDs: three indicate the operating speed, and the other two show link and activity. Both SMC adapters have a curious PCB design: next to the Ethernet connector the card has its corner cut off, while on the other side the card juts out. I have no idea what this is done for.

For Windows we used the latest drivers from the company's site, 1.2.905.2001. There are fewer options and settings than with the previous driver, and the Jumbo Frame size can take the following values: 1514, 4088, 9014, 10000, 16128. Although SMC offers Linux drivers for download, we used the same driver integrated into the OS because SMC's driver is quite old (dated mid-2001).

The second adapter from SMC Networks, the SMC9462TX, has almost the same characteristics but uses a 64-bit PCI bus (and thus another Gigabit Ethernet microcontroller). The situation with the drivers is exactly the same.

The last two-chip card is the LNIC-1000T(64) from LG Electronics. The card has a 64-bit PCI interface. The transceiver is capped with a heatsink. There are also six LEDs with functions identical to those of the TEG-PCITX adapter.

For Windows we used the drivers that come with the card, as there were no newer drivers on the site. They seem to be the reference drivers (identical to those of the TEG-PCITX) with the same functions. Their version is 5.01.24. Linux drivers were available only for the 2.2.x kernel, which is why in the tests we used the same OS-integrated driver.

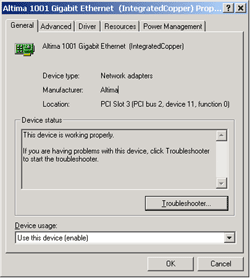

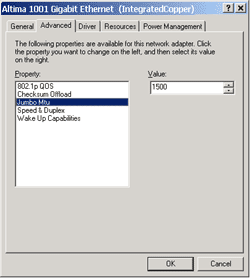

The next card, the Hardlink HA-64G, has three LEDs that show the speed (10/100/1000 Mbit) and the connection status. The LED blinks when data is transferred. This card has only one chip, and it comes with the new AC1001KPB controller from Altima. The controller is covered with a heatsink.

In the tests under Windows we used the drivers that come with the card, version 3.12.0.0, because the site does not offer a newer version. The range of options and settings is not wide. The Jumbo Frame size can take the following values: 1500, 2000, 3000 and 4000. Linux drivers are also supplied with the card; they installed successfully and the system detected the adapter. But then the card refused to change the MTU size to more than 1500. A quick search of the driver's source code for a predefined limit gave no results. It is probably possible to enable Jumbo frames somehow (there are a lot of variables in the source code relating to Jumbo frames), but there is not a single word about it. That is why this adapter was tested in Linux only with packets of 1500, i.e. without Jumbo frames.

And the last card is the Intel PRO/1000 MT Desktop from Intel Corporation. It was already tested last time, but today we ran the tests with a newer driver version. If you remember, this is a one-chip solution based on the Intel 82540EPBp microcontroller. The card has two LEDs, one displaying the connection status and data transfer, and the other (two-color) showing the speed (10/100/1000 Mbit). Here are some parameters of the microcontroller:

The latest versions of the drivers for both OSes were taken from the company's site: v.6.4.16 for Windows 2000 (it provides a wide range of options for configuring the adapter; the Jumbo Frame can be 4088, 9014 or 16128 bytes) and v.4.4.19 for Linux (it can work only as a module).

Testing technique

Test computers:

The computers were connected directly (without a switch) with a 5 m Category 5e cable (almost ideal conditions). In Windows 2000 we used Iperf 1.2 and the NTttcp program from the Windows 2000 DDK for TCP traffic generation and measurements. The programs were used to measure data rates and CPU utilization at the following Jumbo Frame sizes:

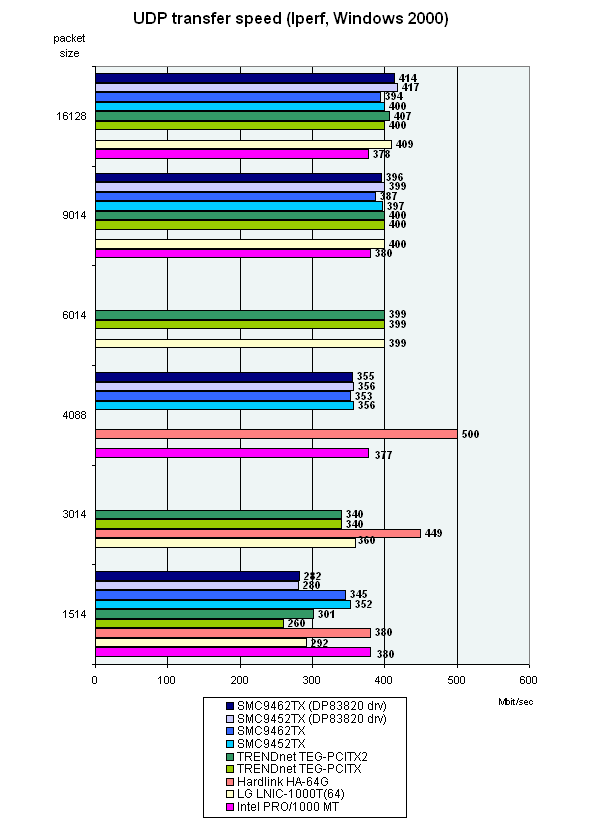
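To give a rough idea of why the frame size matters for the data rate, the sketch below estimates the share of line time that carries TCP payload at a given MTU. It assumes standard Ethernet framing overhead and IPv4 and TCP headers without options. The MTU values in the loop are only illustrative and are not the sizes used in our tests, and the function names are made up for this example.

```python
# Per-frame overhead on Ethernet, in bytes (standard values).
ETH_HEADER = 14      # destination MAC, source MAC, EtherType
ETH_FCS = 4          # frame check sequence
PREAMBLE_SFD = 8     # preamble plus start-of-frame delimiter
INTERFRAME_GAP = 12  # minimum idle time between frames
IP_TCP_HEADERS = 40  # IPv4 (20) + TCP (20); assumption: no header options


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of line time that carries TCP payload for a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTERFRAME_GAP
    return payload / on_wire


def max_tcp_goodput_mbit(mtu: int, line_rate_mbit: float = 1000.0) -> float:
    """Upper bound on TCP payload rate at the given line rate."""
    return line_rate_mbit * tcp_payload_efficiency(mtu)


if __name__ == "__main__":
    for mtu in (1500, 3000, 6000, 9000):  # illustrative values only
        print(f"MTU {mtu:5d}: {max_tcp_goodput_mbit(mtu):7.1f} Mbit/s")
```

This is only the upper bound set by the wire. Larger frames also mean fewer packets per second for the host to process, which is the CPU utilization side that the tests measure separately.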

Cards that did not support a certain Jumbo Frame size were not used in the corresponding tests. In addition, Iperf was run in UDP traffic generation mode. The UDP stream rate started at 200 Mbit/s and was raised in a loop up to 800 Mbit/s in 10 Mbit/s steps. The maximum speed reached was recorded as the test result. The OS was also slightly tuned. The startup parameters of the programs and the registry settings are the following:

Hkey_Local_Machine\System\CurrentControlSet\Services\Tcpip\Parameters

Startup options of the Iperf in TCP mode:

Startup options of the Iperf in UDP mode:

Startup options of the NTttcp:

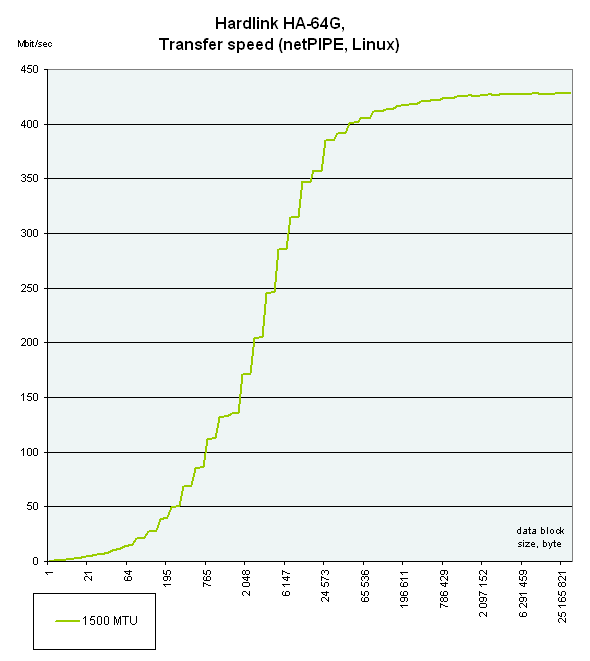

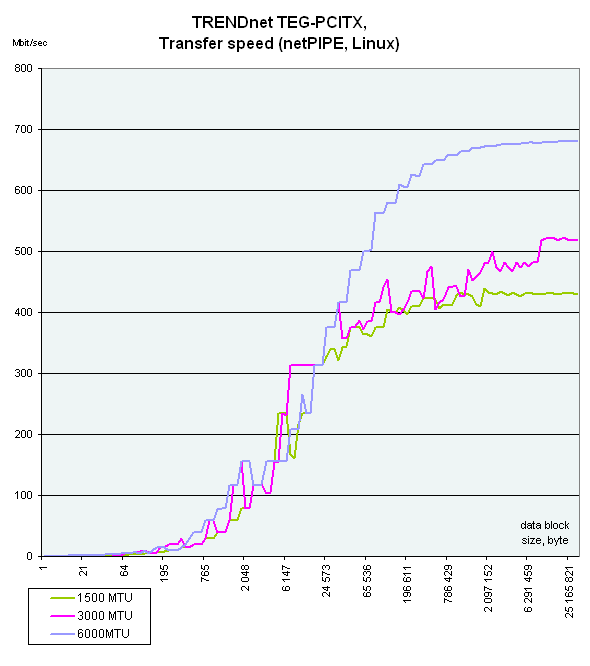
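The UDP sweep described above (200 Mbit/s raised to 800 Mbit/s in 10 Mbit/s steps, with the maximum achieved speed kept as the result) can be sketched roughly as follows. This is not the script we used. The `measure` callback is a hypothetical stand-in for one Iperf UDP run that returns the rate the receiver reported, and the fake link in the usage part is invented purely for illustration.

```python
from typing import Callable, List


def udp_rate_steps(start: int = 200, stop: int = 800, step: int = 10) -> List[int]:
    """Offered UDP rates in Mbit/s, from start to stop inclusive."""
    return list(range(start, stop + 1, step))


def best_udp_rate(measure: Callable[[int], float]) -> float:
    """Run the whole sweep and return the highest achieved rate in Mbit/s.

    `measure` is a hypothetical callback: it offers the given rate and
    returns the rate actually achieved at the receiver.
    """
    return max(measure(rate) for rate in udp_rate_steps())


if __name__ == "__main__":
    # Stand-in for a real measurement: a link that saturates at 640 Mbit/s.
    def fake_link(offered: int) -> float:
        return min(float(offered), 640.0)

    print(len(udp_rate_steps()), "steps, best:", best_udp_rate(fake_link))
```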

Each TCP test was run 15 times, and the best speed was taken as the result. With NTttcp the CPU load was measured by the program's own means; with Iperf it was measured with the Windows 2000 system monitor.

In Linux we used netPIPE 2.4 for traffic generation and measurements. The program generates traffic with a gradually growing data packet size (a packet of size N is transferred several times; the number of transmissions is inversely proportional to its size, but not less than 7). This method shows the percentage of channel utilization depending on the size of the data transferred. The Jumbo Frame size was changed by changing the MTU in the network interface settings with the command

ifconfig eth0 mtu $size up

In the tests the following MTU sizes were used:

Startup options of the netPIPE:

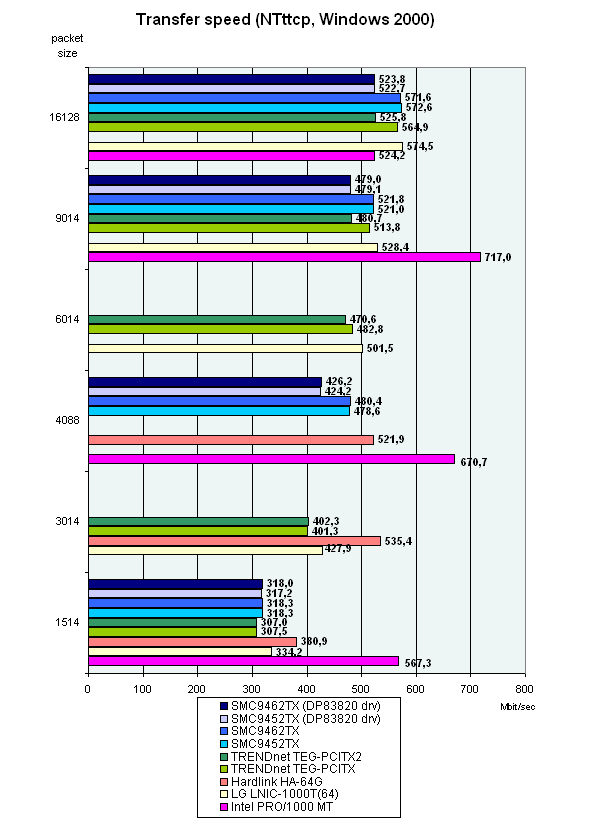

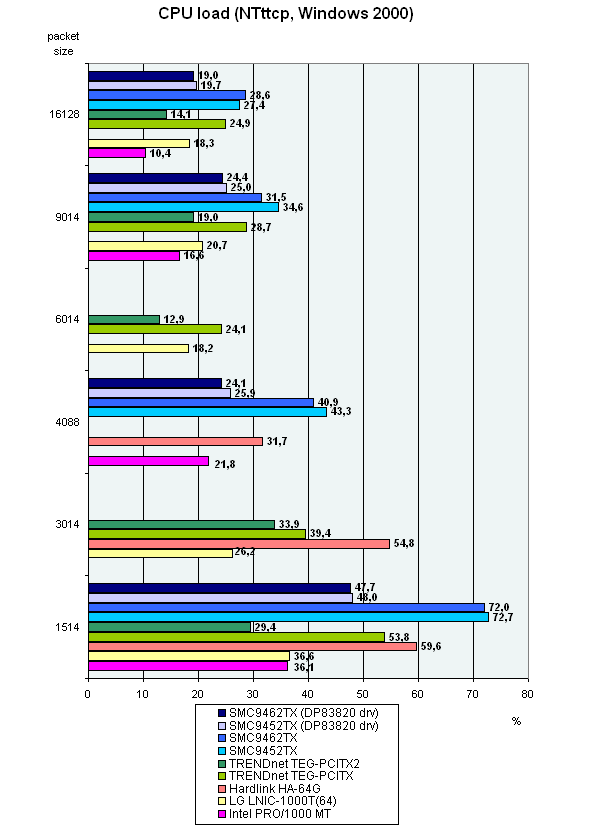
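The repetition rule mentioned above for netPIPE (the number of transmissions is inversely proportional to the packet size, but never less than 7) can be illustrated with a small sketch. The `budget_bytes` constant is a hypothetical value picked for the example and is not netPIPE's actual constant; only the lower bound of 7 comes from the description above.

```python
from typing import Iterable, List, Tuple

MIN_REPEATS = 7  # lower bound on the number of transmissions


def repeats_for(size_bytes: int, budget_bytes: int = 1_000_000) -> int:
    """Repetitions for one packet size: inversely proportional to the size.

    `budget_bytes` is a hypothetical constant used for illustration only.
    """
    if size_bytes <= 0:
        raise ValueError("size_bytes must be positive")
    return max(MIN_REPEATS, budget_bytes // size_bytes)


def schedule(sizes: Iterable[int]) -> List[Tuple[int, int]]:
    """Pair each packet size with the number of times it would be sent."""
    return [(size, repeats_for(size)) for size in sizes]


if __name__ == "__main__":
    for size, repeats in schedule([64, 1500, 65536, 1_048_576]):
        print(f"{size:>9d} bytes x {repeats}")
```

Small packets are repeated many times so that timing noise averages out, while large packets quickly hit the floor of 7 repetitions.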

Test results

The SMC adapters were tested both with their own drivers and with the reference one (originally developed for the TEG-PCITX). These adapters are built on the same microcontrollers, which is why the drivers are compatible. However, SMC's own driver demonstrates inferior performance and a greater CPU load. The other adapters were tested with their own drivers.

1. Windows 2000, transfer speed.

The Intel PRO/1000 MT Desktop comes first in almost all the tests; the developers at Intel have obviously done their best. But with a Jumbo Frame of 16128 the Intel adapter lost its advantage and showed the lowest speed. The second position is taken by the Hardlink HA-64G thanks to its new microcontroller (a one-chip card). The other five cards show approximately identical scores.

When running on their own drivers, the SMC cards demonstrate a higher CPU load at a speed comparable to the rest. This is clearly seen in the mode with Jumbo frames disabled. With the reference drivers the situation gets better. The problem will probably be solved in a new driver version; for now it is worth using the reference drivers.

The UDP test must have a bottleneck somewhere, because the scores of the participants are very close. The problem probably lies in how Iperf implements this test.

3. Linux, MTU size.

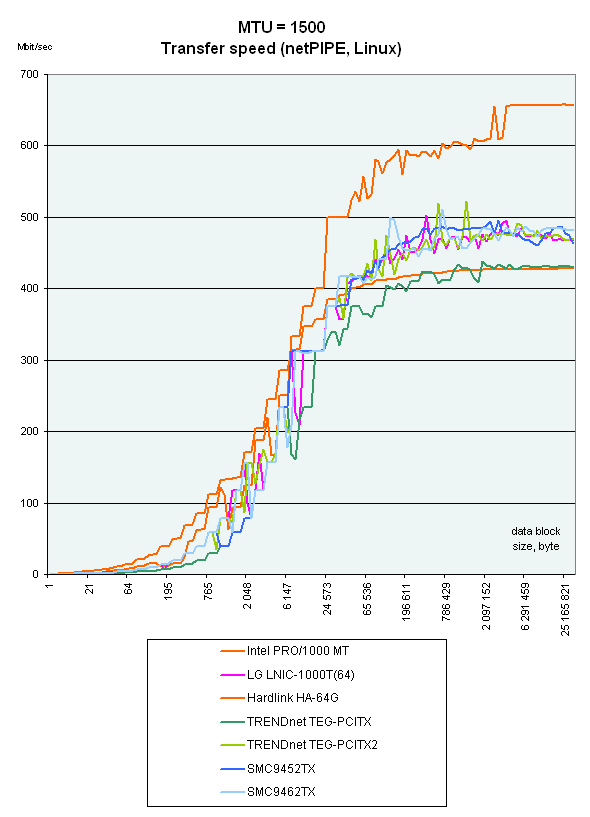

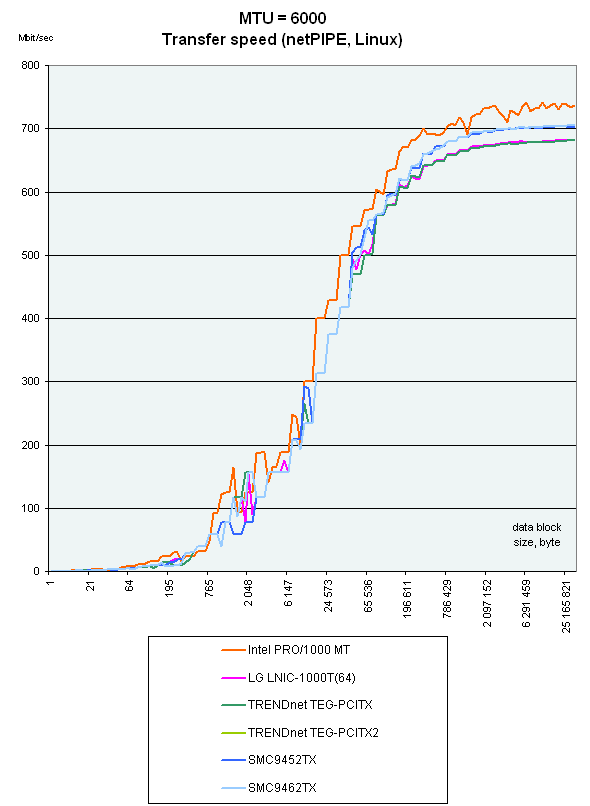

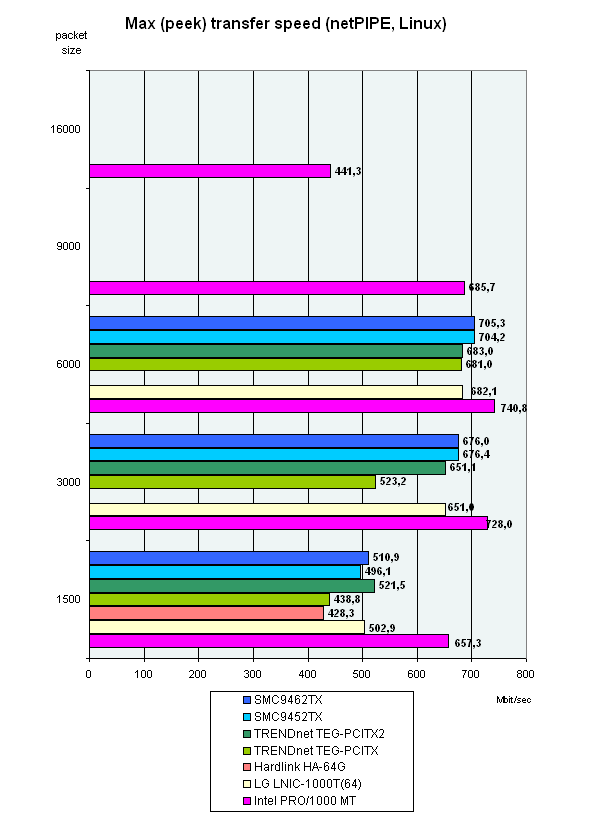

All the cards except the Intel PRO/1000 MT Desktop show the expected results. In Intel's case, the data transfer rate goes up only to a certain level as the Jumbo Frame size increases, and at a Jumbo Frame size of 16000 the speed falls sharply. Besides, the difference between the speeds of identical cards with different PCI interfaces is minimal.

4. Linux, performance comparison with the equal MTU size.

The Intel PRO/1000 MT takes the lead when the frame sizes are small, but at 6000 all the cards perform almost equally. At 3000 the TRENDnet TEG-PCITX slows down for an unknown reason. The last diagram compares the peak speeds of all the adapters in netPIPE. Note that the speed in Linux is a bit higher than in Windows. Frankly speaking, though, this is not a fully correct comparison, as it involves peak speeds.

Conclusion

Jumbo Frames are certainly a useful thing. But the speed still depends too much on the driver; just look at the Intel PRO/1000 MT, which performs better than in the previous tests. Still, the maximum (1 Gbit) cannot be reached because of the 32-bit PCI bus. Nevertheless, the server (64-bit) adapters were tested on the 32-bit PCI bus in order to compare both versions of the cards. As you can see from the diagrams, there is almost no difference between them on the 32-bit bus.
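A back-of-the-envelope check of the PCI limit mentioned above: the theoretical peak of a parallel PCI bus is simply its width multiplied by its clock. The sketch assumes the usual 33 MHz clock of a desktop PCI slot, which is an assumption of this example and was not measured in our tests; the function names are made up for illustration.

```python
GIGABIT_LINE_RATE_MBIT = 1000.0  # one direction of Gigabit Ethernet


def pci_peak_mbit(bus_width_bits: int, clock_mhz: float = 33.0) -> float:
    """Theoretical peak PCI throughput in Mbit/s (width x clock).

    The 33 MHz default is an assumption about the slot, not a measured value.
    """
    return bus_width_bits * clock_mhz


def headroom(bus_width_bits: int, clock_mhz: float = 33.0) -> float:
    """Ratio of the PCI peak to the one-way Gigabit Ethernet line rate."""
    return pci_peak_mbit(bus_width_bits, clock_mhz) / GIGABIT_LINE_RATE_MBIT


if __name__ == "__main__":
    for width in (32, 64):
        print(f"{width}-bit PCI: {pci_peak_mbit(width):6.0f} Mbit/s peak, "
              f"{headroom(width):.2f}x the Gigabit line rate")
```

Under this assumption a 32-bit bus peaks at about 1056 Mbit/s, barely above the line rate, and that peak is shared with other devices and reduced by arbitration and address phases. A 64-bit slot would double the figure, but on the 32-bit bus used here both versions of a card face the same ceiling.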

Evgeniy Zaycev (eightn@ixbt.com)


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.