
Moore's Law for cable networks:

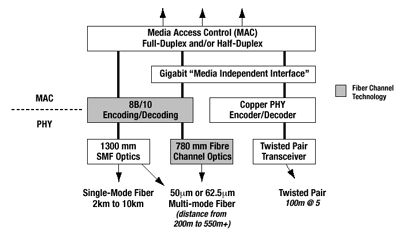

Every ten years, new information transfer technologies require a tenfold increase in the bandwidth of a cable system. Gigabit Ethernet answers the needs of users who require higher data transfer rates. Thanks to its tenfold wider channel, it removes bottlenecks in networks, and it is primarily used in network backbones, for connecting servers and so on. At present its area of application is wider than just networks, network backbones, computing clusters and distributed databases. For example, streaming video, video conferencing and complex image processing require higher bandwidth, and with too many users a 100 Mbit network may not cope with the increased data flow. Hence the strong interest in this technology and the growing sales of 1 Gbit adapters, which are also fostered by price cuts for such devices. The IEEE standard adopted in June 1998 was ratified as IEEE 802.3z. At that time only optical cable was specified as the data transfer medium. A year later the 802.3ab standard added Category 5 unshielded twisted pair. Gigabit Ethernet is an extension of the Ethernet and Fast Ethernet standards, which made a "good showing" over their 25-year history. It is 100 times as fast as the former and 10 times as fast as the latter: the throughput of Gigabit Ethernet reaches 1000 Mbit/s, or about 125 MB/s, which is close to the 133 MB/s peak of the 33 MHz 32-bit PCI bus. That is why 1 Gbit adapters are produced both for the 32-bit PCI bus (33 and 66 MHz) and for the 64-bit one. The technology is backward compatible with the previous Ethernet standards (10 and 100 Mbit), which allows existing networks to migrate smoothly to higher speeds, possibly even without changing the existing cabling.
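The rate comparison above is simple arithmetic. The short Python sketch below is only an illustration, not part of the original tests: it converts the 1000 Mbit/s line rate to MB/s and compares it with the theoretical peak of several PCI variants, assuming the nominal 33.33/66.67 MHz PCI clocks and ignoring framing and bus protocol overhead.

```python
"""Compare the Gigabit Ethernet line rate with theoretical PCI bus peaks."""


def link_mb_per_s(mbit_per_s: float) -> float:
    """Convert a line rate in Mbit/s to MB/s (8 bits per byte, 1 MB = 10**6 bytes)."""
    return mbit_per_s / 8


def pci_mb_per_s(width_bits: int, clock_mhz: float) -> float:
    """Theoretical PCI peak: one transfer of width_bits on every clock cycle."""
    return width_bits / 8 * clock_mhz


if __name__ == "__main__":
    gbe = link_mb_per_s(1000)
    print(f"Gigabit Ethernet, one direction: {gbe:.0f} MB/s")
    print(f"Gigabit Ethernet, full duplex:   {2 * gbe:.0f} MB/s")
    # Nominal PCI clocks of 33.33 and 66.67 MHz are assumed here.
    for width, clock in [(32, 33.33), (32, 66.67), (64, 33.33), (64, 66.67)]:
        print(f"PCI {width}-bit / {clock} MHz: {pci_mb_per_s(width, clock):.0f} MB/s")
```

Note that a full-duplex gigabit link can carry up to 250 MB/s in total, which already exceeds a 32-bit/33 MHz PCI bus; this is one reason 64-bit and 66 MHz adapters exist.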
The standard inherits all the main features of Ethernet, such as the frame format, CSMA/CD (Carrier Sense Multiple Access with Collision Detection), full duplex and so on. The new elements are mostly related to the higher speed. But the real advantage of Gigabit Ethernet is that it is based on the old standards: it looks like the good old Ethernet (being its extension), and the high speed was reached without considerable changes to the protocol. Hence its second advantage over ATM and Fibre Channel: a low price. In general, Ethernet prevails among network technologies, accounting for over 80% of the world data transfer market, and I suppose it will keep on dominating, as 10 Gbit Ethernet is just around the corner. Originally Gigabit Ethernet could use only optical cable as the data transfer medium, the 1000BASE-X interface. It was based on the physical layer standard of Fibre Channel (a technology for interconnecting workstations, data storage devices and peripheral nodes). Since that technology had been approved earlier, borrowing it reduced the development time of the Gigabit Ethernet standard. The 1000BASE-X standard consists of three physical interfaces with the following characteristics:

Table 1. Technical characteristics of the 1000BASE-X standards

The 1000BASE-T standard, adopted a year later, established Category 5 unshielded twisted pair as a transfer medium. 1000BASE-T differs from Fast Ethernet 100BASE-TX in using all four pairs (100BASE-TX uses only two). Data travel at 250 Mbit/s over each of the 4 pairs. The standard provides full-duplex transmission, with each pair working in both directions at once. Full-duplex 1 Gbit transmission over twisted pair turned out to be harder to implement than 100BASE-TX because of noticeable crosstalk from the three neighboring pairs, and it required a special scrambled, noise-immune coding scheme and an intelligent signal detection and regeneration unit at the receiver. 1000BASE-T uses 5-level pulse-amplitude modulation, PAM-5, as its encoding method. The cable must meet quite strict requirements (concerning crosstalk, return loss, delay and delay skew). This gave birth to Category 5e for unshielded twisted pair. Existing cabling must be recertified for Gigabit Ethernet to comply with the new category. Fortunately, most branded cables do pass, and at present Category 5e is produced in the largest quantities. Mounting of connectors for 1000BASE-T:
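The 250 Mbit/s per pair figure follows from the 1000BASE-T line coding. The sketch below assumes the standard's symbol rate of 125 Mbaud per pair and 2 data bits per PAM-5 symbol; a five-level symbol could carry about 2.32 bits, and 1000BASE-T spends the spare capacity on error-correcting (trellis) coding. The helper names are illustrative only.

```python
"""Back-of-the-envelope 1000BASE-T data rate from its PAM-5 line coding."""
import math

PAIRS = 4                  # 1000BASE-T uses all four pairs, in both directions
SYMBOL_RATE_MBAUD = 125    # symbols per second on each pair, in millions
DATA_BITS_PER_SYMBOL = 2   # data bits actually carried by each PAM-5 symbol
PAM5_LEVELS = (-2, -1, 0, 1, 2)


def per_pair_mbit() -> int:
    """Data rate on one pair, Mbit/s."""
    return SYMBOL_RATE_MBAUD * DATA_BITS_PER_SYMBOL


def total_mbit() -> int:
    """Aggregate data rate in each direction, Mbit/s."""
    return per_pair_mbit() * PAIRS


def raw_bits_per_symbol() -> float:
    """Upper bound on information per five-level symbol: log2(5)."""
    return math.log2(len(PAM5_LEVELS))


if __name__ == "__main__":
    print(f"per pair: {per_pair_mbit()} Mbit/s")
    print(f"total:    {total_mbit()} Mbit/s per direction")
    print(f"PAM-5 could carry up to {raw_bits_per_symbol():.2f} bits/symbol")
```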

Fig. 2. Mounting of connectors on a cable for 1000BASE-T

Interesting changes were made at the MAC level of the 1000BASE-T standard. In Ethernet networks the maximum distance between stations (the collision domain diameter) is determined by the minimum frame size (64 bytes in the IEEE 802.3 Ethernet standard). The maximum segment length must let the transmitter detect a collision before it finishes sending a frame (the signal must have time to reach the far end of the segment and return). So, as the transfer rate increases, it is necessary either to increase the frame size (thus increasing the minimum frame transmission time) or to reduce the collision domain diameter. The second approach was taken for Fast Ethernet. For Gigabit Ethernet it was unacceptable: a standard that inherited Fast Ethernet's minimum frame size, CSMA/CD and slot time would work only in collision domains no larger than 20 m in diameter. That is why the transmission time of the minimum frame was increased. To stay compatible with the previous Ethernet standards, the minimum frame size was left at 64 bytes, and the frame got an additional field, the carrier extension, which pads it to 512 bytes. The field is not added if the frame is already larger than 512 bytes. As a result the effective minimum frame size is 512 bytes, the slot time increased, and the segment diameter rose to 200 m (for 1000BASE-T). The carrier extension symbols carry no data, and no checksum is calculated for them. The field is stripped off at the MAC level on reception, so higher levels work with minimum frames 64 bytes long. But although the carrier extension preserved compatibility with the previous standards, it also wastes bandwidth: up to 448 bytes (512 - 64) per frame in the case of short frames.
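The slot-time arithmetic behind carrier extension can be checked directly. The sketch below is an illustration under simplifying assumptions (half-duplex operation, preamble and interframe gap ignored): it shows the 512-bit slot time shrinking from 51.2 µs at 10 Mbit/s to 0.512 µs at 1 Gbit/s, the 512-byte (4096-bit) Gigabit slot restoring 4.096 µs, and how much of each extended transmission short frames actually use.

```python
"""Slot time and carrier-extension overhead (half duplex, simplified)."""

SLOT_BYTES = 512  # Gigabit Ethernet half-duplex slot: 4096 bit times


def slot_time_us(slot_bits: int, rate_mbit: float) -> float:
    """Time to transmit slot_bits at rate_mbit Mbit/s, in microseconds."""
    return slot_bits / rate_mbit


def extended_length(frame_bytes: int) -> int:
    """Length on the wire after carrier extension pads short frames to SLOT_BYTES."""
    return max(frame_bytes, SLOT_BYTES)


def efficiency(frame_bytes: int) -> float:
    """Fraction of the (possibly extended) transmission taken by the real frame."""
    return frame_bytes / extended_length(frame_bytes)


if __name__ == "__main__":
    for rate in (10, 100, 1000):
        print(f"{rate:>4} Mbit/s, 512-bit slot:  {slot_time_us(512, rate):.3f} us")
    print(f"1000 Mbit/s, 4096-bit slot: {slot_time_us(4096, 1000):.3f} us")
    for size in (64, 256, 512, 1518):
        wasted = extended_length(size) - size
        print(f"{size:>4}-byte frame: {wasted:>3} extension bytes, "
              f"efficiency {efficiency(size):.1%}")
```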
That is why the standard was improved and the concept of Packet Bursting was introduced, which made it possible to use the extension field more effectively. If an adapter or switch has several small frames to send, the first one is transmitted with the extension up to 512 bytes, and the following frames are transmitted as is, separated by the minimum interframe gap of 96 bits. The interframe gap is filled with carrier extension symbols. This continues until the burst reaches the burst limit of 8,192 bytes (65,536 bit times). So the medium does not "fall silent" while small frames are being transferred, and a collision can occur only at the first stage, when the first small frame with the carrier extension (512 bytes) is being delivered. This mechanism boosts network performance, especially under heavy load, by reducing the probability of collisions. But it was not enough. At first Gigabit Ethernet supported only the standard Ethernet frame sizes, from a minimum of 64 bytes (extended to 512) to a maximum of 1518 bytes. Of these, 18 bytes are taken by the standard control header, so data are left with 46 to 1500 bytes. But even a 1500-byte packet is too small for a 1 Gbit network, especially for servers transferring large amounts of data. Just look at some figures. To transfer a 1 Gbyte file over an unloaded Fast Ethernet network, the server processes 8200 packets/s, and it takes at least 80 seconds. In this case 10% (!) of the time of a 200 MIPS computer is spent on interrupt processing, because the central processor must handle every incoming packet (calculate a checksum, transfer data into memory).
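The Packet Bursting rule can be modelled in a few lines. This is a simplified sketch, not the standard's state machine: it counts wire time in bytes, ignores the preamble, treats the 96-bit gap as 12 bytes of extension symbols, and assumes the IEEE 802.3 burst limit of 65,536 bit times (8,192 bytes). `plan_burst` is a hypothetical helper name.

```python
"""Simplified model of Gigabit Ethernet packet bursting (half duplex)."""
from typing import List, Tuple

SLOT_BYTES = 512          # first frame is carrier-extended to this length
IFG_BYTES = 12            # 96-bit interframe gap, filled with extension symbols
BURST_LIMIT_BYTES = 8192  # assumed burst limit: 65,536 bit times


def plan_burst(frames: List[int]) -> Tuple[List[int], int]:
    """Return (frames sent in one burst, wire bytes occupied by the burst).

    The first frame is extended to SLOT_BYTES. Each following frame is sent
    as is after an IFG, but may only start while the burst is still below
    BURST_LIMIT_BYTES; a frame already started is always completed.
    """
    sent: List[int] = []
    used = 0
    for size in frames:
        if not sent:
            used = max(size, SLOT_BYTES)
        elif used < BURST_LIMIT_BYTES:
            used += IFG_BYTES + size
        else:
            break
        sent.append(size)
    return sent, used


if __name__ == "__main__":
    queue = [64] * 200  # many minimum-size frames waiting to be sent
    sent, used = plan_burst(queue)
    burst_eff = sum(sent) / used
    # Without bursting, every 64-byte frame is extended to 512 bytes plus a gap.
    single_eff = 64 / (SLOT_BYTES + IFG_BYTES)
    print(f"burst: {len(sent)} frames in {used} bytes, efficiency {burst_eff:.1%}")
    print(f"no bursting: efficiency {single_eff:.1%}")
```

Only the first frame pays the 448-byte extension penalty, which is why a stream of short frames uses the medium far more efficiently inside a burst.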

Table 2. Characteristics of transmission of the Ethernet networks

In 1 Gbit networks the load on the processor rises roughly tenfold because the time interval between frames, and between the interrupt requests addressed to the processor, shrinks. Table 2 shows that even in the best conditions (maximum-size frames) frames are separated by at most 12 microseconds. With smaller frames the interval shrinks even more. That is why frame processing by the CPU is the weakest point of 1 Gbit networks. At the dawn of Gigabit Ethernet the actual data rates were far from the theoretical maximum because processors could not cope with the load. The obvious ways out are to increase the frame size or to offload part of the frame processing from the CPU to the network adapter.

At the moment both approaches are used. In 1999 it was proposed to increase the packet size. Such packets are called Jumbo Frames, and they can range from 1518 to 9018 bytes (equipment from some manufacturers supports even larger Jumbo Frames). They reduce the processor load by up to 6 times (in proportion to their size), thus boosting performance. For example, a maximum-size Jumbo Frame of 9018 bytes carries 9000 bytes of data besides the 18-byte header, which corresponds to 6 standard maximum-size Ethernet frames. The performance gain comes not from eliminating several control headers (their traffic makes up only a few percent of the overall throughput) but from the reduced processing time per byte of data. More precisely, the time needed to process a frame stays the same, but instead of several standard frames, each requiring N processor cycles and one interrupt, there is only one large frame to process. Besides, many modern network adapters use special hardware to take part of the traffic processing load off the CPU. Under heavy traffic they use buffering, so the CPU processes several frames one right after another. However, such niceties raise the prices of these adapters.

Cards

Today we will review three Gigabit Ethernet cards (all of them Desktop versions, not server ones), and all of them were tested on a 33 MHz PCI bus. The first card is LNIC-1000T from LG Electronics.
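The effect of frame size on the number of frames (and, with one interrupt per frame, the number of interrupts) is easy to quantify. The sketch below assumes standard Ethernet framing, an 18-byte header, 8 bytes of preamble and a 12-byte interframe gap, and a link running back to back at full line rate; it reproduces the roughly 12 µs spacing of maximum-size frames at 1 Gbit/s, a little over 8,000 frames/s at 100 Mbit/s, and the sixfold drop in frame count with 9018-byte Jumbo Frames. The function names are illustrative.

```python
"""Frame rate and frame count for standard vs Jumbo Frames."""

HEADER = 18    # destination/source MAC, type/length and FCS, in bytes
PREAMBLE = 8   # preamble plus start-of-frame delimiter, in bytes
IFG = 12       # 96-bit interframe gap, in bytes


def frames_per_second(frame_bytes: int, rate_mbit: float) -> float:
    """Back-to-back frames per second at full line rate."""
    wire_bits = (frame_bytes + PREAMBLE + IFG) * 8
    return rate_mbit * 1e6 / wire_bits


def frames_needed(data_bytes: int, frame_bytes: int) -> int:
    """Frames required to carry data_bytes of payload (ceiling division)."""
    payload = frame_bytes - HEADER
    return -(-data_bytes // payload)


if __name__ == "__main__":
    one_gb = 10**9
    for size in (1518, 9018):
        fps = frames_per_second(size, 1000)
        print(f"{size}-byte frames at 1 Gbit/s: {fps:,.0f} frames/s, "
              f"{1e6 / fps:.1f} us apart, {frames_needed(one_gb, size):,} frames per GB")
    print(f"1518-byte frames at 100 Mbit/s: {frames_per_second(1518, 100):,.0f} frames/s")
    ratio = frames_needed(one_gb, 1518) / frames_needed(one_gb, 9018)
    print(f"Jumbo Frames cut the frame count by a factor of {ratio:.1f}")
```

Adapters that buffer frames and raise one interrupt for several of them reduce the interrupt count further, so one interrupt per frame is the worst case described in the text.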

The two-chip card uses controllers from National Semiconductor Corporation. The card has 6(!) LEDs: three indicate the speed (10/100/1000 Mbit), and the other three indicate collisions, data transfer and full-duplex mode. One of the card's controllers, DP83861VQM-3, is hidden under the heatsink. It is a physical layer transceiver able to work at 10/100/1000 Mbit/s in half-duplex and full-duplex modes. It supports Auto-Negotiation (IEEE 802.3u) of the speeds and modes listed above. The second controller, DP83820, is a 10/100/1000 Mbit Ethernet controller that connects the network card to the PCI bus. Its features:

The driver version is 5.0.1.24. It gives access to a wide range of card settings. During the tests we left the default settings and changed only the Jumbo Frame size (1514, 4088, 9014 and 10,000 bytes). In Linux the supplied drivers didn't work, so we used the National Semiconductor DP83820 driver built into the OS, ver. 0.18. This driver limits the Jumbo Frame size to 8192 bytes. The next card is Hardlink HA-32G from MAS Elektronik AG.

The network card is based on the same two controllers as the previous one, but it uses a different PCB and lacks a heatsink on the transceiver. There are three LEDs that indicate the speed (10/100/1000 Mbit) and, by blinking, data transfer. The drivers were also taken from the software bundle supplied with the card.

The driver version for Windows 2000 is also 5.0.1.24, but its interface offers far fewer settings than that of the previous card. All settings were left at their defaults. The maximum Jumbo Frame size is unknown, but the largest size used in the tests (16128 bytes) could be set without problems. In Linux the drivers supplied with the card also refused to work with the default settings, so I turned to the OS's built-in driver (the same as in the previous case). But there was an interesting problem: the cards couldn't detect the link in a Linux-Linux pair. The network interfaces were brought up, but the link was not established. When we rebooted one of the computers into Windows 2000, the link appeared with both straight-through and crossover cables. The situation repeated with the 2.4.17, 2.4.18 and 2.4.19 kernels. That is why the test results for this adapter in Linux are not given. The last card for today is Intel PRO/1000 MT Desktop from Intel Corporation.

This is a single-chip solution (a newer generation) built on the Intel 82540EPB controller, which performs the functions of both chips of the cards above. The card has two LEDs: one shows link and data transfer, and the other (two-color) shows the speed (10/100/1000 Mbit). Here are some of the controller's parameters:

The drivers for both OSs were taken from the company's site. The driver version for Windows 2000 is 6.2.33.0, and it provides a wide range of configuration settings for the adapter. Nevertheless, we left the default settings and changed only the Jumbo Frame size (4088, 9014, 16128 bytes). The Linux driver, ver. 4.3.15, worked flawlessly.

Testing technique

The two computers used in the tests consist of the following components:

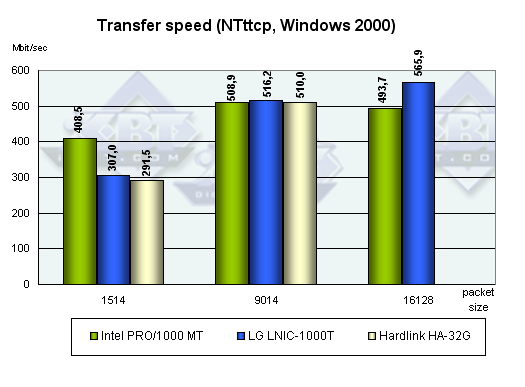

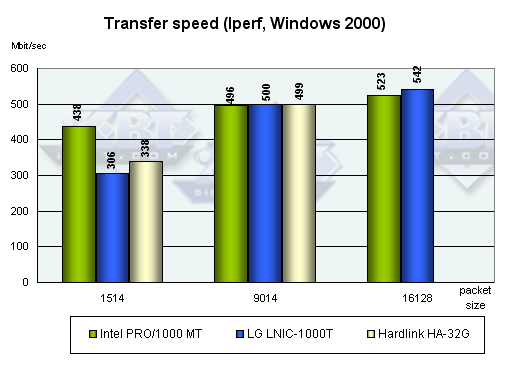

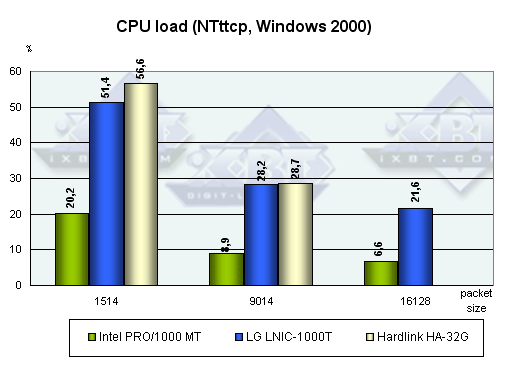

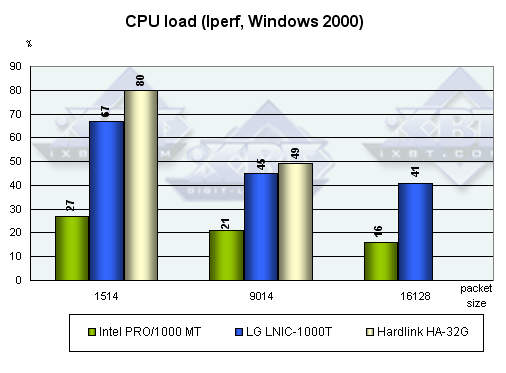

In Windows 2000 we used Iperf 1.2 and the NTttcp program from the Windows 2000 DDK for traffic generation and measurement. Both programs were used to measure data rates and CPU utilization at the following Jumbo Frame sizes:

Hkey_Local_Machine\System\CurrentControlSet\Services\Tcpip\Parameters


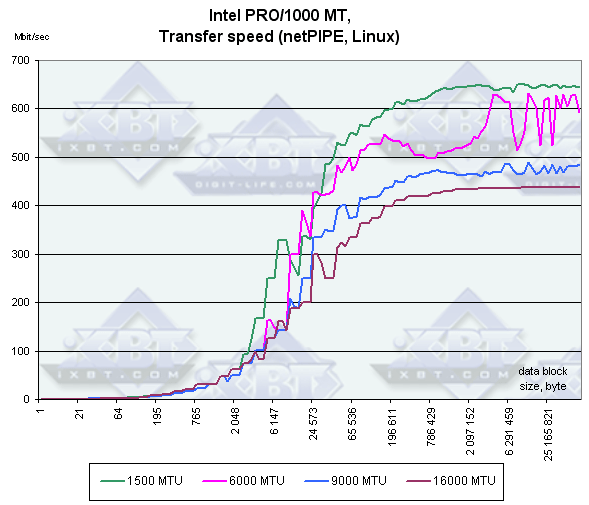

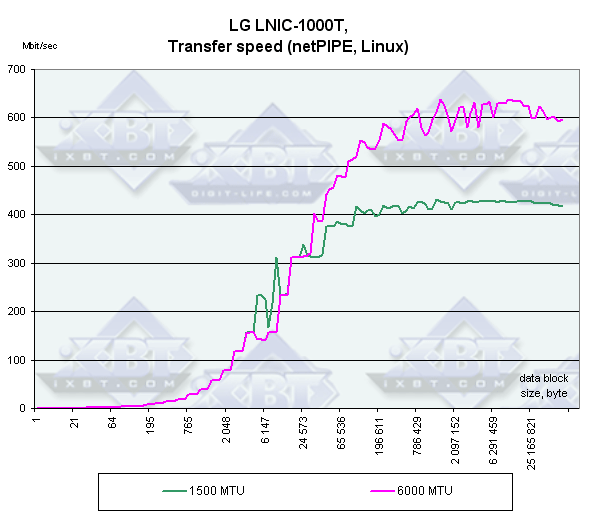

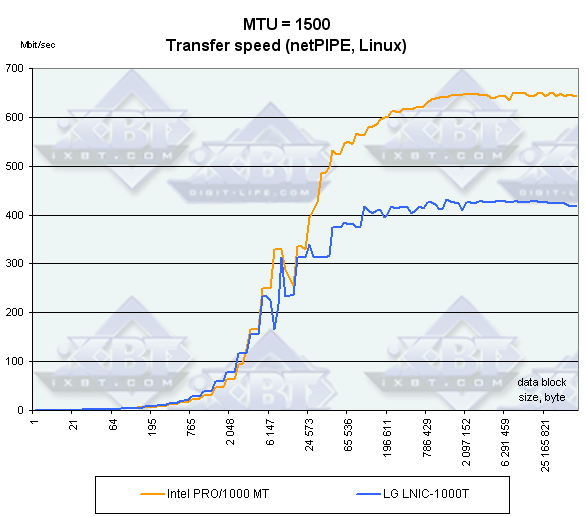

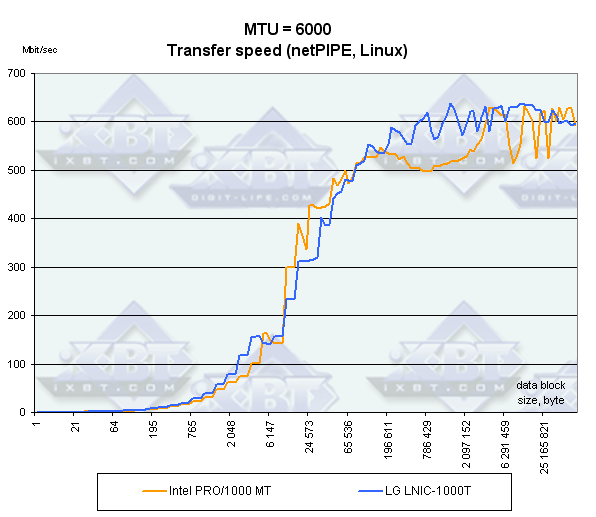

In Linux we used NetPIPE 2.4 for traffic generation and measurement. The program generates traffic with a gradually growing data packet size (a packet of size N is transferred several times; the number of transmissions is inversely proportional to its size, but not less than 7). This method shows the channel utilization as a function of the size of the data transferred. The Jumbo Frame size was changed by changing the MTU in the network interface settings.
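The measurement schedule described above (repetitions inversely proportional to message size, with a floor of 7) can be sketched as follows. This is not NetPIPE's actual code: the per-step byte budget `TARGET_BYTES` and the doubling of the message size are assumptions made for illustration, and the real program's exact rules may differ.

```python
"""Hypothetical NetPIPE-style schedule: fewer repetitions for larger messages."""
from typing import Iterator, Tuple

MIN_REPEATS = 7          # floor on repetitions, as described in the text
TARGET_BYTES = 1 << 20   # assumed amount of data to move per size step


def repeats_for(size: int) -> int:
    """Repetitions inversely proportional to size, but never below MIN_REPEATS."""
    return max(MIN_REPEATS, TARGET_BYTES // size)


def schedule(start: int = 1, stop: int = 1 << 23) -> Iterator[Tuple[int, int]]:
    """Yield (message size in bytes, repetitions), doubling the size each step."""
    size = start
    while size <= stop:
        yield size, repeats_for(size)
        size *= 2


if __name__ == "__main__":
    for size, reps in schedule(1 << 10, 1 << 23):
        print(f"{size:>8} bytes x {reps}")
```

Small messages are repeated many times so that each step takes a measurable time, while large ones need only the minimum number of passes.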

Startup options of the NTttcp (Windows 2000):

receiver: NTtcp -b 65535 -o logfile -P -r
transmitter: NTtcp -b 65535 -o logfile -P -t

Test results

1. Windows 2000, transfer speed.

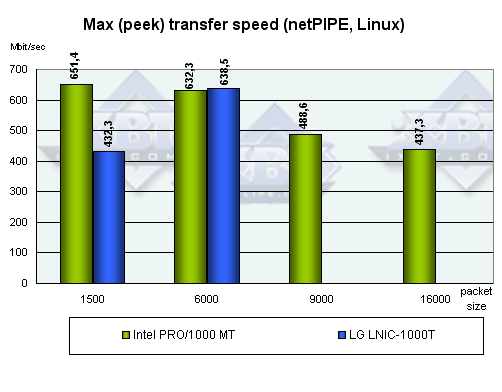

Strangely enough, the speed doesn't exceed 600 Mbit/s even with 16128-byte Jumbo Frames. It's not clear where the bottleneck is, as the processor is not fully loaded anyway. It is also clear that the Intel adapter gains least from the increased frame size: the speed rises only slightly, though the CPU load falls. The Intel PRO/1000 MT adapter loads the processor least of all, half as much as its rivals, because it is based on a newer controller. With Jumbo Frames disabled the Intel takes the speed crown, and with Jumbo Frames enabled the crown passes to the LG LNIC-1000T. Why the adapter falls behind the Intel model is not clear; the problem is probably in the driver.

3. Linux, MTU size.

4. Linux, performance comparison at equal MTU sizes.

And here is a diagram where you can compare the peak performance of these two adapters at different MTU sizes. By the way, the resulting speed in Linux is higher than in Windows.

Conclusion

The tests show that Jumbo Frames improve performance, so it is worth using them. Of course, they must be supported by both the network adapter and the switch, and unfortunately not all devices provide such support. The Intel PRO/1000 MT adapter becomes the unrivaled leader with Jumbo Frames, and it also provides the lowest CPU load. Remember that this is mostly thanks to its newer controller. The question of the low speed (no more than 600 Mbit/s) of all the adapters remains open, and the next test may clear things up.

Evgeniy Zaitsev (eightn@ixbt.com)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.