
Contents

GeForce 6200 TC official specification

Reference GeForce 6200 TC-16 specification

Reference GeForce 6200 TC-32 specification

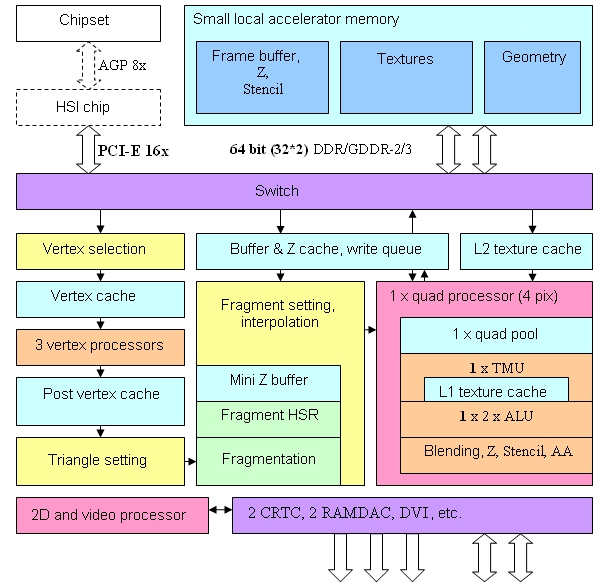

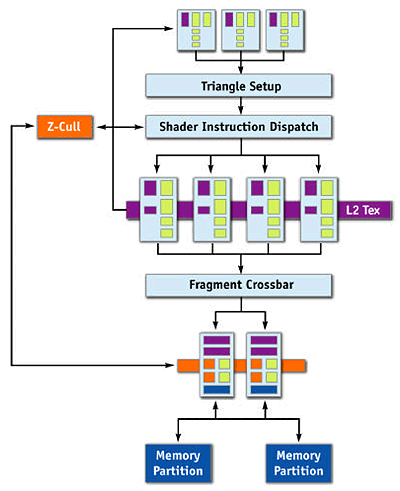

Chip architecture

There are no global architectural differences from NV40 and NV43; there are just some innovations in the pixel pipeline aimed at working effectively with system memory as a frame buffer, but we shall cover this topic later. On the whole, NV44 is a scaled-down solution (fewer vertex and pixel processors and memory controller channels) based on the NV40 architecture. The differences are quantitative (bold elements on the diagram) but not qualitative: from the architectural point of view the chip remains practically unchanged.

So, we have 3 vertex processors, as in NV43, and one (instead of two) independent pixel processor operating on one quad (a 2x2 pixel fragment). PCI Express is native (implemented in the chip itself), as in NV43. There seem to be no plans for an AGP 8x card with this chip, at least in its TC (TurboCache) modification, because the very idea of using system memory effectively for rendering requires adequate graphics bus throughput in both directions. Even if cards appear with a 64 MB buffer and an additional bidirectional PCI-E <-> AGP bridge (dotted line), their price will be outrageously high for the low-end segment and they won't be able to compete there with true AGP 8x solutions.

Besides, note an important limiting factor: a two-channel controller and a 64-bit (!) memory bus. We'll analyze and discuss this fact later on. Judging from the chip package and the pin count, 64 bits is a hardware limit for NV44 and there will be no 128-bit cards. The architecture of the vertex and pixel processors and of the video processor remains the same; these elements were described in detail in our review of the GeForce 6800 Ultra. And now let's consider potential quantitative and qualitative changes relative to NV43:

Considerations about what and how has been cut down

Obviously, even taking into account that system memory is used via PCI Express, all 6200 TC models will suffer first of all from insufficient memory bandwidth, both system and local. The peak fill rate of the 6200 TC is 700 megapixels per second, while local memory bandwidth falls short of that by more than a factor of two (even without taking into account potentially reduced caches and the two-channel memory controller).

Thus, we can predict that everything will come down to two parameters: system memory performance and access to it via PCI Express (which depends on the chipset and other system parameters), and the complexity of the executed shaders (complex shaders with a great number of instructions will be less demanding of memory and will probably manage to reveal the full computing and fill rate potential of the pixel processors).

The weak point of the 6200 TC series, as with the 6600 series, will be high resolutions and full-screen antialiasing modes, especially in simple applications. The strong point will be programs with long and complex shaders and anisotropic filtering without simultaneous MSAA. This tendency will be far more pronounced, and more painful, than in the 6600; that is, these accelerators depend strongly on the application. To all appearances, results relative to the competing series (for example, the X300 from ATI) will vary severalfold depending on the test, resolution, and settings. In some applications the 6200 TC will look on a par or even better, and in others it will be noticeably outscored. Later on we shall verify this assumption with tests.

It's hard to judge how justified 64-bit and even 32-bit memory buses are. On the one hand, this move cuts the price of the chip package and reduces the number of rejected chips; on the other hand, the price difference between PCBs for 64 and 32 bits is still lower than between those for 256 and 128! And this difference is more than offset by the price difference between usually inexpensive 200 MHz DDR and still expensive 350 MHz GDDR memory. Again, as with the 6600, from the card manufacturers' point of view a solution with a 128-bit bus and cheaper DDR memory (as in the X300) would be more convenient, at least if they had a choice. But from the point of view of NVIDIA, which manufactures the chips and very often sells them together with memory, the 64-bit or even 32-bit solution with GDDR is more profitable.

To all appearances, the vertex and pixel processors in NV44 remain the same, apart from the declared revisions for effective system memory addressing from the texturing and blending units. However, these are only words: in reality there are reasons to believe that these features are not that substantial and are implemented at the level of the common cache manager and crossbar. They were included in the NV4X family from the start; there was just no point in using them (at the driver level) in senior cards with faster and larger local memory. There is also no point in this technology for cards with the AGP interface, which would inevitably become the bottleneck (because of its low write speed into system memory, comparable to PCI).
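The bandwidth argument above can be checked with a rough back-of-the-envelope calculation. The sketch below uses the figures quoted in the text (700 megapixels per second, 350 MHz local memory, 32-bit and 64-bit buses). The per-pixel traffic of 12 bytes (32-bit colour write plus 32-bit Z read and Z write) is our own assumption for illustration; it ignores compression, caches, blending reads, and texture fetches, so the resulting ratios are indicative only.

```python
def local_bandwidth_gbs(clock_mhz: float, bus_bits: int) -> float:
    """Peak DDR bandwidth in GB/s (10^9 bytes): two transfers per clock."""
    return clock_mhz * 1e6 * 2 * (bus_bits / 8) / 1e9


def fill_demand_gbs(fill_mpix: float, bytes_per_pixel: int) -> float:
    """Frame buffer traffic in GB/s if the peak fill rate were sustained."""
    return fill_mpix * 1e6 * bytes_per_pixel / 1e9


FILL_MPIX = 700        # peak fill rate quoted in the text
MEM_CLOCK_MHZ = 350    # local memory clock quoted in the text
BYTES_PER_PIXEL = 12   # ASSUMPTION: colour write + Z read + Z write, 4 bytes each

demand = fill_demand_gbs(FILL_MPIX, BYTES_PER_PIXEL)
for name, bus_bits in (("TC-16", 32), ("TC-32", 64)):
    bw = local_bandwidth_gbs(MEM_CLOCK_MHZ, bus_bits)
    print(f"{name}: local {bw:.1f} GB/s, demand {demand:.1f} GB/s, "
          f"shortfall {demand / bw:.1f}x")
```

Changing `BYTES_PER_PIXEL` shows how sensitive the shortfall is to the traffic model: long shaders lower the sustained pixel rate and hence the demand, which is why the text expects them to suit these cards better.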

That's how NVIDIA explains the differences in its articles:

... regular architecture and NV44 with TurboCache:

You can see that the difference lies in the data feed for textures and the additional path for writing frame data (blending) into system memory. However, the initial chip architecture, with a crossbar that treats the graphics bus practically as a fifth channel of the memory controller, may have been capable of this from the very beginning (starting from NV40 or even earlier). It's difficult to say whether NV44 actually has architectural changes in writing and reading data or whether these features are implemented at the driver level.

On the other hand, we shall not deny that it would be optimal to have a paging MMU and dynamic swapping of data from system memory to local memory, with the latter treated as an L3 cache. With such an architecture everything falls into place. The efficiency will be noticeably higher than with discrete allocation of objects, and minor hardware revisions will be justified. Besides, once this paging unit has been tested, it can be used in future architectures, which to all appearances will have to include such units anyway (because of DX Next requirements).

In the first case (discrete allocation) everything depends on the driver, which decides where to place textures, geometry data, and frame buffers. In the case of paging everything may be determined by hardware alone, or some objects can be marked as "not cacheable" (for example, geometry data or some frame buffers), where copying them into the video card's local memory would bring no special gain and would on the contrary decrease overall efficiency. This distribution looks like a very difficult task, and it actually requires constant (per-frame) analysis of the chip's internal performance counters. It must be done separately for the various objects in memory, because it's very difficult to predict the influence of access patterns in different cases.
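To make the paging idea more concrete, here is a minimal toy model in Python. It is purely illustrative and says nothing about how NV44 or its drivers actually work: the class name, the least-recently-used eviction policy, and the page granularity are all our assumptions. Local memory acts as a cache for pages that live in system memory, and objects flagged as not cacheable are always read over the graphics bus.

```python
from collections import OrderedDict


class LocalMemoryCache:
    """Toy LRU model (hypothetical): local video memory caches system memory pages."""

    def __init__(self, capacity_pages: int):
        if capacity_pages < 1:
            raise ValueError("capacity_pages must be at least 1")
        self.capacity = capacity_pages
        self.pages = OrderedDict()   # page id -> True, least recently used first
        self.local_hits = 0
        self.system_reads = 0

    def access(self, page: int, cacheable: bool = True) -> str:
        """Return where the page was served from: 'local' or 'system'."""
        if page in self.pages:
            self.pages.move_to_end(page)        # mark as most recently used
            self.local_hits += 1
            return "local"
        self.system_reads += 1                  # miss: data comes over the bus
        if cacheable:
            if len(self.pages) >= self.capacity:
                self.pages.popitem(last=False)  # evict the least recently used page
            self.pages[page] = True
        return "system"


cache = LocalMemoryCache(capacity_pages=2)
trace = [(1, True), (1, True), (99, False), (99, False), (2, True), (3, True), (1, True)]
for page, cacheable in trace:
    print(page, cacheable, cache.access(page, cacheable))
print("local hits:", cache.local_hits, "system reads:", cache.system_reads)
```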
In the peak case, especially with the TC-16, system memory can be read even faster than local memory. But system memory latencies and its real performance (DDR200-300 × 64 bit, minus CPU needs and contention from access arbitration) will anyway result in lower efficiency than that of the 32-bit 350 MHz local option. So only practice will show how justified NVIDIA's solution is for the 32-bit TC-16 and for the 64-bit TC-32. But one thing we can say for sure: everything will come down to the price and to the applications popular among users. If the card costs $40, that's one story; if $70, quite another, because competing 128-bit solutions will strangle TurboCache with their 128-bit memory, which is more efficient anyway. Applications will also make themselves felt: with intensive shaders or UltraShadow, as in Doom 3, the 6200 TC goes great guns; with simple shaders and full-screen antialiasing it fails. We shall check all these assumptions and talk about the prices further on.
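The peak-versus-real comparison above can be sketched numerically. Several inputs are assumptions not stated in the article: we take the card to be in a first-generation PCI Express x16 slot (250 MB/s per lane in each direction), we read "DDR200-300" as a 200 to 300 MHz DDR clock on a single 64-bit channel, and `gpu_share` is a hypothetical fraction of system memory bandwidth left to the GPU after CPU traffic and arbitration. Latency is not modelled at all.

```python
def ddr_gbs(clock_mhz: float, bus_bits: int) -> float:
    """Peak DDR bandwidth in GB/s (10^9 bytes): two transfers per clock."""
    return clock_mhz * 1e6 * 2 * bus_bits / 8 / 1e9


PCIE_LANE_GBS = 0.25   # PCI Express 1.x: 250 MB/s per lane, per direction
PCIE_LANES = 16        # ASSUMPTION: x16 slot

pcie_peak = PCIE_LANE_GBS * PCIE_LANES   # read bandwidth of the bus, GB/s
local_tc16 = ddr_gbs(350, 32)            # 32-bit 350 MHz local memory
local_tc32 = ddr_gbs(350, 64)            # 64-bit 350 MHz local memory


def system_path_gbs(ram_clock_mhz: float, gpu_share: float) -> float:
    """Upper bound on GPU reads from system RAM: the slower of bus and RAM share."""
    ram_peak = ddr_gbs(ram_clock_mhz, 64)
    return min(pcie_peak, ram_peak * gpu_share)


print(f"local TC-16: {local_tc16:.1f} GB/s, local TC-32: {local_tc32:.1f} GB/s")
print(f"system path, best case:  {system_path_gbs(300, 1.0):.1f} GB/s")
print(f"system path, shared RAM: {system_path_gbs(200, 0.5):.1f} GB/s")
```

Under these assumptions the best case exceeds the TC-16's local bandwidth, while a system whose memory is half occupied by the CPU falls well below it, which matches the qualitative point of the paragraph.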

Video Cards

Both cards are completely identical in PCB layout, the only difference is that the 16 MB card does not have one of the two memory chips.

We can see that the layout has been vastly modified. Personally, I am perplexed as to why such a primitive card with two memory chips has such a complex PCB. We still remember old cards with the same memory capacity that were significantly simpler. Yes, sure, the frequencies are high. But why on Earth cut the bus down so much and raise the frequency? In my personal opinion, this approach seems unreasonable and even silly. Only an amateur would install ONE(!) expensive BGA 2.8ns chip on an absolutely horrible bus (32 bit) instead of installing 4 or 8 old chips (TSOP, 5ns, for example), which would be CHEAPER, and leaving the bus alone. So I still have a lot of unanswered questions after examining the design of this card. The cooling system is primitive, just a heatsink, so we shall skip it. Let's have a look at the graphics processor.

You may notice that the chip dimensions are a tad smaller than those of its predecessors (for example, GeForce 6200/6600, NV43). I mean the substrate, not the die itself, although the die has also shrunk (that's understandable, as the number of pipelines has been halved). The smaller substrate indicates that NV44 has hardware support for a memory bus no wider than 64 bits. And another question of mine: why such a complex PCB for a 64-bit bus?

Installation and Drivers

Testbed configurations:

VSync is disabled. Both companies have enabled trilinear filtering optimizations in their drivers by default.

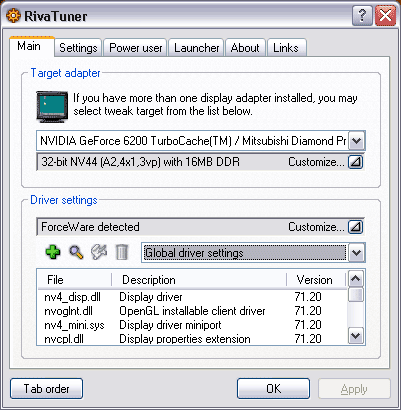

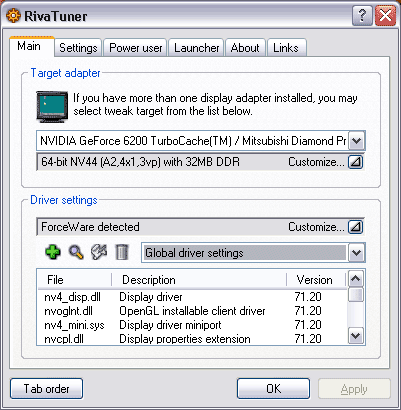

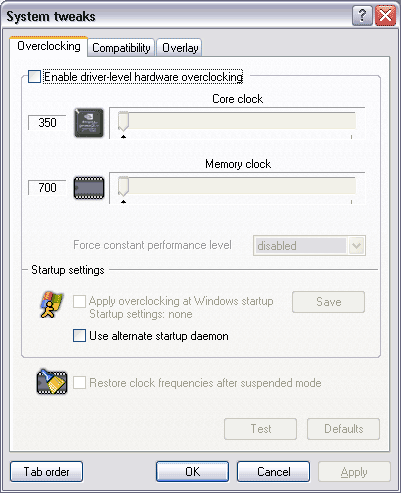

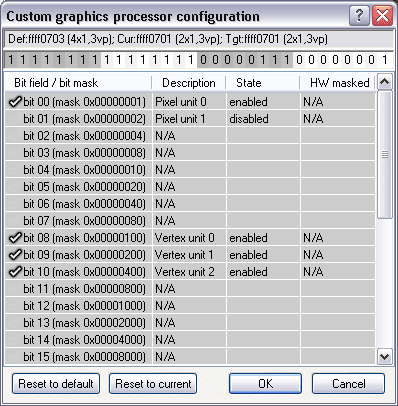

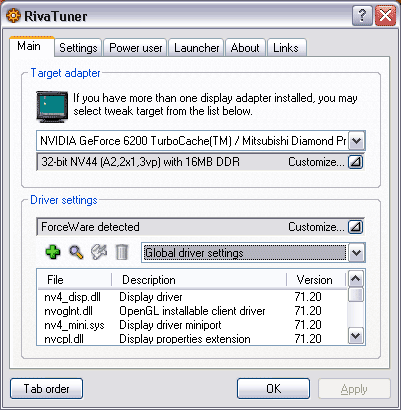

It should be noted that Alexei Nickolaichuk, the author of RivaTuner, has quickly added NV44 support to his utility. It turned out that the chip has two bits in its registers responsible for each quad. That is, it would have been logical for the chip to have 8 pipelines, but 2 ROPs. This is certainly beyond any logic and common sense (besides, fill rate tests demonstrated that there are actually 4 pipelines). That's why the only sound conclusion is that NV44 operates with pixel pairs rather than with quads. We are going to investigate this issue.

Note that the only way to learn how much memory is actually installed on the video card is RivaTuner (RT) :), because the drivers report the total capacity: local memory plus part of the main RAM.

Test results

Before giving a brief evaluation of 2D, I will repeat that at present there is NO valid method for objective evaluation of this parameter due to the following reasons:

As for the samples under review, used together with the Mitsubishi Diamond Pro 2070sb, these cards demonstrated identically excellent quality in the following resolutions and frequencies:

Test results: performance comparison

We used the following test applications:

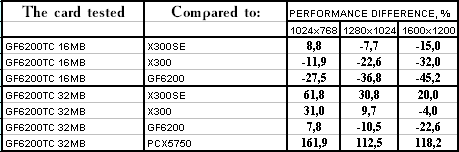

Unreal Tournament 2004

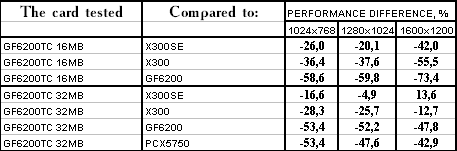

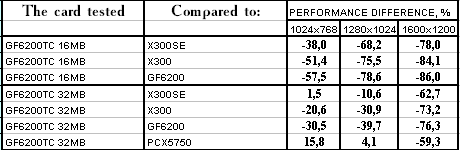

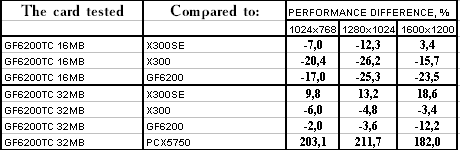

So far it's a sad picture for the new video cards, which are more expensive as well (at preliminary prices).

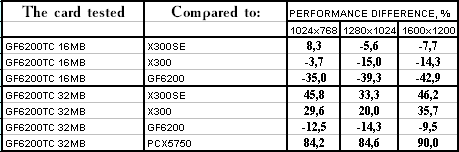

TR:AoD, Paris5_4 DEMO

The same result as in the previous test: failure. Certainly, the new cards look good against the more expensive PCX5750, but that card is already leaving the market, pushed out by the GF6200/6600.

FarCry, Research

The results are still obviously negative. The only exception is that the 32 MB card outscored the X300SE in 1024x768. But the prices do not allow it to compete. The picture would improve only if NVIDIA dropped the prices of the new video cards by 30-40 dollars.

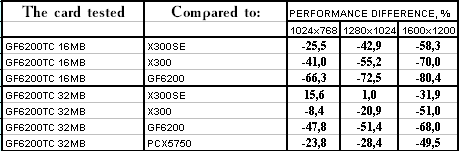

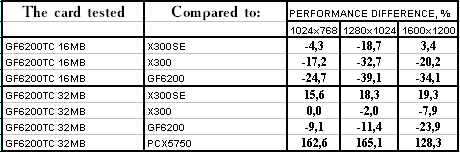

FarCry, Regulator

FarCry, Pier

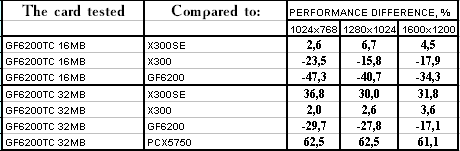

Half-Life2: ixbt01 demo

Half-Life2: ixbt02 demo

Half-Life2: ixbt03 demo

The same picture.

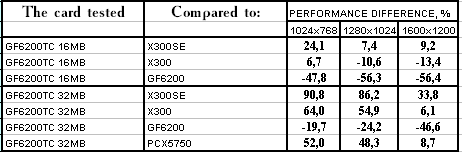

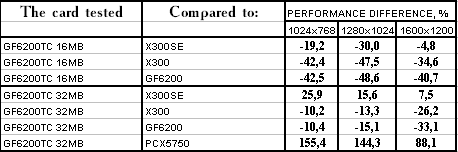

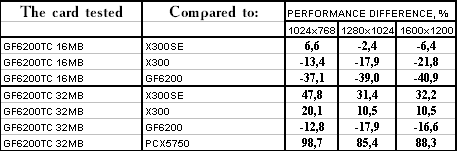

DOOM III

At least the new cards managed to demonstrate good results in this game.

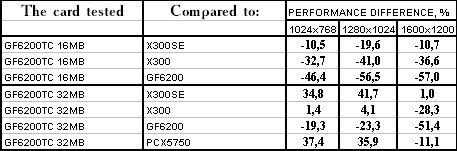

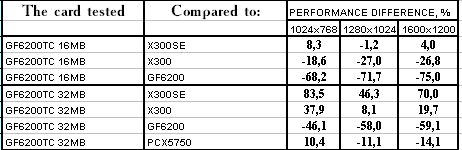

3DMark05: Game1

3DMark05: Game2

3DMark05: Game3

3DMark05: MARKS

The 32 MB video card is obviously victorious, though the cheaper 16 MB modification is slightly outscored.

Conclusions

The main weak point is the memory bandwidth, the two-channel 64-bit controller, and writing results to the frame buffer. TurboCache is helpful, but it's not a cure-all. A good 128-bit solution may have a comparable price while being a certain winner in performance.

Complete compliance with NV40 in the set of supported features and in the architecture of the vertex and pixel processors is laudable: there is no need to take NV44 peculiarities into account in programs. Lower complexity and power consumption have played a positive role. The clock frequency is high, and, judging from the process technology, it can still be raised much higher in future; the only bottleneck is memory. I want to believe that such a narrow memory bus and simple package will soon result in cost reduction.

At such preliminary prices (79 and 99 USD) the cards are obviously not faring well. The tests demonstrated that they will be in demand only if they are cheaper than their competitors (considerably cheaper, with a price not exceeding $50-70). The performance of these video cards obviously depends very much on drivers, since TurboCache is provided by them. That's why their performance may still grow. Besides, as you can see, TurboCache was of little help to these cards.

The objective was good: to reduce the cards' costs, because 128 and 256 MB of memory in the low-end segment make up a considerable part of the cost. But what's the result? Higher prices instead of lower ones? Let's hope the prices will settle down. If the final retail prices for these products are not 79, 99 and 129 USD but 49, 69 and 89, these video cards will have a chance of being in demand; they will even be attractive. That's why we are not drawing final conclusions: they are impossible so far, because everything depends on the prices of the new cards.

In our 3Digest you can find more detailed comparisons of various video cards.

Theoretical materials and reviews of video cards, which concern functional properties of the GPUs ATI RADEON X800 (R420)/X850 (R480)/X700 (RV410) and NVIDIA GeForce 6800 (NV40/45)/6600 (NV43)/6200 TC (NV44)


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.