
The last new product from ATI was released more than a year ago. Since then there have only been variations of the RADEON, which showed that the oldest and largest maker of video chips and cards still had something to compete with against NVIDIA. Unfortunately, these attempts were clumsy. I have to admit that in this field of only two players (ATI Technologies and NVIDIA; Matrox failed to hold its position in the 3D accelerator market) the Canadian company worked without spirit. Since summer 2000 NVIDIA has been running its business successfully. The company offers a wide range of cards produced by many Asian firms, so you can easily choose a card to your liking, from $40 to $400, with the corresponding features. Besides, competition between cards of the same type has driven prices down. This earns the company one more advantage: game developers will support NVIDIA products since, for example, the GeForce2 MX is now one of the cheapest budget solutions. ATI has also introduced a whole line (apart from the flagship RADEON 64 MBytes DDR there are the RADEON SDR, RADEON 32 MBytes DDR, RADEON LE and RADEON VE). Unfortunately, the company lost out on release dates and volumes. Moreover, its pricing policy is rather strange. Releasing the RADEON LE for China only helped expand the market, but at what cost? A 32 MBytes card with 6 ns DDR memory costs less than $85, while the GeForce2 GTS is only now dropping below $100. But there was another problem: the company seemed unable to develop drivers that were not crippled. Take, for example, the RAGE 128, which had a lot of bugs in games. The year-old RADEON drew endless complaints about quality. It took the company a whole year to release a normal product that was almost free of bugs in 3D graphics. ATI had no reason to hope that such a cheap RADEON LE would attract much attention.
Its relative popularity is explained by its overclocking potential. Evidently the company used chips with some defects for this card, such as unstable operation of the HyperZ unit; that is why HyperZ was disabled in the RADEON LE and the frequency was lowered from the rated 166 MHz to 148 MHz. But every RADEON LE worked flawlessly at 166 MHz, like a normal RADEON 32 MBytes DDR card, and could even be overclocked considerably. When the RADEON LE appeared, prices were a mess: a weaker RADEON with SDR memory sometimes cost more than the RADEON LE with DDR. The RADEON 32 MBytes DDR stuck at $120-130, which left a huge price gap, and the RADEON 64 MBytes DDR cost almost as much as the 32 MBytes card. NVIDIA products, by contrast, were spread more evenly across the market. Another problem for ATI was that it had no partners producing its cards. One player alone is nobody, even with huge production facilities. ATI realized this only in spring 2001 and started looking for new partners. It has already found some, and I hope the new RADEON 7500/8500 will have a chance to succeed, since those firms will compete and prices will drop. The new drivers will support both the new RADEON 7500/8500 and the earlier models, so the experience gained in fixing bugs will be put to use. ATI's new line now contains only two cards: the RADEON 7500 and the RADEON 8500. Later the company plans to launch a professional FireGL 8800 card based on the RADEON 8500. Today we will examine the RADEON 7500. Cards on this chip are said to be produced both by ATI and by Taiwanese companies such as Gigabyte, PowerColor and others. The RADEON 7500 is in fact the RADEON GRAPHICS manufactured on a 0.15 micron process, which allowed its frequency to be raised considerably. Besides, it offers the features of the budget RADEON VE (output to two displays, HydraVision technology, etc.). The only change concerns the chip frequency: 290 MHz instead of the 275 MHz estimated earlier.
Thus, the 3D core of the RADEON 7500 has two pipelines with three texture units each. Its peak fillrate is therefore 580 Mpixel/s, or 1740 Mtexel/s with all 6 texture units enabled (almost no games use more than 2 TMUs per pipeline, so the realistic peak is 1160 Mtexel/s). This is much lower than the NVIDIA GeForce2 Pro (800 Mpixel/s and 1600 Mtexel/s), but we all know that the GeForce2 Pro (like every GeForce2) is limited by memory bandwidth. Even 200 (400) MHz DDR memory is not enough to realize the GeForce2's potential. However, unlike the GeForce2 MX, the RADEON optimizes memory operations: its HyperZ technology saves video memory bandwidth. That is why the RADEON with SDR memory beats the similarly priced GeForce2 MX in 32-bit color. The RADEON DDR running at 166 MHz loses to the 200 MHz GeForce2 GTS in 16-bit color but catches up with it in 32-bit color. So a faster version of the same RADEON DDR should let ATI compete successfully not only against GeForce2 GTS cards but also against the GeForce2 Titanium. Remember also that the RADEON 7500 has two integrated RAMDACs (350(!) MHz each), and TV-out is implemented similarly to NVIDIA's TwinView (an image can be shown on a TV screen independently of the monitor). In other words, it is now a normal video card with powerful 3D, support for all current features (except DirectX 8.0) and the ability to display an image on two monitors. Now let me take a closer look at the ATI RADEON 7500.

Card

The ATI RADEON 7500 has an AGP 2x/4x interface and 64 MBytes of DDR SDRAM in 8 chips on the right and back sides of the PCB. The memory chips are made by ESMT (Elite Semiconductor) and have a 4 ns access time, which corresponds to 250 (500) MHz. The memory runs at 230 (460) MHz, like that of the NVIDIA GeForce2 Ultra. The only difference is that the Ultra's memory has heatsinks, while the RADEON 7500's does not.
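The fillrate figures above follow from simple arithmetic, and the same arithmetic shows why the GeForce2 is bandwidth-bound. The sketch below is a minimal illustration under stated assumptions: the RADEON 7500 numbers are the review's, while the GeForce2 Pro layout (200 MHz core, 4 pipelines with 2 TMUs, 200 MHz DDR memory) and the 128-bit memory bus for all cards are assumptions the review does not spell out.

```python
# Peak-rate sketch for the figures quoted in the review.
# ASSUMPTIONS (not stated in the review): GeForce2 Pro = 200 MHz core,
# 4 pipelines x 2 TMUs, 200 MHz DDR; every card uses a 128-bit memory bus.

def fillrate(core_mhz: int, pipelines: int, tmus_used: int) -> tuple[int, int]:
    """Return (Mpixel/s, Mtexel/s): one pixel per pipeline per clock."""
    mpixels = core_mhz * pipelines
    return mpixels, mpixels * tmus_used


def ddr_bandwidth_gb_s(mem_mhz: float, bus_width_bits: int = 128) -> float:
    """Peak DDR bandwidth: data moves on both clock edges (two transfers per clock)."""
    return mem_mhz * 1e6 * 2 * (bus_width_bits / 8) / 1e9


cards = [
    # name, core MHz, pipelines, TMUs used per pipeline, memory MHz
    ("RADEON 7500 (all 6 TMUs)", 290, 2, 3, 230),
    ("RADEON 7500 (2 textures/pass)", 290, 2, 2, 230),
    ("GeForce2 Pro", 200, 4, 2, 200),
]

for name, core, pipes, tmus, mem in cards:
    mpix, mtex = fillrate(core, pipes, tmus)
    print(f"{name}: {mpix} Mpixel/s, {mtex} Mtexel/s, "
          f"{ddr_bandwidth_gb_s(mem):.2f} GB/s")
```

Under these assumptions the GeForce2 Pro gets about 4.0 bytes of peak bandwidth per texel (6.4 GB/s for 1600 Mtexel/s), while the RADEON 7500 in two-texture mode gets about 6.3 (7.36 GB/s for 1160 Mtexel/s), before HyperZ savings are even counted.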
According to the documentation, the sample board with the RADEON 7500 rev.A12 runs at a 290 MHz core frequency and a 230 MHz DDR memory frequency. But ATI's track record shows that production cards may run at lower frequencies than pre-production samples. Fortunately, ATI has abandoned synchronous core and memory clocks, so a lower chip speed won't affect the memory. This is the first GPU running at such a high frequency. However, the 0.15 micron process and a modest transistor count made it possible to use a relatively simple fan cooler: Such coolers are nothing new on the market; ADDA coolers are often installed on cards from Creative and other companies. Also note that the latest versions of the PowerStrip utility support the RADEON 7500/8500, so it can help you check the chip frequencies (290/230 (460) MHz) and overclock the card. The left side of the PCB has three connectors: VGA, DVI and TV-out. The RADEON VE has the same set (these cards ship with a DVI-to-VGA adapter). Let's compare the left parts of these cards: The RADEON 7500 is on the left, and the RADEON VE is on the right. Despite the similar placement of the connectors, the design has changed considerably, first of all in the logic components responsible for 2D quality. Besides, the PCB is larger. We tested output to two monitors (we did not test an LCD panel). This technology is similar to that of the Matrox G400/G450/G550 and the NVIDIA GeForce2 MX TwinView. An image can be displayed on two monitors (either the same image on both or an extended desktop): or on a monitor and a TV screen simultaneously (the TV resolution can be as high as 1024X768): The settings allow you to center the image and change its size. You can also swap the primary and secondary displays. The card ships both in a retail package and as an OEM product.
I don't know what accessories will come with the card, but I hope the bundle won't be worse than the Sample Kit:

Overclocking

With additional cooling the ATI RADEON 7500 reached 320/270 (540) MHz! Previously, 4 ns memory had never overclocked higher than 260 MHz!

Note:
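To put the overclocking result in proportion, here is a minimal sketch that turns the clocks quoted above into percentage gains. All clocks come from the review; the only derived number is the memory rating, taken as 1 / 4 ns = 250 MHz as in the card description.

```python
# Overclocking headroom of the sample card, using the review's clocks.
# The memory rating assumes clock = 1 / access time (4 ns -> 250 MHz).

def rated_clock_mhz(access_time_ns: float) -> float:
    """Clock implied by the memory access time: 1000 / ns gives MHz."""
    return 1000.0 / access_time_ns


def gain_percent(base_mhz: float, reached_mhz: float) -> float:
    """Relative increase of reached_mhz over base_mhz, in percent."""
    return (reached_mhz / base_mhz - 1.0) * 100.0


mem_rating = rated_clock_mhz(4.0)  # 250 MHz (500 MHz effective DDR)
print(f"core:   290 -> 320 MHz, +{gain_percent(290, 320):.1f}%")
print(f"memory: 230 -> 270 MHz, +{gain_percent(230, 270):.1f}%")
print(f"memory vs. 4 ns rating: +{gain_percent(mem_rating, 270):.1f}%")
```

This prints gains of about 10.3% for the core, 17.4% for the memory, and 8.0% above the nominal 4 ns rating.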

Installation and drivers

Test system:

Plus ViewSonic P810 (21"), NOKIA 447Xav (17") and ViewSonic P817 (21") monitors. During the tests we used ATI drivers v7.184. VSync was off, S3TC was enabled, and the Z-buffer depth was set to the maximum. For comparison we used the following cards:

Test results

2D quality is traditionally high. At 1600X1200, 85 Hz you can work comfortably with a high-quality monitor. To evaluate 3D performance and quality we used several tests:

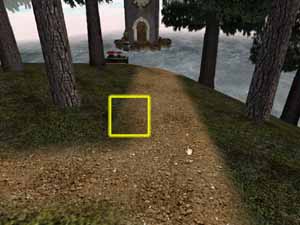
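The 2D note above (1600X1200 at 85 Hz) and the two 350 MHz RAMDACs can be related through the pixel clock: every scanned position per frame, visible or blanking, costs one clock. A minimal sketch, assuming the VESA DMT timing for 1600x1200 (2160 x 1250 total pixels per frame); the refresh ceiling it prints is a theoretical RAMDAC limit, not something a monitor must support.

```python
# Pixel clock sketch: clock = horizontal total x vertical total x refresh rate.
# ASSUMPTION: VESA DMT totals for 1600x1200 (2160 x 1250, including blanking).

def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock in MHz needed to scan h_total x v_total positions refresh_hz times a second."""
    return h_total * v_total * refresh_hz / 1e6


def max_refresh_hz(ramdac_mhz: float, h_total: int, v_total: int) -> float:
    """Highest refresh rate a RAMDAC of the given speed could drive at these totals."""
    return ramdac_mhz * 1e6 / (h_total * v_total)


clk = pixel_clock_mhz(2160, 1250, 85)
print(f"1600x1200 @ 85 Hz: {clk:.1f} MHz, {clk / 350:.0%} of a 350 MHz RAMDAC")
print(f"RAMDAC ceiling at the same totals: {max_refresh_hz(350, 2160, 1250):.0f} Hz")
```

So the mode the review recommends uses roughly two thirds of each RAMDAC's capacity.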

Quake3 Arena

demo002, standard modes

The tests were carried out in two modes: Fast (16-bit color) and High Quality (32-bit color).

Tests of the Athlon 1200 MHz based system

Tests of the Pentium4 1500 MHz based system

In 16-bit color the RADEON 7500 falls far behind its NVIDIA competitors, but in 32-bit color it catches up with the GeForce2 Pro at 1024X768 and, when overclocked, draws level with the GeForce2 Ultra. Given the power of these cards, I can state that 32-bit color will prevail in 3D.

demo002, highest quality and load modes

Geometry and texture detail levels were set to the maximum, and the objects were made extremely complex through curved surfaces (r_subdivisions "1" r_lodCurveError "30000").

Tests of the Athlon 1200 MHz based system

Tests of the Pentium4 1500 MHz based system

Here the RADEON 64 MBytes DDR lags far behind (scenes with certain "curved" objects were processed quite slowly).

demo002, anti-aliasing and anisotropic filtering tests

These functions were already present in the RADEON GRAPHICS. First, let's look at the performance with them enabled:

Tests in the Pentium4 1500 MHz based system

Even at the highest anisotropy degree the performance drop is small, so there is no point in considering intermediate levels (in the registry you can find the values 4 and 16, which correspond to the High and Highest levels; I think these are the maximum numbers of texture samples used for anisotropy in each case). What about the quality with anisotropy enabled?

Bilinear filtering

Trilinear filtering

Anisotropic filtering (with trilinear enabled)

The quality is undoubtedly higher. But trilinear filtering doesn't work together with anisotropy (the borders between MIP levels are clearly visible), so you have to choose either trilinear or anisotropic filtering. Besides, anisotropic filtering cannot be forced in Direct3D.
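Why the MIP borders become visible once trilinear filtering is lost can be shown with a toy model of mip-level selection. This is a simplified sketch, not ATI's hardware logic: `lod` stands for log2 of the texels covered per screen pixel, level 0 is the full-size texture, and the function names are hypothetical.

```python
import math

# Toy model of mip-level selection (hypothetical helpers, not ATI's logic).
# lod = log2(texels per screen pixel); level 0 is the full-size texture.

def bilinear_mip_weights(lod: float, max_level: int) -> dict[int, float]:
    """Bilinear with mipmaps: the nearest level gets all the weight."""
    level = min(max(math.floor(lod + 0.5), 0), max_level)
    return {level: 1.0}


def trilinear_mip_weights(lod: float, max_level: int) -> dict[int, float]:
    """Trilinear: blend the two neighbouring levels by the fractional part of lod."""
    lod = min(max(lod, 0.0), float(max_level))
    lower = math.floor(lod)
    frac = lod - lower
    if frac == 0.0:
        return {lower: 1.0}
    return {lower: 1.0 - frac, lower + 1: frac}


# Moving into the distance, lod grows smoothly across the 0/1 level boundary.
for lod in (0.40, 0.49, 0.51, 0.60):
    print(lod, bilinear_mip_weights(lod, 8), trilinear_mip_weights(lod, 8))
```

Bilinear switches abruptly from level 0 to level 1 between lod 0.49 and 0.51, which shows up on screen as a border; trilinear weights change smoothly there, so no seam appears.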
Giants

Here the RADEON again loses in 16-bit color and wins in 32-bit color. The game is obviously optimized for 3DNow!, which is why even the 1200 MHz Athlon beats the 1500 MHz Pentium4. Considering that Giants is a single-player game that doesn't require hundreds of fps, the RADEON 7500 suits it excellently in 32-bit color.

3DMark2001

Apart from running the standard tests at the rated and increased frequencies, I tried to find out whether the RADEON 7500 is just an overclocked RADEON GRAPHICS. So I raised the clocks of the RADEON 64 MBytes DDR to 200/200 (400) MHz (at higher frequencies the card hung) and lowered those of the RADEON 7500 to 218/200 (400) MHz (PowerStrip refused to lower the core frequency any further). Such a small difference shouldn't seriously affect the analysis, and, as you will see, the tests confirmed it (see the hatched columns in each diagram).

3DMarks

The test clearly shows that the RADEON 7500 is simply a great card.

Game1, Low details

Game1 is a car-racing scene in which you can also practice shooting. There are a lot of effects and detailed objects. In 16-bit color the RADEON 7500 is far behind, while in 32-bit color it is among the leaders. The overclocked RADEON beats even the GeForce2 Ultra!

Game1, High details

This is rather a processor test because the scenes are so complex. In 16-bit color all cards are on a par, and in 32-bit color the RADEON 7500 is again ahead. And what about the quality? It is excellent!

Game2, Low details

This scene has a high overdraw factor, and traditional accelerators without Z-buffer optimizations perform a lot of unnecessary operations (they draw many invisible surfaces). The RADEON 7500 leads in both 16-bit and 32-bit color.

Game2, High details

Here the ATI RADEON gives its competitors a real thrashing!
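The Game2 remark about drawing invisible surfaces can be illustrated with a toy overdraw model for a single pixel covered by several opaque surfaces. This is a simplified sketch of early depth rejection, one idea behind HyperZ-style optimizations; it is not ATI's implementation, and the function names are hypothetical. With a random draw order and distinct depths, the k-th surface is the nearest so far with probability 1/k, so early rejection textures on average 1 + 1/2 + ... + 1/N fragments instead of N.

```python
import random

# Toy overdraw model for one pixel (hypothetical helpers, not HyperZ itself).
# Smaller depth = nearer to the viewer.

def shaded_fragments(depths: list[float], early_z: bool) -> int:
    """Count fragments that get textured, in submission order."""
    z_buffer = float("inf")
    shaded = 0
    for z in depths:
        visible = z < z_buffer
        if early_z and not visible:
            continue  # hidden fragment rejected before any texel is fetched
        shaded += 1   # texturing work is spent on this fragment
        if visible:
            z_buffer = z  # nearest surface so far passes the depth test
    return shaded


def average_shaded(overdraw: int, pixels: int, early_z: bool, seed: int = 1) -> float:
    """Average textured fragments per pixel for surfaces drawn in random order."""
    rng = random.Random(seed)
    total = 0
    for _ in range(pixels):
        depths = [rng.random() for _ in range(overdraw)]
        total += shaded_fragments(depths, early_z)
    return total / pixels


print("without early Z:", average_shaded(4, 100_000, early_z=False))
print("with early Z:   ", average_shaded(4, 100_000, early_z=True))
```

With an overdraw of 4, the first line is exactly 4.0 and the second lands close to 2.08. Drawing front to back would reduce the early-Z count to one fragment per pixel, while back to front is the worst case.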
Now look at the quality

Game3, Low details

Here the RADEON 7500 and the NVIDIA GeForce2 Pro perform equally well in 16-bit color, and the former outscores the others in 32-bit color.

Game3, High details

When the scene is loaded with various effects and more detailed objects, the RADEON 7500 loses in 16-bit color and doesn't shine in 32-bit color. But this was expected given the fillrate difference and the small overdraw. Now look at the quality

The only thing that disappointed me is a cloud around the gun after shooting. Max Payne is very close to this scene, as it is based on the same engine. Let's look at how the RADEON 7500 does there. On the whole it is OK, but there are problems with fog: it is banded and looks unnatural. Another artifact concerns texturing in REAL MYST:

We found no other faults, but if we come across any later, we will report them in our 3Digest, where you can also compare the performance of the RADEON 7500 with other cards in various tests. In closing, I should add that the software bundled with the card includes ATI's DVD player, which shows the excellent image quality of the RADEON 7500. CPU utilization doesn't exceed 18%.

Conclusion

This RADEON 7500 based card is positioned as a mid-range solution. It will suit many users, both for work in various applications and for games. The RADEON 7500 competes successfully against the NVIDIA GeForce2 Pro, sometimes even outscoring it in 32-bit color. It carries two 350 MHz RAMDACs and can display an image on two monitors at almost any resolution (up to 2048X1536 inclusive). ATI's programmers have at last produced solid drivers. The RADEON 7500 is an excellent fit for users who want 3D performance comparable to the GeForce2 Pro (see our 3Digest) and who also need HydraVision. Price will be the deciding factor for many, but I hope the card won't be overpriced.
Suggested price: $179 in the US and $159 in France.

Highs:

Lows:



Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.