
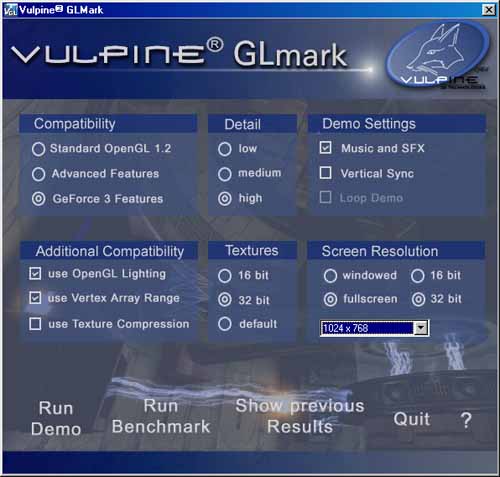

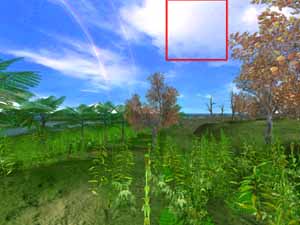

Recently we examined the NVIDIA GeForce3 GPU and the video cards based on it. Having complained about the lack of software able to use all the capabilities of the new processor, we tested the GeForce3 with the already classic Quake3 (OpenGL) and 3DMark 2000 (DirectX). The new capabilities of the GeForce3 were checked with the examples from the DirectX 8.0 SDK.

Right after the release of this chipset MadOnion, the developer of 3DMark 2000, promised to release a grand new benchmark, 3DMark 2001, which would exercise all the capabilities and advantages of DirectX 8.0. MadOnion has shown impressive screenshots from the beta version and released a video clip showing the program in demo mode. The release date is being kept secret; we hope it will be at CeBIT 2001.

However, a German company, Vulpine, which develops 3D rendering engines based on its Vulpine Vision technology, has released the Vulpine GLMark benchmark based on one of its engines, the Vulpine Vision Game Engine. It is built on OpenGL. Let's see whether the young company can compete against the venerable MadOnion with dignity. Note that the highlight of GLMark is its full support for the NVIDIA GeForce3, i.e. a demonstration of vertex and pixel shaders, as well as bump mapping via Dot3 or EMBM (in all cases the appropriate OpenGL extensions are used).

Vulpine GLMark

Currently only the 1.1 technology version of the product is available, so make allowance for this fact if you run into failures.

So, you have the main menu of the program in front of you. I think it is clear what should be chosen; I only want to make a few recommendations.
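As a side note on the OpenGL extensions mentioned above: a driver reports what it supports as a space-separated extension string, and a program can look for specific names in it. The review does not say which extensions GLMark actually queries, so the mapping below is only an assumption based on publicly registered extension names, and `detect_features` is a hypothetical helper, not part of GLMark. A minimal sketch:

```python
# Illustrative mapping only: which extensions GLMark really uses is NOT
# stated in the review. The names are registered OpenGL extensions; the
# grouping into "features" is an assumption made for this sketch.
FEATURE_EXTENSIONS = {
    "vertex programs": ["GL_NV_vertex_program"],
    "texture shaders": ["GL_NV_texture_shader"],
    "Dot3 bump mapping": ["GL_EXT_texture_env_dot3", "GL_ARB_texture_env_dot3"],
    "environment-mapped bump mapping (EMBM)": [
        "GL_NV_texture_shader",
        "GL_ATI_envmap_bumpmap",
    ],
    "S3TC texture compression": ["GL_EXT_texture_compression_s3tc"],
    "anisotropic filtering": ["GL_EXT_texture_filter_anisotropic"],
}


def detect_features(extension_string):
    """Return {feature: bool} for a space-separated extension string.

    The string is split into whole tokens first, so a name such as
    GL_NV_vertex_program1_1 is not mistaken for GL_NV_vertex_program.
    """
    present = set(extension_string.split())
    return {
        feature: any(name in present for name in names)
        for feature, names in FEATURE_EXTENSIONS.items()
    }


if __name__ == "__main__":
    # Made-up extension string for demonstration, not taken from a real driver.
    sample = (
        "GL_ARB_multitexture GL_EXT_texture_env_dot3 "
        "GL_EXT_texture_compression_s3tc GL_EXT_texture_filter_anisotropic"
    )
    for feature, ok in detect_features(sample).items():
        print(f"{feature}: {'yes' if ok else 'no'}")
```

In a real program the string would come from the driver (for example via `glGetString(GL_EXTENSIONS)` once a rendering context exists); here it is passed in as plain text so the sketch runs without a video card.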

GLMark can store its results in a database where you can always check them afterwards.

Unfortunately, the program chooses the storage folders itself, and sometimes everything gets mixed up, since GLMark measures the CPU frequency from time to time and puts this frequency into the folder name. The clock speed measurement has a tolerance (for example, 1000 MHz can be measured either as 999 MHz or as 1001 MHz), so the results of the same video card can end up in different folders. Moreover, the folders are named after the OpenGL ICD driver and not after the video card, so, for example, test results for the GeForce2 GTS/Pro/Ultra cards will be put into the same folder even though the cards are different.

Testing conditions

Testbed configuration:

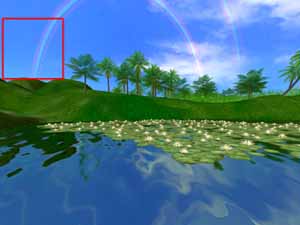

We examined the NVIDIA GeForce3 using the Leadtek WinFast GeForce3 card and took the following cards for comparison: Leadtek WinFast GeForce2 Ultra and ATI RADEON 64 MBytes DDR (183 MHz). For the NVIDIA-based cards we used the 10.70 drivers, and for the RADEON the 7.078 drivers from ATI.

Test results

Let's start with the overall performance in High Detail mode.

The absolute FPS values are rather low. That is not strange, since the scenes are rather complex, especially in this mode. In general, the results follow those from our first GeForce3 review: the performance drop when moving from 16-bit to 32-bit color is insignificant, and in 16-bit color the GeForce3 lags slightly behind the GeForce2 Ultra.

I took the ATI RADEON 64 MBytes DDR card since it is the only one apart from the NVIDIA GeForce3 that supports new capabilities such as bump mapping. But its performance results are very sad. I think it is better to leave the RADEON performance results aside, since there is nothing to be proud of (the reason lies in GLMark's lack of support for a series of ATI OpenGL ICD extensions rather than in the capabilities of the RADEON itself).

What do the Low, Medium and High Detail items mean? Let's look at the screenshots, where you can see Low Detail mode on the left and Medium on the right:

And now comes High Detail mode:

It is clear that the modes differ in the richness of the objects in the scene, which is very similar to 3DMark 2000. How strongly does performance depend on the scene complexity?

The results are impressive. Both cards, the GeForce3 and the GeForce2 Ultra, showed sharp leaps in performance as the number of objects in the scene decreased. Unfortunately, we do not know the number of polygons at each complexity level, so it is hard to judge how GPU performance in GLMark depends on the polygon count. Just note that High Detail is the level of future games.

The diagrams shown at the beginning of the performance analysis demonstrate how much performance anti-aliasing costs. We have already discussed a new type of AA, called Quincunx, which provides an excellent compromise between quality and speed.

And below you can see a shot with 4X AA (2x2) enabled.

There are no visible differences in quality between the heavier 4X AA and Quincunx, yet the former causes a larger speed drop.

Now let's turn to anisotropic filtering on the GeForce3 and what it gives in GLMark. All the following tests were carried out in 32-bit color.

Do not be upset by such a performance drop when anisotropy is enabled, especially in the heaviest mode with 32 texture samples. Let's check in practice what the different filtering modes give.

Here you can see 8-sample anisotropy:

Anisotropic filtering is off:

Anisotropic filtering is on (8 samples):

And here is 16-sample anisotropy:

Anisotropic filtering is off:

Anisotropic filtering is on (16 samples):

And now 32-sample anisotropy:

Anisotropic filtering is off:

Anisotropic filtering is on (32 samples):

It is clear that you can tell one filtering type from another only on a flat surface stretching far into the distance (a floor, for example). So why pay so much? I think that in most cases 8-sample anisotropic filtering is enough.

Before turning to the image quality of all three cards, I want to draw your attention to S3TC technology. As I have already said, GLMark compresses textures automatically when S3TC is enabled. Quake3 showed what drawbacks are typical of GeForce-based cards in 32-bit color. Let's see what happens in GLMark.

The first example:

S3TC is off:

S3TC is on:

The second example:

S3TC is off:

S3TC is on:

Well, yes, there are some imperfections, but remember that using S3TC always implies some loss in quality. The losses will be minimal, and S3TC will do good rather than harm, only if the game uses textures that were specially produced and compressed in advance. And in our case the first diagram shows that enabling S3TC in GLMark yields little:

Possibilities and quality

Here we will show what all three video cards are capable of, first of all the NVIDIA GeForce3.

The first example of bump mapping (EMBM):

ATI RADEON

NVIDIA GeForce2 Ultra

NVIDIA GeForce3

The second example of bump mapping (EMBM):

ATI RADEON

NVIDIA GeForce2 Ultra

NVIDIA GeForce3

It is clear that EMBM is implemented very well in GLMark, and the NVIDIA GeForce3 supports this technology excellently. Keep in mind that the GeForce2 simply cannot do it, so there is nothing surprising in such dull water. It is unclear, though, why the RADEON did not manage to realize its potential. There can be only two reasons:

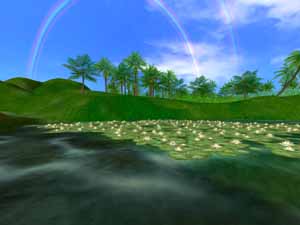

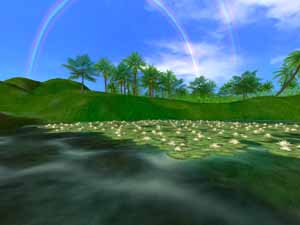

Here are some more vivid examples:

ATI RADEON

NVIDIA GeForce2 Ultra

NVIDIA GeForce3

Again the water is more realistic on the GeForce3. Besides, the RADEON has some obvious trouble rendering lighting effects (the NVIDIA GeForce2 Ultra could not reach the GeForce3 level either).

The first example:

ATI RADEON

NVIDIA GeForce2 Ultra

NVIDIA GeForce3

The second example:

ATI RADEON

NVIDIA GeForce2 Ultra

NVIDIA GeForce3

Conclusion

Now let's draw a conclusion. It is short but to the point:


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.