The first game tests of the NVIDIA GeForce 9600 GT were surprising: its results came very close to those of the more powerful GeForce 8800 GT. We did expect the new G94 to be fast and well balanced in its main parameters (number of execution units, memory bandwidth, etc.). Still, in those first game tests the GeForce 9600 GT almost caught up with the GeForce 8800 GT, even though the latter has more TMUs and ALUs. The 9600 GT's clock rate is not much higher than that of the G92-based card, and its video memory bandwidth is the same. So why is the G94-based card so strong? What about the almost twice as many TMUs and ALUs in G92 (exactly twice as many when comparing the GPUs themselves, while the GeForce 8800 GT has only 75% more)? Do they make no difference in modern games, with rendering speed limited mostly by fill rate (ROP count and clock), by video memory bandwidth, or by both? Part of G94's advantage in the first tests can be explained by the different driver versions used for the older and newer cards. But under equal conditions the GeForce 8800 GT is on average still only 15% faster than the GeForce 9600 GT, much less than its 75% advantage in the number of units. We decided to find out which parameters affect performance in modern games the most. Besides, it has been a long time since we last analyzed how CPU, GPU, and video memory clock rates affect performance. So we tested a G92-based graphics card to determine the main rendering bottlenecks in modern games.

Testbed configuration and settings

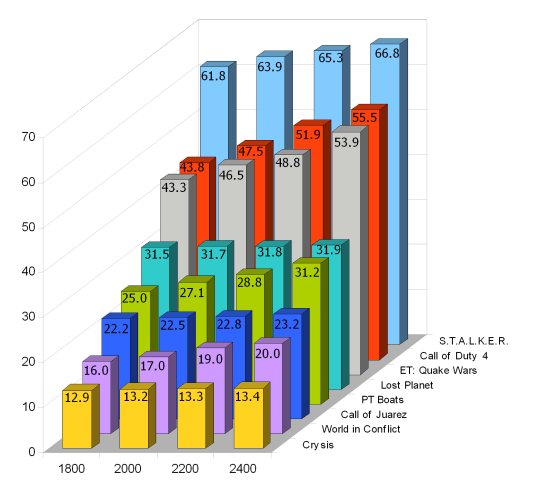

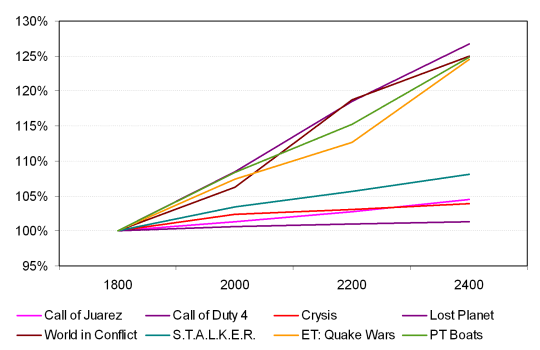

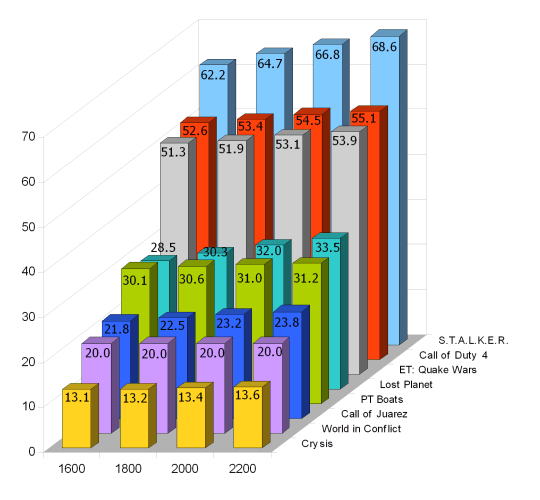

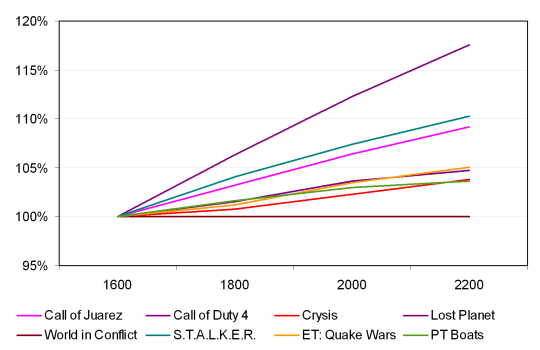

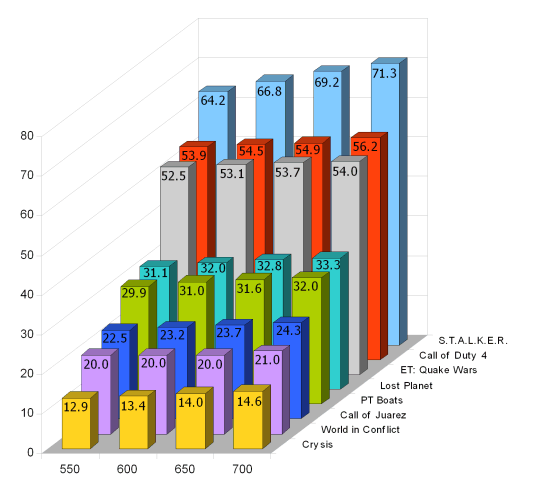

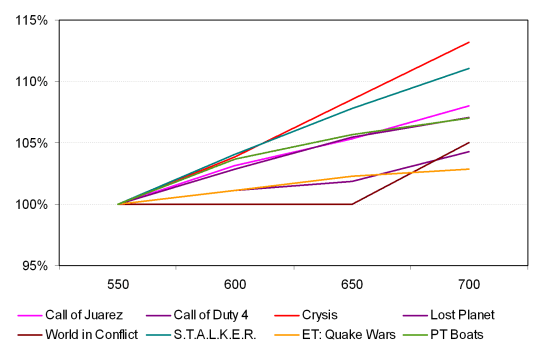

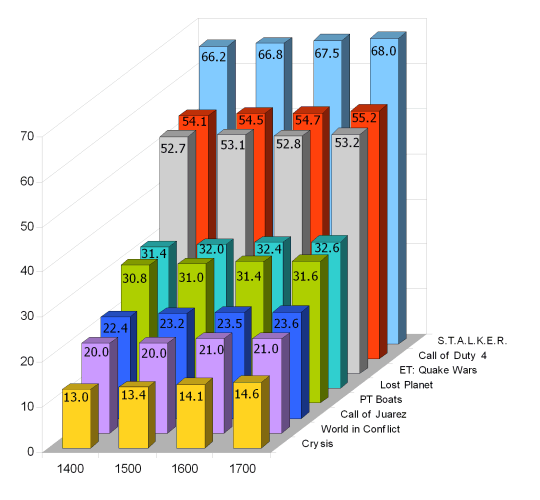

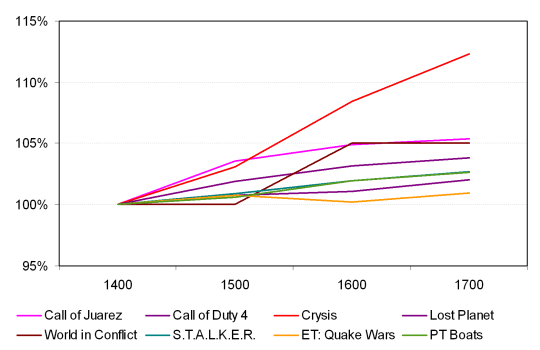

We used only one video mode: the most popular resolution, 1280x1024 (or 1280x960 in games that do not support the former), with 4x MSAA and 16x anisotropic filtering. All features were enabled in the game options; nothing was changed in the video driver's control panel. Our set of game tests includes many recent titles with built-in benchmarks. We gave preference to new games that support Direct3D 10 or use interesting new 3D techniques. Here is the full list of games we used: Crysis 1.2 (DX10), Call of Juarez 1.1.1.0 (DX10), S.T.A.L.K.E.R.: Shadow of Chernobyl 1.0005, Lost Planet: Extreme Condition 1.004 (DX10), PT Boats: Knights of the Sea DX10 benchmark, Call of Duty 4 1.5 (multiplayer), Enemy Territory: Quake Wars (OpenGL), World in Conflict 1.006 (DX10). We used the latest version of RivaTuner to change the graphics card's clock rates.

Test Results

Dependence of performance on CPU clock rate

To determine how rendering speed depends on CPU clock rate, we changed the clock rate of our Athlon 64 X2 4600+ from 1800 MHz to 2400 MHz in 200 MHz steps. These frequency changes should produce corresponding performance changes in games whose speed is limited primarily by the CPU. Of course, keep in mind our rendering resolution and the enabled anisotropic filtering and antialiasing: in other modes the picture will be different. We obtained the following results:

We can easily single out the games whose rendering speed depends strongly on CPU clock rate: Call of Duty 4, Enemy Territory: Quake Wars, the PT Boats: Knights of the Sea benchmark, and World in Conflict. Other applications are also limited by the CPU to some degree, but much less; S.T.A.L.K.E.R. is one example. The remaining games are limited by the graphics card throughout. We will also present this diagram in a different form, with the performance of the testbed at a CPU clock of 1800 MHz taken as 100%.
Values above 100% show the relative FPS gain. Here is how it looks:

This confirms our conclusions about the most CPU-intensive games. Interestingly, the games clearly fall into two groups: those that depend strongly on CPU speed and those that don't. As the CPU clock rate grows by one third (from 1800 to 2400 MHz), frame rates in the first group grow by roughly one fourth, and in the second group by only 5-7%. So we can conclude that for half of the selected games, our testbed CPU is not powerful enough to benchmark graphics cards: these modern games are limited by CPU performance. However, the most demanding games in our tests, such as Crysis and Call of Juarez, have relatively low CPU requirements; their speed depends more on the graphics card. Now let's analyze the parameters of the graphics card itself.

Dependence of performance on video memory frequency

We examine how performance scales with video memory frequency by gradually changing its effective clock rate (double the physical clock for GDDR3) from 1600 MHz to 2200 MHz in 200 MHz steps. The actual frequencies are not exactly these round values, but they are close enough that the difference can be neglected. These changes should gradually alter performance in games whose speed is limited mostly by video memory bandwidth or effective fill rate, which amounts to the same thing when performance is bandwidth-limited. The conditions are the same: 1280x1024, maximum anisotropic filtering, and 4x MSAA; resolution and antialiasing strongly affect a game's memory bandwidth requirements. We obtained the following results:

We can clearly see the applications that depend strongly on memory bandwidth and effective fill rate (that is, on video memory frequency). The leader is Lost Planet, followed by S.T.A.L.K.E.R. and Call of Juarez.
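The effective clock figures translate directly into peak memory bandwidth. A minimal sketch, assuming the reference GeForce 8800 GT's 256-bit memory bus (the bus width is not stated in this article); the constant and function names are illustrative:

```python
# Peak memory bandwidth from the effective (double-data-rate) clock.
# Assumption: a 256-bit memory bus, as on the reference GeForce 8800 GT;
# the bus width is not given in the article.

BUS_WIDTH_BITS = 256  # assumed bus width


def peak_bandwidth_gbs(effective_mhz: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes per second).

    effective_mhz is the "effective" GDDR3 clock, i.e. twice the physical
    memory clock, so it already equals millions of transfers per second.
    """
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # The sweep used in the test: 1600 to 2200 MHz effective, 200 MHz steps.
    for mhz in range(1600, 2201, 200):
        print(f"{mhz} MHz -> {peak_bandwidth_gbs(mhz):.1f} GB/s")
```

Under that assumption the sweep spans 51.2 to 70.4 GB/s, a 37.5% increase, which matches the "almost 40%" increase cited for this test.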
Let's look at the same data in a more convenient form: relative frame rates at each video memory frequency, with the average frame rate at 1600 MHz taken as 100%:

Lost Planet's dependence on memory bandwidth and fill rate becomes even more apparent: as video memory frequency grows by almost 40%, its frame rate rises by almost 20%. Under our conditions (1280x1024, 4x MSAA), the other games depend on memory bandwidth even less: two games gain 10% from the higher bandwidth, four more gain 4-5%, and one shows no dependence on fill rate or memory bandwidth at all. World in Conflict most likely depends slightly on video memory performance as well, but its built-in benchmark reports average frame rates only as whole numbers, without tenths. The conclusion of this section is simple: in our test mode, the performance of most modern 3D games depends very little on video memory frequency, and consequently on effective fill rate and memory bandwidth. Only a few titles are really limited by memory performance, and even there, changes in memory bandwidth do not yield proportional performance gains. That is, none of the games is fully limited by memory bandwidth.

Dependence of performance on GPU clock rate

Now we evaluate how the average frame rate scales with the clock rate of G92, the GPU used in our GeForce 8800 GT. The shader domain frequency remains unchanged; we analyze it separately below. We changed the GPU clock rate from 550 MHz to 700 MHz in 50 MHz steps. The exact target frequencies could not be set, but the closest available values we used differ from them by no more than 1-2%.
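The text describes the four clock sweeps in relative terms ("one third", "almost 40%", "more than one fourth", "more than 20%"). A few lines of Python, using only the end points quoted in this article, check those figures; the dictionary and function names are illustrative:

```python
# Relative size of each clock sweep in the article (first to last setting).
SWEEPS_MHZ = {
    "CPU (Athlon 64 X2)": (1800, 2400),
    "Video memory (effective)": (1600, 2200),
    "GPU core": (550, 700),
    "Shader domain": (1400, 1700),
}


def percent_increase(low: float, high: float) -> float:
    """Percent increase from low to high."""
    return (high / low - 1.0) * 100.0


for name, (low, high) in SWEEPS_MHZ.items():
    print(f"{name:26s} {low}->{high} MHz: +{percent_increase(low, high):.1f}%")
```

The results are +33.3% (CPU), +37.5% (memory), +27.3% (GPU core), and +21.4% (shader domain). Because the actual GPU and shader clocks could deviate from the targets by a percent or two, these are nominal values.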
Changes in GPU frequency affect performance in games whose rendering speed is limited mostly not by video memory bandwidth and effective fill rate, but by texturing (TMUs), raster operations and blending (ROPs), and other operations performed by the main part of the GPU (triangle setup, input assembler, etc.). Again, we are speaking solely about 1280x1024 with anisotropic filtering enabled (important, because we also evaluate the dependence on TMU performance) and antialiasing enabled (which affects ROP performance).

Interestingly, the most GPU-dependent games are Crysis and S.T.A.L.K.E.R., followed by Call of Juarez, PT Boats, and Lost Planet. The least affected are games based on relatively old engines, plus World in Conflict, which seems to be CPU-limited. To verify these conclusions, here are the relative performance values, with the average FPS at a GPU clock of 550 MHz taken as 100%:

Indeed, Crysis scales best with GPU frequency, followed by S.T.A.L.K.E.R. This time there are three groups: games highly dependent on GPU clock rate (the two above), moderately dependent ones (Call of Juarez, Lost Planet, and the PT Boats benchmark), and weakly dependent ones (all the others). Notably, when the GPU clock rate is raised by more than one fourth, the largest performance gain we recorded is only 11-12%, and the typical gain is 3-7%. Our conclusion: although the GeForce 8800 GT is limited by TMUs, ROPs, and other units at our test resolution with anisotropic filtering and antialiasing enabled, the effect is not very strong. Rendering performance is more often limited by the CPU and memory bandwidth than by texturing and rasterization performance. However, we still need to evaluate how FPS depends on the clock rate of the stream processors.
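A convenient single number for comparing such results is a scaling efficiency: the relative FPS gain divided by the relative clock gain. This is an illustrative heuristic, not part of the testing methodology described here, and the function name is hypothetical. Applied to the GPU figures quoted above (relative FPS with the 550 MHz result taken as 100%):

```python
def scaling_efficiency(base_clock: float, new_clock: float,
                       base_fps: float, new_fps: float) -> float:
    """Relative FPS gain divided by relative clock gain.

    1.0 means frame rate grows in proportion to the clock (as if that
    domain were the sole bottleneck); 0.0 means the clock change has no
    effect at all.
    """
    clock_gain = new_clock / base_clock - 1.0
    if clock_gain <= 0:
        raise ValueError("new_clock must be higher than base_clock")
    fps_gain = new_fps / base_fps - 1.0
    return fps_gain / clock_gain


# Gains quoted in the text for the 550 -> 700 MHz GPU sweep, expressed as
# relative FPS with the 550 MHz result taken as 100%.
for label, relative_fps in [("best case, +12%", 112.0),
                            ("typical, +7%", 107.0),
                            ("typical, +3%", 103.0)]:
    eff = scaling_efficiency(550, 700, 100.0, relative_fps)
    print(f"{label:16s} efficiency = {eff:.2f}")
```

The best case comes out at about 0.44 and the typical cases at about 0.11-0.26, far from the 1.0 that a game scaling in full proportion to the GPU core clock would show.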
Dependence of performance on GPU stream processor clock rate

We evaluate how rendering speed scales with stream processor performance by gradually changing their frequency, also known as the shader domain frequency, from 1400 MHz to 1700 MHz in 100 MHz steps. The shader domain frequency cannot be set with fine granularity, but all test values were within a couple of percent of the target frequencies. This range should show how 3D rendering speed scales in games whose FPS is limited mostly by arithmetic operations in vertex and pixel shaders. In theory the same applies to geometry shaders, but games hardly use them on a large scale.

The picture here is simpler than with the clock rate of the GPU as a whole. When we adjust the shader processor frequency of G92, rendering speed changes significantly only in Crysis, which is genuinely limited by arithmetic operations in shaders (the dependence is almost linear; the game evidently needs shader power). All other games stay within 5%. This is easy to see in the second diagram, which shows relative performance, with rendering speed at a shader domain frequency of 1400 MHz taken as 100%:

No game stands out except Crysis. We might also mention the only OpenGL game, Enemy Territory: Quake Wars, whose performance practically does not depend on shader processor speed. The other games did not gain much from the higher shader clock either: when it was raised by more than 20%, their speed grew by only 2-5%. Crysis is the exception, of course, with an almost linear dependence and a 12% performance gain. The situation with World in Conflict is unclear because of the game's rather poor built-in benchmark, which displays only integer results. Our conclusion: only a few modern games are limited by vertex and pixel shader performance, or more precisely, by the arithmetic operations in them.
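One way to interpret the Crysis result, offered as an illustration rather than as part of the measurements, is an Amdahl's-law-style model: assume a fraction f of frame time scales inversely with the shader clock and the rest does not. For a clock ratio s the FPS ratio is then g = 1 / ((1 - f) + f / s), and solving for f gives f = (1 - 1/g) / (1 - 1/s). The model and the function name are assumptions; a real GPU overlaps work across its units, so f is only a rough indicator.

```python
def scaled_fraction(clock_ratio: float, fps_ratio: float) -> float:
    """Fraction f of frame time that scales with the clock (Amdahl-style model).

    Assumed model: new frame time T' = (1 - f) * T + f * T / clock_ratio,
    and fps_ratio = T / T'. Solving for f gives
    f = (1 - 1 / fps_ratio) / (1 - 1 / clock_ratio).
    """
    if clock_ratio <= 1.0:
        raise ValueError("clock_ratio must be greater than 1")
    return (1.0 - 1.0 / fps_ratio) / (1.0 - 1.0 / clock_ratio)


shader_ratio = 1700 / 1400  # shader domain sweep from the article
for label, gain in [("Crysis", 0.12), ("others, low", 0.02), ("others, high", 0.05)]:
    f = scaled_fraction(shader_ratio, 1.0 + gain)
    print(f"{label:12s} +{gain:.0%} FPS -> about {f:.0%} of frame time scales with shader clock")
```

Under this model, the 12% gain in Crysis corresponds to f of about 0.61, while the 2-5% gains of the other games correspond to roughly 0.11-0.27. A value above 1 would point to measurement noise or effects the model ignores.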
In general, performance under these conditions is more often limited by memory bandwidth, fill rate, texturing speed, and/or other GPU units than by shader ALUs. For now, exceptions are very rare.

Conclusions

Let's draw individual conclusions about each game:

And now let's draw the bottom line:

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.