
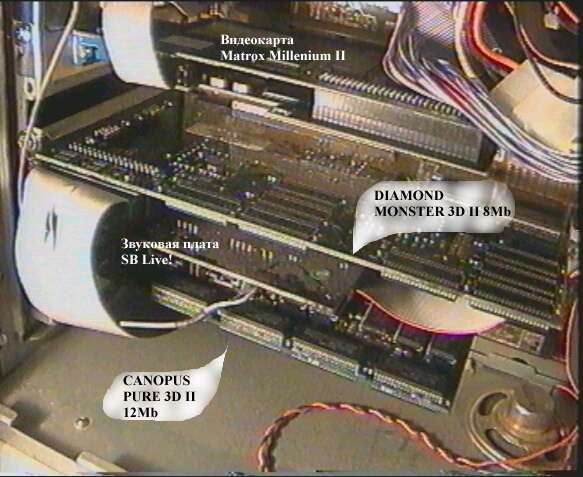

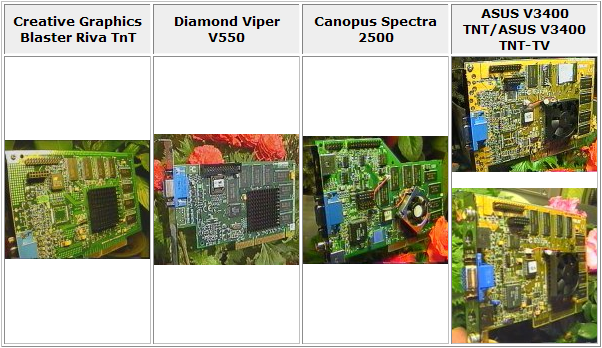

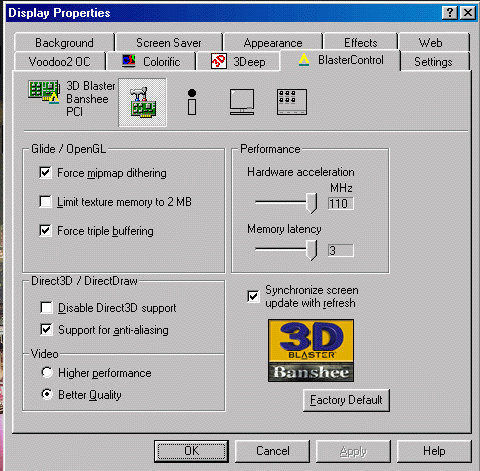

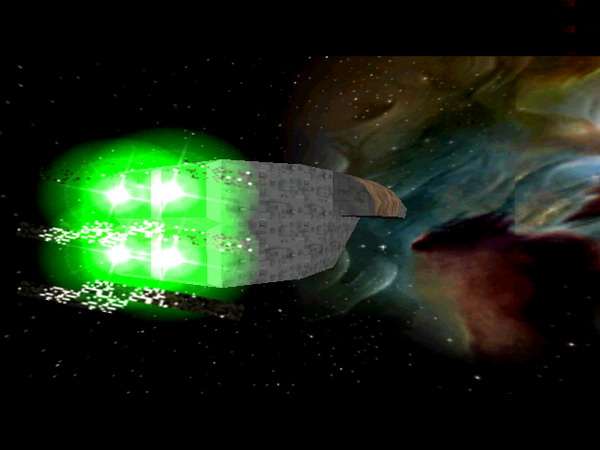

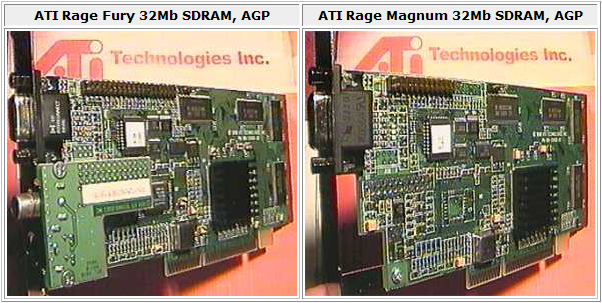

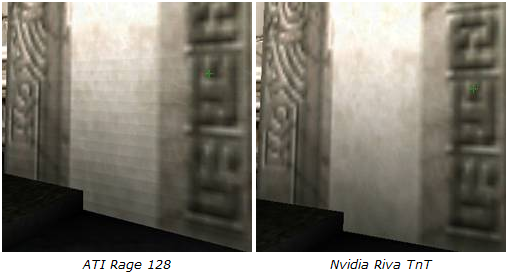

The year 1998... The key landmarks of 3D graphics had already been set, and a new era began. Winning over consumers became the main objective for the many companies that were actively seeking their place in the market. A flood of new products, polished marketing campaigns, and blockbuster 3D games: users had to face them all, although they had been happy enough with their 3Dfx games. A promising start. Tense competition between the "Elders" (ATI, Matrox, and S3) and the ambitious newcomers (3Dfx and NVIDIA) caused an avalanche of 3D games and had a positive effect on graphics card prices. Can you imagine a top graphics card for a tad more than $200, or a $150 card that is only 10-15% slower? That was why only the strongest companies could survive in this overheated market: those that could promptly fill it with their products and keep quality at a decent level. A token 3D accelerator was enough back in 1997, but in 1998 users already had to buy a full-fledged solution. This article is devoted to how each company coped with the rapidly evolving market and adapted to the competition.

3Dfx Voodoo2 and unreal beauty

The previous part mentioned that even 3Dfx had failed to come up with a full-fledged 2D/3D product that could overcome the restrictions of a standalone 3D accelerator card. Indeed, given the failure of the Voodoo Rush, the Voodoo2 was again designed as an add-on to an existing graphics card. It used old EDO memory, even though many competing products were already equipped with fast SDRAM/SGRAM. Nevertheless, the skepticism voiced about this at the beginning of 1998 proved unfounded: the chipset was very successful.

The new generation of Voodoo cards - Metabyte Wicked3D Voodoo2 12MB

The Voodoo2 chipset consisted of three chips, one PixelFX and two TexelFX, which allowed it to apply two textures simultaneously (multitexturing).
For example, the card could apply a wall texture and an additional texture (a light map or a blood stain) to a polygon fragment (part of a wall) in a single cycle. Multitexturing helped the 0.35-micron, 90 MHz solution from 3Dfx set a new record in texturing speed: 180 million texels (texel = texture element) per second. This approach naturally required close cooperation with game developers, and many new games were updated after the Voodoo2 launch to reveal the potential of the card. Even old but popular games were updated: the patched Quake I also supported multitexturing. The Glide API was also improved to provide a high enough level of compatibility with old games written for the first Voodoo Graphics; the mistakes of the Voodoo Rush had been taken into account. So Voodoo2 users could enjoy games written to support its technologies.

Unreal with its amazing graphics (800x600, 16-bit colors)

Without doubt, Unreal was one of the brightest games of that time: the new shooter from Epic MegaGames made quite a splash. Along with an unobtrusive plot, well-balanced levels, and intelligent enemies, Unreal offered striking graphics. 3Dfx and multitexturing contributed much to the original atmosphere of the game. In fact, only a Voodoo2 with 8MB or 12MB could provide a sufficient frame rate to play the game in 800x600. You could also play Unreal on its predecessor, the 3Dfx Voodoo, but only in 512x384. As for 3Dfx's rivals, they had a hard time: the first versions of Unreal did not support the universal DirectX and OpenGL APIs, only Glide and PowerVR, and the developers were in no hurry to add this support in their patches. Thus, Unreal was a breakthrough in graphics, an excellent game, a success for Epic, a tower of strength for 3Dfx and Glide, and a driving force behind Voodoo2 sales. And not only Voodoo2 sales: owners of Pentium (and even Pentium MMX) processors with less than 64 MB of memory were forced to upgrade their computers if they wanted to play Unreal.
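
The multitexturing figures quoted earlier in this section can be checked with simple arithmetic. The sketch below is only an illustration: the function names are made up for this example, and it assumes that every texture unit delivers exactly one texel per clock cycle, which is a peak figure and not a measured one.

```python
def peak_texel_rate_mtexels(core_mhz: int, texture_units: int) -> int:
    """Peak texturing speed in millions of texels per second.

    Assumption: every texture unit delivers one texel per clock cycle.
    """
    return core_mhz * texture_units


def passes_needed(texture_layers: int, texture_units: int) -> int:
    """Rendering passes needed to apply all texture layers to a polygon."""
    # Ceiling division: one pass can apply at most `texture_units` layers.
    return -(-texture_layers // texture_units)


if __name__ == "__main__":
    # Voodoo2 as described in the article: 90 MHz, two TexelFX chips.
    print(peak_texel_rate_mtexels(90, 2))
    # Wall texture + light map on a chip with two texture units.
    print(passes_needed(2, 2))
    # The same wall on a hypothetical chip with a single texture unit.
    print(passes_needed(2, 1))
```

With the article's numbers this gives 180 million texels per second, and it shows why a light-mapped wall costs one pass on two texture units but two passes on one.
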
Connecting two Voodoo2 cards into SLI

A special mention should be made of another new technology introduced with the Voodoo2: SLI. There is SLI and there is SLI (yes, it happens sometimes); we'll touch upon this in the next parts of this series. But you'll never guess what this technology did! 3Dfx SLI united two graphics processors to render 3D graphics together, providing an almost twofold performance increase, so that you could play games in very high resolutions. For example, you could play Unreal on Voodoo2 SLI in 1024x768, a fantastic resolution for those times. The next step for 3Dfx SLI was graphics cards with two sets of chips on a single PCB. These products were manufactured by Quantum3D, famous for its nonstandard approach to designing turnkey solutions. The Obsidian series included several products with two sets of Voodoo2 chips. The Obsidian X16 and X24 were equipped with 2x8MB and 2x12MB of video memory, respectively. They did not differ much in price, while the larger frame buffer was important for high resolutions. That was why the Obsidian X24 was popular among well-off gamers.

Quantum3D Obsidian X24: 24MB of memory, 2 x Voodoo2, huge dimensions

The old guard never says die: Matrox G200

Matrox was the first to fight back against 3Dfx. The company presented a whole series of products based on the MGA-G200 at the beginning of 1998. The 0.25-micron graphics chip did not offer multitexturing like the 3Dfx Voodoo2, and it couldn't process two pixels at once like the RIVA 128. Nevertheless, it supported high resolutions in 3D games (you could run several games even in 1280x1024), TrueColor (16 million colors instead of the 65 thousand colors of the Voodoo), and full-fledged trilinear filtering, which did not take up much of the GPU's resources. Retail cards usually came with 8MB of 100MHz SGRAM, which could be upgraded to 16MB (the top memory size at that time, although this record was broken very soon).
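
The color counts and the appetite for memory mentioned above both follow from the bit depth. Here is a rough sketch with illustrative function names; it assumes that TrueColor pixels are stored in 32 bits each and counts only a single color buffer, whereas a real 3D mode also needs a back buffer and a Z buffer.

```python
def color_count(color_bits: int) -> int:
    """Number of distinct colors that fit into the given bit depth."""
    return 2 ** color_bits


def color_buffer_bytes(width: int, height: int, storage_bits: int) -> int:
    """Memory taken by one color buffer at the given resolution."""
    return width * height * storage_bits // 8


if __name__ == "__main__":
    print(color_count(16))  # HighColor, the "65 thousand colors"
    print(color_count(24))  # TrueColor, the "16 million colors"

    mib = 2 ** 20
    # 1280x1024, the highest 3D resolution the article quotes for the G200.
    print(color_buffer_bytes(1280, 1024, 16) / mib)  # 16-bit storage
    print(color_buffer_bytes(1280, 1024, 32) / mib)  # assumed 32-bit storage
```

Under these assumptions a single 1280x1024 buffer takes 2.5 MB in 16-bit mode and 5 MB in TrueColor, which hints at why 8MB and 16MB cards mattered at high resolutions.
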
Matrox Graphics: one of the few chipmakers that manufactures all its graphics cards on its own

The 2D section of the card was also good (integrated 250MHz RAMDAC), so the image was sharp enough for 17" and 19" monitors. This set of features made the MGA-G200 a decent choice, but games were in no hurry to embrace the new Matrox card. It actually supported only the DirectX 6.0 API. OpenGL worked only through an emulator, which appeared after the products were released, and Glide was out of the question. This is not to say that the G200 couldn't show accelerated 3D graphics: you could run GLQuake or Quake2 at even higher resolutions than the Voodoo2 supported, but the games ran too slowly, so you had to fall back to 640x480, the resolution popular among users of the old Voodoo Graphics. However, the MGA-G200 had another weighty advantage: its handling of multimedia content and video, improved since the first Millennium cards. To strengthen its market position, Matrox also launched the Mystique G200 with TV in/out and the Marvel G200, a full-fledged solution for video signal processing. The low-end segment was covered by the Millennium G100, based on a cut-down G200. It was probably the first series(!) of products based on the same chip.

Typical workhorse - Mystique G200 with 8MB SGRAM

As a result, the Matrox G200 series was a good choice for well-off users, providing comfortable work on mid-sized monitors. These users could also play the several games that worked with their Matrox cards. But hardcore gamers were still using their 3Dfx cards. They needed a different solution...

NVIDIA RIVA TNT

NVIDIA analyzed its failures with the NV1 and the RIVA 128 and drew the appropriate conclusions.
The company eliminated the weak spots of the RIVA 128, and the RIVA TNT gained new advantages: a significantly improved OpenGL driver, a 90 MHz clock rate owing to the optimized fabrication process, fewer hardware errors, 32-bit colors (16 million colors as with 24-bit colors, but with an alpha channel), expanded video support, a 250MHz RAMDAC, 16MB of SGRAM or SDRAM memory, and a 128-bit bus. Just like the RIVA 128, the new card could do two operations per cycle owing to its two pipelines, each of which had its own texture unit. The NVIDIA solution was objectively stronger than the multitexturing provided by the Voodoo2: it could process two pixels with a single texture or one pixel with two textures, while the Voodoo2 could do only the latter. Nevertheless, this feature caused compatibility problems: special patches were required to match the Voodoo in games written for it, and it was not always easy to adapt them to NVIDIA TwiNTexel (hence the second part of the name, RIVA TNT).

Practically every manufacturer offered a graphics card based on the RIVA TNT

Besides, NVIDIA launched a large-scale marketing campaign to support its successful product and aggressively promoted its many advantages. Special attention was paid not only to the single-chip architecture of the card and its support for various effects, but also to the TwiNTexel technology and to 32-bit color support (which made quite a splash in forums). TNT was also associated with trinitrotoluene, the explosive, and the new name for the drivers matched the card: Detonator (this name was used until 2003). NVIDIA's pricing policy allowed its many partners to offer RIVA TNT cards with 16MB of memory for less than $200, versus $250-$350 for a Voodoo2 card with 8MB or 12MB. If you compared the specifications of the 3Dfx Voodoo2 and the NVIDIA RIVA TNT directly, only hardcore 3Dfx fans would tell you that their cards were better.
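
Such a paper comparison can be sketched in a few lines. This is a deliberately simplified model, not a description of the real hardware: the `Chip` class and its field names are invented for the example, both chips are taken at the 90 MHz quoted in this article, and memory bandwidth and driver overhead are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    name: str
    core_mhz: int
    pipelines: int                   # pixels that can be started per clock
    texture_units_per_pipeline: int  # textures one pipeline applies per clock


def peak_mpixels(chip: Chip, textures_per_pixel: int) -> int:
    """Peak fill rate in millions of pixels per second (simplified model)."""
    units = chip.texture_units_per_pipeline
    # Pipelines that must cooperate on one pixel to apply all its textures.
    pipelines_per_pixel = -(-textures_per_pixel // units)
    if pipelines_per_pixel > chip.pipelines:
        raise ValueError("needs more than one pass; not modelled here")
    pixels_per_clock = chip.pipelines // pipelines_per_pixel
    return chip.core_mhz * pixels_per_clock


# Configurations as described in the text; the modelling itself is an assumption.
VOODOO2 = Chip("3Dfx Voodoo2", 90, pipelines=1, texture_units_per_pipeline=2)
RIVA_TNT = Chip("NVIDIA RIVA TNT", 90, pipelines=2, texture_units_per_pipeline=1)

if __name__ == "__main__":
    for chip in (VOODOO2, RIVA_TNT):
        for textures in (1, 2):
            print(chip.name, textures, peak_mpixels(chip, textures))
```

In this model the two chips tie on dual-textured pixels, while the RIVA TNT doubles its peak rate on single-textured ones, which is the flexibility the specifications promised.
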
But real tests are usually better than paper comparisons, and here the situation was not that simple. The main gripe about NVIDIA was the lack of support for the Glide API. Yes, one could complain that the API was closed to third-party developers, but the fact remained: most games were written for 3Dfx, and support for DirectX and OpenGL was often added only if there were resources left. That was why many Glide games were fast and good-looking on the Voodoo2, while competing solutions, including the RIVA TNT, sometimes failed to provide both. And users always want these qualities right now, not in the distant future.

ELSA Erazor II presents: TNT for PCI

Games were slow to adopt other APIs. For example, owners of non-3Dfx cards could enjoy Unreal in all its splendor only a year later. Nevertheless, the RIVA TNT pushed the industry to use more than just Glide: the adoption of OpenGL and Direct3D was picking up momentum, and NVIDIA made good profits from its product.

Cut-down Voodoo2 plus inexpensive 2D = Voodoo Banshee

The onslaught of competitors with their 2D/3D graphics cards (NVIDIA RIVA TNT and Matrox MGA-G200) and announcements of future products from ATI and S3 affected the date on which 3Dfx launched its single-chip solution. The Voodoo Banshee was intended to hold 3Dfx's position in the highly competitive mid-range segment. The new 3Dfx card got decent 2D graphics (250MHz RAMDAC), comparable with typical RIVA TNT solutions. However, as with NVIDIA, the quality of 3Dfx cards, and of their video output in particular, depended on the given sample and its manufacturer. Alas, graphics card quality was a much more pressing problem in those days than it is now. But let's return to the 3D functions of the Voodoo Banshee.

Creative 3D Blaster Banshee settings: each manufacturer developed its own drivers at that time

It was decided to remove multitexturing from the Voodoo Banshee, lest it interfere with sales of the top-end Voodoo2.
So its performance dropped considerably. This was more or less compensated for by a 100 MHz clock versus 90 MHz in the Voodoo2, as well as by 16 MB of fast SDRAM/SGRAM memory. The card had the same 3D functions as the Voodoo2, except for the above-mentioned multitexturing. Unlike competing products, it supported neither TrueColor nor trilinear filtering. However, this was hardly a serious problem for the Voodoo Banshee. TrueColor was not widely used by 3D games in 1998, and even the RIVA TNT was not always fast enough in 24/32-bit modes. As for trilinear filtering, even though all other manufacturers claimed to support it, only the Matrox MGA-G200 did it well, and that card offered mediocre performance and compatibility. The RIVA TNT either produced an approximation (imitation) of doubtful quality or gave up the lion's share of its performance. Thus, the shortcomings of the Voodoo Banshee were not relevant at that time. Meanwhile, it did not trail the Voodoo2 by much in performance and Glide compatibility. In return, the card offered a full-fledged single-chip solution at an affordable price.

Voodoo Banshee 16MB from Guillemot

Competing with the RIVA TNT, the Banshee managed to become an affordable alternative for people who needed compatibility with Glide. The NVIDIA card was chosen mostly for its advanced technologies.

Savage 3D from S3

One of the most influential market players, S3, couldn't count even on the remaining market of budget 2D solutions: the Intel 740 was coming to offer 3D in the low-end segment. Thus, the last bastion of S3 might fall to its competitors.

Hercules Terminator Beast - Savage3D in all its splendor

And voila, the long-awaited Savage3D was launched. It should be noted that even this budget product from S3 offered a lot of interesting features. Let's start with the GPU clock. It was supposed to be very high: 125 MHz, an absolute record made possible by the fine 0.25-micron fabrication process.
Nevertheless, production cards operated at lower frequencies because of their inexpensive heat sinks. The key innovation was a proprietary texture compression technology called S3TC. Indeed, despite its budget 8MB of 64-bit SDRAM, the Savage3D with the S3 MeTaL API easily coped with massive textures, which were too heavy even for some top solutions. To demonstrate the new technology, S3 created special levels for Quake2 and Unreal, where the Savage3D and S3TC worked miracles. These levels illustrated the potential of texture compression well.

Quake2 with 20MB of textures on the S3 Savage3D 8MB: thanks to S3TC

Alas, the other features were not that peachy: the 2D section remained entry-level, providing acceptable speed and quality on 15" and 17" monitors (in 1024x768 or lower), and the potentially strong 3D section was overshadowed by hardware mistakes and weak software. DirectX 6.0 was supported, but there were a lot of artifacts in games because of buggy drivers, and OpenGL was emulated through S3 MeTaL. Some of the bugs were fixed, of course, but the impression of S3's debut was spoilt.

Bugs in XDEMO image: S3 drivers in action

Nevertheless, many users wanted to spend less than $100 and get a graphics card with good video functions, acceptable 2D, low operating temperatures, the promising S3TC technology, and enough performance to tickle owners of much more expensive cards from time to time, so the Savage3D still found its buyers.

ATI Rage 128: better late than never?

Number Nine Visual Technology and ATI Technologies were the last to present their new products. #9 was founded in 1982, and it was living out its days in 1998: the company presented a full-fledged solution for 3D graphics, based on the Ticket to Ride chip (which replaced the Imagine128), only in the second half of the year. The Revolution IV PCB even had the following line engraved: "Bong, Bong... Your Silver Hammer", a sign of some passion for the Beatles.
The product had an unusual aura (for a graphics card). However, its 3D functions resembled old experimental solutions, only with 16MB of on-board SDRAM/SGRAM memory. Alas, a number of functions, trilinear filtering in particular, were evidently weak, and its performance left much to be desired even compared with the budget Savage3D. Nor could the enfant terrible from #9 boast of high 3D image quality or ideal compatibility. Nevertheless, the #9 Revolution IV had one forte: almost ideal 2D image quality, with high speed and accuracy available even in the highest resolutions on professional CRT monitors.

The last chip from Number Nine: very good at 2D, and just as bad at 3D

This feature remained the last resort of Number Nine products until the merger in 2000, when the company was purchased by S3. But let's have a look at what ATI presented in the final moments of 1998. The Canadian manufacturer had lost ground by the time the Rage 128 was launched: as competing cards appeared on the market, the Rage Pro was getting less popular (even the Turbo drivers were of no help, although they increased performance by more than 1.5 times in one test). The only market where ATI was still in the lead was mobile graphics. Owing to its low power consumption and good video functions, the Rage Pro was popular among notebook manufacturers. Nevertheless, updated solutions for desktop systems had to be launched. The following cards were based on the ATI Rage 128: the Rage Fury (for working with a video signal), the All-in-Wonder 128 (the next product in the ATI A-i-W Pro line, with an on-board TV tuner and the new GPU), the Rage Magnum (a graphics card without advanced video features, designed for gamers), and the Xpert 128 (the cheapest cards with basic functionality).
Two representatives of graphics cards based on the ATI Rage 128: Rage Fury and Rage Magnum

The specifications: 100/100 MHz (or 110 MHz) clocks, a 0.25-micron fabrication process, a 250 MHz RAMDAC, a 128-bit memory bus, and two pipelines. All this was similar to the NVIDIA RIVA TNT, but with proprietary ATI video features. Nevertheless, some 3D improvements were expected in the Rage 128: single-pass trilinear filtering, a 32-bit Z buffer, and 32 MB of on-board memory. So the industry leapt from 4 MB to 32 MB of on-board video memory in a little over a year. The new card performed very well in Direct3D: the Rage 128 was only marginally faster than the RIVA TNT in 16-bit mode (HighColor), but it was the fastest card in 32-bit mode (TrueColor). The reason was that its performance drop in 32-bit mode was just 10%, so users could play all games in TrueColor (if the games allowed it). TrueColor was also preferable on the Rage 128 because of the mediocre quality of HighColor on the ATI chip. Bilinear filtering was not perfect either: you could see blocks in the image, sometimes very noticeable ones.

Bilinear filtering artifacts in the ATI Rage 128

Unfortunately, there were also problems with mip-mapping, which selects lower-resolution textures for distant objects: the mip-levels for lower-resolution textures often came in the wrong order. Near objects looked fine, but distant mip-levels processed by the Rage 128 were ugly. You could see this only if you looked closely, and you could hardly notice it in battle scenes. There were also problems with OpenGL: the Rage 128 was outperformed by the RIVA TNT even in 32-bit mode with drivers optimized for Quake2. The 16-bit mode was a complete fiasco for ATI, and the situation was even worse in other OpenGL applications. That was why the card gave the impression of a raw product with excellent potential and video features: it was launched too late, and the engineers had been in a hurry to present it and catch the departing train.
The market was already overheated by the time the ATI Rage 128 was launched, and almost all segments had been filled by quicker companies. To capture somebody else's share of the pie, the company had to provide both the strongest product and powerful promotion. ATI managed neither: the Rage 128 slowed the company's decline, but it did not reverse it.

Conclusion

So what did we have by the beginning of 1999? Each manufacturer presented a series of 2D/3D products based on the same GPU. The new 3D market became segmented, so each user could choose a product to match his or her budget. Sharp competition started in the $200 segment. Voodoo2 SLI remained the fastest solution. Nevertheless, the situation had changed significantly: the last of the 2D Mohicans lost what was left of their market, and the reign of Glide gradually gave way to the public DirectX and OpenGL APIs. Nothing seemed to promise radical changes for 3Dfx, ATI, Matrox, NVIDIA, and S3. But they would come within the next couple of years... We'll discuss them in the next article. You can have a look at full specifications of old GPUs here.

The author expresses gratitude to Andrey Vorobiev for the idea of this article and the inspiration to write it.

Mikhail Proshletsov (mproshl@list.ru)

January 4, 2008

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.