PC Memory Testing Methodology


We have three questions on the agenda: whether it makes sense to test memory modules at all; which programs and benchmarks produce illustrative results; and, of course, how to select a sufficient minimum of these programs to compare memory modules by their performance. (The other parameters are covered elsewhere: SPD data are easy to read, and we have already evaluated overclocking potential in our reviews.)

So does it make sense to test memory modules, or is their performance now so high that it meets any requirements of any program in any mode (we hear such opinions from time to time)? Theoretically, the answer is crystal clear: we should test them. There is a great difference between the statement "there are almost no performance differences in 10-20 applications" (especially if these applications and test conditions were chosen to reveal differences between other components, that is, to test processors, graphics cards, etc.) and the statement "there is no difference at all". People who care about memory performance are likely to run tasks that actively work with large volumes of memory: multitasking environments first of all, but not only them. However, if the differences are within the measurement error in practice, interest in these tests will indeed be minimal. So we either have to show that there are conditions in which fast or large memory yields benefits, or admit that there is indeed no difference at all.
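Whether a gap between two kits is real or "within the measurement error" can be checked with a simple statistic over repeated benchmark runs. Below is a minimal sketch using Welch's t-statistic, which is our choice of method, not necessarily what any particular review uses. The function names, the threshold of 2 and the fps values in the usage lines are hypothetical illustrations, not results from this article.

```python
import math
from statistics import mean, stdev


def welch_t(runs_a: list[float], runs_b: list[float]) -> float:
    """Welch's t-statistic for the difference between two sets of benchmark runs."""
    diff = mean(runs_a) - mean(runs_b)
    # Standard error of the difference of means, without assuming equal variances.
    se = math.sqrt(stdev(runs_a) ** 2 / len(runs_a) + stdev(runs_b) ** 2 / len(runs_b))
    if se == 0:
        # Every run identical within each set: any nonzero gap exceeds the (zero) noise.
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / se


def exceeds_noise(runs_a: list[float], runs_b: list[float], threshold: float = 2.0) -> bool:
    # Rough rule of thumb: |t| above ~2 suggests the gap is larger than run-to-run spread.
    # With only a few runs per kit, a stricter threshold is safer.
    return abs(welch_t(runs_a, runs_b)) > threshold


# Hypothetical fps from four runs per kit, for illustration only.
kit_a = [41.2, 41.5, 41.1, 41.4]
kit_b = [41.3, 41.0, 41.6, 41.2]
print(exceeds_noise(kit_a, kit_b))  # False: the 0.025 fps gap is well inside the spread
```

The point is that a difference has to be judged against the spread of repeated runs, not against a single pair of numbers.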

As conditions may vary significantly from user to user, a "natural" test procedure based on real applications risks being too bulky and still of little use. So unpopular synthetic tests do not look as useless here as they do in CPU tests, for example. Algorithms used in different programs differ greatly: SuperPi, for example, uses code that is not found in any other program. So the load on various computing units of a processor, and consequently its overall performance, cannot be evaluated with just a couple of programs. Data exchange with memory is easier to measure: memory latencies (in various modes) and bandwidth do not depend on which program sends a request to read or write data. However, we are not going to rely on synthetics only. We'd just like to note that such data are very useful for testing memory.
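To illustrate why latency can be measured independently of the program issuing the requests, here is a minimal sketch of pointer chasing, the access pattern commonly used by memory latency benchmarks. The buffer is arranged as one random cycle (built here with Sattolo's algorithm), so each load depends on the previous one and the CPU can neither overlap the loads nor prefetch the next address. The function names are hypothetical, and we do not claim this is exactly how Everest, Sandra or RMMA work. In Python, interpreter overhead dwarfs DRAM latency, so the timing only demonstrates the method; real tests do this in native code over buffers much larger than the CPU caches.

```python
import random
import time


def build_chase_chain(n: int, seed: int = 0) -> list[int]:
    """Return nxt such that i -> nxt[i] is a single cycle through all n slots (Sattolo's algorithm)."""
    nxt = list(range(n))
    rng = random.Random(seed)
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # 0 <= j < i: excluding j == i is what guarantees a single cycle
        nxt[i], nxt[j] = nxt[j], nxt[i]
    return nxt


def chase(nxt: list[int], steps: int) -> int:
    """Follow the chain; every step needs the result of the previous load."""
    i = 0
    for _ in range(steps):
        i = nxt[i]
    return i


def ns_per_step(n: int, steps: int) -> float:
    """Average time per dependent access (dominated by the interpreter in Python)."""
    nxt = build_chase_chain(n)
    t0 = time.perf_counter_ns()
    chase(nxt, steps)
    return (time.perf_counter_ns() - t0) / steps


print(f"{ns_per_step(1 << 20, 1_000_000):.1f} ns per step (Python overhead included)")
```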

What testbed configuration should we choose? The only undisputed issue about the OS is its bitness: a 64-bit operating system is a must, because 2 GB will not stay the standard configuration for long, and a 32-bit version of Windows is not optimal even for 4 GB of memory: since part of its 4 GB address space is taken by devices, it cannot use all of the installed memory. XP or Vista? From a tester's point of view, XP is a better choice for such tests, as there are fewer chances for aperiodic background processes to interfere with the tests. Besides, 64-bit Vista can work perfectly on one computer and then demonstrate glitch after glitch on another (either the OS itself or the drivers are still buggy). However, there are probably many more users of 64-bit Vista than of 64-bit XP now, and it makes more sense to use the latest operating system. Nor should we drop DX10 games from our test procedure. So we ran all tests in Windows Vista, except for two synthetic tests (Sandra and RMMA), and despite some debugging difficulties we managed to obtain correct results (we did come across one strange problem in XP 64-bit, which we'll discuss later).
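The arithmetic behind the 32-bit limit is simple: a 32-bit physical address reaches at most 2^32 bytes (4 GiB), and that range is shared between RAM and memory-mapped devices such as the graphics card. A minimal sketch, assuming a flat address space without PAE or memory remapping; the function name and the 768 MiB reservation are hypothetical, and the real reservation depends on the chipset and the installed devices.

```python
GIB = 2 ** 30
MIB = 2 ** 20


def usable_ram_32bit(installed: int, mmio_reserved: int) -> int:
    """Bytes of RAM a 32-bit OS can use when RAM and device MMIO share one 2**32-byte space.

    Assumes no PAE/remapping; mmio_reserved is what the chipset and devices claim below 4 GiB.
    """
    address_space = 2 ** 32  # 4 GiB: the most a 32-bit physical address can reach
    return min(installed, address_space - mmio_reserved)


# Hypothetical: 768 MiB claimed by the graphics card and other devices.
print(usable_ram_32bit(4 * GIB, 768 * MIB) / GIB)  # 3.25
print(usable_ram_32bit(2 * GIB, 768 * MIB) / GIB)  # 2.0
```

With 2 GB installed the reservation costs nothing, which is why the problem only shows up once 4 GB becomes common.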

What platform should we choose? No doubt we need a memory controller integrated into the processor, so that performance is not limited by the bus throughput. These days that means Socket AM2+, and Socket AM3 will be welcome 'tomorrow' for DDR3 tests. Intel's recently announced platform with the Nehalem processor could formally help us here. But integrated memory controllers are a new solution for Intel, and they suffer from incompatibilities with various memory modules. Besides, this memory controller sometimes behaves strangely in various modes, so the measured deviations may have little to do with performance differences between the modules. In that case we would be debugging rather than testing, and users have every reason to expect the manufacturer to do that. By the way, AMD's first integrated memory controller also suffered from compatibility problems, so there is nothing surprising about this state of affairs. It goes without saying that a roundup of memory modules designed for Nehalem can be based on the same test procedure.

However, we cannot expect any fundamental changes from the transition from DDR2 to DDR3. It's a quantitative change: an increase in memory bandwidth, which is unfortunately 'compensated' to some degree by higher latencies. But it stands to reason that the advantage of DDR3 will become more apparent as the technology matures. No one doubts that DDR3 will soon outscore DDR2 not only in real performance, but also in price/performance ratio.
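The bandwidth-versus-latency trade-off can be made concrete with two general relations for one 64-bit DDR channel: peak bandwidth is the data rate times 8 bytes, and CAS latency in nanoseconds is CL divided by the memory clock, which runs at half the data rate. These are standard DRAM arithmetic, not measurements from this article, and the DDR2-800 CL5 and DDR3-1333 CL9 grades below are only illustrative examples.

```latex
\begin{align*}
B_{\text{peak}} &= f_{\text{data}} \times 8\ \text{B} \times N_{\text{ch}}, &
t_{\text{CAS}} &= \frac{\text{CL}}{f_{\text{data}}/2} = \frac{2000 \cdot \text{CL}}{f_{\text{data}}\,[\text{MT/s}]}\ \text{ns}\\
\text{DDR2-800, CL5:}\quad B_{\text{peak}} &= 800 \times 8 = 6400\ \text{MB/s}, &
t_{\text{CAS}} &= \frac{2000 \cdot 5}{800} = 12.5\ \text{ns}\\
\text{DDR3-1333, CL9:}\quad B_{\text{peak}} &\approx 1333.3 \times 8 \approx 10\,667\ \text{MB/s}, &
t_{\text{CAS}} &= \frac{2000 \cdot 9}{1333.3} \approx 13.5\ \text{ns}
\end{align*}
```

Per channel, bandwidth grows by about two thirds while absolute CAS latency rises slightly, which is exactly the kind of quantitative, partly offset gain the paragraph describes.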

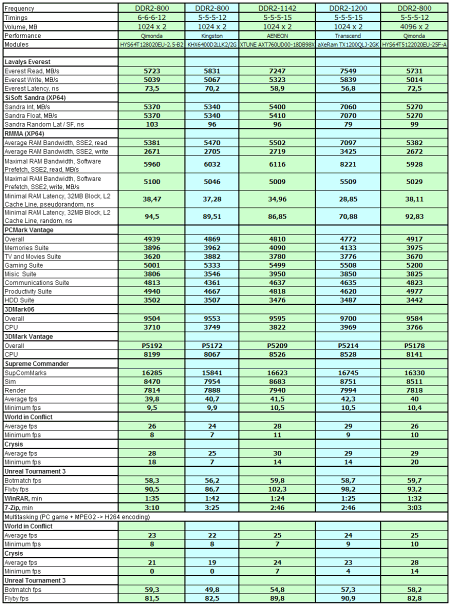

But enough theory; let's have a look at the tests. To avoid being too 'academic', we selected memory modules that clear up some typical questions arising when you face this choice. The most frequent question is how to choose between modules of the same frequency from different manufacturers (sometimes with different timings), as well as between overclocker modules with different maximum frequencies. But why not also compare expensive overclocking modules with the cheapest OEM models without heat spreaders that offer two or four times the capacity? What do such different things have in common? Well, for example, the parameter that matters most to consumers: His Majesty the retail price.

Hardware and software

Testbed configuration:

- CPU: AMD Phenom X4 9850

- Motherboard: Gigabyte MA790FX-DQ6 on AMD 790FX

- Graphics: ATI Radeon HD 3870 GDDR4 512 MB

- HDD: 500 GB Seagate Barracuda 7200.10 SATA, 7200 rpm

Memory modules:

- Qimonda HYS64T128020EU-2.5-B2 DDR2-800 6-6-6-12 1024 MB x 2

- Kingston KHX6400D2LLK2/2G DDR2-800 5-5-5-12 1024 MB x 2

- AENEON XTUNE AXT760UD00-18DB98X DDR2-1142 5-5-5-12 1024 MB x 2

- Transcend aXeRam TX1200QLJ-2GK DDR2-1200 5-5-5-12 1024 MB x 2

- Qimonda HYS64T5122020EU-25F-A DDR2-800 6-6-6-12 4096 MB x 2
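For reference, the kits listed above can be compared on paper by their rated data rate and CAS latency. The sketch below computes the theoretical dual-channel peak bandwidth (data rate × 8 bytes × 2 channels) and the absolute CAS latency (CL cycles of a memory clock running at half the data rate). It uses only the ratings from the list; the class and field names are ours, and whether the testbed actually ran each kit at its rated speed is not stated here. These are paper figures, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Kit:
    name: str
    data_rate_mts: int  # rated transfer rate, e.g. 800 for DDR2-800
    cas_latency: int    # first number of the timing string, in memory clock cycles

    def cas_ns(self) -> float:
        # DDR: memory clock is half the data rate, so one cycle lasts 2000 / data_rate ns.
        return self.cas_latency * 2000 / self.data_rate_mts

    def peak_mb_s(self, channels: int = 2) -> int:
        # 64-bit (8-byte) bus per channel, one 8-byte transfer per MT.
        return self.data_rate_mts * 8 * channels


KITS = [
    Kit("Qimonda HYS64T128020EU-2.5-B2", 800, 6),
    Kit("Kingston KHX6400D2LLK2/2G", 800, 5),
    Kit("AENEON XTUNE AXT760UD00-18DB98X", 1142, 5),
    Kit("Transcend aXeRam TX1200QLJ-2GK", 1200, 5),
    Kit("Qimonda HYS64T5122020EU-25F-A", 800, 6),
]

for kit in KITS:
    print(f"{kit.name:34} {kit.peak_mb_s():6d} MB/s  CAS {kit.cas_ns():5.2f} ns")
```

On paper the two overclocker kits promise roughly 40-50% more peak bandwidth and noticeably lower CAS latency than the OEM modules, which is what the tests have to confirm or refute.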

Software:

- Windows Vista Ultimate (64 bit), Windows XP (64 bit), Catalyst 8.9

- Synthetic benchmarks for memory bandwidth and latencies: Lavalys Everest 4.60, SiSoftware Sandra 14.20.2008.5, RMMA 2.35

- 7-Zip 4.10b

- XviD 1.0.2

- x264 rev 807

- PCMark Vantage

- 3DMark06 (Normal settings, 1280x1024)

- 3DMark Vantage (Default settings, Performance)

- Supreme Commander (Gas Powered Games) -- default settings, built-in test

- World in Conflict (Sierra) -- DirectX 10, Medium, built-in test

- Crysis (Crytek) -- DirectX 10, Medium, Crytek Built-in, available here

- Unreal Tournament 3 (Epic Games) -- DirectX 10, 5 Details Level, 5 Textures Level, CTF-coret, 1280x1024, demo available here

As the testbed uses a mid-range graphics card (by modern standards), game tests were run at a reduced resolution (1024x768) with medium graphics quality settings, except for Unreal Tournament 3, which was run at 1280x1024. A more powerful graphics card would only raise fps values (and allow higher resolutions); it would not change the performance ratio between memory modules much. All tests were run in Windows Vista, except for Sandra (it supports Vista only in its commercial version) and RMMA (it does not support this operating system so far).
