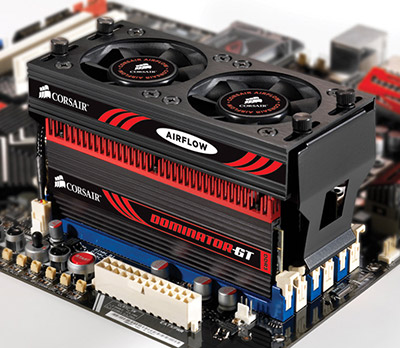

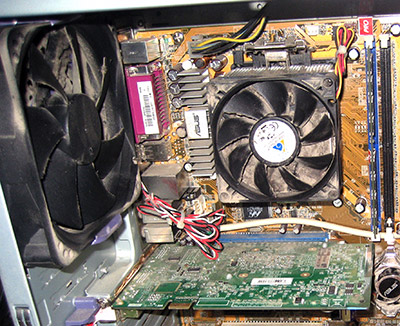

Functionality

This notion has little to do with CPUs, as in theory it implies that some processors can do what others cannot. In fact, practically any x86(-64) processor can cope with any task (that is, you can run any program on a computer with a given processor); only execution speed will differ. On the other hand, a fatally low speed can make applications difficult to use or even useless. So we'll take the functionality of modern processors into account, but with one reservation: it's not that some processors can cope with a certain task while others cannot, it's only that processors with certain functionality cope with certain tasks much better (in particular, faster). So, what differences in functionality may exist between modern processors?

Support for extended instruction sets

Some processors may lack support for additional instruction sets. There have been many such additions in the history of the x86 architecture. However, we focus on modern CPUs, not on all x86-compatible processors since 1978, when Intel launched the 8086. So it makes no sense to include such instruction sets as MMX, SSE, SSE2, and SSE3: they are supported by all CPUs available in stores these days (only the oldest Pentium 4 and Athlon 64 processors lack SSE3, and I doubt you will find them anywhere except a flea market). That leaves only SSE4, that is, SSE4.1 and SSE4.2. In 99% of cases they cannot affect the execution speed of real software, as they add few instructions, and rather specialized ones at that. Besides, compilers do not support these sets as well as the older ones. Thus, there are presently no significant differences in functionality between x86-64 processors in terms of support for additional instruction sets. (If anyone wants to accuse us of being too categorical and tell us how much he misses SSE4.1, we can only shrug: why are you reading this article if you know exactly why you need SSE4.1?)
Virtualization and Execute Disable (NX) Bit

In brief, here is what you should know about these functions of modern desktop processors for homes and offices. Execute Disable (NX) Bit support is no more of an advantage than support for the MMX, SSE, SSE2, and SSE3 extended instruction sets: this function is available in any modern processor. So we won't even mention what it actually does; it does not matter. Virtualization is another matter entirely: if you know what it is, you can probably answer the question of whether you need it. If you don't know, then in 99.9% of cases you don't need it. Don't get me wrong: there is really no point in explaining virtualization technology in this article, and you don't miss anything here. For example, yours truly knows how this technology works, but this knowledge has never come in handy in my work, although I deal with all kinds of computers all the time.

64-bit support

Some processors support 64-bit mode (AMD64 or Intel EM64T). People still argue whether the 64-bit mode yields any noticeable speed advantage over the 32-bit mode, even though AMD launched the first 64-bit x86 processor, the Athlon 64, back in 2003. Here is an impartial summary of this dispute: in pure theory, the 64-bit mode (and consequently 64-bit software) has several peculiarities that can make programs run faster and several that can slow them down. The acceleration should (purely theoretically) outweigh the slowdowns, so a 64-bit program using all features of a 64-bit processor will be a tad faster than its 32-bit counterpart. In theory. In practice, software must be properly optimized (sometimes even rewritten) to benefit from 64 bits. Most software developers won't optimize their products for 64-bit processors while computers with 32-bit processors are still around. Most of them just recompiled their old programs with 64-bit compilers and called it a day, and as far as performance is concerned, this approach gains practically nothing.
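To check which of the features discussed in this part a particular CPU reports, you can look at the `flags` line of `/proc/cpuinfo` on Linux. The sketch below is a minimal Python example, assuming a Linux kernel on an x86 machine. The flag names (`pni` for SSE3, `sse4_1`, `sse4_2`, `nx`, `lm` for 64-bit long mode, `vmx` for Intel VT-x, `svm` for AMD-V) are the ones Linux prints, while the helpers `parse_flags` and `report_features` are hypothetical names chosen for this illustration.

```python
# Minimal sketch: report CPU features via the Linux /proc/cpuinfo "flags" line.
# Assumes Linux on x86; elsewhere every feature is simply reported as absent.

# Feature names used in this article -> flag names printed by the Linux kernel.
FEATURE_FLAGS = {
    "SSE3": "pni",                   # listed as "pni" (Prescott New Instructions)
    "SSE4.1": "sse4_1",
    "SSE4.2": "sse4_2",
    "NX / XD bit": "nx",
    "64-bit (AMD64 / EM64T)": "lm",  # "lm" = long mode
    "Intel VT-x": "vmx",
    "AMD-V": "svm",
}


def parse_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def report_features(cpuinfo_text):
    """Map each feature name to True if its flag is present, else False."""
    flags = parse_flags(cpuinfo_text)
    return {name: flag in flags for name, flag in FEATURE_FLAGS.items()}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        text = ""  # not Linux, or /proc is unavailable
    for name, present in report_features(text).items():
        print(f"{name:<24} {'yes' if present else 'no'}")
```

Parsing is kept separate from reading the file so the same functions work on a saved copy of `/proc/cpuinfo` from another machine.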
The old and new logos of the same AMD Athlon 64 X2 CPU. When do you think AMD was prouder of the processor's 64-bit nature? :)

Nevertheless, despite all of the above, 64 bits can be really useful for performance, especially on really powerful computers or workstations running serious resource-intensive tasks under the latest operating systems (for example, Microsoft Windows Vista). The fact is, computers with 32-bit processors can use up to 4 GB of memory (I know about the magic three-letter combination, PAE, but I'm simplifying deliberately, which is justified by the desktop focus of this article). Moreover, 32-bit operating systems from Microsoft cannot use all of those 4 GB. Any 64-bit processor working under a 64-bit operating system lifts this restriction. As a result, Windows uses 4 GB of memory more efficiently, and you can also install more. When a computer runs complex resource-intensive applications (or heavy computer games with high hardware requirements), the ability to install 4 GB or even more can improve overall performance so much that it hardly matters whether the CPU itself runs faster. From this point of view, 64-bit support is very important: it removes one of the restrictions that can limit the performance of a computer as a whole system. You must understand exactly what 64-bit support gives you these days: it lifts the limit on maximum memory capacity rather than increasing performance.

Support for various memory types

Since AMD has built the memory controller into its processors starting with the first Athlon 64 models, and Intel has recently decided to follow suit, a problem has appeared that most IT specialists would have called weird 6-7 years ago: some processors are compatible only with certain types of memory. For example, early AMD Athlon 64 models support only DDR memory, later Athlon 64/X2 and Phenom processors support DDR2, and the new Intel Core i7 supports DDR3.
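Two numbers in this part can be checked with simple arithmetic. The 4 GB ceiling of 32-bit processors mentioned above is just 2^32 bytes, and memory names such as DDR-400 or DDR2-800 give millions of transfers per second over a 64-bit (8-byte) channel, which fixes the theoretical peak bandwidth. The sketch below is idealized: it assumes the original 36-bit form of PAE, a single 64-bit channel, and no protocol overhead, and the function names are hypothetical.

```python
# Minimal sketch of two memory-related calculations (idealized, no overheads).

GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(address_bits):
    """Bytes reachable with a flat physical address of the given width."""
    return 2 ** address_bits


def peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits=64):
    """Theoretical peak of one memory channel in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print(f"32-bit addresses:       {addressable_bytes(32) // GIB} GiB")
    print(f"36-bit addresses (PAE): {addressable_bytes(36) // GIB} GiB")
    for name, rate in [("DDR-400", 400), ("DDR2-800", 800),
                       ("DDR2-1066", 1066), ("DDR3-1066", 1066)]:
        print(f"{name:<10} {peak_bandwidth_gb_s(rate):.1f} GB/s per channel")
```

This prints 4 GiB and 64 GiB for the two address widths, and 3.2, 6.4, 8.5, and 8.5 GB/s for the four memory types. DDR2-1066 and DDR3-1066 come out identical per channel, which illustrates why the memory type alone says little about a processor; real-world throughput is lower than these peaks.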
It looks like a difference in functionality, but in fact it's completely artificial. Of course, you install whatever memory your processor supports. But drawing conclusions about the functionality or speed of contemporary processors from the memory type they support is making a mountain out of a molehill. If we compare 5-year-old AMD processors supporting DDR-400 with modern AMD products supporting DDR2-800/1066, we'll certainly see differences in functionality and performance. Is it because of the different memory types? Not entirely. New processors are simply faster than the older ones (higher clock rates included), and only after a while do they get to exploit the features of the new memory type. After how long? From the moment processors of the previous generation start leaving the market in the natural way: old models are discontinued, the remaining stock is sold off, and so on. However, if we compare equally clocked processors with similar architectures manufactured at the same time, one of which already supports a new memory type while the other supports only the old one (other characteristics being almost equal), you will hardly detect any noticeable difference. It sure looks good on paper, though.

The number of cores

Multi-core processors are very popular now; even the low-end series from both leading manufacturers (AMD Sempron, Intel Atom and Celeron) now include dual-core models. Does the second core provide additional functions or performance gains? As often happens, it's much easier to get the idea if we say farewell to the Master Myth. The Master Myth of our times goes like this: even ordinary users now run so many background processes that the more cores, the better, because they are needed for the antivirus, firewall, torrent client, ICQ, Skype... In fact, this myth is absolutely invalid: this zoo works fine for many users with single-core processors even now.
And before multi-core CPUs appeared, a similar zoo worked for everyone, and nobody actually complained (antivirus monitors and firewalls had appeared long before the Pentium D and Athlon 64 X2). The author of this article has even played Quake 4 on a single-core system with an antivirus, uTorrent, eMule, QIP, and Skype running in the background. Honestly, I didn't notice any slowdowns: modern operating systems are clever enough to share the resources of a single core between applications. So first of all you should analyze what your computer does in the background. Besides, we'd like to mention another, um, not quite a myth, but, let's say, a strong exaggeration. Aggressive advocates of multi-core processors always stress that a background process can take up a lot of resources, much more than decent p2p clients and antivirus monitors consume. As proof, they cite two arbitrarily chosen heavy tasks running simultaneously, from 3D rendering plus bitmap editing to audio/video encoding plus playing some shooter. We won't accuse such people of contrived examples; we cannot know what they do. However, we doubt that such a situation is typical for most users. For example, as I write this very article, my computer is also encoding video. It's a really resource-intensive background process, but the main task (typing text) requires hardly any resources at all! On the other hand, it's difficult to agree with the paranoia of the past years: "it's all pure marketing, only CPU manufacturers need it to milk users". The number of programs that can use multiple cores to run faster is growing every day. Computer games are the most telling example, as the last single-core bastion has fallen: almost all modern game engines already support multi-core CPUs (or are hurriedly getting such support). And this support demonstrates performance gains in real tests.
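How much a program gains from extra cores depends on how much of its work is parallelized. A standard way to estimate this, which the article itself does not cite, is Amdahl's law: if a fraction p of a program's work can run in parallel on n cores, the speedup is at most 1 / ((1 - p) + p / n). The sketch below uses hypothetical parallel fractions and models a single program, not several independent background tasks sharing the cores.

```python
# Amdahl's law: upper bound on the speedup of one program on several cores.
# The parallel fractions below are hypothetical examples, not measurements.


def amdahl_speedup(parallel_fraction, cores):
    """Speedup = 1 / ((1 - p) + p / n) for parallel fraction p and n cores."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.0, 0.5, 0.9):
        row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (1, 2, 4))
        print(f"parallel fraction {p:.0%} -> {row}")
```

With half of the work parallelized, going from two cores (1.33x) to four (1.60x) adds little, while a program that is 90% parallel goes from 1.82x to 3.08x. This is one way to read the advice that more than two cores pay off mainly for users who know which tasks will use them.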
Various video codecs, graphics editors, and even some system utilities got this support long ago. So software is actively being adapted and optimized for multi-core processors. Our general recommendation, therefore, is this: a dual-core processor is presently the optimal choice, as it works well with real tasks. Besides, single-core processors are becoming a thing of the past. But processors with more than two cores are really necessary only for those users who know exactly what tasks they will use them for.

Power saving technologies

For processors, this term stands for various implementations of one simple principle: reducing the power consumption of a CPU when it's idle, that is, when it is not busy computing. The main objective of power saving technologies in desktop processors, pray forgive this seditious idea, is not to save a couple of cents a month, but to make a home PC comfortable to use (read the next article). All modern desktop processors from the two main competitors (Intel and AMD) support one of two proprietary power saving technologies: Enhanced Intel SpeedStep (EIST) or AMD Cool'n'Quiet. EIST has no official version numbers; Intel develops it further with each new architecture. AMD, in turn, decided to highlight its power saving technology in the new Phenoms and called it Cool'n'Quiet 2.0. Anyway, the differences between old and new generations of Intel (Core i7) and AMD (Phenom) processors come down to the following: the latest processors from both competitors can manage power saving in each core independently, unlike previous generations, where power saving technologies treated the processor as a whole. It's difficult to say how much more efficient the new technologies are. However, managing power for each core separately looks quite reasonable and promising. You can read about power management technologies and why desktop computers need them in the next part.
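Why lowering frequency and voltage at idle saves so much, and why per-core control helps, can be sketched with the common first-order estimate of a CPU's dynamic (switching) power, P ≈ C · V² · f. This is a simplified model, not a description of how EIST or Cool'n'Quiet actually work: it ignores leakage power, the two operating points (3.0 GHz at 1.35 V under load, 1.0 GHz at 1.10 V at idle) are hypothetical, and it assumes each core can have its own voltage, which real chips do not always allow.

```python
# First-order model of CPU dynamic power: P ~ C * V**2 * f.
# Leakage is ignored; the operating points are hypothetical.

FULL = (3.0, 1.35)  # (GHz, volts) under load
IDLE = (1.0, 1.10)  # (GHz, volts) in a low-power state


def relative_dynamic_power(freq_ghz, volts, ref_freq_ghz, ref_volts):
    """Dynamic power relative to a reference state; capacitance C cancels out."""
    return (volts / ref_volts) ** 2 * (freq_ghz / ref_freq_ghz)


def package_power(busy_cores, total_cores, per_core_control):
    """Total dynamic power in units of one fully loaded core."""
    idle_share = relative_dynamic_power(*IDLE, *FULL)
    if per_core_control:
        # Busy cores run at FULL, the rest drop to IDLE independently.
        return busy_cores + (total_cores - busy_cores) * idle_share
    # Whole-package control: any load keeps every core in the FULL state.
    return total_cores * (1.0 if busy_cores > 0 else idle_share)


if __name__ == "__main__":
    print(f"idle core vs loaded core: {relative_dynamic_power(*IDLE, *FULL):.0%}")
    for per_core in (False, True):
        label = "per-core" if per_core else "whole-package"
        print(f"{label:<14} 1 of 4 cores busy: {package_power(1, 4, per_core):.2f}")
```

Under these assumptions an idle core draws about 22% of a loaded core's dynamic power, and with one of four cores busy the package draws 1.66 units with per-core control versus 4.00 without it.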
Noise and dust

It's probably the most 'domestic' and down-to-earth criterion for evaluating processors, and the only one that has no direct relationship to the processor proper. We'll need a short excursion into history to explain the idea behind it. In the year dot, when the clock rate of the first x86 processor (Intel 8086) was only 5 MHz (versus more than 3 GHz in modern CPUs), CPU cooling was hardly an issue, if it existed at all: processors in the first IBM PCs might even come without passive heatsinks. Later on, in the i486 days, when clock rates grew to 40 MHz, installing heatsinks became normal practice, and the first active cooling systems (aka coolers) appeared. Since the AMD K6 and Intel Pentium II, active cooling has been standard practice, practically part and parcel of a system unit: cooling hot CPUs grew from a curiosity into a real problem. And it stays that way to this day. In fact, there are three problems here. Problem #1: a processor must be cooled to operate normally. When overheated, relatively old processors without integrated temperature control either became unstable, causing system failures, or could even fry. Modern processors respond to overheating in a 'milder' manner: they either significantly reduce their speed or switch the computer off (unsaved user data is lost, of course, but at least the processor won't fry). It can be regarded as a purely technical problem, and it's binary in essence: your processor either works as it should, or you face some kind of disaster. Problem #2: the higher the clock rate (although the threshold varies between models), the hotter the processor runs. The hotter it runs, the more intensive cooling it needs. And since modern desktop processors mostly use air cooling, a stronger airflow must be pumped through the heatsink. Strong airflow means a strong fan. A strong fan means higher rotational speed.
Higher rotational speed means more noise. The industry has worked out methods to fight noise, of course (the most popular being to increase the heat exchange surface of the heatsink so that it needs less intensive ventilation, and to increase fan size so that it can spin slower while moving the same amount of air). However, these methods have not solved the problem fundamentally: powerful high-speed processors either require expensive cooling systems, or computers with such processors can be uncomfortably noisy for users and for other people in the same room. This problem is most pressing for users of home computers. Power saving technologies (described above) solve part of the problem: processors reduce their frequency and voltage when idle and run cooler. However, the problem as a whole remains unsolved: as soon as processors get to work, they raise their frequency and voltage again, and their temperature rises. Problem #3, which even quiet cooling systems do not solve, is dust. A lot of air must be driven through the heatsink to carry a lot of heat away from a processor. Dust particles float in the air of practically any room. As the air passes through the system unit, and especially through the CPU heatsink, some of the dust settles on their surfaces and reduces cooling efficiency. Reduced cooling efficiency makes the cooler run at full speed more often, pumping even more air through the heatsink. More air brings more dust. As a result, the main problem of computers with 'hot' top processors (processors that demonstrate top performance are practically always very hot) is the need to clean them out regularly, even if they are equipped with relatively quiet coolers. That's not the worst-case scenario, though. Even though noise and dust are not objective technical parameters of a processor, they are eternal companions of higher performance.
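The link between heat, airflow, and fan speed in problems #2 and #3 can be estimated from a simple energy balance: air with density ρ and specific heat c_p that warms by ΔT while passing through the heatsink carries away P = ρ · c_p · airflow · ΔT watts, so the required volume flow is P / (ρ · c_p · ΔT). The sketch below uses textbook values for room-temperature air, while the heat loads and the 10 K temperature rise are hypothetical. It also applies the idealized fan affinity law (airflow proportional to rpm times diameter cubed), which strictly holds only for geometrically similar fans, so its rpm figure should be taken as optimistic.

```python
# Energy-balance estimate of the airflow needed to remove CPU heat,
# plus the idealized fan affinity law for larger fans. Inputs are hypothetical.

AIR_DENSITY = 1.2         # kg/m^3, air at roughly room temperature
AIR_HEAT_CAPACITY = 1005  # J/(kg*K), specific heat of air at constant pressure
M3S_TO_CFM = 2118.88      # cubic feet per minute in one cubic metre per second


def required_airflow_m3s(heat_watts, delta_t_kelvin):
    """Volume of air per second that removes heat_watts while warming by delta_t."""
    return heat_watts / (AIR_DENSITY * AIR_HEAT_CAPACITY * delta_t_kelvin)


def same_airflow_rpm(rpm, old_diameter_mm, new_diameter_mm):
    """Fan affinity law (flow ~ rpm * D**3): rpm giving equal flow with a new diameter."""
    return rpm * (old_diameter_mm / new_diameter_mm) ** 3


if __name__ == "__main__":
    for watts in (65, 130):
        cfm = required_airflow_m3s(watts, delta_t_kelvin=10) * M3S_TO_CFM
        print(f"{watts} W with a 10 K air temperature rise: {cfm:.1f} CFM")
    print(f"80 mm fan at 3000 rpm -> 120 mm fan at "
          f"{same_airflow_rpm(3000, 80, 120):.0f} rpm (idealized)")
```

Doubling the heat load doubles the required airflow at the same temperature rise: under these assumptions 65 W needs about 11.4 CFM and 130 W about 22.8 CFM, and the larger fan would need roughly 889 rpm instead of 3000 to move the same air.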
Read the "Power Consumption" chapter to learn how to identify a noisy and 'dusty' processor and why one of the CPU properties should be analyzed from an unusual point of view.

A comprehensive approach

Here are our three pillars of CPU choice: performance, functionality, noise. The first two pillars often overlap, so it's sometimes difficult to tell where one stops and the other starts; the third stands slightly apart. So, drawing a bottom line under the basic criteria for choosing a processor, we'd like to remind our readers that there is only one final criterion: comfort. If a computer is quiet but slow, it's not comfortable. If a computer is fast but noisy, it's not comfortable either (for many users). When you choose components for your future system unit, ask yourself one question before analyzing their brands and technical characteristics: "What does PC comfort mean to me, and which components contribute to it?" And when you choose a processor, always keep the blueprint of your entire system unit in mind. Don't cherish false hopes: even a carefully selected CPU will look out of place in the wrong PC configuration, no matter whether it is too fast or too slow for it. Either way, it will be inconvenient to use.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.