Our readers may think that this is yet another product from the Canadian company based on old chips. They will be right and wrong at the same time. Today we review one of ATI's most controversial and, at the same time, shortest-lived products. Yes, the X800 GT will last 2.5-3 months at most and will then be discontinued. Why launch it at all? There are two versions.

Version One. The company has accumulated quite a lot of R480 processors (plus the remaining R423 stock) intended for the very expensive X850 cards. Demand for the latter has dropped, both because NVIDIA launched more interesting solutions and because the market became oversaturated with expensive accelerators after a year of shortage. Prices for these cards can be reduced only slightly; otherwise the market would collapse, which would mean certain death for cheaper solutions. What can be done with these chips? The solution was found: disable some of their pipelines and sell the resulting cards in the price segment with the highest demand, so that such cards remain profitable.

Version Two. In honour of its 20th anniversary, the Canadian company decided to make a present to overclockers and enthusiasts who love unlocking chips, and launched what is essentially the same X850, but with disabled pipelines that can be easily unlocked. Besides, the company will needle its competitor with the price of the new card, which is meant to knock the 6600GT off its pedestal as the best buy.

Version One is what most likely corresponds to reality, while Version Two is promoted by ATI. I have mixed feelings: admiration for the clever Canadian specialists (they have exploited essentially the same core for three years, just adding Shader 2.0b units and various combinations of pixel and vertex pipelines; it is tricky to squeeze so much for so long out of the same R300, which has by now been doubled, halved, or slightly expanded), plus an evident feeling of stagnation with an autumnal flavour.
The breakthrough of 2002, when fast shader execution helped ATI finally conquer half of the desktop market (its share is even larger now), put rose-coloured glasses on many specialists from Toronto. Alas. However, they prefer to speak of a temporary mishap and promise to restore the status quo in autumn. We'll see. If that happens, we'll applaud ATI for getting out of the woods.

However, there is one more version: let's not forget that the Canadian engineers produced the graphics processor for the XBOX 360. It is a core of the new generation; that is, it will be released for the PC only next year, when Windows Vista is launched. Even now all their efforts may be directed at that future product, so that the new core can be adapted easily to discrete graphics, at the cost of temporary failures in the desktop Hi-End sector.

So, let's return to the X800 GT. Experienced readers have already realized that it is essentially the same RADEON X800 PRO, but with its core cut down to 8 pipelines. Moreover, it is essentially the X800 SE, a card that is little known because it was designed for system integrators. Why do we need this product? We already have the X700/X700PRO. But don't forget that those cards have a 128-bit memory bus, while the X800 GT has a 256-bit one, as its PCB and GDDR3 memory are carried over unchanged from the X850XT/X800XL/X800.

By the way, the decision to use highly clocked chips (475 MHz) ruled out R430 chips (X800/X800XL) for the X800 GT. Even though they are manufactured on the 0.11 micron process (the R423/R480 use 0.13 micron low-k), they do not manage clocks above 400 MHz.

With a recommended retail price of 130-170 USD for X800GT-based cards, the Canadian company is evidently positioning them against the well-known GeForce 6600GT. Considering that prices for the latter are falling and the GeForce 6800 is pushing from above (its prices will soon drop below $200), it was interesting to compare the new card with both competitors.
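The pipeline count, core clock, and bus widths quoted above lend themselves to a quick back-of-the-envelope comparison. The Python sketch below is only an illustration: it assumes one pixel per pipeline per clock and an equal memory clock on both buses, neither of which is stated in this review, and the function names are made up for the example. Real game performance depends on far more than these peak figures.

```python
# Back-of-the-envelope peak figures; real performance depends on much more.

def peak_fill_rate_mpix(pipelines: int, core_mhz: float) -> float:
    """Peak pixel fill rate in Mpixels/s, assuming 1 pixel per pipeline per clock."""
    return pipelines * core_mhz


def bus_bytes_per_transfer(bus_width_bits: int) -> int:
    """Bytes moved per memory transfer for a given bus width."""
    return bus_width_bits // 8


CORE_MHZ = 475  # X800 GT core clock quoted in the article

locked = peak_fill_rate_mpix(8, CORE_MHZ)   # X800 GT as shipped (8 pipelines)
full = peak_fill_rate_mpix(16, CORE_MHZ)    # hypothetical: all 16 pipelines at the same clock

# At an equal memory clock (an assumption), bandwidth scales with bus width.
bus_ratio = bus_bytes_per_transfer(256) / bus_bytes_per_transfer(128)

print(f"8 pipelines  @ {CORE_MHZ} MHz: {locked:.0f} Mpixels/s")
print(f"16 pipelines @ {CORE_MHZ} MHz: {full:.0f} Mpixels/s")
print(f"256-bit vs 128-bit bus at equal memory clock: {bus_ratio:.1f}x")
```

Under these assumptions, halving the pipelines halves the peak fill rate, while the 256-bit bus keeps twice the per-clock memory throughput of a 128-bit card, which is the advantage the X800 GT retains over the X700 family.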
From now on almost all articles will include video clips, which will be brief but will illustrate a new product more vividly. There will be three formats: best quality (MPEG1), average quality, and low quality (WMV).

Video Cards

Sapphire and TUL obviously used PCBs from 256-MB X800/X800XL/X850 cards, leaving unpopulated the components responsible for external power and VIVO (these cards have no RAGE Theater). This is further proof that the R430 and the R480/R423 are pin-compatible: any of them can be installed on the same PCB. But for some reason HIS decided to use a new PCB from 512-MB X800XL cards (we have already reviewed such cards, see the list of articles below), installing only half of the memory chips. Of course, the bus remained a full 256 bits wide. However, I cannot judge whether the new PCB is more expensive or cheaper than the old one. At one time I assumed that all X800/X800XL 256MB cards would be based on this PCB with half of the memory chips installed (this is convenient for unifying production). We should also mention that the TUL card is equipped with two DVI connectors.

As for the graphics processors, there is an interesting issue here. According to ATI, only R480 chips are used, but the Sapphire card uses none other than the R423. Note the bridges in the corner of the substrate (we described them in detail when we reviewed AGP cards and the R420). They lock (or don't lock) pixel pipelines at the hardware level. You can see that the right vertical bridge is cut, which corresponds to 8 pixel pipelines. Flashing a BIOS from the X800PRO/X800XT/X850PRO/X800/X800XL didn't help to unlock the chip. The other cards are equipped with the R480. Externally it is no different from its 16-pipeline counterpart. Here too, flashing a BIOS from the X800PRO/X800XT/X850PRO/X800/X800XL didn't help to unlock the chip. I'll describe our unlock attempts in detail below.

Bundle Box.

Installation and Drivers

Testbed configurations:

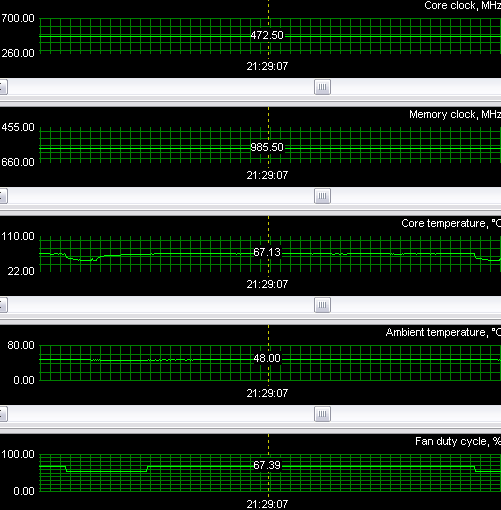

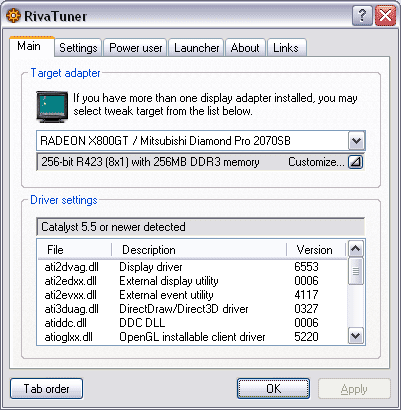

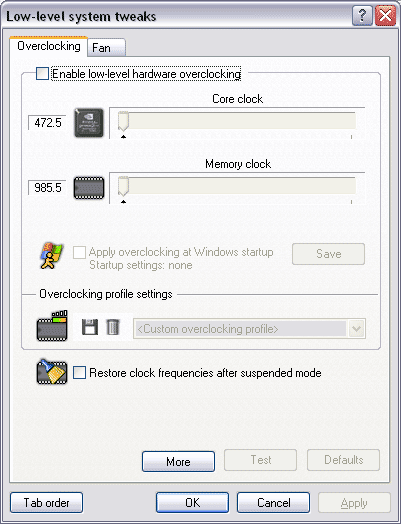

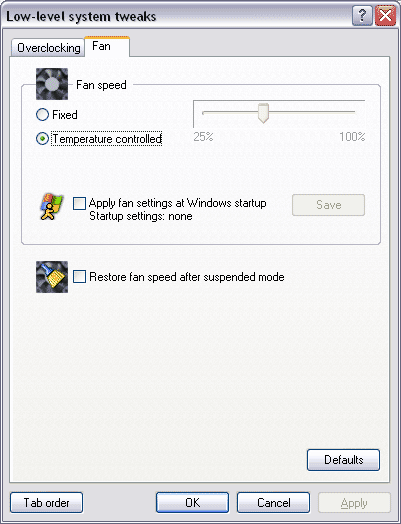

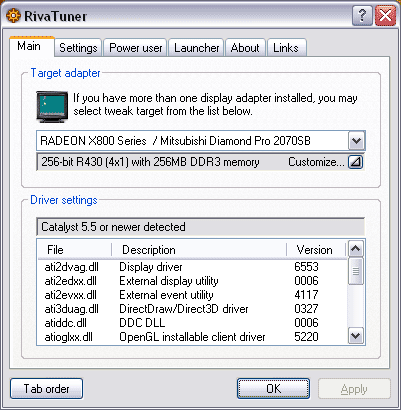

We monitored temperatures during our tests and, as expected, the cards did not get very hot. The core stayed below 70°C. Now for unlocking. Here is what we have by default:

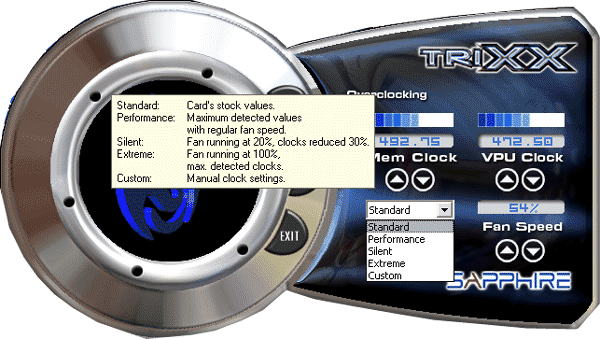

In the first three screenshots we can see the number of pipelines, the operating frequencies, and the fan rpm control. And here is the last screenshot... Don't be scared, the core is not broken in half. :) That is the number of pipelines we got after our last attempt to flash a BIOS from the senior cards.

In short, regardless of the chip used in the card (R423 or R480), flashing a BIOS from the X800PRO/X800XT/X850PRO/X800/X800XL changes nothing or may even make things worse (the number of pipelines may be reduced to 4). That's why the version about ATI's present to overclockers, a card that can be easily modified, is a lie. The only thing I haven't tried so far is soldering the cut bridge on the R423. But it turns out that the R423 is running out of stock, and nearly all X800GT cards will be equipped with the R480, which is locked internally. Alas, there was no miracle. I guess X800GT Extreme cards with unlocked R480 chips may be launched in the future (although combining two suffixes, one downgrading and one upgrading, would be absurd). This is unlikely, though, as it could bring the market down.

Now for overclocking. The samples I received were stable only at 510-513 MHz. Yes, the overclocking headroom is small. I don't believe reports of such cards running at 560 MHz... Perhaps for 15-20 minutes, long enough to complete the tests, and that's it. Under such conditions I managed to run my cards even at 540 MHz (core clock), but only for an hour... Then the cards froze... That is not overclocking. What I call overclocking is a mode in which a card can operate for at least 6-8 hours in a row, or even around the clock.

I'll conclude this section with a description of the new Trixx utility from Sapphire:

As you can see, it's a very good product. It features overclocking and monitoring. It can even be updated automatically via the Internet. It works only with video cards from Sapphire (detected by BIOS).

Test results: performance comparison

We used the following test applications:

The list of tests has been modified. First of all, having analyzed our tests over the last six months, we found that there was no point in keeping the two additional scenes in Far Cry and HL2, or the ducche scene from CR, so we preserved only the initial base scenes. Secondly, with the advent of the modern F.E.A.R. and SCCT there is no longer any need for TR:AoD. Besides, games of that kind (where the entire load falls on shaders and texturing is very simple) are no longer made and will hardly appear in the future.

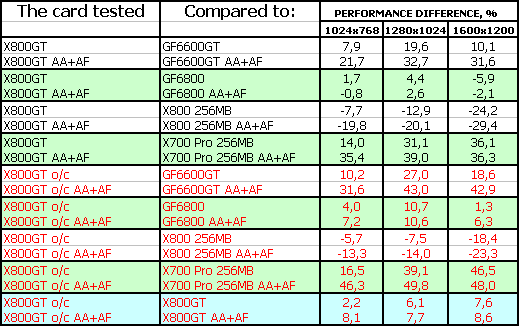

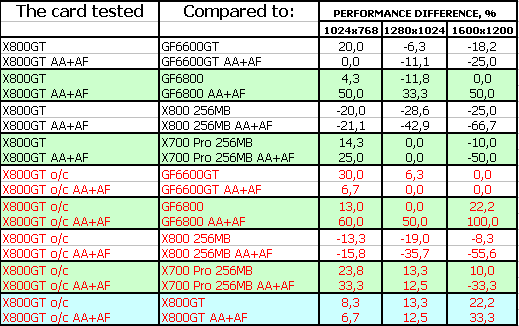

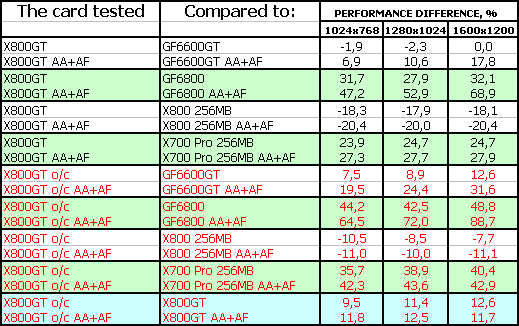

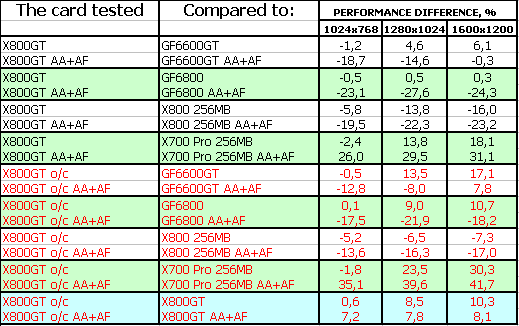

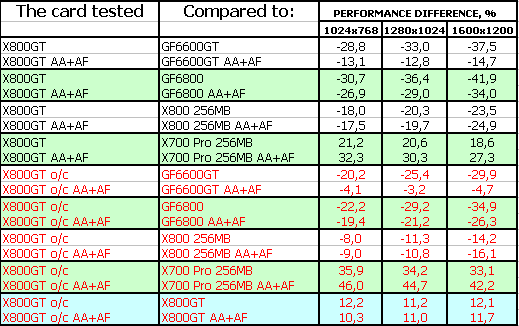

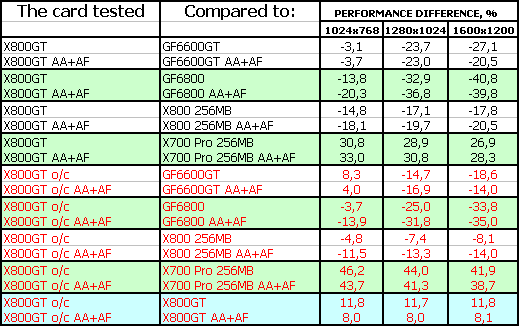

Game tests that heavily load vertex shaders, mixed pixel shaders 1.1 and 2.0, active multitexturing.

FarCry, Research

An excellent result for the X800GT. The new card managed to outperform the 6600GT, especially in heavy modes (where the 256bit bus was helpful), and demonstrated approximate parity with the more expensive 6800. Its advantage over the weaker X700 PRO is evident.

Game tests that heavily load vertex shaders, pixel shaders 2.0 (and 3.0), active multitexturing.

F.E.A.R. (MP beta)

In this case the situation suddenly got complicated. Firstly, the GeForce 6800 is outperformed by the 6600GT because of its lower clock; even the 256-bit bus does not help the former. That's why the X800 GT left the 6800 far behind in the heavy mode. But the new product didn't get the better of the 6600GT. However, frame rates in this game are such that only 1024x768 without AA+AF is of practical interest. Let's put it this way: approximate parity with the competitors.

Splinter Cell Chaos Theory

The situation here is more complex. First of all, we should take into account that all NVIDIA cards use Shaders 3.0 in this game, while all ATI cards use Shaders 2.0. In order to enable AA and avoid problems with HDR, we disabled the latter during our tests. The X800 GT is victorious in every respect. But I repeat that the comparison conditions are not quite fair (it was the developers' decision not to allow Shaders 2.0 on NVIDIA cards). The game also supports Shaders 1.1, which could have served as a "common denominator", but using this model in a modern game seems an anachronism.

Game tests that heavily load both vertex shaders and pixel shaders 2.0

Half-Life2: ixbt01 demo

Test results: Half-Life2, ixbt01

Strange as it may seem, here the X800 GT lost the battle on all fronts. Alas.

Game tests that heavily load pixel pipelines with texturing, active operations of the stencil buffer and shader units

DOOM III High mode

Chronicles of Riddick, demo 44

No comment is needed, I guess. NVIDIA products always win in OpenGL and in games on the DOOM3 engine, as multiple tests have shown. Unfortunately, ATI has failed to produce a solid, well-optimized OpenGL ICD. And of course, we should take into account that these games actively use the stencil buffer, which is another of NVIDIA's strong points.

Synthetic tests that heavily load shader units

3DMark05: MARKS

The X800 GT ranks between the 6600GT and the 6800, though the new card is victorious with AA+AF. But at the speeds these cards demonstrate in 3DMark05, the AA+AF mode is obviously excessive. Their performance is too low even without it :)

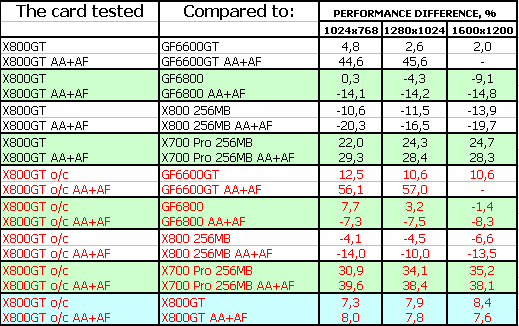

Conclusions

The RADEON X800 GT 256MB is a very interesting product. This card demonstrates approximate parity with its competitor from NVIDIA even without unlocking pipelines or overclocking, and sometimes it even outperforms it. If we find a way to unlock these chips (there is a chance that the flashing utilities do not actually change the BIOS version) and enthusiasts can make an X850 PRO or even an XT out of the X800 GT, demand for the new card will be even higher.

I have only one doubt... There is something strange here: an expensive chip, an expensive 256-bit PCB, 256 MB of GDDR3 memory, all for just $150-$170? The production cost would have to be below $100 for this card to be profitable to manufacturers... I don't think the X800 GT will yield much profit. It will just empty ATI's warehouses of the chips... However, you may still remember the RADEON 9500 256bit, which used to cost $150 despite its expensive PCB and the 2.8ns memory that was very expensive at that time. But the ardour with which ATI's three partners manufacture these cards shows that the product is still interesting to them. By the way, the Canadian company will deliver its chips for such cards to only three partners. You may have already guessed which partners they are.

I remind you that this card is a king for a day, even if it's successful. As soon as the R480 stock is depleted, the X800 GT may be discontinued. But for now, even considering that the X800 GT does not support Shaders 3.0, only 2.0b like all current ATI products, it still looks very good. This card often outperforms the GeForce 6600GT. Let's wait and see at what price these new cards will be sold. The final word is up to our readers and potential buyers.

In conclusion, I'd like to wish that ATI would stop its hide-and-seek games and release a truly new card (or even a series) with Shaders 3.0. Otherwise, it resembles the situation in 2002, when the R300 was released while the NV30 was only promised... But the eternal competitors had the opposite roles at that time.
A clear sign of trouble has emerged in recent years: if a company starts spawning new products from its old ones by inventing various combinations of pipelines, clocks, and buses, it actually has nothing new to offer. Having switched the 12-pipeline NV40 to a new process technology, NVIDIA still calls the product GeForce 6800. The NV42 is already out, but the card still bears the 6800 name, and rightly so, because it offers nothing new from the user's point of view. The Canadian company, however, cleverly manipulates users, passing off practically the same X800XT PE as the X850XT, for example. What's the difference between the R423 and the R480? THERE IS NO DIFFERENCE, only technical nuances. Does that justify a new name? No, it doesn't! Intel does not give new names to different steppings of its processors! NVIDIA used to be in a similar stagnation, as you remember... Now it's ATI that is in depression. Perhaps I'm exaggerating the problem, as the 7800GTX was launched only two months ago, but... "You should have thought of that before." (I mean the leadership, to which the Canadian company still lays claim.)

Now for the three cards reviewed. The Sapphire product is interesting first of all for its new utility, because it will hardly attract you with its noisy and inefficient cooler (besides, the bundled game is old). The card from PowerColor is interesting for its quiet cooler and two DVI connectors. The HIS product has VIVO. It's up to you to choose :) All the cards performed very well, and 2D quality was traditionally high.

You can find more detailed comparisons of various video cards in our 3Digest.

Theoretical materials and reviews of video cards, which concern functional properties of the GPU ATI RADEON X800 (R420)/X850 (R480)/X700 (RV410) and NVIDIA GeForce 6800 (NV40/45)/6600 (NV43)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.