
A mid-range product, the RADEON X800, appeared at the end of 2004, but at that time only 256MB cards were available. They were a complete copy of the reference X800XL design; the only differences from its big brother were the cut-down core and the lower memory frequency. The large amount of GDDR3 memory kept prices from being anywhere near reasonable. This product competes with both the GeForce 6600GT and the GeForce 6800, ranking approximately between them. Launching a 128MB card with DDR1 memory would cut manufacturing costs and, consequently, retail prices. But that would require redesigning the PCB to support DDR1 while keeping the 256bit memory bus. Some companies are tempted simply to leave out half of the memory chips and launch the X800 128MB with GDDR3, the old PCB, and the memory bus cut to 128 bit. Today's review includes a comparison with one such card. Here is the first of the 128MB X800 cards with a full 256bit bus: a product from GeCube, which is the subject of this review.

As I have mentioned before, almost all our articles now include short video clips that illustrate new products more vividly. They come in three formats: best quality (MPEG1), and average and low quality (WMV).

Let me remind you that the RADEON X800 is a product priced at about 200 USD. Officially it supports only a 256bit bus (though, as it turns out, it is easily converted to 128bit). It contains 12 pixel and 6 vertex pipelines and supports DirectX 9.0c.

Video Cards

GeCube engineers based their product on a new PCB with the same 256bit bus, where all memory chip pads are populated. Besides, it uses cheaper DDR1 memory; such chips have been on the market for at least three years (they were first used in the GeForce4 Ti 4600 in 2002). Let's hope other ATI partners follow suit. NEVERTHELESS, WHEN YOU BUY A VIDEO CARD BASED ON THE RADEON X800, CAREFULLY CHECK THE MEMORY BUS WIDTH. THE MAIN SIGN OF A CRIPPLED CARD IS THE ABSENCE OF SOME MEMORY CHIPS.
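The chip-counting check works because the bus width is simply the number of populated memory chips multiplied by the data width of each chip. The Python sketch below is a hypothetical helper, not a tool we used; it assumes 32-bit chips (typical for the GDDR3 and DDR BGA chips of this generation, but check the datasheet of the actual chips) and a board with eight memory pads.

```python
# Hypothetical helper: estimate the memory bus width from the number of
# populated memory chips. ASSUMPTION: each chip has a 32-bit data interface.
def bus_width_bits(populated_chips: int, chip_io_width: int = 32) -> int:
    """Return the total memory bus width in bits."""
    return populated_chips * chip_io_width


def looks_crippled(populated_chips: int, expected_bus: int = 256,
                   chip_io_width: int = 32) -> bool:
    """True if the populated chips cannot fill the expected bus width."""
    return bus_width_bits(populated_chips, chip_io_width) < expected_bus


# All eight pads populated: full 256bit bus.
print(bus_width_bits(8))   # 256
# Only four of eight pads populated: the bus is cut to 128bit.
print(bus_width_bits(4))   # 128
print(looks_crippled(4))   # True
```

In practice this means that a card with empty memory pads next to the GPU should be treated with suspicion, whatever the box says about the X800.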

Let's proceed with the examination.

GPU R430 (X800):

Bundle

Let's have a look at the box.

Installation and Drivers

Testbed configurations:

VSync is disabled.

Test results: performance comparison

We used the following test applications:

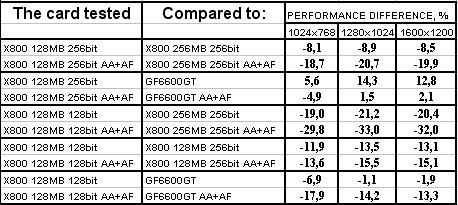

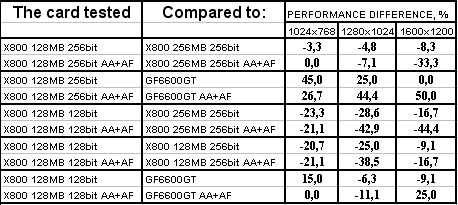

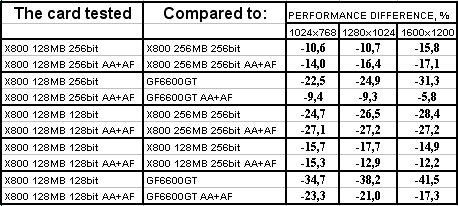

Overall performance

Game tests that heavily load pixel shaders 2.0

TR:AoD, Paris5_4 DEMO

We use this outdated and not very popular game as an example of a classic "shader game" that puts a heavy load on these units. As you can see, the 128MB 256bit card is outperformed by its 256MB counterpart, with the largest gap in AA+AF modes. That is easy to explain: the memory on the second card runs at 990 MHz instead of 700 MHz. At the same time, the GeCube accelerator (X800 128MB 256bit) outperforms the GeForce 6600GT.
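A quick way to see why the memory frequency matters so much is to compare theoretical peak memory bandwidth: bus width in bytes multiplied by the effective memory frequency. The Python sketch below assumes that 700 MHz and 990 MHz are effective (DDR) rates, as in our usual notation, and adds a hypothetical 128bit card at 700 MHz to illustrate the cut-down boards mentioned earlier. These are theoretical peaks, not measured figures.

```python
def bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8          # bus width in bytes
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9


gecube = bandwidth_gb_s(256, 700)     # X800 128MB 256bit, 700 MHz memory
gigabyte = bandwidth_gb_s(256, 990)   # X800 256MB 256bit, 990 MHz memory
half_bus = bandwidth_gb_s(128, 700)   # hypothetical 128bit card at 700 MHz

print(f"{gecube:.2f} GB/s")    # 22.40 GB/s
print(f"{gigabyte:.2f} GB/s")  # 31.68 GB/s
print(f"{half_bus:.2f} GB/s")  # 11.20 GB/s
print(f"256MB card advantage: {gigabyte / gecube - 1:.0%}")  # 41%
```

On paper the 256MB card has roughly 41% more bandwidth, which fits the observation that it pulls ahead most in the bandwidth-hungry AA+AF modes, while halving the bus width halves the bandwidth at the same frequency.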

Game tests that heavily load vertex shaders, mixed pixel shaders 1.1 and 2.0, and active multitexturing

FarCry, Research

FarCry, Regulator

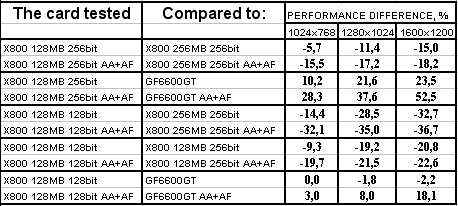

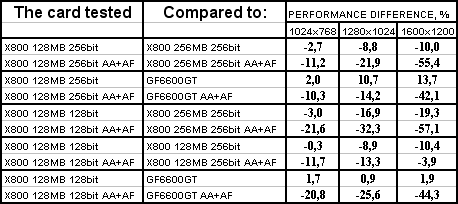

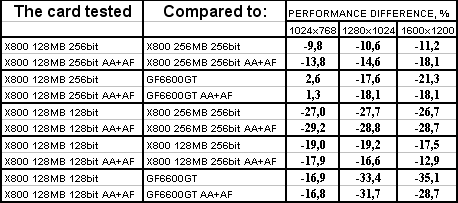

FarCry, Pier

The GeCube RADEON X800 128MB 256bit PCI-E is outperformed by the X800 256MB 256bit; the gap reaches 18% in heavy modes. I believe that is due to the difference in memory frequencies rather than in memory capacity. However, this card still beats the GeForce 6600GT.

F.E.A.R. (MP beta)

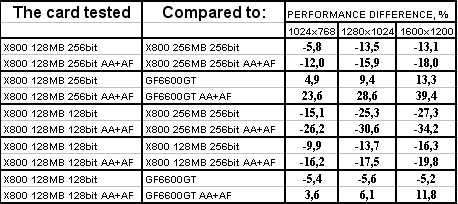

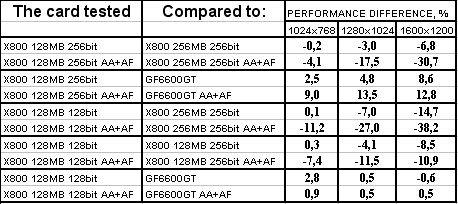

F.E.A.R. is a brand-new game scheduled for release this autumn. It puts a heavy load on accelerators, so absolute frame rates at maximum quality are not high. The GeCube RADEON X800 128MB 256bit PCI-E is outperformed by the Gigabyte RADEON X800 256MB 256bit PCI-E (GV-RX80256D) only because of the different memory frequencies, since the gap grows as the resolution increases.

Game tests that heavily load both vertex shaders and pixel shaders 2.0

Half-Life2: ixbt01 demo

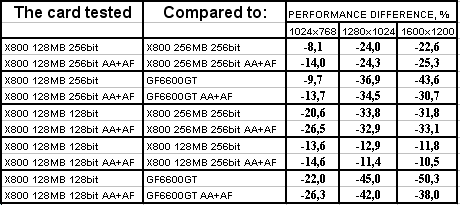

Half-Life2: ixbt02 demo

Half-Life2: ixbt03 demo

The GeCube accelerator performs very well overall. The X800 256MB is naturally the fastest model, but that is due to its higher memory frequency.

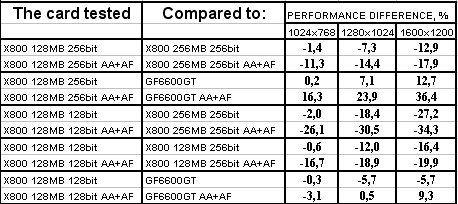

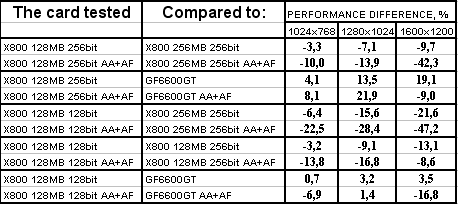

Game tests that heavily load pixel pipelines with texturing, active stencil buffer operations, and shader units

DOOM III, High mode

Chronicles of Riddick, demo 44

Chronicles of Riddick, demo ducche

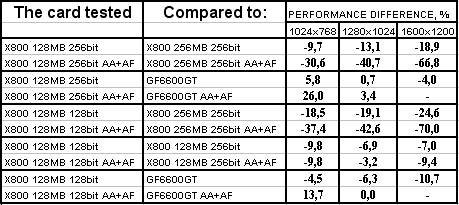

There is not much to comment on: the GeForce 6600GT is clearly the strongest here. Otherwise the balance of power between the two X800 versions stays the same (the 256MB card is the fastest, followed by the X800 128MB 256bit).

Synthetic tests that heavily load shader units

3DMark05: MARKS

The results are similar to those in the first test. That is only natural, since the heaviest load again falls on the shader units.

Conclusions

The GeCube RADEON X800 128MB 256bit PCI-E is a welcome development. Although it is slower than the 256MB modification, I think that in most cases this is due to the different memory frequencies. Unfortunately, we do not have an X800 256MB running at 400/700 MHz to confirm this. But that is not critical, because the lag is modest given the card's low price relative to the 256MB modification. That is why this card should be successful. And remember that the same X800 name may be used for accelerators that differ not only in memory frequency but also in memory bus width.

You can find more detailed comparisons of various video cards in our 3Digest.

Theoretical materials and reviews of video cards, which concern functional properties of the GPU ATI RADEON X800 (R420)/X850 (R480)/X700 (RV410) and NVIDIA GeForce 6800 (NV40/45)/6600 (NV43)


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.