
Our theoretical article about ATI AVIVO technologies was published long ago, but we never found the time to test hardware-accelerated video playback. The reason is that both ATI and NVIDIA were developing driver support for video decoding so actively that nearly every release introduced lots of new features. It was difficult to compare GPU performance when the situation changed every month. Now everything seems to have settled down a bit: either ATI and NVIDIA have fine-tuned the video decoding support described in our theoretical article, or they have just grown tired of the task. So we decided to analyze the situation with hardware-accelerated video decompression and playback.

Playing HD (High Definition) video in modern compression formats is a resource-intensive task even for powerful computers. The main load falls on the CPU, but modern video cards take over part of the decoding and post-processing calculations. Modern GPUs from ATI and NVIDIA incorporate programmable units that accelerate decoding and post processing of various video formats.

The technology that uses GPU features for video playback is called DirectX Video Acceleration (DXVA). It lets a video processor take part in decoding and post processing (deinterlacing, noise reduction, etc.). The video decoding features of the latest GPUs from ATI and NVIDIA support the following formats: MPEG2, WMV9, and H.264. You will need special decoders (ATI DVD Decoder, PureVideo Decoder, CyberLink MPEG2 and H.264 video decoders) and a player that supports DXVA, such as Windows Media Player 10.

DXVA decoders for hardware MPEG2 acceleration appeared first, followed by an add-on for WMP10 that supported DXVA decoding of the WMV9 format. DXVA H.264 decoders have appeared over the last two years, CyberLink's being one of the first.
A high-quality software decoder for the H.264 format, CoreAVC, was released this spring. We'll examine it in this article as well.

There are currently no performance problems with playing low-resolution MPEG2 video (up to the DVD resolution, 480p/480i). But when DVD Video first appeared, typical processors did not cope well with decoding MPEG2, and computers could play DVDs only by relying on hardware support in video cards. Today, even some state-of-the-art CPUs cannot always meet the requirements for decoding a modern format such as H.264 in high resolutions, for example 1080p (progressive 1920x1080).

According to ATI and NVIDIA, their latest video chips help processors decode the most resource-intensive H.264 format, in addition to the already popular WMV and MPEG2, including at high resolutions. There are some limitations for various chips. For example, not all low-end chips have sufficient capacity for the highest resolutions; the level of hardware support depends on the card model and its GPU. Still, the capabilities of the latest video cards for hardware-accelerated H.264 decoding suggest that, in theory, the problem of playing such files has been solved. Is that true, or are there still some problems?

In this article we are going to compare performance and playback quality for various video formats, from MPEG2 to the latest H.264/AVC. You can read about the latter format in the above-mentioned theoretical material. We'll pay special attention to the software decoder CoreAVC, which has already proved its worth. Offering the same image quality, CoreAVC is much more efficient than the other H.264 decoders from CyberLink and Nero, to say nothing of QuickTime. By the way, there is currently no hardware-accelerated version of CoreAVC, but its developers say that they plan on supporting video chips.

Testbed Configuration, Software, and Settings

Testbed configuration:

We used two video cards in our tests, based on GPUs from the main manufacturers:

We used the latest CATALYST version and the latest official ForceWare beta. According to NVIDIA, this version has some new features and changes concerning PureVideo technology.

Software (players, codecs):

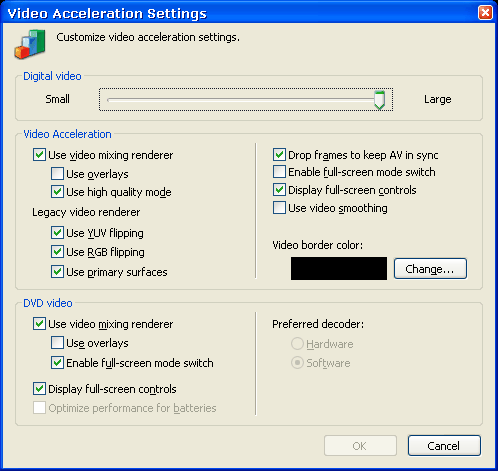

All driver and software settings were left at their defaults. The screenshot above shows the key Windows Media Player 10 performance options. "High quality mode" in WMP10 is responsible for using Video Mixing Renderer 9 (VMR9). We captured screenshots in 1024x768 full screen; performance tests were run in 1920x1080. We disabled overlay support in order to capture screenshots under the same conditions for ATI and NVIDIA.

The list of video fragments we used in our tests

As you can see, our test materials include video fragments in all popular video formats, most often in two resolutions: 1280x720 and 1920x1080. Our tests also include a small MPEG2 video fragment, 720x480 (480i), as DVDs are very popular. The only difference from most DVDs is interlacing. If we rule out the effect of deinterlacing, there are usually no problems with MPEG2 video at all.

We ran the tests in 1920x1080 with our own utility, which uses the ActiveX components of Windows Media Player 10. It measures CPU usage during playback, gathers WMP10 statistics, and takes screenshots of a specified video frame. We measured the average CPU usage, playing each video file three times. We also gathered statistics such as the number of dropped frames per second and the video frame rate, but some combinations of video files and codecs produced evidently wrong values, so we had to give up on this idea. The average CPU load, by contrast, caused no measurement problems. It is the figure we use in our tests to judge the quality of hardware decoding support in ATI and NVIDIA cards. Dropped frames are mentioned only once.

Test Results

We'll start the tests with the most interesting format, H.264. Both video cards were tested with two decoders: CoreAVC Pro, a purely software decoder that does not yet support hardware acceleration by video cards, and the DXVA-ready CyberLink H.264/AVC Decoder from PowerDVD 7 Deluxe. We also publish CPU load figures for another software H.264 decoder, the one built into QuickTime. This decoder was one of the first to appear, and it was mostly this decoder that gave H.264 its reputation as a resource-intensive format.

H.264 (720p)

According to the figures, decoding H.264 even in 720p with the old QuickTime decoder loads our powerful processor almost completely. Moreover, playback is jerky and frames are dropped. Not many, but it is still unpleasant. The CyberLink decoder copes well with its task on both cards; the load with the ATI card was a tad lower than with the NVIDIA card.
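The CPU load figures compared in these tests are averages over three playback runs per file. For readers who want to repeat such a comparison, here is a minimal Python sketch of that bookkeeping. It is not the code of our test utility: the function names `average_cpu_load` and `load_gap` are hypothetical, and the sample values are made up purely for illustration.

```python
from statistics import mean


def average_cpu_load(runs):
    """Return the mean CPU load (percent) over several playback runs.

    Each run is a list of CPU-usage samples taken while the clip plays.
    Every run is averaged first, so a run with more samples does not
    outweigh a run with fewer.
    """
    if not runs or any(len(run) == 0 for run in runs):
        raise ValueError("each run needs at least one sample")
    return mean(mean(run) for run in runs)


def load_gap(card_a_runs, card_b_runs):
    """Difference in average CPU load, in percentage points (A minus B)."""
    return average_cpu_load(card_a_runs) - average_cpu_load(card_b_runs)


# Hypothetical samples (percent CPU), three playback runs per card.
# These are NOT measurements from the article.
card_a = [[40.0, 44.0, 42.0], [41.0, 43.0], [45.0, 39.0, 42.0, 42.0]]
card_b = [[48.0, 52.0], [50.0, 50.0, 50.0], [49.0, 51.0]]

print(f"card A: {average_cpu_load(card_a):.1f}%")
print(f"card B: {average_cpu_load(card_b):.1f}%")
print(f"gap:    {load_gap(card_a, card_b):+.1f} points")
```

Reporting the gap in percentage points of CPU load, rather than as a ratio, keeps small differences in perspective when the processor is less than half loaded.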
CoreAVC shows the same picture, even though it is a purely software codec: NVIDIA is outperformed by ATI by a small margin of 7-8%. Considering that the CPU is less than half loaded, the difference comes to nought; you won't notice it in practice at all.

And now let's look at the image quality. It is almost identical in all cases. I didn't detect any significant differences, but I should still publish several samples.

I have no gripes with quality in any combination of video cards and codecs. They all show the same picture; there are just small differences in performance.

H.264 (1080p)

The second H.264 video fragment uses the highest resolution (1920x1080) in noninterlaced mode. We'll see whether performance differs across our decoders and video cards. Will the Athlon 64 3200+ cope with decoding this video fragment?

QuickTime used almost 100% of the CPU to play the video fragment in such a high resolution. Moreover, it dropped lots of frames and playback was still too slow. Why pay attention to it at all, you may ask, when our article is devoted to hardware video acceleration, which the QuickTime decoder does not use? We published this example because QuickTime was almost the only available decoder for this format when H.264 first appeared. Everybody used this codec and wondered how "heavy" the new format was...

When you look at the results of CoreAVC, the second software decoder, you will see that the problem has nothing to do with the format. Developers just need to be skilled enough at writing decoders. Just look: the purely software CoreAVC outperforms the CyberLink decoder, advanced on paper thanks to its DXVA support, even in the second video fragment!

ATI and NVIDIA video cards demonstrate closer results this time, but ATI still retains a small advantage, especially with CyberLink, a decoder that was developed in cooperation with ATI. At the same time, the subjective impression of the NVIDIA card is a tad worse than the results on the graph suggest. The NVIDIA card showed more jerks and frame rate instability, which is confirmed by the dropped-frame statistics. This is the only video fragment in our review that always dropped frames (about 15-20 for ATI and 140-150 for NVIDIA). I repeat, we are speaking about the CyberLink decoder.

As with the first fragment, I saw no significant differences in quality between the various H.264 decoders.
The picture is the same on cards from ATI and NVIDIA, except for contrast and brightness. The default settings are more appealing on the ATI card. Anyway, the GeForce can be configured to match; we checked that.

The second frame shows the same thing as the first one: screenshots from ATI and NVIDIA differ only in contrast and brightness. There are some minor differences in the sharpness of the letters and logos at the bottom, but we cannot say for sure which screenshot offers better quality, especially as the difference may be caused by the contrast setting.

Here are the H.264 results. With the CyberLink decoder, ATI leads by an insignificant performance margin. With CoreAVC, the two cards show almost the same results, and CoreAVC itself is much more efficient. Our first conclusion: all that glitters is not gold. In other words, support for hardware decoding in itself does not mean better performance and/or quality. Second, CoreAVC offers better performance, and its quality is no worse. And the third conclusion: we found no problems with the quality of H.264 decoding.

WMV HD (720p)

The WMV decoding diagrams are simpler, as they cover the only possible decoder, the one from Microsoft. It comes with Windows Media Player and is the only available WMV decoder (ffdshow seems to have an alternative option, but it does not work so far).

The first WMV HD video fragment has a resolution of 1280x720 (720p). It is encoded with a relatively low bitrate, 5 Mbit/sec. Playback performance is a tad higher on the NVIDIA video card, but the difference is not big. As with H.264, decoding the 720p video file takes less than half of the CPU resources. We had no problems with video playback; it was smooth.

We saw no differences in quality, except for the above-mentioned brightness and contrast settings. However, the bitrate of the first WMV HD video file is too low and its quality is not high. Perhaps the 1080p video fragment will change the situation.

WMV HD (1080p)

The 1080p video file with a higher bitrate takes up just a tad more CPU resources than the 720p file. Again NVIDIA slightly outperforms ATI, by about 5%; you just won't see the difference in practice. So even a testbed that is not the fastest copes well with playing the 1080p video file. Perhaps other WMV HD video files will behave differently, but this one plays without any problems. And now we should check the quality.

Here is the first quality surprise! ATI retains the best brightness and contrast settings by default, but evident banding appears on smooth color gradients. It's most noticeable in the selected fragment, but it is present in other parts of the screenshot as well. We should check the quality on another screenshot...

Again ATI has evident quality problems in the selected part of the screenshot, now in the form of barely visible lines, and the slight banding on smooth blue gradients remains.

The conclusions on WMV HD are not comforting for ATI: it demonstrates evident problems with the quality of decoded video. Perhaps it's a problem of this particular driver version or of the combination of testbed components. But the fact remains that the quality of WMV playback on the ATI card is not ideal. As for performance, we have no gripes with either card. CPU usage was only slightly higher than 50% even for high-resolution video files. In this case, NVIDIA's efficiency is a tad better.

MPEG2 (480i)

Let's proceed to MPEG2, the oldest format. First of all we tested a video file in the most popular DVD resolution (720x480), complicating the task by choosing a file with two fields (interlacing). Decoding and playing such video requires high-quality and fast post processing (deinterlacing). Let's see how ATI and NVIDIA cards cope with this task using two different MPEG2 decoders...

On the ATI card we used the MPEG2 decoder from CyberLink PowerDVD 7 and the proprietary ATI MPEG Video Decoder. On the NVIDIA card we used CyberLink (the main decoder) as well as the latest NVIDIA PureVideo (commercial).

Frankly speaking, the results are puzzling. Remember that all decoders, drivers, and players used default settings, except for Windows Media Player, which was configured to use VMR9 without overlays. According to our tests, the CyberLink decoder loads the processor much less on the ATI card than on the NVIDIA card. And vice versa: if we compare the decoders developed by the two companies, PureVideo loads the CPU less than the native decoder from ATI. They sort of swap places... But we shouldn't forget that PureVideo is not freeware. You'll have to pay for using the latest innovations in hardware decoding and post processing, so you should take the price of PureVideo into account.
On the other hand, CyberLink PowerDVD is not cheap either... But performance is not the most important point here, especially as the CPU load ranged from a quarter to a half of CPU resources. The main objective is to get maximum quality. We'll now try to evaluate it.

The first MPEG2 example demonstrates serious differences on most screenshots. For some reason, the CyberLink decoder started blending fields on the ATI card... We might assume that DXVA was not enabled, that the video file was encoded incorrectly, or that the default settings of the decoder and drivers were to blame. We reran the tests after reinstalling the software and obtained the same results. Perhaps ATI drivers are fine-tuned for video overlays, not VMR9? This assumption proved wrong as well: enabling the overlay mode changed nothing.

The native ATI decoder showed some remnants of previous frames in the form of barely noticeable lines above the bird and the text... Both NVIDIA frames, on the other hand, are very similar, even though the decoders and CPU load were different. The oddities of video playback on the ATI card can also be found on the second screenshot from this video file:

ATI demonstrates the same problem with CyberLink: deinterlacing by blending fields. The proprietary decoder has no such problem, but the wing of the largest bird shows traces of the previous frame or of the other field. CyberLink demonstrates good quality on the NVIDIA card, but PureVideo is still better. This screenshot could bring PureVideo the victory in the quality contest, as it offers a sharper image: just look at the horizontal lines along the upper edge of the wall, the hero's shadow, and the ground details. But you should keep in mind that this decoder is not free, which is its main drawback.

MPEG2 (1080i)

The second MPEG2 file also contains interlaced video, but in a higher resolution. I wonder how our contenders will cope with this task. Won't the post-processing load be too high?

ATI demonstrates bad results. The RADEON X1800 XL performs worse than the competing product with both the ATI decoder and CyberLink. On the ATI card, the CyberLink decoder is just a tad better than the ATI MPEG Video Decoder. The NVIDIA card had far fewer problems playing this 1080i MPEG2 video fragment. A CPU load of 70-80% is too much for MPEG2, but ATI still had no problems with playback. Let's have a look at the quality: are there any new unusual problems?

The situation is generally the same. The CyberLink decoder on the ATI card deinterlaces video by blending fields. In the other cases, more advanced methods with less noticeable artifacts are used. The difference in default brightness and contrast settings between the ATI and NVIDIA cards is still noticeable. Everything is more or less OK in other respects. The ATI decoder is a tad "noisier", and there is noticeable banding in the sky. PureVideo Decoder is the best in this task.
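To illustrate why blending fields looks worse on moving objects, here is a minimal Python sketch. It is only a toy model under stated assumptions: a frame is a list of rows of grayscale values, even rows belong to the top field and odd rows to the bottom field, and simple line doubling of one field stands in for the more advanced methods, whose actual algorithms in these decoders we do not know. The function names are hypothetical.

```python
def split_fields(frame):
    """Split an interlaced frame (a list of rows) into top and bottom fields."""
    if len(frame) % 2:
        raise ValueError("expected an even number of rows")
    return frame[0::2], frame[1::2]


def blend_fields(frame):
    """Deinterlace by averaging the two fields, then restore full height."""
    top, bottom = split_fields(frame)
    out = []
    for top_row, bottom_row in zip(top, bottom):
        mixed = [(a + b) // 2 for a, b in zip(top_row, bottom_row)]
        out.extend([mixed, list(mixed)])  # each blended line is shown twice
    return out


def line_double_top_field(frame):
    """Deinterlace by keeping only the top field and doubling its lines."""
    top, _ = split_fields(frame)
    out = []
    for row in top:
        out.extend([list(row), list(row)])
    return out


# A bright pixel (255) that moved one column to the right between fields.
frame = [
    [0, 255, 0, 0],  # top field, line 0
    [0, 0, 255, 0],  # bottom field, line 0 (captured a moment later)
    [0, 255, 0, 0],  # top field, line 1
    [0, 0, 255, 0],  # bottom field, line 1
]

for row in blend_fields(frame):
    print(row)  # the object shows up in both positions at half brightness
for row in line_double_top_field(frame):
    print(row)  # one sharp position, at the cost of vertical resolution
```

In the blended output the moving pixel appears in both columns at half intensity, which is the ghosting described above. Line doubling avoids the ghost but discards half of the vertical detail, which is why better decoders adapt the method to the amount of motion.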

We found no problems with deinterlacing on the second frame. There is little motion here, so we concentrate on the sharpness and aliasing of the logo in the corner. This element looks best on the screenshot from ATI with the CyberLink decoder. Then follows NVIDIA, which demonstrates almost identical quality in both cases. The worst quality is provided by the ATI decoder: we can clearly see aliasing on the dark lines of the logo. We can again see the difference in default color settings between ATI and NVIDIA.

MPEG2 (1080p)

Let's analyze the results of the third and last MPEG2 fragment, noninterlaced video in the highest HD resolution.

ATI's lag is not as big here as in the previous case. If we don't take PureVideo into account, we can say that the cards offer similar performance. But the proprietary MPEG2 decoders bring GeForce forward: comparing ATI MPEG Video Decoder with PureVideo Decoder, ATI is nearly twice as slow as NVIDIA. It's another proof that PureVideo decodes MPEG2 video really efficiently. Unfortunately, the ATI card fared worse in this case. But on the whole, it's still possible to play 1920x1080 MPEG2 video even when it loads the CPU by 60-70%. The remaining 30-40% of CPU resources will suffice for most tasks that usually run in the background when you watch video on your home PC. Let's analyze the quality:

There are no problems with quality this time. All decoders and both cards worked well. The only flaw is slightly blurry details on the NVIDIA card with the CyberLink decoder built into PowerDVD. The screenshots are the same in other respects; they again differ only in color settings.

Let's sum up the MPEG2 tests. NVIDIA performs a tad better, considering its PureVideo decoder. ATI has noticeable problems with deinterlacing in the CyberLink decoder and noticeably higher CPU usage with HD video in MPEG2 format. Anyway, the processor together with either of the two video cards in our testbed offers enough resources to decode, deinterlace, and display MPEG2 video. There is a small difference in CPU usage, but it's not important in real life. Quality is a different matter, and it really deserves analysis... As most questions about quality concern decoding and deinterlacing MPEG2 video, we plan on writing a separate article about AVIVO and PureVideo MPEG2 decoding tested in the HQV benchmark. We hope it will answer all our questions. We'd like our readers to comment on what seemed wrong to them in this article and on the best way to configure ATI and NVIDIA cards for maximum quality and performance.

Conclusions

Let's state our general conclusion first: the testbed coped with playback of all video files, but not all decoders fared well. For example, the QuickTime H.264 decoder wastes CPU resources, and its performance on all HD video files was far from ideal. CoreAVC, on the other hand, uses far fewer CPU resources to decode the same files; it can play any H.264 video (including the highest 1080p resolution) without resorting to a video card. The same goes for MPEG2 decoders: the all-round CyberLink decoder handles MPEG2 too, but NVIDIA cards perform better with PureVideo, which you'll have to buy. So, let's formulate the first conclusion: much depends on the decoder.
If you make the right choice, you won't even need the vaunted support for hardware decoding and post processing in your video card. If your choice is wrong, even this feature will not help you. The Athlon 64 3200+ installed in our testbed coped well with playing video files in all popular formats and all resolutions. It all depends on the codec. We had no performance problems playing video files of any format in the optimal cases for ATI (CoreAVC for H.264, WMVideo for WMV, and either of the two decoders for MPEG2, whichever you prefer) and for NVIDIA (the same applies here, but PureVideo does better for MPEG2, though CyberLink also copes well).

Many people, yours truly included, are of the opinion that it makes little sense to compare video cards by their performance and CPU usage when decoding video: few users watch movies and run other resource-intensive tasks on their computers at the same time.

Our conclusion on quality: video quality is generally good, with a few exceptions, namely banding in WMV, ATI's strange deinterlacing method for MPEG2, and mediocre default contrast and brightness settings on NVIDIA. There are no significant differences in the picture apart from these issues.

As for formats, there are fewer problems with WMV HD, because there is only one decoder for it, the one integrated into Windows Media Player. You should choose a decoder for H.264 carefully. One of the best is CoreAVC: purely software, it provides excellent quality and performance on both ATI and NVIDIA cards. Finally, MPEG2 is the most popular and the most problematic format. As this format often uses interlaced video, it requires post processing in the form of deinterlacing, and it is this process that causes most problems. This article cannot answer all questions about MPEG2.
So we plan on publishing another article devoted to playback of video files in this format on video cards from ATI and NVIDIA, the most problematic issue in quality terms according to our tests.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.