
Does the 2900 XT really need 1 GB of memory?

We understand why AMD does not allow retail sales of cards like the HD 2900 XT 1GB. Even after the first article of this series it was clear that the problem was not memory capacity: 512 MB is quite sufficient even for top graphics cards. There is nothing wrong with the memory bus width either. The problem is in the core. Our tests showed that the first driver bundled with the first card sample raised performance significantly at the expense of other card features. By "other" we mean antialiasing. Alas, there is evidently a bug in the GPU that keeps the core from processing AA as it normally would.

But the idea was brilliant: expand the memory bus to 512 bits so that AA comes almost for free! It's a pity, but we can already state that the card does not need a 512-bit bus, because the bandwidth is never fully utilized. The company got around the bug rather smartly: the drivers force the ALUs to process AA. That is, AA is actually emulated, which takes up a lot of shader resources and reduces overall speed. All our tests prove it.

Now imagine this product with 1024 MB of memory instead of 512 MB. What does it give us? Not much. OK, memory performance is higher, because the memory frequency is raised from 830 MHz to 1000 MHz, so memory bandwidth grows. But will it help the gimpy AA mode? I think that if you pay US$550 for this product, you have the right to demand normal AA operation. AMD's programmers are currently optimizing the drivers to minimize these losses. But the cards are already in stores and people buy them.

So we are very curious: what will 1 GB of memory give this product? And does it make sense to overpay $100 for it? (The MSRP of the HD 2900 XT 512 MB is 449 USD, and the MSRP of the 1 GB card is 549 USD. The latter is set not by AMD, however, but by its partners. As I have already mentioned, the chip maker strongly objects to retailing these cards and insists that they are manufactured only for OEMs and system builders.)
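To put the frequency and price figures above side by side, here is a minimal Python sketch of the usual peak-bandwidth arithmetic. It assumes the memory transfers data twice per clock (double data rate), which the article does not state; the function name and the 10^9-bytes-per-GB convention are also our choices for illustration.

```python
def bandwidth_gb_s(bus_width_bits, memory_clock_mhz, transfers_per_clock=2):
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=2 is an assumption (double data rate memory).
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Figures taken from the article: 512-bit bus, 830 MHz vs 1000 MHz memory.
bw_512mb = bandwidth_gb_s(512, 830)
bw_1gb = bandwidth_gb_s(512, 1000)

# Relative gain in peak bandwidth versus relative price premium (MSRP 449 vs 549 USD).
bandwidth_gain_pct = (bw_1gb / bw_512mb - 1) * 100
price_premium_pct = (549 / 449 - 1) * 100

print(f"512 MB card: {bw_512mb:.1f} GB/s, 1 GB card: {bw_1gb:.1f} GB/s")
print(f"Bandwidth gain: {bandwidth_gain_pct:.1f}%, price premium: {price_premium_pct:.1f}%")
```

Under these assumptions the peak bandwidth grows by roughly a fifth, about the same proportion as the price. Peak bandwidth is only an upper bound, though; as noted above, the card does not fully utilize it.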
All HD 2900 XT cards are manufactured to AMD/ATI's orders at third-party plants. Partners of the American-Canadian company have nothing to do with manufacturing; they just buy finished cards from AMD. Nevertheless, AMD is well aware that all partners will want to sell these cards, OEM or not, especially if system builders turn them down. Besides, system builders themselves might want to get rid of surplus stock (which usually goes to the gray market).

All right, now let's proceed to the examination of the graphics card, which was kindly provided by our readers (and that's very pleasing!). This product bears Sapphire markings and reaches stores via Sapphire's sales channels.

Graphics card

This card is NO DIFFERENT from the modification with half as much memory. It just uses higher-capacity memory chips, that's all. There are no other changes.

The R600 requires a much more complex PCB than the R580 (X1950 XTX) because its memory bus is twice as wide. Nevertheless, the manufacturer tried to keep the card no longer than previous solutions and to equip it with a proper cooler of normal dimensions. Owing to very high power consumption (above 200 W), the card has two power connectors. One of them is an 8-pin connector (PCI-E 2.0) instead of the usual 6-pin one. There are presently no adapters for this connector. That's OK, because a usual 6-pin cable from a PSU can be plugged into it. The remaining two pins are responsible for unlocking overclocking: the driver checks whether these pins are powered, and if they are not, it blocks any attempts to raise frequencies.
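The power arithmetic behind this connector layout can be sketched as follows. The wattage limits are the nominal PCI Express figures (75 W from the slot, 75 W per 6-pin cable, 150 W per 8-pin cable) and do not come from the article; the function names are hypothetical, and the overclocking check is only a simplified model of the driver behaviour described above.

```python
# Nominal PCI Express power limits in watts (assumed from the PCIe spec, not from the article).
SLOT_W = 75
CABLE_W = {"6-pin": 75, "8-pin": 150}

CARD_DRAW_W = 200  # the article says "above 200 Watt"; used here as a lower bound


def power_budget_w(cables):
    """Total nominal power available from the slot plus the plugged-in cables."""
    return SLOT_W + sum(CABLE_W[c] for c in cables)


def overclocking_allowed(cables):
    """Simplified model: overclocking is unlocked only when a real 8-pin cable
    powers the two extra pins of the 8-pin socket."""
    return "8-pin" in cables


for cables in (["6-pin", "6-pin"], ["6-pin", "8-pin"]):
    budget = power_budget_w(cables)
    state = "unlocked" if overclocking_allowed(cables) else "locked"
    label = " + ".join(cables)
    print(f"{label}: {budget} W budget, {budget - CARD_DRAW_W} W over 200 W, overclocking {state}")
```

With two 6-pin cables the nominal budget is 225 W, which leaves little room above the card's stock consumption. That is one plausible reason for tying overclocking to the 8-pin cable, although the article does not give the reasoning.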

The card has TV-Out with a proprietary jack. You will need a special adapter (usually shipped with the card) to output video to a TV set via S-Video or RCA. We should also note that all top cards from ATI are traditionally equipped with VIVO (including Video In to convert analog video to digital form). Here this function is based on the RAGE Theater 200 instead of the traditional Theater.

We should mention full HDMI support. The card is equipped with its own audio codec, whose signal is routed to the DVI output. So the complete video and audio signal goes through a special bundled DVI-to-HDMI adapter to an HDMI receiver.

We can see that the card is equipped with a couple of DVI jacks. Dual-link DVI allows resolutions above 1600x1200 via the digital interface. Analog monitors with a d-Sub (VGA) interface are connected with special DVI-to-d-Sub adapters. Maximum resolutions and frequencies:

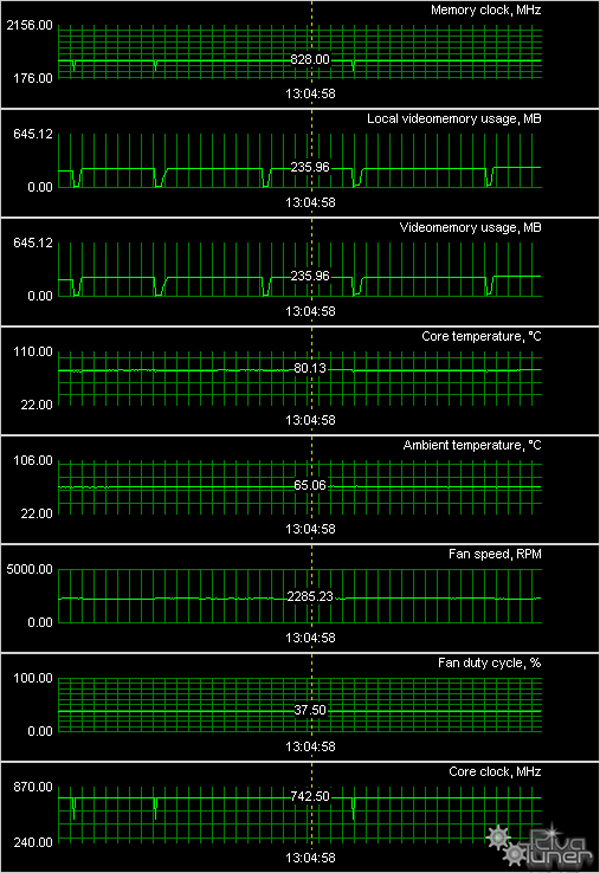
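As a rough illustration of why dual-link DVI matters for high resolutions, the sketch below compares the pixel clock a mode needs with the 165 MHz limit of a single DVI link. The total timings (active pixels plus blanking) are commonly cited VESA values and are assumptions here, not figures from the article; the helper names are hypothetical.

```python
SINGLE_LINK_MAX_MHZ = 165.0  # maximum pixel clock of one DVI link


def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock = total pixels per frame (including blanking) * frames per second."""
    return h_total * v_total * refresh_hz / 1e6


def needs_dual_link(h_total, v_total, refresh_hz):
    """True when the mode exceeds what a single DVI link can carry."""
    return pixel_clock_mhz(h_total, v_total, refresh_hz) > SINGLE_LINK_MAX_MHZ


# (h_total, v_total, refresh) -- assumed timings, including blanking intervals.
MODES = {
    "1600x1200@60 (VESA DMT)": (2160, 1250, 60),
    "2560x1600@60 (CVT reduced blanking)": (2720, 1646, 60),
}

for name, timing in MODES.items():
    clock = pixel_clock_mhz(*timing)
    link = "dual link" if needs_dual_link(*timing) else "single link"
    print(f"{name}: {clock:.1f} MHz -> {link}")
```

With these timings 1600x1200 at 60 Hz sits just under the single-link limit, while 2560x1600 at 60 Hz clearly requires both links.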

Cooling system

The baseline article mentioned that the cooler usually runs at 37% of its maximum fan speed. However, the noise is noticeable even in this mode. The core temperature does not exceed 80°C. Clearly, the 1 GB card dissipates almost as much power as the 512 MB one; there is not much difference here.

When we examined the 512 MB card, we tried to reduce the fan speed to 31% (when the noise is practically unnoticeable). We saw that the core temperature reaches 100°C in this case. The cooler is then forced to operate at 100% (howling!) for a short time, after which the fan speed drops back to 37%. The same happens with the 1 GB product. You can control fan speed only in large steps: it's either 31% or 37% (the new version of RT can do that). Hence the conclusion that 37% is the required minimum that keeps the GPU from overheating. You must not reduce the speed below this limit. That is, the engineers were not just playing it safe here: they determined the real minimum speed needed in 3D mode to avoid overheating. And this minimum is not quiet. That's a big problem of the new cards. Here is a little consolation: the noise is generated by the airflow, not by the fan itself, so its frequency is not very irritating.
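A fan-control utility that wanted to respect these observations could clamp user requests as in the sketch below. This is a hypothetical policy built only from the numbers reported above (37% minimum, full speed at 100°C); it is not the card's actual firmware logic, and all names are made up for illustration.

```python
MIN_SAFE_DUTY_PCT = 37    # lowest fan speed that avoided overheating in our 3D tests
OVERHEAT_TEMP_C = 100     # core temperature at which the cooler jumped to full speed
EMERGENCY_DUTY_PCT = 100


def effective_fan_duty(requested_pct, core_temp_c):
    """Return the fan duty to apply for a user request at a given core temperature.

    Hypothetical policy: full speed when the core is at or above the overheat
    threshold, otherwise never go below the safe minimum.
    """
    if core_temp_c >= OVERHEAT_TEMP_C:
        return EMERGENCY_DUTY_PCT
    return max(requested_pct, MIN_SAFE_DUTY_PCT)


print(effective_fan_duty(31, 80))   # request below the minimum is raised to 37
print(effective_fan_duty(50, 80))   # request above the minimum is kept
print(effective_fan_duty(31, 100))  # overheating forces full speed
```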

As this card is an OEM product, packaging is out of the question. We can only say that the bundle includes DVI-to-VGA, DVI-to-HDMI, and VIVO adapters, as well as TV cords.

Installation and Drivers

Testbed configuration:

VSync is disabled.

Test results: performance comparison

We used the following test applications:

Graphics cards' performance

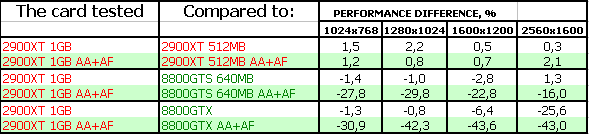

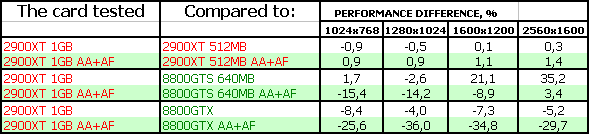

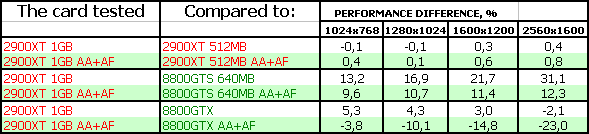

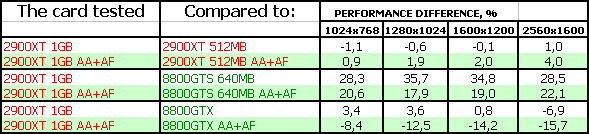

If you are familiar with 3D graphics, you will understand our diagrams and draw your own conclusions. However, if you are interested in our comments on test results, you may read them after each test. Anything that is important to beginners and those who are new to the world of video cards will be explained in detail in the comments.

First of all, you should look through our reference materials on modern graphics cards and their GPUs: the ATI RADEON X1300-1600-1800-1900 Reference and the NVIDIA GeForce 7300-7600-7800-7900 Reference. Be sure to note the operating frequencies, support for modern technologies (shaders), and the pipeline architecture.

Secondly, if you have just begun to realize how large the selection of video cards is, don't worry: our 3D Graphics section offers articles about 3D basics (you will still have to understand them, because when you run a game and open its options, you'll see such notions as textures, lighting, etc.) as well as reviews of new products. There are just two companies that manufacture graphics processors: AMD (its ATI department is responsible for graphics cards) and NVIDIA. So most of the information is divided into these two sections. We also publish monthly 3Digests that sum up all comparisons of graphics cards for various price segments. The February 2007 issue analyzed how much modern graphics cards depend on processors without antialiasing and anisotropic filtering. The March 2007 issue did the same with AA and AF.

Thirdly, have a look at the test results. We are not going to analyze each test in this article, primarily because it makes more sense for us to draw a bottom line at the end of the article. We will, however, make our readers aware of any special circumstances or extraordinary results.

S.T.A.L.K.E.R.
Test results: S.T.A.L.K.E.R.

World In Conflict (beta), DX10, Vista
Test results: World In Conflict (beta), DX10, Vista

Splinter Cell Chaos Theory (No HDR)
Test results: SCCT (No HDR)

Splinter Cell Chaos Theory (HDR)
Test results: SCCT (HDR)

Call Of Juarez
Test results: CoJ

Company Of Heroes
Test results: CoH

AA stopped working in this game with all AMD graphics cards starting with Drivers 7.8. So in this case AA+AF for the 2900 means just AF!

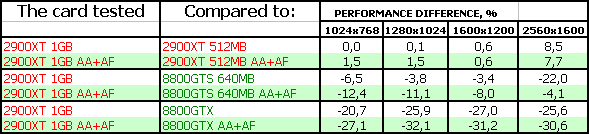

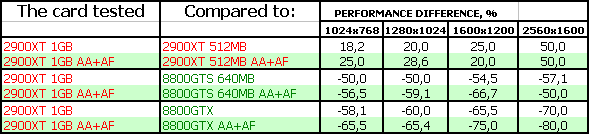

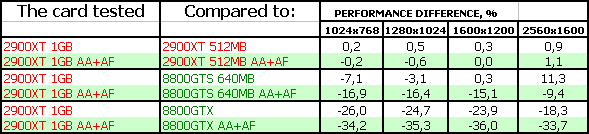

Serious Sam II (No HDR)
Test results: SS2 (No HDR)

Serious Sam II (HDR)
Test results: SS2 (HDR)

Prey
Test results: Prey

3DMark05: MARKS
Test results: 3DMark05 MARKS

3DMark06: SHADER 2.0 MARKS
Test results: 3DMark06 SM2.0 MARKS

3DMark06: SHADER 3.0 MARKS
Test results: 3DMark06 SM3.0 MARKS

Conclusions

Our tests show that there are almost no performance differences between the 512 MB and 1024 MB modifications of the 2900 card. That is, even where a difference exists (for example in SS2, and primarily in games with high memory bandwidth requirements), it is not big enough to justify paying so much for it. We see the same performance drops in AA modes that we mentioned at the beginning of the article (back in our 3Digest we wrote that AA has not worked in CoH on any AMD card since Drivers 7.8). As for WiC, its performance is below the playable limit, so it makes no sense to analyze this game, even though it consumes a great deal of memory and that affects performance.

At the same time, we can see that the HD 2900 demonstrates good results in some tests and even outperforms its rivals. It's still far from the 8800 GTX level, but that card is more expensive. All 2900 cards should be cheaper to become more attractive.

The ATI RADEON HD 2900 XT 1024MB PCI-E is first and foremost an image product. It's for those users who want a gaming graphics card with a huge memory capacity (we remember the 7950GX2 with 1 GB of memory, but that is a dual-GPU system). Does it make sense to pay more than for the 512 MB modification? It's not an easy question; it's probably one of those holy war issues. There are few such cards in stores, so they will all find their buyers.

As for the drawbacks: the cooler is not noisy in 2D mode, but the product gets really hot, so you'll need a well-ventilated PC case. You cannot even touch the card after it has worked in 3D mode, it's that hot! The gravest drawback is the performance drop in AA modes.
Either you wait for new drivers to make up for the bug, or you wait for the next top product from AMD. Or you choose the GeForce 8800.

As always, the final choice is up to the reader. We can only inform you about products and their performance, but we can't make a buying decision. In our opinion, that should be solely in the hands of the reader, and possibly their budget. ;)

And another thing that we never tire of repeating from article to article. Having decided to choose a graphics card by yourself, you have to realize that you are about to change one of the fundamental PC parts, which might require additional tuning to improve performance or enable some quality features. This is not a finished product, but a component. So you must understand that in order to get the most from a new graphics card, you will have to acquire some basic knowledge of 3D graphics and graphics in general. If you are not ready for this, you should not perform upgrades by yourself. In that case it would be better to purchase a ready-made system with preinstalled software (along with the vendor's technical support) or a gaming console that doesn't require any adjustments.

More comparative charts of this and other graphics cards are provided in our 3Digest.

Andrey Vorobiev (anvakams@ixbt.com)

October 12, 2007

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.