June and Computex seem like only yesterday, but summer is over and dull autumn days await us. Things are different in the IT world, though. It is as if this industry were located solely in the Southern Hemisphere: summer is all peace and quiet, but manufacturers and vendors grow increasingly active toward winter. The climax of the year is Christmas and the pre-holiday sales, followed by New Year's Eve. Then it's back to work for the final steps before the spring turmoil at Computex, and then rest until autumn. That's the paradox of the human world, as opposed to nature: only the inhabitants of the Southern Hemisphere live in full accord with the IT rhythm, even though 90% of all projects and production are based in the Northern Hemisphere :-)

Nature is slowing down to prepare for the cold, and we are expecting new video cards. And here they are. We have recently reviewed the GeForce 7900 GS. Now it's the turn of the ATI product announced back in August. The Canadian manufacturer announced a general trend toward lower prices for its top accelerators. The recommended retail price of the X1950 XTX was set at 449 USD, so all lower-ranked cards should go down in price proportionally. And here is a nice surprise: the RADEON X1900 XT (a full-fledged one, without any cutbacks!) with 256MB of memory instead of 512MB. Its recommended price is 279 USD. Thus, ATI's new line of top products looks like this (card specifications in brackets: vertex pipelines/pixel shader units/texture units/ROPs):

Here are competing cards from NVIDIA:

Hence the X1900 XT 256MB will compete with both the older 7900 GT and the new 7950 GT, since NVIDIA has no clear-cut positioning in the $279 segment. Unfortunately, we haven't received a 7950 GT 256MB yet, so we cannot include it in the comparison. Nor can we emulate it, because none of our GeForce 7800 GTX or 7900 GT cards with this amount of memory can run at a GPU clock of 550 MHz. So the Canadian company is obviously trying to attract customers in the popular $250-$280 segment with its X1900 XT, famous for the power of its 48 shader units, but with half the memory. Let's run our tests and see.

Video card

As you can see, the card is unchanged compared to its predecessors. The only difference is the capacity of its memory chips.

The card has a TV-Out with a non-standard connector, so you will need the special bundled adapters to output video to a TV set via S-Video or RCA. You can read about the TV-Out in more detail here. The card is also equipped with a TV-In, which likewise requires a special adapter. Analog monitors with a D-Sub (VGA) interface are connected via DVI-to-D-Sub adapters. Maximum resolutions and frequencies:

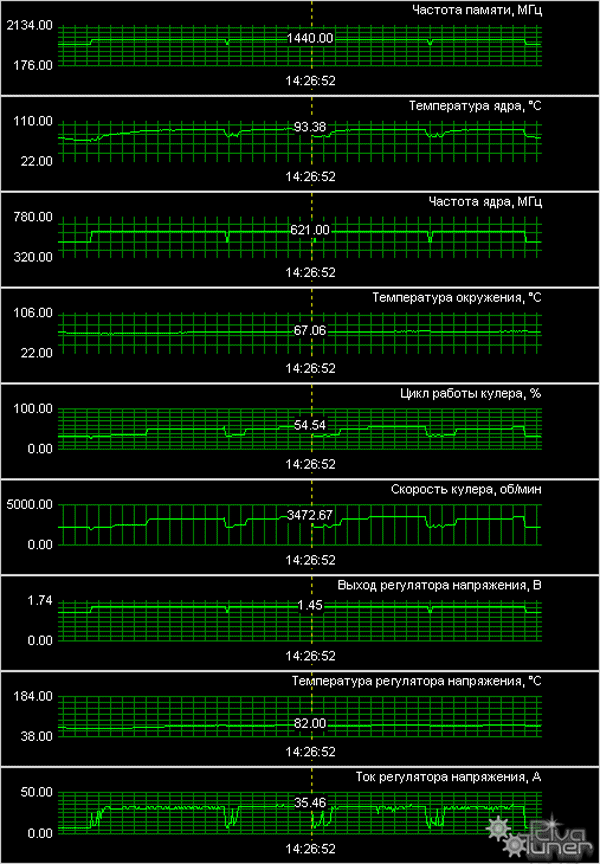

As for the cooler, the card uses the same reference cooler as the X1900 XT/XTX. It's a fairly complex device, and it becomes very noisy when the card heats up. We have described this unfortunate cooler many times: it has made many ATI fans groan and look for ways to get rid of it. Just look at the temperatures of the X1900 XT (to say nothing of the X1900 XTX)! I also want to point out that the actual core and memory frequencies of all X1900 XT/XTX cards were lowered by 4-10 MHz some time ago. But even this step has not solved the overheating problem. It's a hot card, and the cooler is loud (in F.E.A.R., for example, the noise becomes almost intolerable).

We noted in the X1950 XTX announcement that this noisy and awkward cooler had finally been replaced with a better solution. Unfortunately, the new cooler's retention module is not compatible with older cards, so we have to put up with this monster, the reference cooler from the X1900 series. Users will again have to look for a replacement cooling system (those who are irritated by the noise, that is; some people either don't run the card in heavy game modes or don't mind the noise).

Since this is an engineering sample, there is no bundle or retail package to review.

Installation and Drivers

Testbed configuration:

VSync is disabled.

Test results: performance comparison

We used the following test applications:

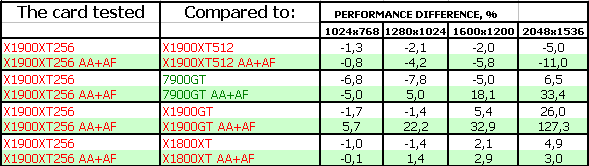

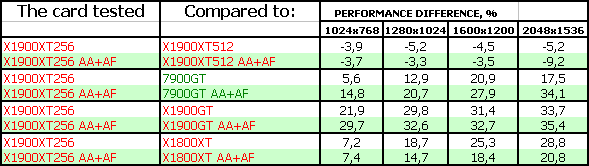

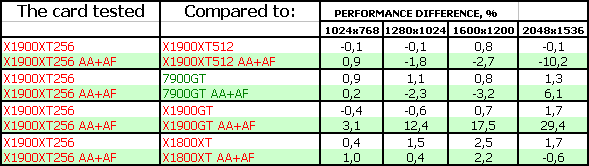

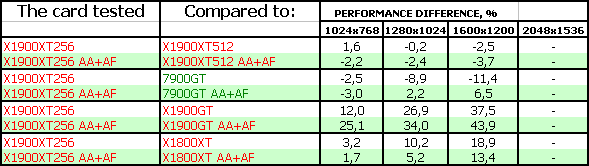

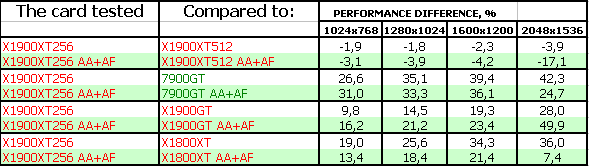

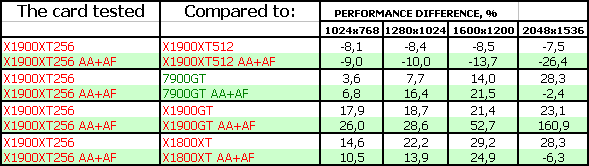

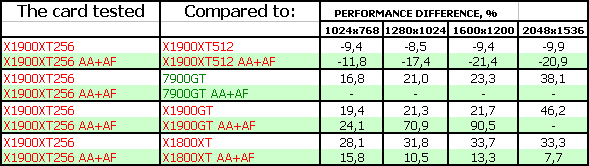

Summary performance diagrams

Game tests that heavily load vertex shaders, mixed pixel shaders 1.1 and 2.0, active multitexturing.

FarCry, Research

Game tests that heavily load vertex shaders, pixel shaders 2.0, active multitexturing.

F.E.A.R.

Splinter Cell Chaos Theory

Call of Duty 2 DEMO

Half-Life2: ixbt01 demo

Game tests that heavily load pixel pipelines with texturing, active operations of the stencil buffer and shader units

DOOM III High mode

Chronicles of Riddick, demo 44

Synthetic tests that heavily load shader units

3DMark05: MARKS

3DMark06: Shader 2.0 MARKS

3DMark06: Shader 3.0 MARKS

Conclusions

ATI RADEON X1900 XT 256MB PCI-E. According to our tests, this card (not really a new product: it just has less memory and a lower price) outperforms the 7900 GT practically everywhere, at least at present. Even if the 7900 GT sells for less, the X1900 XT is still the better buy. We don't know how the 7950 GT 256MB will fare yet... Perhaps it will offer a better price/performance ratio. But let me repeat that the X1xxx series has an important advantage: it supports HDR+AA, a very important feature for top cards, which are fast enough for this mode. NVIDIA products simply cannot do that. Add to this higher-quality anisotropic filtering. There is also an opinion that the default image quality of NVIDIA cards, with all their optimizations, is worse than that of ATI products. When the optimizations are disabled to improve quality, NVIDIA accelerators lose much of their performance. Perhaps we'll analyze this issue in the near future and revisit image quality; the last time we did so was last year.

But still... The very noisy cooler and the card's overheating, which also heats up other components inside your computer, keep us from calling the X1900 XT 256MB the best card in its class. Yes, its results are good, but other issues matter for modern top accelerators as well. We all know how hot these cards get and how noisy they are. Ever since 2002 and the GeForce FX 5800 (NV30), whose howling cooler shocked its users, we have taken high-end cooling systems more seriously: we now examine them thoroughly, and they play a bigger role in our choice of cards. For now, the only option is to buy an X1900 XT plus a third-party cooler (e.g. Zalman), but will the total cost be justified? If the 7950 GT comes with the cooler from the 7900 GTX, it will clearly be the more sensible purchase. The X1900 XT 256MB is a good card (it would have been our favorite choice for 279-300 USD), if it weren't for the cooling system. :(

You can find more detailed comparisons of various video cards in our 3Digest.


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.