In one of our previous articles on new NVIDIA products we severely criticized ATI's recent activities. But as time and current events show, the situation is not as bad as it seemed. Yes, I understand the Canadians; they are living through hard times. I understand that the R520 was ready nearly a year ago and that they had Napoleonic plans to launch the new card in spring or summer of this year. And we can understand the difficulties their engineers faced when an R520 running above 550 MHz caused unpredictable system glitches and reboots. Neither the 0.09-micron process technology nor the high frequencies as such were at fault, and there were no overheating problems. The trouble was current leakage, which built up at 550 MHz and higher and led to the consequences mentioned above.

This problem was very difficult to track down and then to eliminate. By the time they managed it, it was already summer, and the company still had to manufacture a new revision of the R520 with the fix. That is why the product was put off until October, although it had been due earlier than the G70. Now we are waiting for the company to accumulate enough chips of the new revision to launch the X1800 XT on a mass scale.

The company initially planned to launch three R520-based cards. But faced with the leakage problem, it launched only two: the X1800 XT (on the new core revision) and the X1800 XL (on the previously manufactured R520). Hence the huge frequency gap: the X1800 XT runs at 625 MHz and the X1800 XL at 500 MHz, because the latter uses the chips with the leakage problem. Thus only 500 MHz (not even 550 MHz).

This chip evidently does not need very high memory bandwidth: 1 GHz (effective) is quite enough. But why be stingy? If you equip cards with 1.4ns memory capable of operating at 700 (1400) MHz, let it run at that frequency! There is never too much memory bandwidth. Besides, it would have come in handy for AA, especially for the heaviest AAA modes.
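To put the memory grades in perspective, here is a minimal sketch of how a cycle-time rating maps to a nominal clock. It assumes the usual convention that the rated clock is the reciprocal of the cycle time and that DDR memory transfers data on both clock edges; the function names are hypothetical and chosen only for this illustration.

```python
def rated_clock_mhz(cycle_time_ns: float) -> float:
    """Nominal clock in MHz for a memory chip with the given cycle time.

    Assumption: rated clock = 1 / cycle time (1 ns corresponds to 1000 MHz).
    """
    return 1000.0 / cycle_time_ns


def effective_rate_mhz(clock_mhz: float) -> float:
    """DDR memory transfers data on both clock edges, doubling the rate."""
    return 2.0 * clock_mhz


# The two memory grades mentioned in the article.
for label, cycle_ns in (("1.4ns GDDR3", 1.4), ("2.0ns GDDR3", 2.0)):
    clock = rated_clock_mhz(cycle_ns)
    print(f"{label}: ~{clock:.0f} MHz real, ~{effective_rate_mhz(clock):.0f} MHz effective")
```

Under these assumptions, 1.4ns chips are rated for roughly 714 MHz, which agrees with the 700 (1400) MHz figure quoted above, while 2.0ns chips are rated for exactly the stock 500 (1000) MHz.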
But! The trick is that the X1800 XL cards currently available on the market were initially planned as the X1800 XT or X1800 PRO, that is, as products with frequencies higher than 500/500 (1000) MHz. The unstable operation of the cores above 550 MHz forced the company to change its plans. So the first lucky buyers of the X1800 XL can enjoy wonderful memory overclocking, up to 1400 MHz. Why only the first? Once the stock of old R520 chips (and first-revision PCBs) is depleted, new cards will appear with the usual 2.0ns GDDR3, as faster memory is not required here.

I repeat: the X1800 XL you can buy right now is a prototype of the X1800 XT, the card that was supposed to come out in spring, with frequencies higher than 500/500 (1000) MHz, of course. I think that in a couple of months cards will appear with the new revision of the R520 core and slower memory (their PCB may also be different). These cards will use X1800 XT rejects. Later on we shall check all this by trying to overclock the video core as far as possible.

The announcement was made more than a month ago, and the first rare X1800 XL cards can already be found on sale. Of course, retailers did not fail to overprice this product ($600 and higher)! And mind you, the X1800 XL cannot compete with the GTX model. It is a direct competitor to the junior 7800 GT. However, the price depends on demand, which is why these cards sell for $450-$470 or even less in the West. Our market is special: avarice often prevails over sales opportunities. But let us drop it. The market is the market. Nothing is permanent here, everything changes. ATI swears to solve all its shipment problems in the near future and flood the market with products, so that prices at least match what was promised or even go lower. We'll see.

And now we proceed to the examination of the first production card to arrive: the X1800 XL from HIS (although it is not manufactured by this company).

Video card

As I have already said, HIS has nothing to do with manufacturing these cards. All Hi-End cards from the Canadian company are manufactured at Foxconn and Celestica plants to ATI's own orders. The company does not sell chips and/or memory separately, only ready-made cards. That is how the Canadians intend to maintain manufacturing quality, by concentrating production in just two plants. Even such manufacturing giants as ASUS do not get a license to manufacture the cards on their own. We can still see that even the X850-series cards are of the reference design only.

Frankly speaking, NVIDIA is in a similar situation: all 7800GTX cards are also manufactured by Foxconn and sold to partners as ready-made cards. But its restrictions are less severe, as NVIDIA allows its partners to manufacture cards on their own. So it is up to each partner to decide whether to manufacture such expensive PCBs itself or to buy ready cards from NVIDIA. For example, we can already come across 7800GT cards from ASUS and Gigabyte. The Canadian company does not allow this at all.

So our readers should accept that any Hi-End product from ATI is just a reference card. The same goes for this very product. Even the cooling system is unchanged! Note that this product supports VIVO, where VideoIn is based on RAGE Theater. Besides, the card has two DVI jacks; regular monitors with d-Sub can be connected via the corresponding adapters included in the bundle.

Let's review the cooling system.

Bundle

Box.

Installation and Drivers

Testbed configurations:

VSync is disabled.

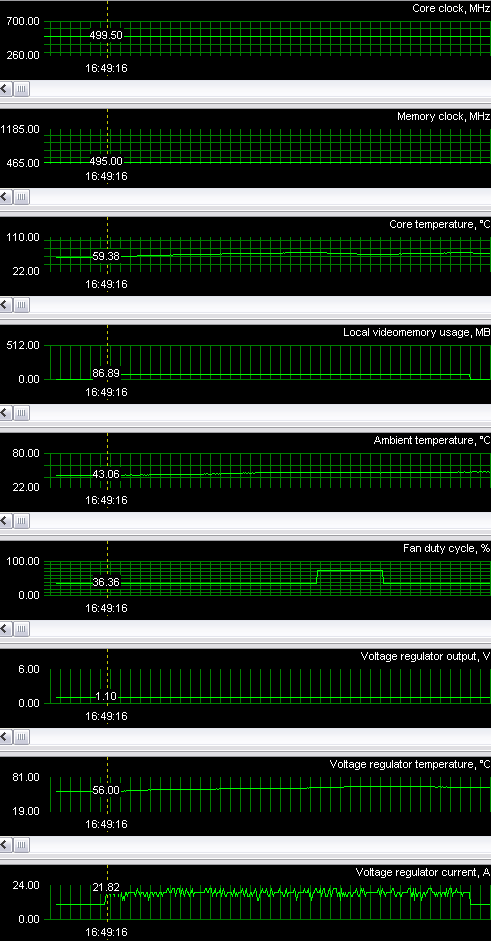

And now the most interesting part. As is known from the reference article, ATI changed frequency generation in its new products, so all overclocking utilities released earlier failed to work with them. Even such a guru in this field as the author of RivaTuner could not immediately catch up with the frequency changes. But this is history now: Version 15.8 will be able not only to overclock X1xxx cards, but also to monitor them. Having studied the X1800 XL/XT, Alexei Nikolaychuk, the author of RivaTuner, found temperature sensors as well as GPU current and voltage sensors.

The voltage on the X1800 XL is 1.1V. The chip draws approximately 10A without 3D load and up to 22A in 3D mode. Thus we can conclude that the GPU itself consumes from 11 W in 2D up to about 24 W in 3D. Taking into account that the overall power consumption of the card is about 80-90 W under maximum load, we can estimate how much power the memory consumes (plus some overhead).

Here we can also see the cooler changing its rotational speed, as I have already mentioned. But most importantly, we can change frequencies! Here is what we squeezed out of the card: 700 (1400) MHz of memory frequency, and that is the maximum. A tad higher and we get artifacts.
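The power estimate above is simply voltage multiplied by current. A minimal sketch of that arithmetic follows, using only the figures quoted in the text; the constant and function names are hypothetical, and treating the remainder as "memory plus overhead" follows the article's reasoning, since the source does not break that remainder down any further.

```python
CORE_VOLTAGE_V = 1.1           # GPU voltage reported for the X1800 XL
IDLE_CURRENT_A = 10.0          # approximate current without 3D load
LOAD_CURRENT_A = 22.0          # approximate peak current in 3D mode
BOARD_POWER_W = (80.0, 90.0)   # quoted range for the whole card under load


def power_w(voltage_v: float, current_a: float) -> float:
    """Electrical power P = V * I, in watts."""
    return voltage_v * current_a


idle_w = power_w(CORE_VOLTAGE_V, IDLE_CURRENT_A)
load_w = power_w(CORE_VOLTAGE_V, LOAD_CURRENT_A)

# Whatever the GPU does not draw is left for memory and other overhead.
remainder_w = tuple(total - load_w for total in BOARD_POWER_W)

print(f"GPU in 2D: {idle_w:.1f} W")
print(f"GPU in 3D: {load_w:.1f} W")
print(f"Memory and overhead under load: {remainder_w[0]:.1f}-{remainder_w[1]:.1f} W")
```

With these inputs the GPU comes out at 11.0 W in 2D and 24.2 W in 3D, which leaves roughly 56-66 W of the quoted board power for everything else.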

As for the core frequency, the card can run even at 580 MHz(!), but this leads to the consequences described at the beginning of the article: spontaneous reboots and system crashes. They occur even at 560 MHz. The card was stable only at 555 MHz.
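To compare the two overclocking results, here is a small sketch that expresses each as a percentage over the stock 500/500 (1000) MHz frequencies quoted earlier. The names are hypothetical; the figures are the ones reported in this review.

```python
STOCK_CORE_MHZ = 500    # stock X1800 XL core frequency
STOCK_MEM_MHZ = 500     # stock memory frequency (1000 MHz effective)
STABLE_CORE_MHZ = 555   # highest stable core frequency in our test
MAX_MEM_MHZ = 700       # highest artifact-free memory frequency


def headroom_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Frequency gain over stock, in percent."""
    return 100.0 * (overclocked_mhz - stock_mhz) / stock_mhz


core_gain = headroom_percent(STOCK_CORE_MHZ, STABLE_CORE_MHZ)
mem_gain = headroom_percent(STOCK_MEM_MHZ, MAX_MEM_MHZ)

print(f"Core headroom: {core_gain:.0f}%")
print(f"Memory headroom: {mem_gain:.0f}%")
```

The core gains 11% while the memory gains 40%, which illustrates how much faster the installed memory is than this card's stock settings require.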

We noticed no overheating. Raising the core frequency by 55 MHz had no effect on the temperature.

Test results: performance comparison

We used the following test applications:

Summary performance diagrams

You can find our comments in the conclusions.

Conclusions

HIS RADEON X1800 XL 256MB PCI-E is a copy of the reference design, except for a brand sticker on its cooler. HIS beat many competitors to market with its X1800, and the company has not tired of changing designs either, so I hope the X1xxx series with improved HIS IceQ coolers will soon be available. But its success will depend on pricing. For now, I repeat that the X1800 XL is obviously in short supply in Moscow retail in early November. Prices for this product are inflated by the first wave of interest from enthusiasts. There is a great difference between prices for such products in Russia and, for example, in the USA. Remember that the X1800 XL competes with the GeForce 7800GT, so its price should be within $420-$480 (early November).

Drivers 5.10a offer a nice surprise: the OpenGL ICD now uses the Ring Bus in the R520 more effectively, which raised performance in heavy modes (AA+AF) without losing quality in such games as Doom III. I assume that the new memory controller is far from exhausting its potential, and we are still to see performance gains in games.

The card was stable (as long as it was not overclocked above 555 MHz). We had no gripes with it; everything was up to the mark. It offered excellent 2D quality. We hope for acceptable prices and look forward to seeing it hit the shelves.

It was very interesting to research the power consumption of the X1800 XL core. In the next article you will learn how much the X1800 XT actually consumes, which is very interesting too. You can find more detailed comparisons of various video cards in our 3Digest.

Theoretical materials and reviews of video cards, which concern functional properties of the GPU ATI RADEON X1800 (R520)/X1600 (RV530)/X1300 (RV515) and NVIDIA GeForce 6800 (NV40/45)/7800 (G70)/6600 (NV43)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.