Summer... It's hot, or at least warm. Gamers' system units, hot as they already are, get nearly red-hot. Temperatures go off the scale, especially those of 3D accelerators. Overheating protection kicks in, chips are throttled, frequencies are reduced, ambulances are called, gamers are hospitalized... That's an extreme case, but there are always fans who could have a heart attack at the sight of their favourite accelerator suddenly slowing down in a shooter. Ardent players of the national game called "3DMark, or More Points" head to the stores for a GeForce 7800GTX. Shop assistants reach out greedy hands for the money, marking prices up by as much as a fifth of the card's cost; gamers, fighting off their own greed and cursing their fate, hand over banknotes (sometimes green) damp from sweaty palms and receive boxes with green cards inside. Then they immediately run... no, not to install the cherished card, but to tell everybody on the forums about their great purchase. Benchmarks and points can wait.

Other interested people fall into three groups: the envious, who always criticize the happy owners, assuring them that the performance gains are insignificant, that the card is nothing special, and that they had expected more; those who prefer to wait, expecting 6800GT/Ultra prices to drop; and those who are reasonable enough to wait for competing products, so they can compare the cards and then make a choice.

The new product from Gigabyte may well throw all three groups into confusion. Given the news about NVIDIA's new antialiasing mode for SLI configurations, the advantages of the GeForce 7800GTX (price aside) evaporate, since the other features are identical; the differences come down to clock frequencies, the number of pipelines, and so on. Now imagine that, at a time when the GeForce 7800GTX costs at least $650, a product appears at the same price that carries the equivalent of two 6800GT cards on a single board.
Fans of extreme, top-of-the-line hardware will have to stop and think about which model to buy. However, the new product must be reviewed before we make comparisons and draw conclusions. That's what we shall do now. Remember that the subject of this review is a video card (3D accelerator) carrying two GeForce 6800GT GPUs and a total of 512 MB of memory. The whole assembly operates as NVIDIA SLI; in effect, it is two accelerators on a single PCB. We recently reviewed a similar product from ASUS and were struck by its size. Gigabyte promises a more compact card.

By the way, here are some videos that briefly showcase the new product.

Video card

Gigabyte's engineers obviously designed their PCB from scratch. ASUS reused existing layout fragments for its product, which made that card huge (in the reference design, all memory chips sit on the front side of the PCB; just try arranging 16 chips, 8 per GPU, compactly around the GPUs!). Gigabyte's engineers instead opted for a more complex PCB layout, which increased costs but made the card fairly compact. Note two details:

As a result, the card has the standard height of 100 mm and is just a tad longer than the standard 190-200 mm. In theory, the extra length could cause problems when installing the card into a PC case (not every case can accommodate it). However, the card's thickness is the more important potential drawback: it is 50 mm.

The main heatsink on the front side is no thicker than a typical "two-slot" cooler, so it poses no special problems. The rear heatsink, however, may get in the way when you install the card.

The photos above show how the accelerator presses against the memory modules. In other words, this motherboard (ASUS A8N-SLI) has problems with the 3D1-68GT, because its memory slots are too close to the PCI-E slots. I managed to install the card (at an angle), but you should understand that this won't do; it was a stopgap measure just to run our tests. You cannot leave a card with such a heavy cooler installed in such an awkward position. Judging by the thickness of the rear heatsink, you can also tell which motherboards won't accommodate this product (for example, those where the northbridge with its heatsink sits close to the PCI-E slot).

This brings us to the question of 3D1-68GT compatibility with motherboards. It's common knowledge that the previous 3D1 product works only in Gigabyte motherboards based on nForce4 SLI. The new card lacks this constraint and can work in any motherboard with a PCI-E slot. However, the NVIDIA driver imposes its own limitation: it checks the chipset before enabling SLI. Clearly, NVIDIA will not allow SLI on motherboards with a single PCI-E slot (there would be no point, since two cards cannot be installed anyway), so here is the conclusion: the Gigabyte 3D1-68GT will work in any motherboard with two PCI-E slots, provided the NVIDIA driver gives SLI the green light. Here, "will work" means working to the full extent, in SLI mode. On any motherboard, the card will still work as TWO separate 6800GT cards. That's why it can drive four monitors, just like two full-fledged cards (the bundle includes a special adapter that adds two more VGA connectors).

Let's review the cooling system. One look is enough to see how bulky it is. It consists of two heatsinks, one on each side of the PCB. In my opinion, it's the heaviest and largest cooler I have ever seen. Seen from a certain angle, the card looks like a chocolate-covered waffle cake (with a product logo).

Overall, the device is very thick (5 cm). As mentioned above, this may hinder installation even in motherboards that otherwise meet the requirements. The fins of the main heatsink are very thick, so the heatsink is very heavy even though it is made of aluminum alloy.

The card contains two GeForce 6800 GT GPUs. Unlike with the similar ASUS product, even this huge cooler did not let us overclock the graphics cores to 6800 Ultra frequencies (425 MHz), although the memory seems born to run at 600 (1200) MHz, given that it is rated at 1.6 ns. That's why this product cannot reproduce a 6800 Ultra SLI. I suspect the reason is that one of the cores physically cannot run above 400 MHz (a 6800GT cannot always manage 425 MHz). However, the card easily overclocks to 400/1100 MHz, so we can estimate how a 6800 Ultra SLI would have performed if the Ultra's core frequency had not been raised from 400 MHz to 425 MHz. We should also mention that the card is equipped with two DVI connectors.
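The memory figures above follow from simple arithmetic: a chip rated at access time t is good for a real clock of about 1/t, and DDR doubles the effective rate. The sketch below is only a back-of-envelope check; it assumes the 256-bit memory bus of the GeForce 6800 GT (a spec not stated in this review) and treats 1000 MHz as the reference 6800 GT effective memory clock for comparison.

```python
# Back-of-envelope memory arithmetic for the figures quoted above.
# Assumption: a 256-bit memory bus per GPU (GeForce 6800 GT spec;
# the bus width is not stated in this review).

def rated_clock_mhz(access_time_ns: float) -> float:
    """Real (non-DDR) clock a chip is rated for: f = 1 / t."""
    return 1000.0 / access_time_ns  # t in ns -> f in MHz


def bandwidth_gb_s(effective_mhz: float, bus_width_bits: int = 256) -> float:
    """Peak bandwidth in GB/s (10**9 bytes) at a given effective DDR clock."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


real = rated_clock_mhz(1.6)
print(f"1.6 ns chips: ~{real:.0f} MHz real, ~{2 * real:.0f} MHz effective")
for effective in (1000, 1100, 1200):
    print(f"{effective} MHz effective -> {bandwidth_gb_s(effective):.1f} GB/s per GPU")
```

The 1.6 ns rating works out to roughly 625 (1250) MHz, which is why 600 (1200) MHz is a comfortable target for this memory.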

Bundle

Have a look at the box.

Installation and Drivers

Testbed configurations:

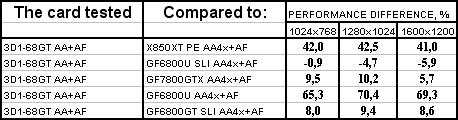

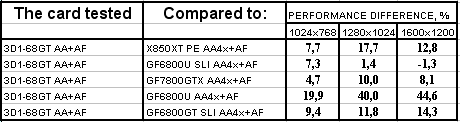

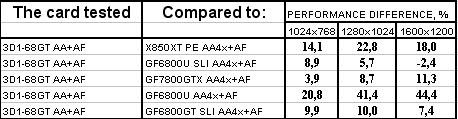

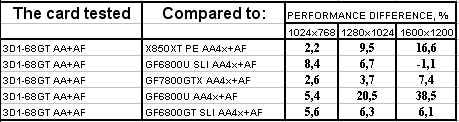

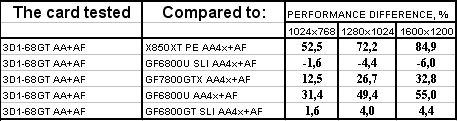

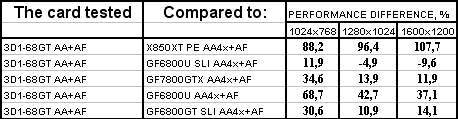

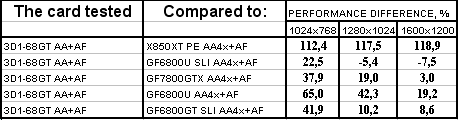

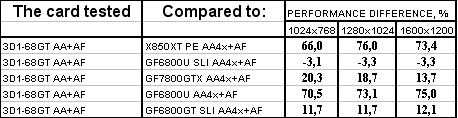

VSync is disabled.

Test results: performance comparison

We used the following test applications:

Game tests that heavily load pixel shaders 2.0

TR:AoD, Paris5_4 DEMO

We use this outdated and not very popular game as an example of a classic "shader game" that heavily loads these units. The results meet expectations: the 7800GTX is slightly outperformed by the 6800 Ultra SLI, but thanks to its higher clocks it beats the 3D1-68GT and the 6800 GT SLI.

Game tests that heavily load vertex shaders, mixed pixel shaders 1.1 and 2.0, and active multitexturing

FarCry, Research

FarCry, Regulator

FarCry, Pier

Here is a paradox: the 3D1-68GT is slightly faster than the 6800 Ultra SLI! Why? I see two possible reasons: tighter memory timings (since this test is sensitive to memory bandwidth, they could make a difference) and a better implementation of the internal SLI adapter.

F.E.A.R. (MP beta)

F.E.A.R. is a brand-new game, scheduled for release this autumn. It loads accelerators heavily, so absolute frame rates at maximum quality are not high. Since this game puts a heavier load on the shaders, everything falls into place: the 7800GTX wins thanks to its more efficient pipelines and the higher clock of its geometry unit (remember that adding vertex pipelines in an SLI tandem has no effect, because each card processes all the vertices anyway; only rasterization is split between the cards). The 6800 Ultra SLI comes second, followed by the card under review.
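The remark about vertex work in SLI can be illustrated with a toy model. The description above matches split-frame rendering (SFR), where every GPU repeats the full vertex workload and only the pixel work is divided; alternate-frame rendering behaves differently and is not modeled here. The per-frame costs below are hypothetical numbers chosen only to show the trend, and the model ignores synchronization and load-balancing overhead.

```python
# Toy split-frame rendering (SFR) model: each GPU repeats the whole
# vertex workload, and only the pixel (raster) work is divided between
# GPUs. Ignores SLI synchronization and load-balancing costs.

def frame_time_ms(vertex_ms: float, pixel_ms: float, gpus: int = 1) -> float:
    """Estimated frame time when pixel work is split evenly across GPUs."""
    return vertex_ms + pixel_ms / gpus


def sli_speedup(vertex_ms: float, pixel_ms: float, gpus: int = 2) -> float:
    """Speedup of an SFR tandem over a single GPU of the same type."""
    single = frame_time_ms(vertex_ms, pixel_ms)
    tandem = frame_time_ms(vertex_ms, pixel_ms, gpus)
    return single / tandem


# Hypothetical single-GPU costs per frame (ms): pixel-bound vs. geometry-heavy.
for label, vertex, pixel in (("pixel-bound", 2.0, 18.0), ("geometry-heavy", 8.0, 12.0)):
    print(f"{label}: {sli_speedup(vertex, pixel):.2f}x")
```

The more of the frame time goes to geometry, the further the tandem falls short of an ideal 2x, while a single GPU with a faster geometry unit speeds up exactly that share of the frame.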

Game tests that heavily load both vertex shaders and pixel shaders 2.0

Half-Life2: ixbt01 demo

Half-Life2: ixbt02 demo

Half-Life2: ixbt03 demo

The situation is much like in Far Cry: this game is more sensitive to memory bandwidth, and we assume the 3D1-68GT has tighter memory timings and a better-organized SLI adapter. That's why the Gigabyte product outperforms even the 6800 Ultra SLI. Roughly the same holds for the 7800GTX.

Game tests that heavily load pixel pipelines with texturing, active stencil buffer operations, and shader units

DOOM III, High mode

Chronicles of Riddick, demo 44

Chronicles of Riddick, demo ducche

While the DOOM III results are natural and easy to explain, something strange happens in CR: the advantage over the 6800 Ultra SLI at low resolutions is very large. Can this really be the effect of the memory timings I assumed earlier? It's very strange; perhaps the driver behaved differently, even though the version was the same.

Synthetic tests that heavily load shader units

3DMark05: MARKS

As in TR:AoD, the situation is crystal clear: each card delivered what its specifications promise. The combined 32 pixel shader pipelines, even at 370 MHz, prevailed over the GeForce 7800GTX with its 24 pipelines, even though those run at 430 MHz.
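A quick way to see why the tandem wins here is to multiply pipelines by core clock, using the figures quoted above (32 pipelines at 370 MHz versus 24 at 430 MHz). This is a rough proxy only, not a performance model: it ignores architectural differences in per-pipeline shader throughput and any SLI overhead.

```python
# Peak "pipelines x clock" comparison for the 3DMark05 result above.
# A rough proxy only: it ignores per-pipeline architectural differences
# and any SLI overhead.

def pipeline_throughput(pipelines: int, core_mhz: int) -> int:
    """Peak pixel-pipeline throughput in millions of pipeline-clocks per second."""
    return pipelines * core_mhz


tandem = pipeline_throughput(32, 370)   # two 16-pipeline GPUs combined
gtx = pipeline_throughput(24, 430)      # GeForce 7800GTX
print(f"tandem: {tandem}, 7800GTX: {gtx}, ratio: {tandem / gtx:.2f}")
```

By this crude measure the tandem has roughly a 15% edge in raw pipeline throughput, which is consistent with the purely shader-bound synthetic result.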

Conclusions

So, the Gigabyte 3D1-68GT (Dual GeForce 6800GT) 2x256MB PCI-E is a prestige product, like the similar card from ASUS. It's designed for hardcore gamers and fans of new extreme hardware. It is essentially two 6800 GT cards on a single board running in SLI mode. Its advantage over an equivalent pair of cards most likely comes from the special on-board implementation of the SLI adapter and from modified memory timings (this unique layout required its own BIOS version). For now, it is impossible to say whether two such cards can be combined in SLI, since the layout makes no provision for it. However, the latest driver versions allow SLI without an adapter, so why not install two 3D1-68GT cards together? Considering that two 6800 GT cards currently (end of July) cost 720-740 USD in total and that the retail price of the Gigabyte 3D1-68GT (Dual GeForce 6800GT) 2x256MB PCI-E is up to 700 USD, this purchase makes sense. But I emphasize that in some modern games the GeForce 7800 GTX outperforms even the GeForce 6800 Ultra SLI, to say nothing of the 6800 GT SLI, and it does so at a lower price, even though that price is currently inflated because the product is new.

The Gigabyte 3D1-68GT (Dual GeForce 6800GT) 2x256MB PCI-E has one disadvantage: a noisy cooler. There is no getting around it. It certainly has advantages as well (besides those mentioned above): it does not require a Gigabyte motherboard. And if you don't need SLI but do need four monitors, this product is also a good choice.

You can find more detailed comparisons of various video cards in our 3Digest.

Gigabyte 3D1-68GT (Dual GeForce 6800GT) 2x256MB PCI-E

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.