Not long ago we were enjoying summer, watching NVIDIA launch its new cards and attending a splendid party in Paris... Then the 7800GT appeared... Now it's the season of Christmas sales, and we are expecting snow and frost. Time flies, especially in autumn. Once the spring of time is wound up, summer passes in an instant and takes autumn along with it... Then it runs out of energy after New Year's Eve, and the time before spring drags on very slowly... The bright red-and-white whirl of autumn announcements from ATI Technologies is over. The leaves have fallen, a month has passed, and only some members of the X1xxx family have just found their way to the shelves. Strange as it may seem, the low-end solutions are nowhere to be found, even though by now every product except the X1600 XT should be on full sale. They should be! Evidently, the Canadian specialists see it differently. Alas, October 5th brought yet another paper announcement. Words are faster than deeds; it's a well-known fact... Santa Claus will come soon and encourage people to spend large sums of money. But what will the red-and-white camp offer? Sweet dreams and New Year's wishes. Strange as it may seem, NVIDIA still assumes that ATI has something to sell, so it's getting ready for a new counteroffensive. A counter-counteroffensive? It's actually a new attack. The Californian specialists are evidently concerned that, after long years of fierce competition, the Canadian company has suddenly dropped out of the race, weakly throwing out the X800GT and X800GTO without any hope of hurting the competitor... Most importantly, ATI got rid of its R480 stock, since the 100 million losses might otherwise be followed by further losses from disposing of the leftovers. For now there is still some demand, and the company can still bite with its old-new solutions... To say nothing of CrossFire, which hasn't really appeared on the mass market.
Back in late May, ATI employees talked about SLI with enthusiasm and anticipation; now they speak of "low market demand" and the like. Alas, it's hard to implement what was so easily declared... Take the failure of CrossFire. Yes, we all understand that it's a technology of the future and that it's not implemented in the current solutions, but even a schoolboy understands that ITS SUCCESS depends on HOW IT'S IMPLEMENTED and HOW FAST IT APPEARS on the market. A long time has passed, yet there are still no motherboards on RD480 (rare samples don't count), CrossFire Edition video cards are lost somewhere (perhaps ATI engineers decided to keep them in Toronto), and we have heard nothing about similar products based on the X1800. This is hardly a success: the spirit of MAXX seems to overlook no mistakes and is still avenging the past. To make yet another paper announcement, show the cards, and again fail to deliver them to mass users is a shame, especially after NVIDIA's bright example this summer, when it delivered hundreds of thousands of freshly announced cards. I share NVIDIA's anxiety. With only two companies left on the battlefield (Matrox has abandoned the market, S3 makes only reference cards that no one wants to produce on a large scale, and XGI keeps playing the f... that is, constantly updating its roadmaps), such a passive position of the Canadian company is scary. Perhaps it's not passivity but a pile of mistakes made over the past year, but that does not lift their responsibility. Only foolish and small-minded specialists at NVIDIA or ATI (the lower the position, the less they use their brains) may rage and wish death to their competitor; they don't understand how deep they would sink afterwards! If Intel learned that AMD was dying, Intel would do everything possible to help it, because Intel would find itself in a bad situation afterwards. Microsoft has tasted the "beauty" of this situation to the full.
That's why I hope the Canadian company will be OK, its products will come out, and their prices will not be high. (It's useless to explain this to our retailers, though: they will try to make a 100% markup on the hapless new card and then get stuck with it, unable to sell it even at the initial price, because the X1800XL will not sell for $650, a price I have already seen in a Moscow shop.) Today we are going to review NVIDIA's answer to the ATI RADEON X1600 XT and X1300 PRO. Considering that full support for DirectX 9.0c with SM3.0 was introduced a year and a half ago, it hardly needs saying that it's very easy to launch a product that will kill ATI's new card. An overclocked 6600GT or even a 6800 will make the X1600 XT feel bad. Add an intermediate product between the 6600 and the 6600GT (there is a huge frequency gap between them), and the X1300 PRO will stagger. That's exactly what NVIDIA has done. It has an excellent NV42 chip, manufactured on a 0.11 micron process; it is cheap to make (compared with a cut-down NV40 or NV45) and supports a 256-bit bus. In fact, NVIDIA's 0.11 micron products are very good; their huge overclocking potential speaks for itself (unlike ATI's R430). As long as high clocks were undesirable (they would have interfered with NV45 sales), all NV42-based GeForce 6800 cards ran their cores at 350 MHz. We found that the X1600 XT sometimes outperformed such cards thanks to its huge 590 MHz core clock. It's elementary to find enough NV42 chips that can run at 400 MHz or 450 MHz. NVIDIA's engineers struck a balance between price and performance and set the core clock to 425 MHz. They called this product the GeForce 6800GS. Why GS? Probably out of weariness in searching for a new suffix: GT and GTS were already taken, but GS was still vacant. As is well known, the GeForce 6800 was equipped with DDR memory running at 700 MHz.
But at 425 MHz the core would be held in a vise by such narrow memory bandwidth. Hence a Solomonic decision: equip the 6800GS with 2.0ns GDDR3 memory. There are plenty of such modules on the market, and their price has dropped considerably. Besides, the card is based on the 7800GT PCB, which is cheaper than the 6800GT one (the 6800 PCB is evidently not compatible). The product has a recommended price of $249, the same as the X1600 XT 256MB. Considering that the GeForce 6800 can already be found for less than $200, even for $190, the 6800GS price may well shift depending on the X1600 XT. Of course, the Canadian company is in a better position: its product has a 128-bit bus and thus a much cheaper PCB. But it's also equipped with still expensive memory (running at 690 (1380) MHz!), so ATI cannot cut prices very much. It'll be interesting to watch this standoff. As for the X1300 PRO, which has just 4 pipelines and a huge 600 MHz core clock, it will be easily countered by the 6600 with 8 pipelines and increased core and memory frequencies. In this case NVIDIA has chosen GDDR2 memory, which is very cheap, even cheaper than DDR. The GeForce 6600 DDR2 sells for $120. So let's analyze NVIDIA's new cards and see what they can really do. Will they really be a strong counteroffensive against the Canadian company's products?

Video Cards

As I have already written, the 6800GS is obviously based on the 7800GT PCB. All NV4x and G70 chips are pin-compatible, so the NV42 can easily be installed on such a PCB. Note that this card has SLI connectors. There is a VIVO jack (as you may remember, all 7800GT cards are equipped with a VIVO codec), but the Philips chip itself is not installed, probably to keep parity with other 6800 cards without VIVO. As for the 6600 DDR2, its name hints that it's a unique card with a new PCB. On the whole, the design somewhat resembles the usual 6600, but memory chips of a different type and packaging leave their mark.
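The memory decisions described in the introduction (700 MHz DDR on the GeForce 6800, 2.0 ns GDDR3 on the 6800 GS, 690 (1380) MHz memory on the X1600 XT's 128-bit bus) can be put into rough numbers. This is a back-of-the-envelope sketch, not measured data, and it rests on assumptions not stated in the text: a chip's access-time rating is read as the inverse of its rated clock (2.0 ns means 500 MHz, i.e. 1000 MHz effective for double-data-rate memory), the 6800 GS memory is assumed to run at exactly that rated clock, and the 6800's 700 MHz is taken as an effective figure. Peak bandwidth is then bus width in bytes times effective transfer rate.

```python
def rated_clock_mhz(access_time_ns: float) -> float:
    """Clock a memory chip is rated for, taken as the inverse of its access-time marking."""
    return 1000.0 / access_time_ns


def bandwidth_gb_s(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Peak bandwidth in GB/s (10**9 bytes/s): bytes per transfer x million transfers/s / 1000."""
    return bus_width_bits / 8 * effective_clock_mhz / 1000.0


# Assumption: the 6800 GS's 2.0 ns GDDR3 runs at its rated 500 MHz, i.e. 1000 MHz
# effective, because DDR-type memory transfers data on both clock edges.
GS_EFFECTIVE_MHZ = 2 * rated_clock_mhz(2.0)

# name: (bus width in bits, effective memory clock in MHz)
CARDS = {
    "GeForce 6800 (DDR)": (256, 700.0),
    "GeForce 6800 GS (GDDR3)": (256, GS_EFFECTIVE_MHZ),
    "RADEON X1600 XT": (128, 1380.0),
}

for name, (bus, clock) in CARDS.items():
    print(f"{name}: {bus}-bit @ {clock:.0f} MHz -> {bandwidth_gb_s(bus, clock):.2f} GB/s")
```

Under these assumptions the 256-bit 6800 GS ends up at 32 GB/s, while the plain 6800 (22.4 GB/s) and the X1600 XT (about 22.1 GB/s) sit roughly 30% lower.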

The cooling systems cover the GPUs, as usual. You can hardly expect anything unusual from the 6600 DDR2: it's a simple GeForce 6600. Note that NVIDIA has started marking chips right on their dies (they used to be marked on their substrates).

The same applies to the GeForce 6800 GS. Interestingly, judging by its marking, the GPU was manufactured in week 33 of 2005, that is, in mid-August (quite recently). It's already marked as a GS, not just a GeForce 6800, and, as noted above, the marking is on the die rather than on the substrate. Most likely, then, this is not a previously manufactured 6800-series NV42 that was simply selected and re-marked, but a chip made specifically for the GS card. Since this is our baseline article on the new products, we shall not review their boxes and bundles. Note, however, that our 6600 DDR2 is an XFX model (the blue color and the logo on the cooler), that is, a production card.

Installation and Drivers

Testbed configurations:

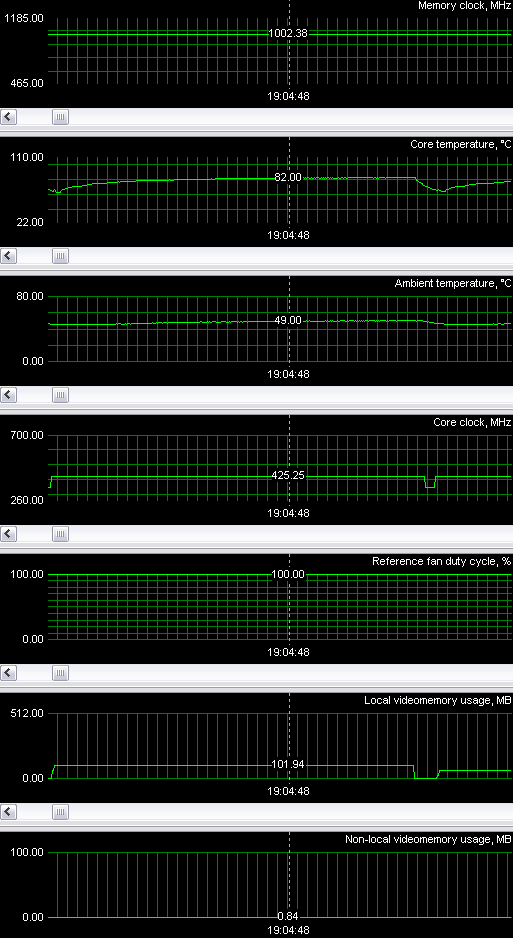

This screenshot shows the NVIDIA GeForce 6800 GS. Please note that the cooler monitoring readings are wrong (the RivaTuner author will have to fix that). In fact, I set the fan speed to the minimum, 25%. It's not audible at all!

As we can see, the temperatures stay within reasonable limits (in a closed PC case without additional cooling).

Test results: performance comparison

We used the following test applications:

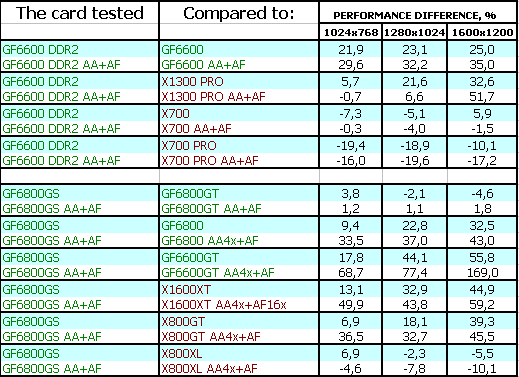

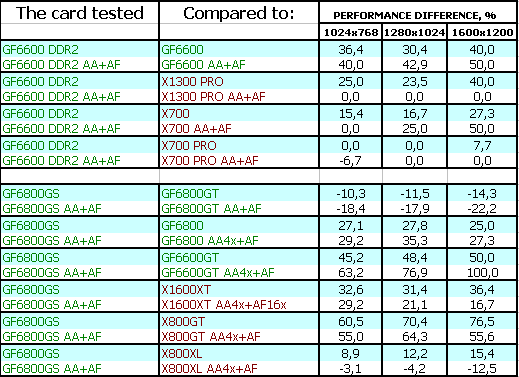

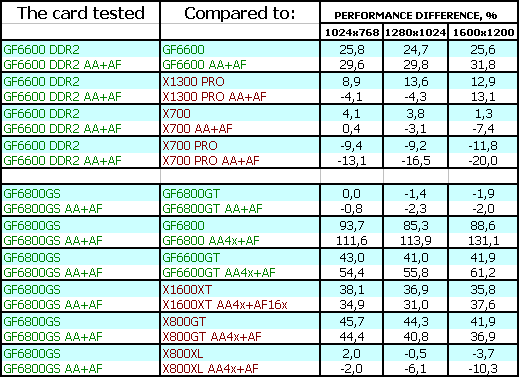

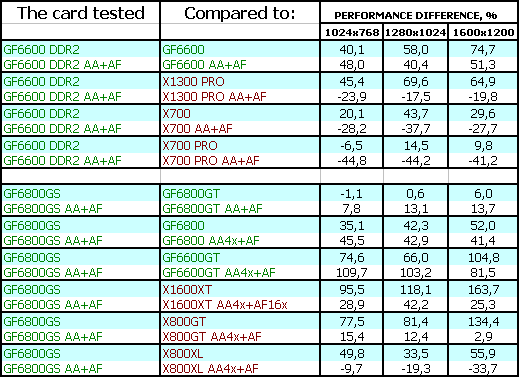

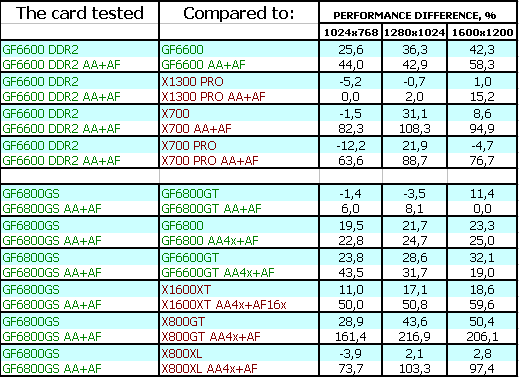

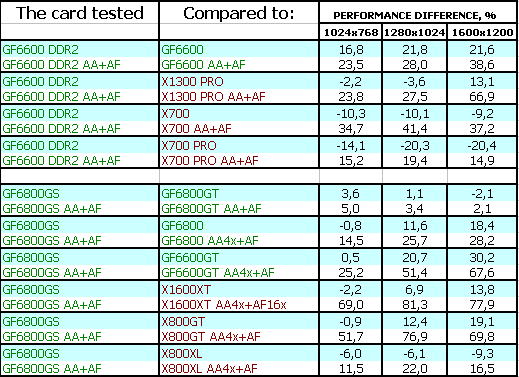

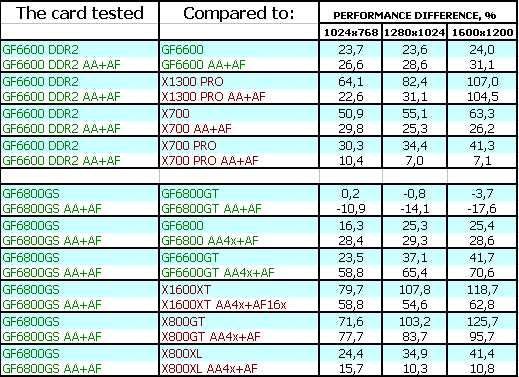

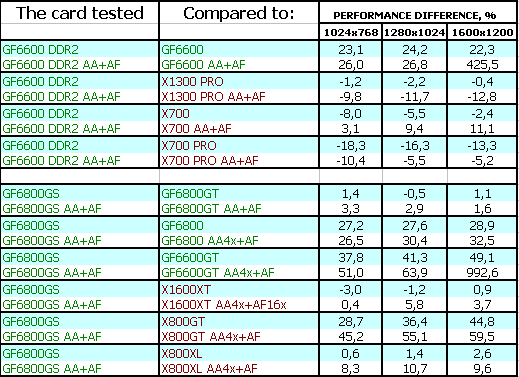

Game tests that heavily load vertex shaders, mixed pixel shaders 1.1 and 2.0, active multitexturing

FarCry, Research

Considering that the GeForce 6600 DDR2 is even cheaper than the X1300 PRO (NVIDIA recommends positioning the new card against the X1300), the 6600 DDR2 is evidently a success! The 6800 GS demonstrated an excellent result, nearly on a par with the 6800 GT. Of course, it outperformed not only the X1600 XT but also the X800 GT, and it nearly caught up with the X800 XL, which is evidently more expensive. Alas, both ATI products (X1300 PRO and X1600 XT) are inferior: NVIDIA's cards are faster, even in this game, where ATI cards traditionally have the advantage.

Game tests that heavily load vertex shaders, pixel shaders 2.0, active multitexturing

F.E.A.R. v.1.01

Given the absolute performance in this game, I can say for sure that only the modes without AA+AF matter for the 6600 DDR2 comparison. The new card is successful there too. The 6800 GS demonstrated an even more brilliant result, heavily outperforming its competitors. Only the 6800 GT is faster, but it's considerably more expensive (don't forget that).

Splinter Cell Chaos Theory

Here, too, the 6600 DDR2 proves very worthwhile against its competitor; it's slightly outperformed only in AA+AF mode at low resolutions. But again, given the performance these cards demonstrate here, AA+AF will hardly be practical. Of course, a gamer can reduce the quality level (disable some effects) and enable AA+AF instead. As for the 6800 GS, it fares well, and its advantage over the competitors is obvious. Moreover, this card nearly effortlessly caught up with the X800 XL. But we should take into account that all X8xx cards run Shader Model 2.0 in this game, while NVIDIA cards run 3.0, so we should mentally adjust the scores.
The only correct comparison is between the X1xxx series and its NVIDIA competitors.

BattleField 2

Here is the first game where the 6600 DDR2 is defeated in the heavy AA+AF mode. Given the FPS values in this test, heavy modes are justified here, so we score a point to the X1300 PRO. But the 6800 GS still fares well: the card demonstrates excellent results and was outscored only by the X800 XL in heavy modes. It should be said that BF2 is an unpredictable test: you have to rerun it 3-4 times to make sure the results are representative.

Call Of Duty 2 DEMO

It's a very heavy test for modern video cards, though I don't understand the reason for such low FPS (I don't see super-excellent graphics here). Both new cards from NVIDIA are up to the mark here as well. The 6600 DDR2 reached parity with its ATI competitor, and the 6800 GS easily outperformed all its competitors.

Half-Life2: ixbt01 demo

This game is rather easy for state-of-the-art accelerators, so even the 6600 DDR2 copes with it in AA+AF modes. In these modes NVIDIA's new cards (including the 6800 GS) are victorious again.

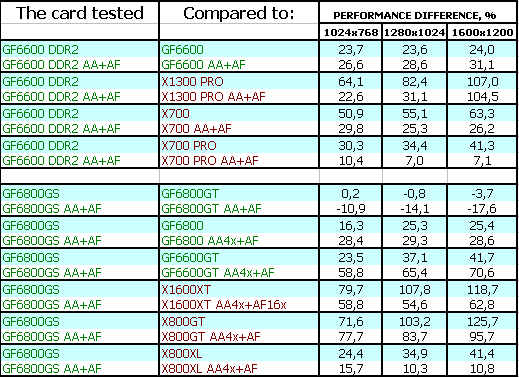

Game tests that heavily load pixel pipelines with texturing, active operations of the stencil buffer and shader units

DOOM III High mode

Chronicles of Riddick, demo 44

I guess there is no point in commenting on the results: it's quite clear that NVIDIA's video cards will lead in these tests, and the results confirm it.
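The remark above that BattleField 2 has to be rerun 3-4 times before its results can be trusted amounts to a run-to-run consistency check. A minimal sketch, assuming the average FPS of each run has already been collected; the function name and the 3% coefficient-of-variation threshold are hypothetical choices for illustration, not taken from the text.

```python
from statistics import mean, stdev


def summarize_runs(fps_runs: list[float], max_cv: float = 0.03) -> tuple[float, float, bool]:
    """Average repeated runs of one benchmark and judge whether they agree.

    Returns (mean FPS, coefficient of variation, stable?). The max_cv threshold is
    hypothetical: if the standard deviation exceeds 3% of the mean, the runs are
    treated as too noisy to publish and the test should be rerun.
    """
    if len(fps_runs) < 2:
        raise ValueError("need at least two runs to judge run-to-run variation")
    avg = mean(fps_runs)
    cv = stdev(fps_runs) / avg
    return avg, cv, cv <= max_cv
```

Fed with the per-run averages from 3-4 passes, the third value says whether the mean is presentable or another pass is needed; the threshold itself is a judgment call per game.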

Synthetic tests that heavily load shader units

3DMark05: MARKS

It's practically the only purely shader-bound test that compares the corresponding units of the accelerators. As we can see, the results differ from those we saw in games. The X1300 PRO is faster than the 6600 DDR2, although their fill rates (4 x 600 = 2400 megapixels per second versus 8 x 350 = 2800 megapixels per second) suggest that, theoretically, the 6600 DDR2 should be faster. But we all know well that computational power is the forte of ATI chips: if games used very complex shaders and little texturing, ATI cards would always win (remember TR:AoD). In the case of the 6800 GS and the X1600 XT (their fill rates are 425 x 12 = 5100 and 590 x 12 = 7080 megapixels per second, respectively), the X1600 XT should theoretically win, but it has just 4 texture units. Its huge advantage in peak pixel fill rate comes to nothing because of its low texel fill rate. Even a shader-heavy application like 3DMark still requires high texturing capacity, so the 3:1 ratio of shader to texturing capacity does not work, no matter what ATI representatives tell us.
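The fill-rate arithmetic above can be reproduced in a few lines. This is a minimal sketch: the "pixel rate" follows the article's pipelines-times-clock figure, and the texel rate multiplies texture units by the core clock. Only the X1600 XT's 4 texture units come from the text; the texture-unit counts for the X1300 PRO, 6600 DDR2 and 6800 GS (one per pipeline: 4, 8 and 12) are assumptions.

```python
from typing import NamedTuple


class Gpu(NamedTuple):
    name: str
    pipelines: int      # pixel pipelines / pixel shader units
    texture_units: int  # assumed one per pipeline except for the X1600 XT
    core_mhz: int

    def pixel_rate(self) -> int:
        """Peak 'pixel fill rate' as computed in the article: pipelines x clock, Mpixel/s."""
        return self.pipelines * self.core_mhz

    def texel_rate(self) -> int:
        """Peak texel fill rate: texture units x clock, Mtexel/s."""
        return self.texture_units * self.core_mhz


GPUS = [
    Gpu("RADEON X1300 PRO", 4, 4, 600),
    Gpu("GeForce 6600 DDR2", 8, 8, 350),
    Gpu("RADEON X1600 XT", 12, 4, 590),
    Gpu("GeForce 6800 GS", 12, 12, 425),
]

for gpu in GPUS:
    ratio = gpu.pipelines // gpu.texture_units  # shader units per texture unit
    print(f"{gpu.name:18} pixel {gpu.pixel_rate():5} Mpix/s, "
          f"texel {gpu.texel_rate():5} Mtex/s, shader:texture {ratio}:1")
```

The output shows the point made above: the X1600 XT leads on paper with 7080 Mpixel/s, but its texel rate of 2360 Mtexel/s is less than half the 6800 GS's 5100, and its shader-to-texture ratio is the 3:1 the text refers to.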

Conclusions

NVIDIA GeForce 6800 GS 256MB is a very good solution with a great number of advantages. First of all, it has a 256-bit bus, while the RADEON X1600 XT has only a 128-bit bus, so the memory bandwidth of the former is evidently higher. As for overclocking, this sample shows excellent potential: 530/1180 MHz! So as not to overcrowd the graphs with results at these frequencies, I will just note that the GeForce 6800 GS at 530/1180 MHz is only a couple of percent slower than the 6800 Ultra, while its price is $150 lower! I repeat that RivaTuner can control the cooler on this card, so it can be made practically noiseless.

NVIDIA GeForce 6600 DDR2 256MB likewise offers nothing new: it's just an overclocked NV43. Evidently, some of the 6600GT chips can be used for these cards; there is nothing much to test here. Besides, many of the chips used in 6600 cards will not manage 500 MHz (the 6600 GT level) but will easily cope with 350 MHz. That's why these cards are very cheap to manufacture. As for DDR2 and its expediency on a $115-120 card: if ATI can install 2.5 ns DDR memory on such cards, why can't NVIDIA? Besides, DDR2 is already cheaper than DDR1. Considering that NVIDIA recommends comparing the 6600 DDR2 with the X1300 (not the PRO), it must have room for price cuts. Such cards may easily drop below $100.

Since NVIDIA has so easily come up with competitors to ATI's products that are actually better than the recently launched Canadian ones, its retail advantage will be obvious. (Of course, ATI employees will dispute this opinion, but as I have already written, I have made a lot of forecasts concerning ATI, and no matter what its employees said against them, they had to admit in the long run that they were true.) Considering that Christmas sales have already started, the results may be quite grim for ATI. The latest market research shows that the Canadians are rapidly losing desktop market share.
ATI still fares well in the mobile and chipset markets, but there are bad tendencies even there. Did they really need to make so many mistakes, fall into the pit (a deep one!), realize what they had done, and only then brainstorm for a way out and climb up again? I remember that ATI had already fallen into such a pit in 1998-1999... Have they not learned the lessons of the past? We can congratulate NVIDIA on its current situation. At the cost of small defeats in 2004, it built an excellent basis for the future and is now reaping the fruits. While its competitor struggles to master 0.09 micron solutions and lacks the capacity to manufacture enough new-generation SM 3.0 accelerators, NVIDIA easily gives check with the new queens it already had in store and quietly polishes its 0.09 micron chips, to be announced a little later. Why hurry, if it still hasn't run out of previously manufactured solutions that can easily compete with ATI's new products? Once again I express my concern and my lack of understanding of ATI's policy (this company could not have failed to understand its competitor's full potential) and of the reasons for so many planning mistakes. I just cannot understand what the 100-200 employees in Toronto do: they should have predicted the consequences of the company's moves. Did they all hope that re-marking old SM2.0 solutions would help them out? It helped to some degree, but it could not work forever. The Canadian company has obviously lost the round of SM3.0 accelerators. The X1800XT will meet its opponent in a week (a killer solution), and the X1800XL already has one. According to today's review, the answer to the X1600 XT and X1300 PRO is very strong, potentially even a knockout. We'll see what happens next year, when the titans clash again in another ring, DirectX 10. The balance of forces and potentials may change radically there...
Perhaps the Canadians are nurturing that victory while ignoring current attitudes and requirements. But the market dictates its own conditions... ATI is already suffering losses from its mistakes. Heavy losses. How can it repair the situation BEFORE the next generation of graphics products? So far, only the marketing experts have an answer, in the form of promises to recover and improve. But the reality is gloomier.

The Canadians are catastrophically late... In every respect. They are late with CrossFire, and they are late with the X1xxx series, giving the competitor time to get ready. They are even late with their chipsets: RD480 is still not available in production motherboards, while NVIDIA's second-generation nForce4 SLI X16 has already been launched. Something is wrong in Toronto. Something has broken. It's awfully unpleasant to see. It's painful. I'm a supporter of equal competition; it simply MUST exist!

You can find more detailed comparisons of various video cards in our 3Digest.


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.