I wonder how many readers have noticed that 3D graphics has lately been evolving in distinct, discrete jumps. Are there readers who judge the quality of a video card's algorithms before its performance? If so, they had a small holiday to celebrate on November 8, when NVIDIA presented two video cards based on the new G80 chip. Our baseline review noted that G80 is tuned so heavily for future tasks that it cannot reveal its full potential in today's applications. Part of the blame lies with the absence of the DirectX 10 API, of drivers, and of course of applications that use this API. Under these conditions, almost the only way to test a new video card and evaluate its real performance is to run old DirectX 9 games at high resolutions with maximum anisotropic filtering and antialiasing. The situation with anisotropic filtering in G80 is clear: G80 offers the best anisotropic filtering quality among present-day GPUs, and its silicon implementation is so good that you can safely force AF 16x in the driver and forget about blurred textures, noise, and other artifacts of low-quality filtering without losing much performance. The new type of antialiasing, however, definitely needs to be analyzed, and that is what we are going to do in this article. But first we review another production-line GeForce 8800 GTX card, this one presented by Foxconn.

Video card

Remember that all GeForce 8800 GTX/GTS cards are manufactured at Flextronics and Foxconn plants, and their quality is controlled by NVIDIA, which then simply sells the cards to its partners. None of NVIDIA's partners manufactures these cards on its own! Memorize it like an axiom. When you buy an 8800 GTX/GTS card, different vendors mean just different bundles and boxes. That's it! This time, too, we can see that it's just a reference card. We should also mention the PCB length, 270 mm, which may pose some problems, as the card has grown by 5 cm versus previous top solutions. The photos also clearly show that the GTX PCB requires TWO 6-pin PCI-E cables from a PSU. The card has TV-Out with a unique jack. You will need a special adapter (usually shipped with the card) to output video to a TV set via S-Video or RCA. You can read about TV-Out here. We can see that the card is equipped with a couple of DVI jacks. They are dual-link DVI, for 1600x1200 or higher resolutions via the digital channel. Analog monitors with a D-Sub (VGA) interface are connected through special DVI-to-D-Sub adapters. Maximum resolutions and frequencies:

We should mention the clock rates of the cores. Almost all previously reviewed GT/GTX cards could set all core frequencies only in 27 MHz steps, with the geometry unit operating at a frequency 40 MHz higher. We wrote about it in detail before. But the 27 MHz step was removed in G71: the 7900 series allows all three frequencies to be changed arbitrarily, in steps as small as 1-2 MHz. The difference in frequency between the geometry unit and the other units in 7900 GTX cards has grown to 50 MHz. The 7900 GT cards demonstrate a 20 MHz difference, and there are no 27 MHz steps either. By comparison, the two operating frequencies available in the G80 core (575 MHz and 1350 MHz) differ greatly. Overclocking attempts showed that raising the ROP frequency causes a proportional increase in the frequency of the shader part.

Now for the peculiarities of this video card. We have already mentioned above that all such products are manufactured by a couple of plants and are completely identical. Foxconn preserved the reference cooler, which we have already described several times, so we are not going to repeat the description. But Foxconn still decided to decorate the cooling system. As the housing of the cooler is transparent, engineers installed LEDs under it. The workmanship is actually crude: the extra wires are secured with adhesive tape.

Nevertheless, this card looks great inside a PC case, because the LEDs are not on the bottom (where they are usually arranged near fan blades), but around the edges. So they are visible - this solution may please modders.

Remember that graphics output is now handled by a separate chip, NVIO. It's a special chip that incorporates the RAMDAC and TMDS transmitters. That is, the units responsible for outputting graphics to monitors or TVs were removed from G80 and implemented as a separate chip. It's a reasonable solution, because the RAMDAC won't be affected by interference from the shader unit operating at a very high frequency. The same chip is also used for HDCP support.

Bundle

Box.

OK, we've examined the card: there are no differences from the reference design except for the bundle and the illuminated cooler. Now let's get down to antialiasing. But first, let's go back in time and recall how antialiasing began in 3D cards.

Some History

The idea of smoothing triangle borders in real time has fired the imagination of engineers from the earliest days: the legendary 3dfx Voodoo Graphics attempted antialiasing by drawing semitransparent lines along triangle borders. It looked horrible, and engineers had to give up this idea. The next attempt was made by 3dfx in its VSA-100 chip, which was used in Voodoo 4 and Voodoo 5 video cards. Having given up the crazy idea of drawing lines along triangle borders, 3dfx engineers fell back on the standard idea of averaging rendered frames, adding a number of their own refinements.

At approximately the same time, a similar idea was used by 3dfx's competitors, NVIDIA and ATI, but they implemented it "head-on", without giving it much thought. The idea was quite simple: a frame is rendered at a resolution that is 2, 4, 8, etc. times as large as the resolution of your monitor. Before the rendered frame is displayed, it is scaled down, and the values of the rendered pixels that are squeezed into the same screen pixel are averaged. That's how pixels are antialiased, not only at triangle borders but across the entire scene. This algorithm is now called supersampling (SSAA). The "2x", "4x", "6x", and "8x" labels that denote antialiasing modes come from here: the multiplier used to show how many times the frame resolution exceeded the screen resolution.

Even this simple description makes it clear that supersampling dealt a heavy blow to a video card's performance: the card had to render a frame at a higher resolution and consequently write more data into memory, twice as much for AA 2x and four times as much for AA 4x.
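The scale-down step of supersampling can be illustrated with a minimal Python sketch. It is not the code of any driver or chip: the function name `supersample_downscale` and the tiny grayscale "frame" are assumptions made up for illustration. Note that `factor` is counted per axis here, so `factor=2` corresponds to what the text calls 4x (four rendered pixels per screen pixel).

```python
def supersample_downscale(hi_res, factor):
    """Average each factor x factor block of a grayscale frame (list of rows).

    Hypothetical helper: 'factor' is the per-axis scale, so factor=2 means
    four rendered pixels are squeezed into one screen pixel (SSAA 4x).
    """
    height = len(hi_res) // factor
    width = len(hi_res[0]) // factor
    screen = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect the rendered pixels that land in this screen pixel.
            block = [hi_res[y * factor + dy][x * factor + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        screen.append(row)
    return screen


# A 4x4 render of a diagonal edge (1.0 = triangle, 0.0 = background),
# scaled down to a 2x2 screen.
hi_res = [
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
screen = supersample_downscale(hi_res, 2)
print(screen)  # [[1.0, 0.25], [0.25, 0.0]]
```

The two screen pixels crossed by the edge end up with intermediate values, which is the smoothing effect; the price is that every one of the 16 rendered pixels had to be fully shaded and written to memory.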
As a result, performance in SSAA 4x mode often dropped fourfold, hardly an acceptable situation. Multisampling (MSAA), first implemented in NVIDIA GeForce 3, became the first improvement over SSAA. While supersampling was nothing more than a clever software "hack" that made a video card render a frame at a higher resolution and then scale it down to the screen resolution, averaging pixel values, multisampling is a hardware optimization. It saves performance on calculating subpixel colors: if all (say, 4) subpixels of a pixel lie inside a triangle, they get the same precalculated color value, and only the Z and stencil buffers are rendered at the full higher resolution. Thanks to algorithms that compress the identical color information of most subpixels, MSAA also significantly reduces the load on memory bus bandwidth. Besides, because more Z values can be calculated per cycle, the Z-buffer of the increased resolution is filled much faster than an entire frame in SSAA mode. Intensive development of antialiasing in video cards practically stopped at this stage. There followed a long period of fine-tuning MSAA algorithms:

But on the whole, antialiasing fell into stagnation. It was especially noticeable at NVIDIA, where the main indicator of antialiasing quality (the number of subsamples a video card calculates for each pixel) had not changed since GeForce 3. Only now, in GeForce 8800, has NVIDIA dared to significantly overhaul its antialiasing algorithms.

MSAA and GeForce 8800

It's important to understand that the stagnation in antialiasing development has partly to do with video memory bus width and video memory capacity, which limited further growth in MSAA quality. MSAA is just a "superstructure" over supersampling, a modification of it. A video card still renders a frame at a higher resolution, averages subsamples, and then displays the result. Hence, 6x and 8x MSAA modes require frame buffers 6 and 8 times as large, while on-board video memory is not always enough even to store all vertices and textures, and memory bus width grows much more slowly than GPU appetites. However, technologies are always evolving. What seemed impossible a couple of years ago is available in stores today.

The first antialiasing improvement in NVIDIA G80 has to do with good old multisampling. As you may remember, multisampling relies on testing the Z coordinates of subpixels in advance. The more such Z tests a video card can perform, the more subsamples it can calculate. Since GeForce 3, video cards from NVIDIA and ATI have been able to perform only two Z tests per cycle, but they can calculate different subpixels in different cycles: GeForce could calculate either two subpixels in one cycle or four subpixels in two cycles; Radeon went further and got the 6x mode, where 6 subpixels took only three cycles to calculate. You have probably already guessed the first MSAA improvement in G80: NVIDIA made the new chip calculate 4 subpixels per cycle and, as in previous GeForce cards with MSAA, calculate another 4 subpixels during the next cycle. Thus, we get MSAA 8x.
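The cycle counts quoted above follow from simple division, which the short sketch below reproduces. The helper name `msaa_cycles` and the mode labels are made up for illustration; the per-cycle Z-test figures are the ones stated in the text, and the model deliberately ignores everything else a real ROP does.

```python
import math


def msaa_cycles(subsamples, z_tests_per_cycle):
    """Cycles needed to Z-test all subsamples of one pixel (hypothetical helper)."""
    return math.ceil(subsamples / z_tests_per_cycle)


# (subsamples per pixel, Z tests per cycle), figures taken from the text.
modes = {
    "GeForce MSAA 2x": (2, 2),
    "GeForce MSAA 4x": (4, 2),
    "Radeon MSAA 6x": (6, 2),
    "G80 MSAA 8x": (8, 4),
}

cycles = {name: msaa_cycles(*config) for name, config in modes.items()}
for name, count in cycles.items():
    print(f"{name}: {count} cycle(s)")
```

Under this simplified model, G80 reaches 8x in the same two cycles that earlier GeForce chips needed for 4x.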
It should be noted that such an improvement did not come out of nowhere: writing 4 subpixels into memory per cycle requires higher memory bus bandwidth. This feature appeared in G80 partly thanks to the 384-bit bus. The new MSAA mode naturally brought improved subsample grids. Let's have a look at these grids using D3DFSAAViewer and compare them with the grids in previous NVIDIA chips as well as in competing GPUs:

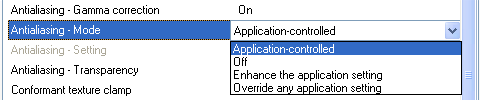

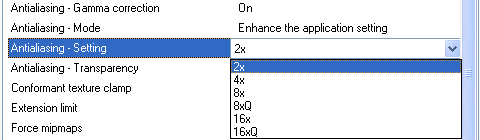

We can clearly see that the subsample grids in 2x and 4x modes in GeForce 8800 are no different from the grids that first appeared in GeForce 6800. Likewise, the 2x and 4x grids in GeForce 8800 are mirror reflections of the grids in the corresponding Radeon modes, starting from Radeon 9700. The 8x mode is certainly the most interesting. We can clearly see that we are indeed dealing with multisampling, unlike the combined MSAA+SSAA mode in GeForce 7800 (and all GeForce cards starting from GeForce 4). NVIDIA clearly followed ATI in subsample layouts and avoided arranging the subsamples uniformly on the grid, which is very important for better antialiasing of a greater number of inclined lines. But we should also note that the layout of subsamples in MSAA 8x mode in GeForce 8800 resembles the layout in 8xS mode in GeForce 7800 rather than the layout in Radeon 6x mode. To conclude the theoretical account of MSAA in GeForce 8800, we should mention that G80 inherited gamma correction and transparency antialiasing, which we have known since GeForce 7800.

CSAA modes in GeForce 8800

But the expected MSAA update was not enough for NVIDIA engineers. They added another significant improvement to G80: Coverage Sampled Antialiasing, CSAA. What is CSAA, why has it appeared, and how does it work? The fact is, a further increase in the number of MSAA subsamples has stopped paying off: the quality gain from, say, MSAA 16x cannot justify the amount of memory this mode requires, because the frame would contain 16 times (!) as many pixels as the screen. That is, if you configure a game to run in 1600x1200, your video card will have to render frames in 6400x4800! Considering that it has become fashionable to render frames in 64-bit colors (FP16) to obtain an HDR effect, a 1600x1200 frame in MSAA 16x mode will require (1600 x 1200 x 4) + (6400 x 4800 x 8) + (6400 x 4800 x 4) bytes, or about 360 MB of video memory, just for frame buffers, without taking into account textures, vertices, and shaders.
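The frame-buffer estimate can be checked with a few lines of Python. The function name and the interpretation of the three terms (a 32-bit displayed buffer, an FP16 color buffer at 8 bytes per sample, and a 4-byte Z/stencil buffer) are assumptions; the text gives only the numbers, not the labels.

```python
def msaa_frame_buffer_bytes(width, height, scale_per_axis,
                            display_bytes=4, color_bytes=8, depth_bytes=4):
    """Rough frame-buffer footprint, following the three terms in the text.

    Assumed meaning of the terms (not stated in the article):
      display_bytes - 32-bit buffer at screen resolution
      color_bytes   - FP16 (64-bit) color per sample
      depth_bytes   - Z/stencil per sample
    """
    screen_pixels = width * height
    sample_pixels = (width * scale_per_axis) * (height * scale_per_axis)
    return (screen_pixels * display_bytes
            + sample_pixels * color_bytes
            + sample_pixels * depth_bytes)


# 1600x1200 on screen, 4x per axis = 16 samples per pixel (6400x4800).
total = msaa_frame_buffer_bytes(1600, 1200, 4)
print(total)                       # 376320000
print(f"{total / 2**20:.1f} MiB")  # 358.9 MiB
```

The result, roughly 359 MiB, matches the rounded 360 MB figure, and almost all of it comes from the two per-sample buffers.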
That estimate also leaves out triple buffering, which is rather popular among competent gamers, as well as the option to render a frame in 128-bit colors, which appeared in Direct3D 10. NVIDIA engineers obviously had to find another solution to this problem. They carried out some experiments and found that, for pixels containing triangle borders, precise information about how much of the pixel each triangle covers matters more to the final pixel value than additional subpixel colors. Here is the charm of this method: whether a subpixel is inside a triangle or not is expressed by just two values, yes or no, 1 or 0. When written into a frame buffer, these values do not load the memory bus as much as color values do (we are told that transmitting 16 coverage samples is comparable to transmitting 4 color samples; we'll check this below in real applications). Improved information about the coverage of a pixel by a triangle, plus the 2, 4, or 8 color values of MSAA subpixels, can be used to calculate a screen pixel's color with higher precision. This method fits in well with existing rendering algorithms (one of the key problems of most other AA methods is that they are incompatible with the algorithms used in hardware, APIs, and applications), it does not load the memory bus much, and it does not take up much video memory. When CSAA fails (for example, on intersecting triangles or the borders of shadow volumes), we do not lose antialiasing altogether; we just lose CSAA, that is, we fall back to a certain MSAA level (2x, 4x, or 8x).

Thus, CSAA is a further step beyond SSAA, which smoothes everything in a scene "head-on" at the cost of horrible performance drops. MSAA makes do with calculating and transmitting a single color value (thanks to compression) for a screen pixel that lies inside a triangle. CSAA makes do without transmitting and storing a color value or Z value for each subpixel.
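To make the coverage idea more tangible, here is a toy Python sketch of a single pixel crossed by one triangle edge. It is an illustration under stated assumptions, not NVIDIA's hardware algorithm: the sample positions are plain regular grids (real chips use sparse, irregular layouts), the edge is the line x + y = 0.9 inside a unit pixel, and all names are hypothetical. Only two colors are stored, as MSAA would provide them; the coverage samples merely refine the blend weight.

```python
def sample_grid(n):
    """n x n sample positions at cell centers of a unit pixel (assumed layout)."""
    return [((i + 0.5) / n, (j + 0.5) / n) for j in range(n) for i in range(n)]


def covered_fraction(samples, threshold):
    """Share of samples inside the triangle, modeled as the half-plane x + y < threshold."""
    inside = [x + y < threshold for x, y in samples]
    return sum(inside) / len(samples)


def blend(fg, bg, weight):
    """Mix the triangle color and the background color by the coverage weight."""
    return tuple(weight * f + (1.0 - weight) * b for f, b in zip(fg, bg))


FG = (1.0, 0.0, 0.0)   # triangle color (one stored color sample)
BG = (0.0, 0.0, 1.0)   # background color (another stored color sample)
EDGE = 0.9             # hypothetical edge position

msaa_weight = covered_fraction(sample_grid(2), EDGE)   # 4 samples
csaa_weight = covered_fraction(sample_grid(4), EDGE)   # 16 coverage samples
exact_weight = EDGE ** 2 / 2                           # true covered area

msaa_pixel = blend(FG, BG, msaa_weight)
csaa_pixel = blend(FG, BG, csaa_weight)
print(msaa_weight, csaa_weight, round(exact_weight, 3))  # 0.25 0.375 0.405
print(csaa_pixel)  # (0.375, 0.0, 0.625)
```

In this toy case the 16 yes/no coverage samples bring the blend weight closer to the true covered area than 4 samples do, without storing any additional colors.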
CSAA then refines the averaged value of a screen pixel by using precise information about how triangle borders cover this pixel.

Antialiasing settings in drivers

Before we proceed to our tests, we should mention another important issue: the antialiasing settings available in games and drivers for GeForce 8800. As none of the existing games knows about CSAA, it can be controlled only from the ForceWare control panel. And here we face a problem: most modern games do not allow AA to be forced from the driver control panel, because it ruins their rendering algorithms. How can we enable CSAA in these games if they don't know about it and you cannot force it in the driver? NVIDIA programmers solved this problem by adding a new antialiasing control mode to the control panel: "Enhance the application setting". It is implemented for the new coverage sampled antialiasing in the first place. You don't force antialiasing here; you trick the application instead. Here is how this mode works: you set "Antialiasing - Mode" to "Enhance the application setting", then you specify the desired antialiasing mode in the "Antialiasing - Setting" field (so far this is no different from forcing AA). Then you start a game and choose any antialiasing mode there. That's it: the driver uses the mode you selected in the control panel instead of the mode specified in the game. The application thinks that it is working with antialiasing and adjusts its rendering algorithms accordingly, while the driver uses an antialiasing mode that is unknown to the application. It's an elegant and correct solution.

As for MSAA, the D3D driver correctly detects the new 8x mode and reports it to applications through "caps". For example, 8x antialiasing is available in Far Cry and a number of other correctly written applications on GeForce 8800. And you can always use the above-mentioned trick in incorrectly written applications (for example, Half-Life 2).
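The difference between forcing a mode and enhancing the application's own setting can be summarized in a small decision function. This is only a sketch of the behavior as described in the text: the function name, the lowercase mode labels, and the assumption that "enhance" leaves AA off when the game requests none are all hypothetical, not taken from driver documentation.

```python
def effective_aa_mode(panel_mode, panel_setting, app_request):
    """Return the AA mode the driver would end up using (illustrative model).

    panel_mode    - "application", "override" (forcing) or "enhance"
    panel_setting - mode chosen in the control panel, e.g. "16xQ"
    app_request   - mode chosen in the game, or None if the game asks for no AA
    """
    if panel_mode == "application":
        return app_request
    if panel_mode == "override":
        # Forcing: the game is not told, which may break its rendering.
        return panel_setting
    if panel_mode == "enhance":
        # The game enables its own AA path; the driver swaps in the panel mode.
        # Assumption: with no AA requested by the game, nothing is substituted.
        return panel_setting if app_request is not None else None
    raise ValueError(f"unknown panel mode: {panel_mode}")


print(effective_aa_mode("enhance", "16xQ", "4x"))   # 16xQ
print(effective_aa_mode("enhance", "16xQ", None))   # None
```

The point of the "enhance" branch is that the application still believes antialiasing is active and prepares its render targets accordingly, while the actual sample pattern is chosen by the driver.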
But there is one nasty issue here: carried away by its new CSAA algorithm, NVIDIA decided to build the new modes into the same list as MSAA. The idea may be right, but the implementation is not. Let's have a look at the screenshot:

Raise your hand if you understand which modes are MSAA and which are CSAA. What? You don't care? Look. The mode marked as "8x" in the control panel is actually not the "8x" mode that is available in some "correct" applications. The 8x mode in the control panel is not MSAA, it's CSAA. MSAA 8x, available in some applications, is called 8xQ for some reason. That's the first problem: a discrepancy between the mode name in the control panel and in the Direct3D API. Next, if Q is the mark of MSAA modes, why don't the 2x and 4x modes carry it? And what does the 16xQ mode mean? The inconsistent use of this mark is the second problem. And finally, it would have made sense to highlight the new CSAA modes for purely marketing reasons. There is a lot of talk about the new AA, but when users buy GeForce 8800, they don't see any CSAA in the drivers. Strange, eh? Yep, very strange. Here is a table for those who want to know what each antialiasing mode in the control panel means:

What do we see in this table? Firstly, the 8x mode in the control panel is actually MSAA 4x + CSAA 8x. Secondly, the 16x mode is MSAA 4x + CSAA 16x, while the 16xQ mode is MSAA 8x + CSAA 16x. So we can assume (we'll test this assumption below) that the 16x mode in the ForceWare control panel may offer worse quality than 8xQ, which is strange given their names. We offer NVIDIA two solutions to this unpleasant mess in mode names. The first is simply to rename the modes consistently. For example:

Yes, the 16x mode disappears, but let's be honest with users: G80 does not support a 16x mode in terms of the nx nomenclature established historically since GeForce 256. A 16x mode implies 16 full-fledged subsamples in each screen pixel, and 16 coverage samples are not full-fledged ones. There is also a second way out: separate multisamples and coverage samples in the control panel by creating two lists (or sliders) for the numbers of multisamples and coverage samples. With this approach, users could be allowed to specify the number of coverage samples in the nx form for CSAA modes. That is, you select "Enhance the application setting" and then set the desired MSAA mode (for example, 4x). You may also specify a CSAA mode (for example, 16x) in the other list if you want. The second solution is more complex, but it's more flexible. But enough of theory, let's proceed to practice.

Testbed configurations

We carried out our performance analysis on a computer of the following configuration:

We carried out our quality analysis on a computer of the following configuration:

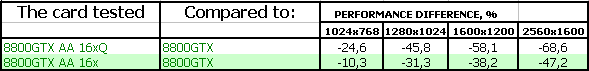

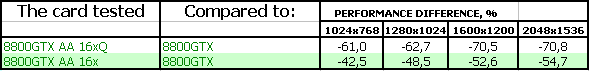

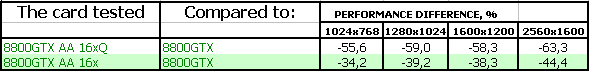

Unless otherwise specified in the text, video cards always have Transparency AA Supersampling / Adaptive AA Quality and AA Gamma Correction enabled, and all texture filtering optimizations are disabled (High Quality on GeForce cards and Catalyst A.I. on Radeon cards).

Antialiasing Performance in GeForce 8800

We decided to test the performance of the two heaviest antialiasing modes in GeForce 8800 GTX: 16x and 16xQ. Jumping a few steps ahead, we can note that the 16x mode offers a tad higher quality than the 6x mode in Radeon cards. The quality of the 16xQ mode is absolutely unattainable for all other modern video cards. We used the following test applications:

You can download test results in EXCEL format: RAR and ZIP

FarCry, Research (No HDR)

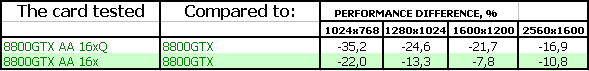

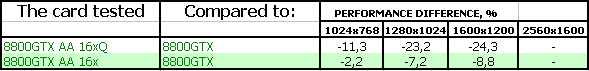

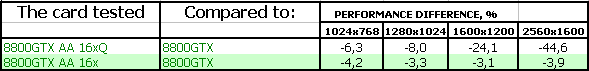

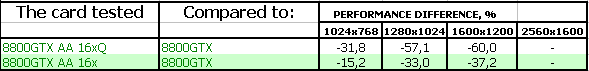

The 16x mode (MSAA 4x + CSAA 16x) in Far Cry yields a 38% performance drop in 1600x1200. We became interested in how much the new CSAA algorithm contributes to this drop and ran additional tests in Far Cry in 1600x1200, this time in AA 4x mode. We got 133.3 fps. So we can assume that CSAA with 16 coverage samples causes a performance drop on GeForce 8800 GTX similar to that of MSAA 4x. Switching to 16xQ in 1600x1200 reduces performance by the same 30 fps as switching from no AA to AA 4x, which fully corresponds to the difference between 16x and 16xQ. And finally, fps remained playable only in 2560x1600 16xQ.
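The reasoning behind the "similar performance drop" remark can be retraced with back-of-the-envelope arithmetic. Only three inputs below come from the text (133.3 fps in AA 4x, a 30 fps cost, a 38% drop); every other value is derived from them, under the assumption that the 38% is measured against the no-AA result, and should not be read as a measured result.

```python
# Figures quoted in the text for Far Cry at 1600x1200.
fps_4x = 133.3          # measured in AA 4x mode
cost_msaa_step = 30.0   # fps lost going from no AA to AA 4x (and from 16x to 16xQ)
drop_16x = 0.38         # relative drop of the 16x mode, assumed to be vs no AA

# Derived values (estimates, not measurements).
fps_no_aa = fps_4x + cost_msaa_step       # about 163.3 fps
fps_16x = fps_no_aa * (1.0 - drop_16x)    # about 101.2 fps
cost_csaa_16 = fps_4x - fps_16x           # extra cost of 16 coverage samples
fps_16xq = fps_16x - cost_msaa_step       # about 71.2 fps

print(f"no AA ~ {fps_no_aa:.1f} fps, 16x ~ {fps_16x:.1f} fps, 16xQ ~ {fps_16xq:.1f} fps")
print(f"CSAA 16x on top of MSAA 4x costs ~ {cost_csaa_16:.1f} fps")
```

Under these assumptions, adding 16 coverage samples costs roughly 32 fps, close to the 30 fps cost of MSAA 4x itself, which is consistent with the claim that the two drops are similar.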

F.E.A.R.

The situation in F.E.A.R. is different: we see twofold performance drops, which grow together with resolution. However, the game remains playable even in the heaviest modes.

Splinter Cell Chaos Theory (No HDR)

The situation in Splinter Cell changes again: the game is evidently limited by something other than frame buffer fill rate. As a result, even the top-quality 16xQ mode shows performance drops of no more than 35%.

Call Of Duty 2

We can see a similar situation in Call of Duty 2, only the performance drop is even lower in percentage terms. We could explain such behavior of antialiasing in SCCT and CoD2 by the many complex shaders in these games, which keep the accelerator busy for many cycles, during which the ROPs manage to write all the necessary samples into the buffer. But this theory is not confirmed by the results GeForce 8800 GTX demonstrates in F.E.A.R., which can also boast many shaders in its code. What is the reason for such a heavy performance drop in F.E.A.R.? Perhaps the problem lies in buggy drivers? Let's hope that these questions will be answered in time.

Half-Life2: ixbt01 demo

Half-Life 2 is so heavily CPU-limited that AA 16x on GeForce 8800 GTX comes practically free of charge. The same cannot be said of 16xQ, where performance starts to fall from 1600x1200 upward. It leads to an almost twofold performance drop in 2560x1600, but GeForce 8800 GTX still demonstrates 77 fps in this resolution.

DOOM III High mode

Despite the significant performance drop in 16xQ, GeForce 8800 GTX still provides playable fps in Doom 3 even in 1600x1200.

At the same time, we don't like such twofold performance drops: what if this were not Doom 3 but a modern game, where fps is not high even without AA?

Chronicles of Riddick, demo 44

The overall situation resembles Doom 3, but the absolute fps values are a tad lower. This gives hope that antialiasing performance under OpenGL is currently limited by buggy drivers, so performance drops may shrink and fps may grow in the future. But let's have a look at the quality of the antialiasing modes under review.

Antialiasing quality in GeForce 8800

When we started analyzing antialiasing quality, we faced a funny problem: it turned out to be very difficult to find games that clearly show the difference between all antialiasing modes. Most modern games are either dark first-person shooters, where you cannot always tell whether AA is enabled (Doom 3, Quake 4, Prey, F.E.A.R., Splinter Cell); or they do not allow saving in an arbitrary location (Call of Duty 2, Fahrenheit, Prince of Persia: The Two Thrones, Tomb Raider: Legend); or they do not support antialiasing at all (Gothic 3). That's why we had to fall back on good old games from 2004: Far Cry and Half-Life 2. In our defense, we are analyzing antialiasing quality, and these very games are the best at exposing all its weak points. We also have one new game for dessert, The Elder Scrolls IV: Oblivion. It was added to our tests to see how GeForce 8800 copes with antialiasing combined with FP16 HDR.

Far Cry

First we are going to examine the basic quality of antialiasing of triangle borders in Far Cry.

So, the 2x and 4x modes are practically identical in quality on GeForce 8800 and Radeon X1900. Radeon does not support higher modes. Too bad: the 8xQ and 16xQ modes again demonstrate top quality and leave no chance to the Radeon modes. We can again see the anomaly: the 16x mode offers worse quality than 8xQ. On the whole, we can conclude that NVIDIA is right and that it really makes no difference to the G80 ROPs which buffer format they calculate AA for: the quality and ranking of forced modes in Oblivion are no different from the quality and ranking of these modes in Far Cry and Half-Life 2 with gamma correction disabled. Oblivion looks just great with HDR and forced 16xQ ;-)

Conclusions

Foxconn GeForce 8800 GTX 768MB PCI-E is actually a reference card, but it stands out with its bundle and slightly modified cooling system. So unless its price is inflated, this product will interest users who like new powerful products. The card worked great; we had absolutely no gripes. You can find more detailed comparisons of various video cards in our 3Digest. As for the new antialiasing modes in G80, we can draw the following conclusions from our test results:

And finally, you should understand that we have compared the AA quality of NVIDIA's new generation of video chips with the AA quality provided by the old Radeon chips. We'll be able to carry out a proper comparison only after AMD launches the first video cards based on R600. That comparison will remain valid not only for the next several months, but probably for several years to come.

Foxconn GeForce 8800 GTX 768MB PCI-E gets the Excellent Package award (December).

It also gets the Original Design award.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.