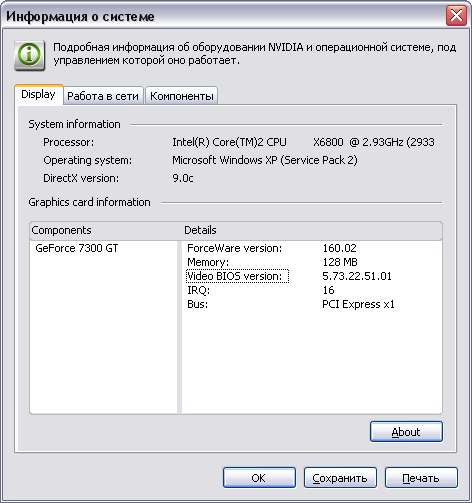

We've almost forgotten about cheap graphics cards. Well, we haven't really forgotten about them, since they are represented in our monthly 3Digests. But these products change very little. Performance stays very low, and new drivers bring almost nothing new. You also have to sacrifice quality in games, to say nothing of AA and AF, which you can simply forget about. 3D works only in simple games. Of course, aesthetes may enable all options and enjoy a slide show. But that's not the point today. Suppose you have a computer with integrated graphics (this is especially common with barebones), you want to upgrade your graphics subsystem to a more-or-less modern 3D solution, and you don't have a PCI-E x16 slot. What can you do? Yep, you should look for a PCI-E x1 graphics card. Today we review one such product: a 7300 GT card made and factory-overclocked by Galaxy.

Graphics card

The photo clearly shows that the card has a unique design. Its memory chip layout is similar to that of the 7600 GT. The card is rather small. It has a TV-Out with a non-standard jack, so you will need the bundled adapters to output video to a TV set via S-Video or RCA. You can read about the TV-Out in more detail here. Analog monitors with D-Sub (VGA) are connected either via the VGA connector or via special DVI-to-D-Sub adapters (not included in the bundle). Maximum resolutions and frequencies:

As for MPEG-2 (DVD-Video) playback, we analyzed this issue back in 2002, and little has changed since then: CPU load during video playback on all modern graphics cards does not exceed 25%. As for HDTV and other trendy video features, we are going to cover them as soon as possible.

Now for the cooling system. There is not much to say about it. As the photo shows, it's an ordinary round cooler with a fan in the middle. The fan spins slowly, so there is no noise. However, we cannot guarantee that such a cooler will always remain silent (in some cases such coolers have become noisy after six to twelve months). We monitored temperatures using RivaTuner (written by A. Nikolaychuk AKA Unwinder) and obtained the following results:

Galaxy GeForce 7300 GT 128MB PCI-E x1

As we can see, the temperatures are not very high despite the increased frequencies (500 MHz versus 350 MHz).

Bundle

Box

Installation and drivers

Testbed configuration:

Test results: performance comparison

We used the following test applications:

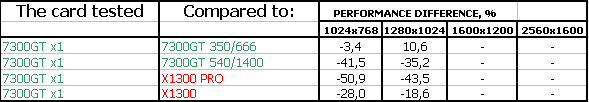

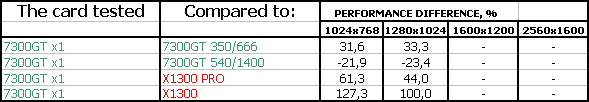

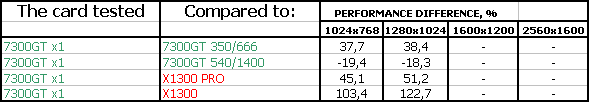

Graphics card performance

Those of you who are into 3D graphics will understand the charts on your own. Newcomers and those who have just started choosing graphics cards can read our comments. First of all, you should browse our brief references dedicated to modern graphics card series and their underlying GPUs. Note the clock rates, shader support, and pipeline architecture.

ATI RADEON X1300-1600-1800-1900 Reference

NVIDIA GeForce 7300-7600-7800-7900 Reference

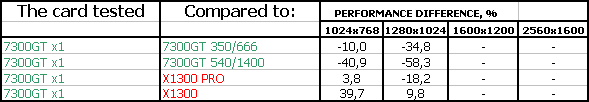

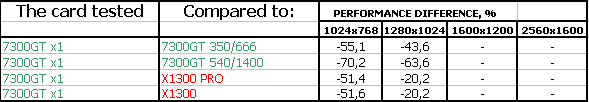

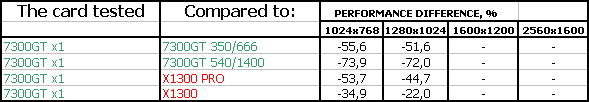

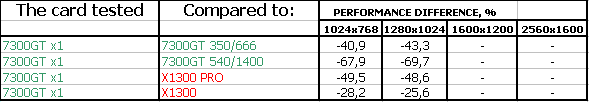

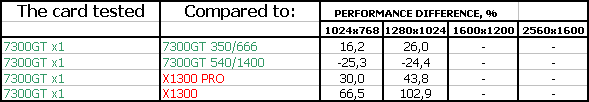

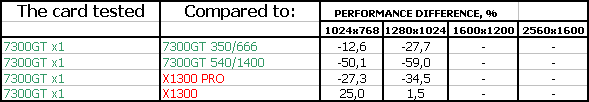

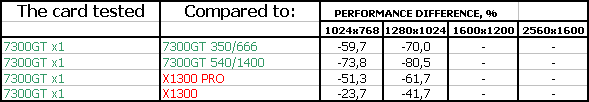

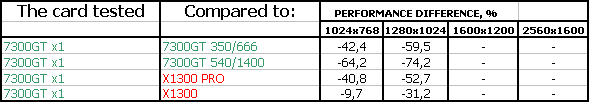

Secondly, you can browse our 3D graphics section for the basics of 3D and references on new products. There are only two companies that release graphics processing units: ATI (recently merged with AMD and now operating under its name) and NVIDIA, so all information is generally divided into two respective parts. You can also read our monthly 3Digest, which sums up all graphics card performance charts. Thirdly, have a look at the test results. We won't analyze each test, because it makes more sense to draw a bottom line at the end of the article. As this is a budget product, designed to work in 3D only at minimal resolutions, we ran our tests at 1024x768 and 1280x1024. There is no point in using higher resolutions.

FarCry, Research (No HDR)

FarCry, Research (HDR)

F.E.A.R.

Splinter Cell Chaos Theory (No HDR)

Splinter Cell Chaos Theory (HDR)

Call Of Juarez

Company Of Heroes

Serious Sam II (No HDR)

Serious Sam II (HDR)

Prey

3DMark05: MARKS

3DMark06: SHADER 2.0 MARKS

3DMark06: SHADER 3.0 MARKS

Conclusions

At first sight, the Galaxy GeForce 7300 GT 128MB PCI-E x1 demonstrates depressing results. 128 MB of memory is no longer enough even for budget cards. Besides, a smaller local video memory increases the amount of data pumped over the bus to and from system memory, and this bus is narrow. Hence the low performance in games that use a lot of textures, where the size of local video memory is very important. Only where shader computing prevails does the Galaxy card catch up with or pull ahead of its competitors, thanks to its increased frequencies. But the situation is not that bad, considering the purpose of this product. It is designed from the outset for integrated systems with terribly weak 3D, or for machines without a PCI-E x16 slot (this happens). And in such cases it's simply indispensable!
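To put the two hardware arguments of the conclusions (the narrow bus and the increased frequencies) into rough numbers, here is a back-of-the-envelope calculation. It assumes first-generation PCI Express signaling (2.5 GT/s per lane with 8b/10b encoding), which is our assumption for a card of this generation, and uses the 500 MHz and 350 MHz clocks quoted in this review.

```latex
\begin{aligned}
B_{\text{lane}} &= 2.5\ \text{GT/s} \times \tfrac{8}{10} = 2\ \text{Gbit/s} = 250\ \text{MB/s (per direction)} \\
B_{\times 1}    &= 1 \times 250\ \text{MB/s} = 250\ \text{MB/s} \\
B_{\times 16}   &= 16 \times 250\ \text{MB/s} = 4000\ \text{MB/s} \approx 4\ \text{GB/s} \\
\frac{f_{\text{Galaxy}}}{f_{\text{reference}}} &= \frac{500\ \text{MHz}}{350\ \text{MHz}} \approx 1.43
\end{aligned}
```

So when textures no longer fit into the 128 MB of local memory, the card has to fetch them over a link with one sixteenth of the peak bandwidth of an x16 slot, while in shader-bound scenes its roughly 43% higher clock can pay off. These are theoretical peak figures; real bus throughput is lower because of protocol overhead.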
It's cheap, it offers some 3D functionality, and it will let you play some simple modern games. So, while there's no point in advertising it widely, it has a niche where it's essential. We also haven't mentioned multi-monitor configurations yet: if you have only one PCI-E x16 slot occupied by a graphics card, a second graphics card with an x1 interface will let you connect more monitors to the system.

And here is one more thing that we never tire of repeating from article to article. If you decide to choose a graphics card yourself, you have to realize that you are replacing one of the fundamental PC components, which might require additional tuning to improve performance or enable some quality features. This is not a finished product, but a component. So you must understand that in order to get the most from a new graphics card, you will have to acquire some basic knowledge of 3D graphics and graphics in general. If you are not ready for this, you should not perform upgrades by yourself. In that case it would be better to buy a ready-made computer with preinstalled software (along with the vendor's technical support) or a gaming console that doesn't require any adjustments. More comparative charts of this and other graphics cards are provided in our 3Digest.

Galaxy GeForce 7300 GT 128MB PCI-E x1 gets the Original Design award (June 2007):

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.