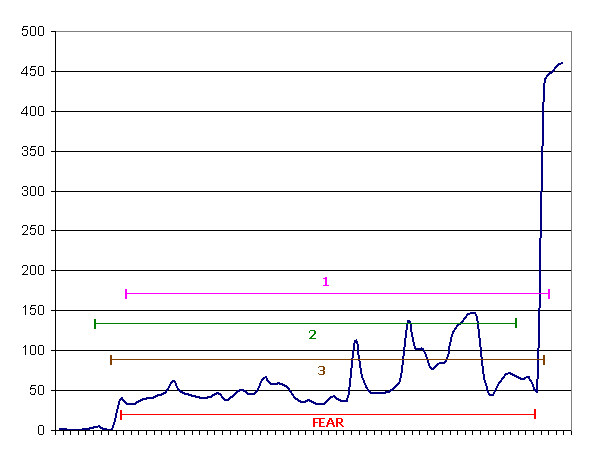

The everyday work of 3D testers always involves some sore subjects. One of the most pressing is that far from all 3D games allow you to run performance tests. Even fewer games offer convenient benchmarks with automation features that provide complete information on game performance with given settings. Benchmarking features differ greatly from game to game: some games do not offer even the simplest FPS counter, while others offer every tool you can imagine. From this point of view, there are actually three types of games. The first type has no benchmarks at all. Such games are in the majority, for example, Need For Speed: Most Wanted, Condemned: Criminal Origins, Ghost Recon Advanced Warfighter, Tomb Raider: Legend, etc.; the list is practically endless. The second type offers benchmarks that are not always convenient to use: for example, F.E.A.R., which lacks automation (you cannot run its benchmark from a command line or use custom demos), as well as lots of games that show only instant FPS values, like TES4: Oblivion. And only a small group of games has all the features necessary to measure average FPS (sometimes even minimal and maximal FPS), to record user demos on various game levels, and to calculate all necessary results when a demo is played back. For example: Half-Life 2, DOOM 3, and Serious Sam 2 (the latter can record user demos, play standard demos, and display minimal, maximal, and average FPS values, with or without peaks, etc.). As games without built-in benchmarks are in the majority and are much more diverse, we certainly want to test such 3D applications in any way possible. One way is to use third-party utilities that read the instant FPS and display it, and that can also analyze performance based on instant FPS over a custom period of time. They usually calculate the minimal, maximal, and average frame rate in a given time interval. The most famous utility of this kind is FRAPS.
It provides such performance measurement features even in its free version, which can be downloaded from the FRAPS web site. This method is probably the only way to benchmark applications without integrated benchmark support, but it's not flawless. Moreover, its flaws can cancel out all its advantages. In this article we'll try to find out whether it makes sense to use FRAPS to measure performance in 3D games that have no built-in rendering performance tests. We'll also try to analyze the main drawbacks of this method.

Theoretical outlook

Even from a purely theoretical point of view, we can point out several big problems that testers can (and will) face. In our opinion, they may eclipse all the advantages of this method. For example, one of the biggest drawbacks is the human factor. A person cannot press a hotkey to start and stop a benchmark at precisely the right moment. To get accurate average frame rates in various tests, the moments when calculation of the average, minimal, and maximal FPS starts and stops must be the same every time! For natural reasons, a person cannot be perfectly accurate: precision is limited by reaction time, attention, and other factors. Although the author of FRAPS took a step in the right direction to reduce the effect of human weaknesses (a timer stops the benchmark when the set time is up), this does not eliminate them completely. It's still up to the user to start the benchmark, and if the test length is limited, the end depends on the start. A solution might be an option to start the benchmark as soon as the instant FPS crosses a specified value, which often happens when you start a demo from the game menu: the frame rate in a menu is usually much higher (with some exceptions), so this transition could be used to start measuring performance. The program lacks this setting so far; it exists only in our imagination. But let's get back to reality.
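FRAPS has no such trigger, so purely as an illustration of the idea, here is a minimal Python sketch, assuming the tool has the render time of every frame in milliseconds. The function `find_trigger_frame` and its parameters are hypothetical. Since leaving a fast menu for a heavier demo scene usually makes the frame rate fall, the sketch fires on a drop below the threshold by default, with `rising=True` for the opposite case.

```python
from typing import Optional, Sequence


def find_trigger_frame(frame_times_ms: Sequence[float],
                       threshold_fps: float,
                       rising: bool = False) -> Optional[int]:
    """Index of the first frame whose instantaneous FPS crosses threshold_fps.

    frame_times_ms: render time of each frame in milliseconds (all > 0).
    rising=False: fire when FPS drops below the threshold, e.g. when a fast
    menu gives way to a heavier demo scene (hypothetical default).
    rising=True: fire when FPS climbs above the threshold.
    Returns None if no crossing in the requested direction occurs.
    """
    was_above: Optional[bool] = None
    for i, ms in enumerate(frame_times_ms):
        is_above = 1000.0 / ms > threshold_fps
        # A crossing is a change of side; only report it in the chosen direction.
        if was_above is not None and is_above != was_above and is_above == rising:
            return i
        was_above = is_above
    return None
```

For example, with hypothetical menu frames of 3 ms (about 333 fps) followed by demo frames of 20 ms (50 fps) and a 100 fps threshold, the trigger fires at the first 20 ms frame. Combined with a fixed-length timer, both ends of the measurement window would then be independent of the tester's reaction time.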
The need for manual operations is a real disappointment: you must start and stop the test by hand (except for the timer mentioned above). There is no automation. Just imagine: to get more or less correct data on the average frame rate, you'd better run the test several times, drop evidently wrong results (caused by the human weaknesses mentioned above or by technical problems), and average the remaining ones. And what if there are several tests? In different resolutions, with different settings, with different scenes, and, finally, with different hardware configurations! A tester has to stay very careful for several hours (!) to avoid mistakes, not only when changing settings and resolutions, but also when starting and stopping each benchmark exactly on time. Such tests may take several days to complete, so no one can guarantee that a human tester will do everything accurately and uniformly. Let's analyze examples of errors a user can make in such tests. Have a look at the picture that shows possible tester errors based on real results in F.E.A.R. The red segment is the one that must be used for measuring the average, minimal, and maximal frame rate (it's the segment used by the built-in benchmark); the other colors show segments a tester might use instead because of the human factor.

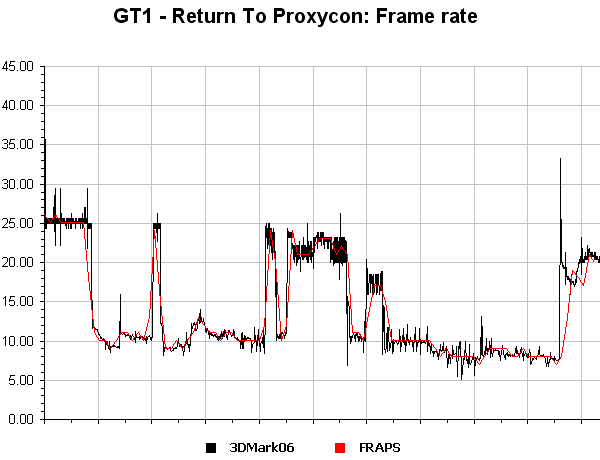
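The "run several times, drop evidently wrong results, average the rest" routine described above can be sketched in Python as follows. The function name `robust_average` and the 5% cutoff for an "evidently wrong" run are assumptions for illustration; the article does not specify how anomalous runs should be identified.

```python
from statistics import mean, median
from typing import List, Sequence, Tuple


def robust_average(runs_fps: Sequence[float],
                   max_deviation: float = 0.05) -> Tuple[float, List[float]]:
    """Average repeated benchmark runs after dropping evident outliers.

    A run counts as anomalous if it deviates from the median of all runs by
    more than max_deviation (a fraction; 0.05 = 5 %, an assumed cutoff).
    Returns (mean of the kept runs, list of discarded runs).
    """
    if not runs_fps:
        raise ValueError("need at least one run")
    mid = median(runs_fps)
    kept: List[float] = []
    dropped: List[float] = []
    for run in runs_fps:
        if abs(run - mid) / mid <= max_deviation:
            kept.append(run)
        else:
            dropped.append(run)
    if not kept:
        # With an even number of wildly different runs nothing may survive.
        raise ValueError("runs disagree too much; repeat the test")
    return mean(kept), dropped
```

With hypothetical runs of 61.2, 60.8, 61.5, 67.9, and 60.9 fps, the 67.9 fps run (say, one stopped too late) is discarded and the remaining four average 61.1 fps. Comparing each run with the median rather than the mean keeps a single bad run from shifting the reference point it is judged against.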

In the practical part we shall analyze such errors and evaluate their effect on FRAPS calculations in real 3D applications. Everything above applies to games that have no performance counters (at least for the average frame rate) but do have scripted scenes and/or an option to record and play back user demos. Even in such cases the results may differ significantly from real gaming performance, as a game does not consist of scripted scenes and demos only. But what if a game has no such features? For example, some testers use the following trick when a game has no demos or scripted scenes: they load a selected game level and simply complete it several times with various software and hardware settings. They seem to assume that the average frame rate will still be statistically valid. Of course, we shall check this assumption in the practical part of our article.

Tests in real applications

In this part of the article we are going to consider how much we can trust FRAPS results. We'll try various conditions and even attempt to recreate some possible tester errors. First, let's see whether FRAPS figures match what we are used to measuring. For this purpose, we ran the famous 3DMark06 benchmark, its first game test, in performance graph mode. Then we started FRAPS to compare the results obtained by the two methods, so far comparing only the instant frame rate.
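For reference, here is one way a FRAPS-like counter might turn per-frame timestamps into instant, average, minimal, and maximal FPS. This is a sketch, not a description of FRAPS internals: the function names are hypothetical, and taking the minimum and maximum over complete one-second buckets is an assumed convention. Timestamps are assumed to be strictly increasing.

```python
import math
from typing import Dict, List, Sequence


def instant_fps(timestamps_ms: Sequence[float]) -> List[float]:
    """Instantaneous FPS of each frame: 1000 / frame time in ms."""
    return [1000.0 / (b - a) for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def fps_summary(timestamps_ms: Sequence[float]) -> Dict[str, float]:
    """Average, minimal and maximal FPS over a measurement window.

    timestamps_ms: time (ms) at which each frame finished; the first entry
    marks the start of the window.
    avg: frames rendered / elapsed time.
    min/max: lowest and highest frame counts over complete one-second
    buckets (an assumed convention).
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two timestamps")
    start, end = timestamps_ms[0], timestamps_ms[-1]
    frames = len(timestamps_ms) - 1
    avg = frames * 1000.0 / (end - start)

    full_seconds = int((end - start) // 1000)
    if full_seconds == 0:
        raise ValueError("window shorter than one second")
    counts = [0] * full_seconds
    for t in timestamps_ms[1:]:
        # A frame finishing at exactly 1000 ms still belongs to second 0.
        bucket = math.ceil((t - start) / 1000) - 1
        if bucket < full_seconds:  # ignore the trailing partial second
            counts[bucket] += 1
    return {"avg": avg, "min": float(min(counts)), "max": float(max(counts))}
```

With a hypothetical trace of one second at 50 fps followed by one second at 100 fps, `fps_summary` reports an average of 75 fps, a minimum of 50, and a maximum of 100, whereas naively averaging the 150 per-frame values from `instant_fps` would give about 83.3 fps, because the fast second contributes twice as many frames.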

Now let's see how the average frame rate calculated by FRAPS correlates with data obtained from built-in benchmarks. For this purpose we shall test a couple of applications that can measure average FPS on their own. To get meaningful results, we'll take readings three times. Besides, we'll evaluate the effect of the human factor in the case of careful operation (here the human error was small).

FRAPS seems to cope well with its task: its results differ from those of the built-in benchmark by less than 2%. On the other hand, the results are evidently less stable than those obtained by the game itself, since the user still introduces the human factor. Besides, FRAPS may have yielded relatively good results because of the lengthy test demo, in which the start and the end of the demo (where the error appears) have little effect. Let's see what happens in other cases...
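The two quantities discussed here, how far the FRAPS average deviates from the game's own result and how stable repeated runs are, can be expressed with two small helpers. This is a sketch: the names are hypothetical, and the "both ways" spread is defined here, as an assumption, as the largest deviation of any single run from the mean of all runs.

```python
from statistics import mean
from typing import Sequence


def percent_diff(measured: float, reference: float) -> float:
    """Signed deviation of a measured average FPS from a reference value, in %."""
    return (measured - reference) / reference * 100.0


def spread_percent(runs_fps: Sequence[float]) -> float:
    """Largest deviation of any single run from the mean of all runs, in %.

    A value of 2.0 means every run lies within +/-2 % of the mean
    ("2 % both ways").
    """
    m = mean(runs_fps)
    return max(abs(r - m) for r in runs_fps) / m * 100.0
```

For instance, a hypothetical FRAPS average of 98 fps against a built-in result of 100 fps gives -2%, and hypothetical runs of 99, 100, and 101 fps have a spread of 1% both ways.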

We tried several demos in Far Cry on various levels. In this case the difference was larger than in Serious Sam 2. Perhaps this is because the demos were much shorter, so the start/end intervals made a bigger difference. In other words, the difference stems from manually starting and stopping the calculation of the average frame rate, and it affects the final results. To check the assumption made in the theoretical part of the article, let's look at the figures reported by the F.E.A.R. benchmark and compare them with FRAPS results. In one of the tests we deliberately stopped FRAPS a tad later than the test demo ended and captured part of the game outside the demo. The menu that appears after the test runs at a higher FPS, so the difference should be greater. We tried to recreate a real situation, when a test scene may be followed by a much simpler screen (menu, splash screen, etc.).

That's what happens if a tester concentrates on the display, watching it all the time and starting and stopping the calculation of the average FPS on time. In this case, FRAPS results differ little from the results of the program itself (that is, they are close to reality). But if we emulate a user's slow reaction, the result is noticeably different. In the last test, FPS calculation was stopped less than a second later than usual, yet the difference is large, because the frame rate jumps up at the end of the test. This is another human factor problem we described in the theoretical part: a drop in attentiveness, changes in reaction time, etc. Let's check the same with the first game test in 3DMark06.

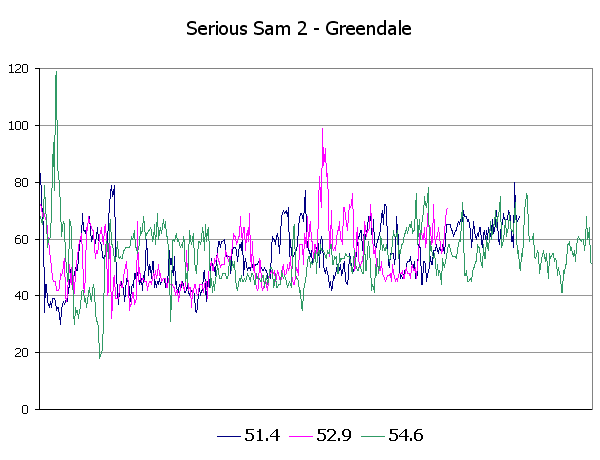
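The effect of a late stop is simple arithmetic: the reported average is total frames divided by total time, (T·f_demo + Δ·f_menu) / (T + Δ), so a fraction of a second of fast menu frames pulls it up, and the shorter the demo T, the more. The sketch below uses purely hypothetical numbers (a steady 50 fps demo, a 300 fps menu, a 0.8 s late stop), not the measured F.E.A.R. or 3DMark06 figures, and the function name is illustrative.

```python
def average_with_late_stop(demo_s: float, demo_fps: float,
                           extra_s: float, menu_fps: float) -> float:
    """Average FPS reported when the counter is stopped extra_s seconds after
    a demo of length demo_s (rendered at a steady demo_fps) has ended and a
    menu rendered at menu_fps is already on screen."""
    frames = demo_s * demo_fps + extra_s * menu_fps
    return frames / (demo_s + extra_s)


if __name__ == "__main__":
    # Hypothetical figures: a 50 fps demo, stopped 0.8 s late into a 300 fps menu.
    for demo_s in (30, 300):
        avg = average_with_late_stop(demo_s, 50, 0.8, 300)
        print(f"{demo_s:>3} s demo: {avg:.1f} fps ({(avg / 50 - 1) * 100:+.1f} %)")
```

Run as a script, it prints 56.5 fps (+13.0 %) for the 30-second demo and 50.7 fps (+1.3 %) for the 300-second one: the same 0.8-second slip is roughly ten times more harmful in the short demo, which is consistent with the larger FRAPS deviations seen in the short Far Cry demos than in the long Serious Sam 2 one.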

We can only confirm the above: an inattentive tester may stop FRAPS just a fraction of a second late, and the result will no longer correspond to true performance. Such results must be discarded. To get correct results, you should run the test several times (preferably five times, at least three) and then average the results, as the additional measurement error of this test procedure, 2-3%, is confirmed here as well. Such a measurement error (2-4% both ways) would be acceptable in certain cases, if all games allowed recording and playing back user demos. Demos may fail to reflect the real gaming situation, but at least they come close to what happens in a game. Sometimes you can use real-time scripted scenes rendered by the game engine, but such data reflect gaming performance to an even smaller degree. Results will differ significantly from gaming FPS and make little sense. Yes, they can demonstrate the relative performance of various video cards and settings when rendering scripted scenes, but they won't reflect performance in the game itself.

Let's review the most questionable methods some testers use to measure performance in games such as Oblivion, Need For Speed: Most Wanted, etc. These games do not allow you to record your own demos and have no long scripted scenes that reflect gaming performance and could be used for tests. That's why some testers resort to methods such as completing game levels several times. Let's see what happens if we just load a selected game level and complete it three times. In this case, the 3D scenes will naturally differ, but will the average frame rate be at least close to the true value? We'll start with Serious Sam 2, Greendale level. The graph shows the average frame rates for three attempts to pass a large plain-type section of this level. Completion time is quite long, from 7 to 9 minutes, so the average results are unlikely to differ much.

Let's review another first-person shooter, Far Cry: the beginning of the River level. In this game it's impossible to repeat a gaming situation; each attempt brings something new. This often happens in modern games, where enemies have some AI, do not appear in the same places, and act differently each time. Besides, it will be interesting to look at a shorter scene, where averaging over a long period will not smooth out the differences as much.

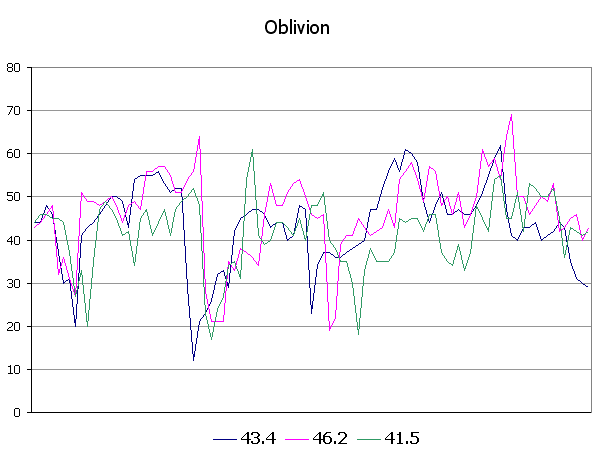

From the point of view of graphics technologies, one of the most interesting recent games is The Elder Scrolls IV: Oblivion. Unfortunately, the game itself offers only a frames-per-second counter, which can be enabled from the console. There are no options to record and play back demos. That's why some testers use the method mentioned above to measure performance in this game: they load a saved game and complete part of the level several times. But it's impossible to pass the level identically each time, so the results must contain large measurement errors.

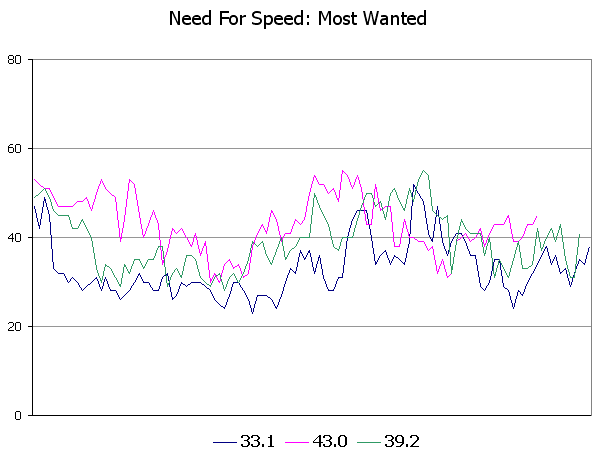

And the last game reviewed in the practical part of this article is Need For Speed: Most Wanted. In fact, this game was the reason for writing this article, as it's practically impossible to repeat a situation in it when testing performance with FRAPS. I already noted in my article about this game that using FRAPS-like utilities to measure the average FPS for a given race with the same configuration and car was very difficult because of the weather and other race conditions. The spread of results was even higher than the 10% specified in that article. The same track and the same cars with the same settings yield different results each time. The results of three races are shown in the graph:

Conclusions

The performance tests we carried out for this article demonstrated that FPS measurement with programs such as FRAPS can be used, with some reservations, for games that have no built-in benchmarks (average, minimal, and maximal frame rate) but allow recording and playing back demos, or at least can show the same real-time 3D animation (rendered by the game engine) every time you need it. But even for games that can play back demos or scripted scenes in real time, we should be careful about FRAPS results, as the spread of results may reach 2-4% both ways. So to get more or less accurate results, you must repeat the test several times (we recommend at least five). This introduces additional limitations into the method: the need to process results (drop anomalous data, average the rest), much more labour-intensive tests, and no automation except for setting the test duration, so these tests take much more time. Add to this a significant error even when tests are repeated multiple times, and you get a method of measuring 3D performance with low credibility. In our opinion, using FRAPS for games that cannot play back demos (Need For Speed: Most Wanted, TES4: Oblivion, and some others) makes absolutely no sense, as such tests demonstrate too big a spread of results: even 10% is unacceptable, to say nothing of a higher spread. Of course, our tests highlighted the weak spots. We can think of ways to soften side effects such as the human factor and different game situations in each test to get more accurate results. For example, in NFS: Most Wanted we could choose an empty track without cars, traffic, or competitors, but would such results correspond to the real situation in the game? In Oblivion we could find some deserted location, some plain, and go straight forward each time without turning or meeting enemies. But such tests would resemble synthetic benchmarks, sort of 3DMark.
But this utility is advertised as a benchmark of gaming performance... One more point. Even with fully automated tests and a minimized human factor, performance results may sometimes be anomalous and difficult to explain, for example because of rare bugs in drivers or in a game. What can we expect from such inaccurate tests? In this case, possible explanations of such anomalies may be obscured by the tester's errors and human weaknesses. Moreover, I'm sure these will rank first in both influence and frequency. I'd like developers to understand what users, people interested in 3D graphics and in the performance of high-tech games on various hardware platforms and with various settings, expect from them. At least the most technologically advanced games should get built-in benchmarking tools that are convenient to use and offer maximum automation. After all, developers are interested in this as well. Firstly, they need convenient tools to analyze performance too. Secondly, such tools may facilitate cooperation with game testers at various debugging stages (public beta versions for testing, for example, F.E.A.R.). Thirdly, using high-tech games as benchmarks among 3D enthusiasts may raise their popularity. And the most important thing: it's not too difficult for developers to implement convenient ways to benchmark performance in games; they only need to want to.

For now, we'll continue using applications that have built-in benchmarks and offer convenient ways of using them. FRAPS-like utilities can be used only in some isolated cases: you should run the tests several times, check whether the results are correct, use game demos where possible, and publish information on how you carried out your performance tests. You should mention that you used FRAPS, a method that is affected by the human factor and that reduces the trustworthiness of the results obtained. This method won't do for constant use.

Alexei Berillo aka SomeBody Else (sbe@ixbt.com)

July 28, 2006

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.