
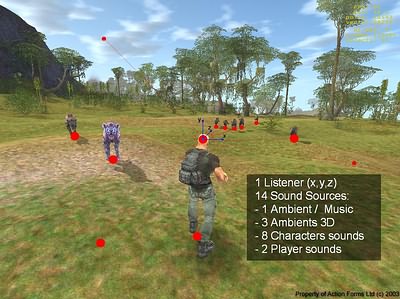

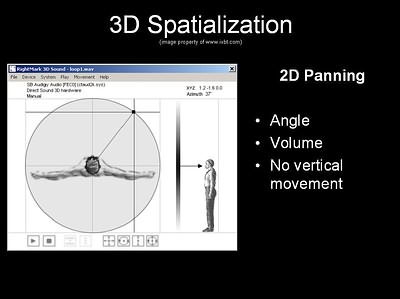

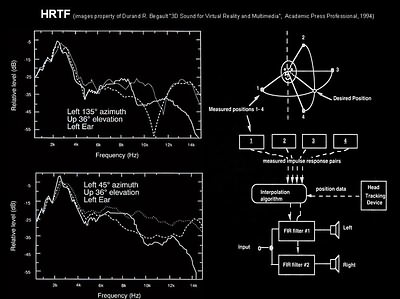

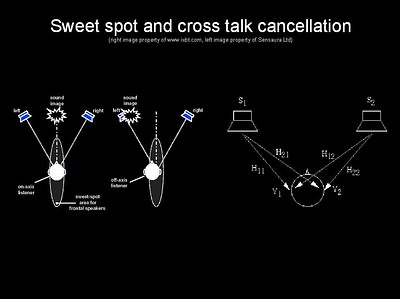

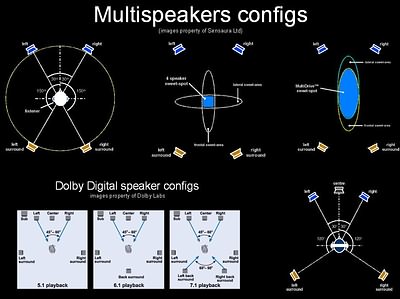

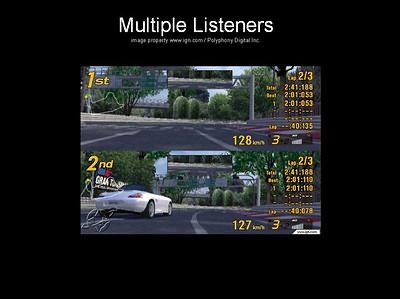

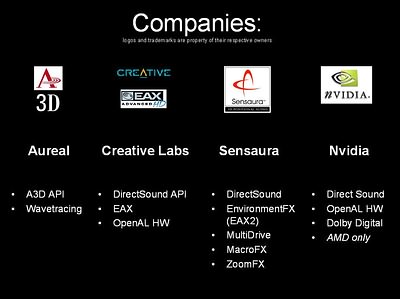

This article is based on a presentation I gave at the Game Developers Conference in Moscow in 2003. The author is a professional developer of 3D sound engines for modern games.

3D Sound vs. Surround Sound

In game development, sound has been treated as less important than graphics. Developers spend more time on new features and effects for 3D graphics, and it is difficult to persuade people to spend time and money on high-quality sound. Likewise, most users would rather buy a new 3D accelerator than a new sound card. However, the situation is changing: both users and developers now pay more attention to sound, and modern projects dedicate up to 40% of their budget, time and manpower to it. Audio chip makers and 3D sound developers have done their best to convince users and application developers that good 3D sound is an integral part of a modern computer. Sound used to be stereo, then it became 3D, and now we have multichannel solutions: 4-channel, 5.1 and 7.1. Let's take a closer look at 3D sound and at how it resembles and differs from multichannel solutions.

The concept of 3D sound means that sound sources are located in the 3D space around the listener. Each sound source represents an object in the virtual game world that can produce sound. A typical scene in a 3D shooter, taken from Vivisector: Beast Inside (Action Forms), contains the Listener and a number of Sound Sources. Some of the sources are stereo (such as background music; in this particular game, wind and jungle sounds are the main ambient sounds), 8 sources are produced by monsters, 1 source is dedicated to the player (shots, steps), and 3 are ambient sounds (in this case insects, birds and so on). 3D sound deepens immersion in the game's virtual world by making what happens in the scene more realistic. Various technologies are used to emulate or exaggerate the behavior of sound in the real world.
Examples include reverberation, reflected sounds, occlusion, obstruction, distance modeling (how far a sound source is from the listener) and many other effects.

3D Sound technologies: positioning

Everyone perceives sound differently (it depends on ear shape, age and psychological state), so there can be no single opinion about the sound quality of a particular sound card or the effectiveness of a given 3D technology. Sound reproduction depends a lot on the sound card and speakers, as well as on the audio engine of a given game.

Let's see how the 3D sound effect is created, starting with 2D panning. This technique was used as early as id Software's DOOM. Every mono sound source is played as stereo, and its position is conveyed by changing the volume of the left or right channel. Such a system has no vertical positioning, but the sound can be altered a little (for example, by filtering out high frequencies) when it comes from behind the listener, because in that case it is heard slightly muffled.

Now the hardware implementation. A sound card can emulate the position of a sound source on two speakers or headphones using an HRTF (Head Related Transfer Function), a transfer function that models how sound is perceived with two ears in order to determine the positions of sources in space. Filtering and other transformations emulate human auditory perception. Our head and body are obstacles that modify the sound, and an ear hidden from the sound source receives an altered signal; the brain then decodes these signals to determine the position of the sound source in space.

On the left you can see three HRTFs (sound source position: azimuth 135 degrees and 36 degrees) for three different people, for the left and right ears respectively. All of them follow certain regularities. In most cases HRTFs are recorded using special methods, with special stereo microphones inserted into the ears of a person or of a dummy head (KEMAR).
Sensaura, in particular, uses synthetic HRTFs built on the same regularities you can see on the slide (for example, the peak at 2500 Hz and the dip at 5000 Hz for a given point in space). Some other companies use averaged HRTFs. The system is essentially a pair of FIR (Finite Impulse Response) filters, and the HRTF is their transfer function. Since HRTFs are discrete and it is too costly to store megabytes of them, the actual position of a source is computed by interpolating between HRTFs.

Downsides of HRTF

1. Sound can be badly distorted!
2. Processing can be pretty slow.
3. If sound sources are stationary, their positions can't be determined precisely, because the brain needs movement (of the source, or subconscious micro-movements of the listener's head) to locate a sound source in space. People typically turn their heads towards unexpected sounds, and while the head is turning the brain gets additional information about the sound's position. If the sound source does not produce the particular frequencies that distinguish the front HRTF from the rear one, the brain ignores such sound; instead, it falls back on memory and compares the sound with what it knows about the location of familiar sound sources in the hemisphere.
4. Headphones give the best results. Headphones make it simpler to deliver one signal to one ear and another signal to the other. However, some people do not like headphones, even light wireless models. Besides, a sound source seems much closer when the player wears headphones, and this should also be taken into account.

Speaker systems avoid some of the problems of headphones, but bring troubles of their own. First, it is not clear how to use speakers for binaural listening, that is, when one part of the signal goes to one ear and the other part to the other ear after the HRTF transformation.
When we connect speakers instead of headphones, the right ear also catches the sound meant for the left one, and vice versa. One way out is crosstalk cancellation (CC). In the so-called sweet spot a listener can hear all 3D effects perfectly, while elsewhere the sound is distorted. The need to sit in the right position, i.e. in the sweet spot, brings new problems. The wider the sweet spot, the better, which is why developers keep looking for new ways to expand it.

In a multi-speaker system (4.1, 5.1) the sound is distributed among speakers located around the listener's head, and sound coming from one speaker or another is positioned so that the listener can locate it. In principle, ordinary panning is enough: there are several streams (depending on the number of speakers) which play simultaneously on all speakers but at different volume levels, hence the effect. For example, Dolby Digital uses 6 and 8 streams in the 5.1 and 7.1 configurations respectively.

The Sensaura MultiDrive and Creative CMSS (Creative Multispeaker Surround Sound) technologies reproduce sound using HRTFs on 4 or more speakers (every sound area uses its own crosstalk cancellation algorithm). The front and rear pairs of speakers form the front and rear hemispheres. Since the sound areas are based on HRTFs, each sweet spot allows good perception of sources located on either side of the listener and of sources located on the front/rear axis. As the coverage angle is fairly wide, the sweet spot is large enough. Without crosstalk cancellation, positioning of sound sources is impossible. Since MultiDrive uses HRTFs on 4 speakers, CC algorithms have to be applied to all 4 of them, which requires a lot of computational power. Using HRTFs on the rear speakers also makes it necessary to position the rear speakers accurately relative to the front ones. Front speakers are usually placed near the monitor.
A subwoofer can be put somewhere on the floor in a corner. As for the rear speakers, people place them wherever it suits them; not everyone wants, or has room, to put them behind the listener. Also remember that HRTF and CC calculations for 4 speakers take a lot of processing power. Aureal, for instance, uses panning algorithms for the rear speakers, because then the restrictions on their positioning are not as strict. NVIDIA uses Dolby Digital 5.1 for 3D sound: once positioned, the whole sound stream is encoded into the AC-3 format and sent in digital form to an external decoder (for example, a home theater).

Min/Max Distance, Air Effects, Macro FX

One of the main features of a sound engine is distance effects. The farther the sound source, the quieter it is. One of the simplest models lowers the volume with distance: the sound designer assigns a minimum distance beyond which the sound starts fading. While the source is within this distance, only its position changes; once it crosses this border, it loses half of its strength (-6 dB) with each doubling of the distance. It keeps getting quieter until it reaches the maximum distance, where it is too far away to be heard. Beyond this distance the sound could keep fading until it reaches zero volume, but it is better to switch such sounds off to free resources. The larger the maximum distance, the longer the sound will be heard. In most cases the volume follows a logarithmic law. This lets the designer distinguish between loud and quiet sounds: sound sources can have different Min and Max Distances. For example, a mosquito can no longer be heard at a distance of 50 cm, while the sound of an airplane dies out only at a distance of several kilometers.
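
The min/max distance model can be illustrated with a short sketch. It is not taken from any particular API: it assumes an inverse-distance law (about -6 dB per doubling of distance past the minimum distance), and the function names, the `rolloff` parameter and the choice to mute the source at the maximum distance are assumptions made for this example.

```python
import math


def distance_gain(distance, min_distance=1.0, max_distance=100.0, rolloff=1.0):
    """Linear gain (0..1) of a source at `distance` meters from the listener.

    Assumed model: full volume inside min_distance, inverse-distance
    attenuation beyond it, and silence from max_distance onwards so the
    engine can stop the voice and free its resources.
    """
    if distance >= max_distance:
        return 0.0  # too far to be heard: candidate for being switched off
    d = max(distance, min_distance)  # no change in volume inside min_distance
    return min_distance / (min_distance + rolloff * (d - min_distance))


def gain_to_db(gain):
    """Convert a linear gain to decibels (minus infinity for silence)."""
    return -math.inf if gain <= 0.0 else 20.0 * math.log10(gain)


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 4.0, 8.0, 100.0):
        print(f"{d:6.1f} m  gain={distance_gain(d):.3f}  {gain_to_db(distance_gain(d)):.1f} dB")
```

A quiet source such as the mosquito would get a small `min_distance` and `max_distance`, a loud one such as the airplane much larger values; the curve itself stays the same.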
A3D EAX HF Rolloff

The A3D API extends the DirectSound3D distance model by modeling high-frequency attenuation, as in the real world, where high frequencies are absorbed by the atmosphere according to a logarithmic law: approximately 0.05 dB per meter (for the chosen frequency, 5000 Hz by default). In foggy weather the air is denser and high frequencies fade out more quickly. EAX3 offers lower-level control for modeling atmospheric effects: two reference frequencies are assigned, one for low and one for high frequencies, and their effect depends on the environment parameters.

MacroFX

Most HRTF measurements are carried out in the far field, which simplifies the calculations. But if sound sources are located within 1 meter of the listener (in the near field), the HRTF no longer works adequately. The MacroFX technology was developed to reproduce sounds coming from near-field sources. The MacroFX algorithms apply near-field effects so that a sound source seems to be very close to the listener, as if it were moving from the speakers towards the listener and even into his or her ears. The effect is based on accurate modeling of sound-wave propagation around the listener's head from all positions in space, and on transforming this data with an efficient algorithm. The algorithm is integrated into the Sensaura engine and controlled through DirectSound3D, i.e. it is transparent to application developers, who can use it to create a good many new effects. For example, in a flight simulator the listener, as the pilot, can hear air traffic controllers as if wearing headphones.

Doppler, Volumetric Sound Sources (ZOOM FX), Multiple Listeners

The Doppler effect is observed when the wavelength changes as the source approaches or moves away. When the sound source is approaching, the wavelength shortens, and when it is moving away, the wavelength grows, in accordance with a specific formula.
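
As an illustration, the standard textbook Doppler formula, f' = f (c + v_listener) / (c - v_source), can be sketched as follows. This is not the code of any particular sound API; the function names, the clamping of speeds and the speed of sound of 343 m/s are assumptions made for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 degrees C


def radial_speed(velocity, from_pos, to_pos):
    """Component of `velocity` along the direction from from_pos to to_pos."""
    direction = [t - f for f, t in zip(from_pos, to_pos)]
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0.0:
        return 0.0  # coincident positions: no defined direction, no shift
    return sum(v * d for v, d in zip(velocity, direction)) / length


def doppler_frequency(freq, src_pos, src_vel, lis_pos, lis_vel, c=SPEED_OF_SOUND):
    """Frequency heard by the listener for a source emitting `freq` Hz."""
    v_s = radial_speed(src_vel, src_pos, lis_pos)  # source speed towards listener
    v_l = radial_speed(lis_vel, lis_pos, src_pos)  # listener speed towards source
    limit = 0.99 * c  # clamp so the formula stays finite near the speed of sound
    v_s = max(-limit, min(limit, v_s))
    v_l = max(-limit, min(limit, v_l))
    return freq * (c + v_l) / (c - v_s)


if __name__ == "__main__":
    listener, still = (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)
    rocket = (100.0, 0.0, 0.0)
    print(doppler_frequency(440.0, rocket, (-34.3, 0.0, 0.0), listener, still))  # approaching: higher pitch
    print(doppler_frequency(440.0, rocket, (34.3, 0.0, 0.0), listener, still))   # receding: lower pitch
```

Only the velocity components along the line between source and listener matter, which is why a source passing by at a right angle produces no shift at the moment of closest approach.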
Racing and flight simulators benefit most from the Doppler effect. In shooters it can be used for rockets, lasers or plasma, i.e. for any objects that move very fast.

Volumetric Sound Sources

Modern systems for reproducing positioned 3D sound use HRTFs to form virtual sound sources, but these synthetic virtual sources are points. In real life, sound mostly comes from large sources or from composite ones consisting of several individual sound generators. Large and composite sound sources allow for more realistic effects than point sources. A point source works well for large but distant objects, for example a moving train. But in real life, when the train approaches the listener it is no longer a point source. The DS3D model treats it as a point source anyway, and the picture becomes less realistic (it sounds like a small train nearby rather than a huge one). Aureal was the first to implement volumetric sound, in its A3D API 3.0. Sensaura followed with ZoomFX. The ZoomFX technology solves this problem by defining a large object as a collection of several sound sources (for a train, the composite source can consist of the noise of the wheels, the engine, the carriage couplings etc.).

3D Sound Technology: wavetracing vs reverbs

In 1997-1998 every chip maker settled on the technologies it considered promising. Aureal, the leader at the time, staked on maximum realism in games with Wavetracing. Creative decided that it was better to use precalculated reverberation and developed EAX. Creative bought Ensoniq / EMU, a developer and manufacturer of professional studio effect processors, in 1997, which is why it already had the reverb technology. When Sensaura appeared on the market, it used EAX as a base, named its version EnvironmentFX and started on other technologies: MultiDrive, ZoomFX and MacroFX.
NVIDIA (developer of components for the Microsoft Xbox) was the last to come onto the scene; it implemented unique real-time Dolby Digital 5.1 encoding for 3D sound positioning.

Wavetracing

To create the effect of full immersion in the game, it is necessary to calculate the acoustic environment and its interaction with the sound sources. As sound propagates, the waves interact with the environment. The sound waves can reach the listener in different ways:

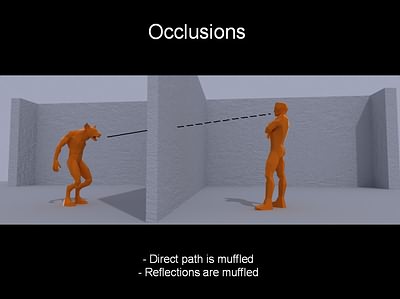

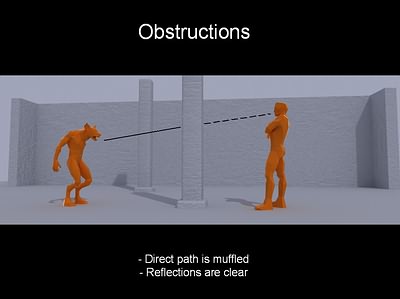

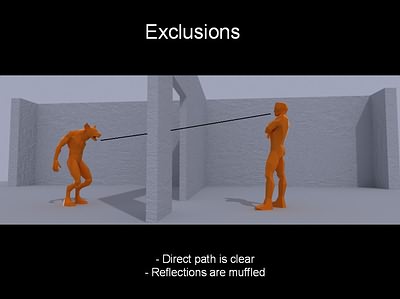

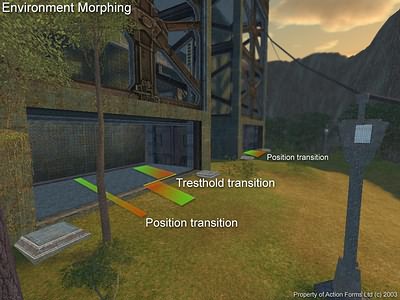

Aureal's Wavetracing algorithms analyze the geometry describing the 3D space to determine, in real time, how waves propagate after being reflected by and passing through passive acoustic objects in the 3D environment. The geometry engine in the A3D interface is a unique mechanism for modeling sound that is reflected by or passes through obstacles. It processes data at the level of geometric primitives: lines, triangles and quadrangles (audio geometry). An audio polygon has its own location, size, shape and material properties. Its shape and its location relative to the sound sources and the listener determine how each individual sound is reflected by, passes through or goes around the polygon. The material properties can range from transparent to sound to entirely absorbing or reflecting.

The graphics geometry database (what is displayed on the monitor) can be passed through a converter which turns all graphics polygons into audio polygons while a game level is loading. Global values can be set for the parameters of reflecting or occluding objects. It is also possible to process the graphics geometry database in advance, by running the polygon conversion algorithm offline, storing the audio geometry database in a separate file and loading that file together with the game level. As a result, the sound becomes much more realistic: 3D sound is combined with the acoustics of rooms and environments and an accurate presentation of audio signals to the listener. The environment modeling implemented by Aureal has no equal, even compared with the latest EAX versions from Creative. However, the number of hardware streams that Wavetracing can assign to calculating reflections is limited, so full realism is still a long way off. For example, the processing power is not sufficient for late reflections, not to mention graphics processing.
Besides, the Wavetracing technology is not fast, and implementing it is very costly. That is why you shouldn't disregard the prerendered sound textures of the EAX technology. After all, 3D graphics does not yet use real-time rendering based on ray tracing either.

Occlusions

The EAX technology and its reverberation model will be described a little later. For now, let me dwell on occlusion effects. In principle, occlusion can be done simply by turning down the volume, but a more realistic effect is achieved with a low-pass filter.

In most cases one type of occlusion is enough: the sound source is located behind a solid obstacle. The direct path is muffled, and the degree of filtering depends on the geometry (thickness) of the wall and the material it is made of. Since the sound source and the listener have no direct contact, the echo from the source is muffled according to the same principle.

For maximum realism, the API developers at Creative use one more concept, Obstruction: the direct path is muffled because there is no direct contact with the listener, but the source and the listener are in the same room, and late reflections reach the listener in their original form.

One more type is Exclusion. The source and the listener are in different rooms but have direct contact through an opening: the direct path reaches the listener, but the reflected sound cannot entirely pass through the opening and is distorted (depending on the thickness, shape and material properties).

No matter how the effects are implemented (with Aureal A3D, Creative Labs EAX or manually in your own audio engine), it is necessary to trace the geometry (all of it or only the sound-related part) to find out whether there is direct contact with the sound source. This is a heavy blow to performance. That is why in most cases it is better to build simplified geometry for sound (especially for shooters, 3D RPGs and similar games that aim for as much realism as possible).
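
The direct-contact check against simplified sound geometry, followed by low-pass filtering of an occluded source, can be sketched as below. This is only an illustration, not how A3D or EAX work internally: it assumes the simplified geometry is a list of axis-aligned boxes, uses a basic one-pole low-pass filter, and all function names are made up for the example.

```python
def segment_hits_box(p0, p1, box_min, box_max):
    """Slab test: does the segment p0 -> p1 pass through the axis-aligned box?"""
    t_enter, t_exit = 0.0, 1.0
    for a, b, lo, hi in zip(p0, p1, box_min, box_max):
        d = b - a
        if abs(d) < 1e-12:  # segment is parallel to this pair of faces
            if a < lo or a > hi:
                return False
            continue
        t0, t1 = (lo - a) / d, (hi - a) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter, t_exit = max(t_enter, t0), min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True


def is_occluded(source, listener, boxes):
    """True if any box of the simplified sound geometry blocks the direct path."""
    return any(segment_hits_box(source, listener, lo, hi) for lo, hi in boxes)


def low_pass(samples, alpha):
    """One-pole low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1]), 0 < alpha <= 1.

    Smaller alpha removes more high frequencies (a thicker or denser wall).
    """
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


if __name__ == "__main__":
    wall = ((4.0, -5.0, 0.0), (5.0, 5.0, 3.0))  # (min corner, max corner)
    source = (0.0, 0.0, 1.0)
    print(is_occluded(source, (10.0, 0.0, 1.0), [wall]))  # listener behind the wall
    print(is_occluded(source, (2.0, 0.0, 1.0), [wall]))   # listener in front of it
```

A game would run the check per source, usually not every frame, and pick `alpha` from the material of the blocking geometry.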
Fortunately, such geometry is almost always built anyway for collision detection, so that the whole geometry around the player in the room does not have to be traced. We can therefore reuse the same geometry and make it a bit more detailed for sound.

Environments morphing

One more solution from Creative Labs was launched in 2001 together with EAX3: an algorithm for gradually transforming the reverb parameters of one environment into those of another. The picture demonstrates two practical implementations.

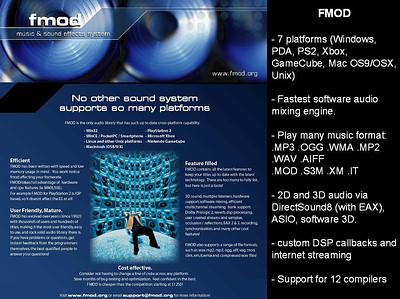

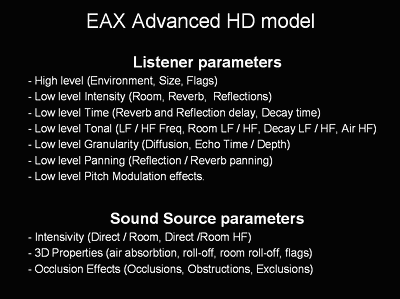
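
The gradual transformation of one set of reverb parameters into another can be sketched as a plain linear blend. This is an assumption made for illustration: the actual EAX morphing routine may treat individual parameters differently, and the parameter names and preset values below are invented, not real EAX presets.

```python
def morph_environment(env_a, env_b, factor):
    """Blend two reverb parameter sets; factor 0.0 gives env_a, 1.0 gives env_b."""
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must be within 0..1")
    if env_a.keys() != env_b.keys():
        raise ValueError("both environments must define the same parameters")
    return {name: (1.0 - factor) * env_a[name] + factor * env_b[name]
            for name in env_a}


# Hypothetical presets with illustrative values only.
OUTDOOR = {"decay_time_s": 1.5, "reflections_delay_s": 0.006, "room_hf": -1000.0}
STONE_CORRIDOR = {"decay_time_s": 2.7, "reflections_delay_s": 0.014, "room_hf": -300.0}

if __name__ == "__main__":
    # Walking from the open air into the corridor in five steps.
    for step in range(5):
        print(morph_environment(OUTDOOR, STONE_CORRIDOR, step / 4))
```

In a game the factor would typically be derived from the listener's position between the two environments, for example the distance walked through a doorway.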

Environments morphing is one of the most important functions related to reverberation. It makes creating new reverb presets a breeze. Even if gradual transition is not used, you can use this function to build an averaged environment: for example, take Outdoor and Stone Corridor, set the morphing factor to 0.5, and you get something in between the two. Before Environments morphing was developed, games in which the parameters could not be changed gradually (EAX1 and EAX2 had fixed presets), e.g. Carnivores 2, created this effect as follows. An intermediate environment was picked from the 25 available presets (mostly by ear). For example, there is the Cave preset and a gradual transition to the Mountains preset is required. After listening and adjusting some of the parameters, Stone Corridor is chosen as something intermediate. Now you can avoid all this thanks to environment morphing.

Interfaces and API

Now let's talk about choosing an API for programming the audio engine. The choice is actually not wide: Windows Multimedia, DirectSound, OpenAL, Aureal A3D.

Unfortunately, the Aureal A3D drivers are still buggy, and A3D works poorly on the modern Windows 2000 and XP.

The Windows Multimedia system is the basic sound reproduction system inherited from as far back as Windows 3.1. It is rarely used for games because of the high latency caused by its large buffer. However, WinMM is used with some semiprofessional cards which have specially optimized WDM drivers.

OpenAL is Loki Entertainment's cross-platform API, an analog of OpenGL. It is promoted by Creative Labs as an alternative to DirectSound. The idea is good but the implementation is poor. Besides, Loki Entertainment has recently gone bankrupt. We hope that a new alternative sound API will appear soon, because OpenAL is a real nightmare for a programmer.
However, NVIDIA has recently released a hardware OpenAL driver for its nForce chipsets, which was a real surprise.

DirectSound and DirectSound3D are the best APIs at the moment and have no equal. It is a bit pretentious, but after all, it plays sound, and nothing more is needed.

Besides these hardware APIs (APIs that have hardware drivers rather than emulating playback via DirectSound or WinMM), there are so-called wrappers (programming interfaces that build their own interface on top of ready-made software/hardware interfaces). As a rule, every game has its own wrapper interface. There are a lot of such API wrappers (they have no real hardware support): Miles Sound System, RenderWare Audio, GameCoda, FMOD, Galaxy, BASS, SEAL.

Miles Sound System is the most famous one: 2700 games use this wrapper. Its developers licensed Intel's RSX technology and now offer it as an alternative for software 3D sound. There are a lot of features available, but it is not without weak points: it covers only Win32 and Mac and has a very high license price.

Galaxy Audio was developed for Unreal and is used in all Unreal-engine based games. But Unreal 2 was built on OpenAL, so Galaxy can now be considered dead.

GameCoda and RenderWare Audio, from Sensaura and RenderWare respectively, are roughly on a par with each other. Both support PC, PS2, GameCube and Xbox and offer many features, but the license price is still too high.

Finally, FMOD. It has arrived only recently but already holds one of the leading positions thanks to the wide choice of features and technologies supported in the API. Firelight Multimedia (Melbourne, Australia), which was founded in 1995 and had only one person on staff, Brett Paterson, paid much attention to the audio tracker formats MOD, XM, IT and S3M, and the first version of the API (still for DOS) was released at that time. It was a free API for noncommercial use in demos and other interactive applications.
Since playing back tracker formats requires very fast software mixing code, FMOD holds the leading position among the APIs: its mixing code is the fastest. When the first cards with hardware 3D sound came onto the scene, they brought the new problem of combining hardware and software 3D sound and therefore drove support for new technologies: Aureal A3D, Creative Labs EAX 1 and EAX 2, and a software scene geometry manager for A3D compatibility. In 2000 the company was renamed Firelight Technologies and grew to 4 people. They first ported the code to the PS2 and Xbox consoles; Firelight Technologies was almost the first to offer audio middleware for consoles. Now the company aims to cover as many platforms as possible with a single interface (as compatible as possible, emulating functions in software where they are not available on a given platform).

As of 2003, this is the only API supporting 7 platforms: PC (Win32), PlayStation 2, Xbox, GameCube, Linux, Mac OS (OS X) and PDA (WinCE). It also offers the Multiple Listeners technology, support for 12 compilers and languages from Visual Basic to Assembler, the fastest software mixer code and a fast MP3 decoder (unfortunately, an additional license from Thomson Multimedia is needed to use MP3 in commercial applications, but there are alternatives: OGG or WMA). And that is not all FMOD has. At http://www.fmod.org/ you can look through the full list of supported features and the license prices. It is no secret that they are currently developing an FMOD hardware driver for several Windows platforms and mobile phones (PDA).

At present, FMOD holds a firm position on the market. "Nice to see that a lot of the things I wanted our Engineers to develop into A3D actually got done by an outside company," says David Gasior, former Technology Evangelist at Aureal. I trust David Gasior and agree with what he says about FMOD.
This is not advertising but the real state of the current audio API market.

EAX (Environmental Audio Extensions)

EAX (Environmental Audio Extensions) from Creative Labs is neither an API nor a library but a set of extensions to the DirectSound3D API. Implementing EAX in a game is simple for programmers, but adjusting the parameters takes much more time. The EAX system distinguishes parameters set for the Listener from those set for Sound Sources; we will call them Listener parameters and Sound Source parameters respectively and deal with them separately.

In 1997-1998 Creative launched EAX v1. It was a primitive set of 26 presets, 3 parameters for finer adjustment of the Listener and 1 parameter for the Sources. EAX 1 was soon followed by EAX2 (14 parameters for the Listener and 13 for the Sources, including occlusions), which effectively became the standard for games. The Interactive Audio Special Interest Group (http://www.iasig.org/) created the IASIG Level 2 standard, which was almost entirely based on EAX2 but still had some disadvantages. The problem is that every company that implements IASIG Level 2 (Microsoft DirectSound 8, Sensaura EnvironmentFX, Aureal A3D) makes its own additions and may rearrange or rename the parameters, which makes tuning and porting a bit inconvenient. EAX2 is described in detail in the SDK at http://developer.creative.com/.

EAX Advanced HD

In 2001 Creative announced the Audigy sound card and new EAX functions named EAX Advanced HD. They cover 25 (!) parameters for fine adjustment of the Listener and 18 parameters for the Sources, including two new occlusion effects. In EAX Advanced HD (let's call it simply EAX3) the Listener parameters are divided into two groups: high-level and low-level.

Listener Parameters

The high-level parameters include, first, Environment: 26 presets backward compatible with EAX 1 and 2.
The second parameter is Environment Size, from 1 to 100 meters. The third group consists of flags for automatic calculation of low-level parameters depending on the environment size; it covers automatic calculation of delays, the decay time of late reflections etc. You can learn the details from the SDK and documentation; here we will just look at the approach.

The low-level parameters are divided into several subgroups depending on what they do. The first one covers volume levels: the level for all sounds (Room, the master volume), for 1st order reflections (Reverb) and 2nd order reflections (Reflections), and the Room Roll-off Factor.

The second subgroup includes the time parameters: Reverb Delay, Reflections Delay and Reflections Decay Time (in seconds).

The third subgroup consists of the tone parameters. They can help the player tell what the walls are made of, how dense the air in the environment is etc. Every material reflects and absorbs certain frequencies, and these parameters emulate such absorption and reflection. They are reference frequencies (LF - Low Frequency and HF - High Frequency) that bound the range within which changes are made. For example, metallic walls reflect more frequencies than wooden ones, and the HF level will be lower for them than for emulation of wood. For example, the workshop has the following parameters: 362 Hz LF and 3762 Hz HF; a wooden room has the LF at 99 Hz and the HF at 4900 Hz. There are also parameters controlling the Room LF and HF levels (in dB). This subgroup also contains the Decay factors for LF and HF and the Air Absorption HF factor.

The fourth subgroup controls the granularity of the reflected sound, though I prefer to call it density. Here we have Environment Diffusion (0...1), Echo Depth and Echo Time, i.e. how often the original sound repeats while fading away. For example, Echo Time is 250 ms by default, which means that the echo repeats 4 times per second. So this group controls density and, therefore, realism.
One of the most interesting subgroups is the fifth one, with the panning effects. They allow for much more dynamic reflected sound. If you know how the rooms are arranged, you can make the program calculate the direction most reflections come from, for example by taking into account how the nearby walls are positioned. Or imagine that the player is in an open space, i.e. there is no echo, but he can hear sound reflected from the mouth of a cave not far away. The program can pan the reflected sound as if it comes from the cave mouth using the Reverb Pan (1st order reflections) and Reflections Pan (2nd order reflections) parameters. Each is a vector with coordinates (x, y, z) in the range 0 to 1. All the values are 0 by default, which means that reflections come from all directions. A value of 1 means the reflections come from only one direction, and the maximum value is limited to 0.7 for all coordinates.

The last subgroup is the Pitch Modulation effects. These effects are not typical of a real environment. They are meant to carry an emotional load, for example to make you feel dizzy, intoxicated etc. Here we have Modulation Depth (0...1) and Modulation Time (0.4...4 seconds).

Sound Source Parameters

Like the Listener parameters, the Source parameters are divided into several subgroups. The first one is again volume level control. It includes the Direct path volume and Direct HF (the HF absorption level), as well as the volume of 1st order reflections (Room) and the HF absorption for reflected sound (Room HF).

The second group covers the 3D properties of the sound sources: Roll-off Factor, Room Roll-off Factor, Air Absorption Factor and flags for automatic calculation of some parameters.

The third group is the occlusion effects. The occlusion types were covered in the first part; here I want to draw your attention to some specifics. These effects are simply filters applied to the direct path sound and/or to reflections.
Occlusion (the listener and the source are separated by a solid obstacle that the sound has to get past) has 4 parameters: Occlusion, which is actually the effect level (-10000 to 0 mB, i.e. hundredths of a decibel), Occlusion LF Ratio (0...1), Occlusion Room Ratio and Occlusion Direct Ratio (the last two control the degree of filtering for the reflections and the direct path). Obstruction (the listener and the source are in the same room, the direct path is blocked, but the reflected sound reaches the listener) has 2 parameters: Obstruction (effect level, -10000 to 0 mB) and Obstruction LF Ratio. Finally, Exclusion (the listener and the source are in different rooms but there is an opening in the wall; the direct sound reaches the listener completely, the reflected sound only partially) also has two parameters, like Obstruction.

EAX4 (EAX Advanced HD version 4)

In March 2003 Creative announced EAX Advanced HD v4, which was scheduled to become available at the end of April or beginning of May. Unfortunately, Creative does not permit publishing a detailed description of EAX4 with technical details. EAX3 differs from EAX4 only conceptually. EAX Advanced HD version 4 has the following new elements:

Studio quality effects

EAX4 presents 11 studio quality effects. You can use any of the effects listed below for 2D and 3D sound sources.

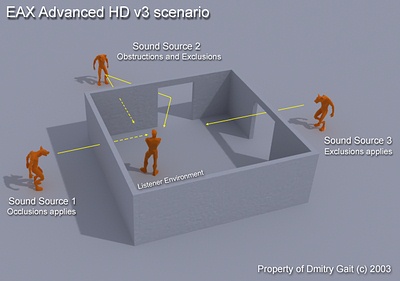

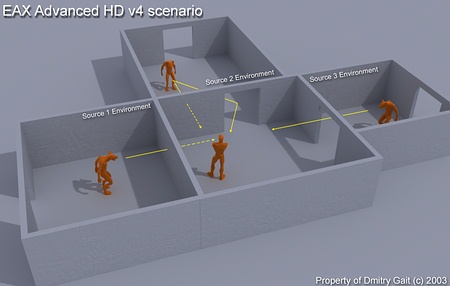

At http://www.harmony-central.com/Effects/effects-explained.html you can get more details on how some of the effects work. These effects give free rein to your imagination. For example, the Flanger effect can be applied to a machine gun in real time to suggest overheating or faster firing without changing the audio file, and Distortion with Equalizer can emulate a transmitter. You can come up with many ideas, but there is one problem: the effects are supported only by the Audigy / Audigy 2. However, some of them are built into DirectSound 8 or can be emulated in software.

Multiple effect slots

Another announced feature is multiple effect slots, into which you can load several of the effects mentioned. For example, you can hear sound in several environments simultaneously, or add Environment Reverb to the Distortion and Equalizer of the transmitter to create the illusion of a transmitter in a room with an echo.

Multiple Environments

Imagine the following scenario. 3 sound sources surround the listener. Source 1 is heard in the environment with the Occlusion effect. Source 2 has Obstruction and Exclusion applied. The third one is coupled with Exclusion. All the parameters can be set with four EAX3 functions: one for the listener and 3 for the Occlusion, Obstruction and Exclusion effects (additional parameters can be set together with the main ones).

With EAX4, each source and the listener have their own environments. The sound from each source propagates both in its own environment and in the listener's. Occlusion, Obstruction and Exclusion are applied both to the sources' environments and to the listener's. Thus we get the result of the sources interacting with their own environments plus their interaction with the listener's.
With this setup you have to call the EAX4 functions a lot: you must define the environments for the sources and the listener, and the effects for each source. In return the sound becomes more realistic: the sources sound as if they are located in different rooms, whereas in EAX3 they were simply in a room other than the listener's.

Zoned effects

The concept of a zone is very similar to that of a Room or Environment. Creative Labs recommends dividing the visual geometry into several zones, each with its own properties and its own reverb preset. If the game knows where the listener is and which sources are present, the respective parameters can be set automatically. The space should be divided into zones in the level editor, where the audio designer can assign zone identifiers and reverb parameters. EAX Advanced HD 4 ensures more gradual transitions between the zones. As in EAX3, the listener crossing the border between zones triggers the morphing operation. But now morphing can be applied to parameters already held in the slots, so they do not need to be loaded every time.

The slide shows 3 zones (3 environments). Zone 1 is a cylindrical room, Zone 2 is a small low room and Zone 3 is a long corridor. Each has its own reverb effect. We load 3 sets of parameters into the slots and morph them when a gradual transition is needed (see Environment Morphing). The Reverb / Reflections panning and Occlusions effects are especially interesting. You can load several different Environment Reverbs for each Zone into the same slots and then morph one into another for thoroughly realistic sound. The sound source is located in Zone 3 and the listener is in Zone 2. The Zones are connected by a doorway (Exclusion effect: the direct path is clear and the reflections are muffled). So the direct sound and the muffled reflections pass from Zone 3 into Zone 2, reflect there and reach the listener. Also, there is Zone 1 behind the listener.
There are no sound sources in it at the moment (if there were, their direct and reflected sound would reach the listener and interact with Zone 2). But we can adjust the panning vectors so that the reflections from Zone 2 travel into Zone 3, interact there and return to the listener. The sound scene would then be very realistic. That is what we should expect in the near future.

The implementation will certainly be much more difficult than the theory. The main problems will be to determine where the sources are, to load the parameters of the nearest zones correctly, and to trace each source to determine Occlusions, Obstructions or Exclusions. Of course, it is not necessary to use all the effects that EAX4 offers. Your project may need only realistic Environment Morphing and the Occlusion effect.
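The zone transition discussed in the Zoned effects section, where reverb parameters are morphed as the listener crosses the border between two zones, can be sketched as follows. This is a minimal illustration in Python under stated assumptions: it uses plain linear interpolation, which is not necessarily how Creative's morphing works, and the `ReverbPreset` fields, the preset values and the `border_blend` helper are hypothetical rather than taken from the EAX SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReverbPreset:
    """Illustrative subset of reverb parameters (field names are made up)."""
    decay_time_s: float
    reverb_gain: float  # linear, 0..1
    diffusion: float    # 0..1


def morph(a: ReverbPreset, b: ReverbPreset, t: float) -> ReverbPreset:
    """Blend preset a into preset b; t=0 returns a, t=1 approaches b."""
    t = min(1.0, max(0.0, t))  # clamp the morph factor to 0..1

    def lerp(x: float, y: float) -> float:
        return x + (y - x) * t

    return ReverbPreset(
        lerp(a.decay_time_s, b.decay_time_s),
        lerp(a.reverb_gain, b.reverb_gain),
        lerp(a.diffusion, b.diffusion),
    )


def border_blend(listener_x: float, border_x: float, width: float) -> float:
    """Map the listener position inside a transition band to a 0..1 factor.

    The band is centred on the zone border and is `width` units wide.
    """
    start = border_x - width / 2
    return min(1.0, max(0.0, (listener_x - start) / width))


# Made-up presets for two of the zones from the slide.
SMALL_ROOM = ReverbPreset(decay_time_s=0.5, reverb_gain=0.3, diffusion=0.8)
CORRIDOR = ReverbPreset(decay_time_s=2.5, reverb_gain=0.5, diffusion=0.4)

if __name__ == "__main__":
    # Walk the listener from Zone 2 through the doorway into Zone 3.
    for x in (7.0, 9.0, 10.0, 11.0, 13.0):
        t = border_blend(x, border_x=10.0, width=4.0)
        print(x, t, morph(SMALL_ROOM, CORRIDOR, t))
```

Because both presets stay loaded, the game only has to recompute the blend factor each frame as the listener moves, which matches the point above that parameters in the slots do not need to be reloaded on every transition.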


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.