|

||

|

||

| ||

It's been almost two months since the first version of the universal RightMark Memory Analyzer 2.4 test was officially announced. It proved to be perfectly suitable for detailed study of low-level platform parameters. But as time went by, hardware and software advanced, and so did RightMark Memory Analyzer. Soon after, a modified second version appeared — RightMark Memory Analyzer 2.5, where the main focus was not so much on the test development as on the updating of aspects not well enough realised previously. But we can't say that the changes were made to suit some specific CPUs, not at all — the universal principles remained the same. The RMMA 3.0 is different: there are much more real innovations than minor corrections concerning test realisations, which is certainly good. Well, it's high time we examined its main parameters. CPU Information

First of all, look at the window that opens at the test launch.

Its customary view has changed: there are now fewer tabs, though none of the test parameters has disappeared, they have just undergone a certain reorganisation. The first tab (CPU Info / CPU) opens by default when the test is launched. It contains detailed information about the CPU (or CPUs, if there are more than one of them). The information is similar to that given by popular CPU-identification programs, such as WCPUID and CPU-Z. We won't dwell on it going instead to the control options CPU Index is the number of the active CPU (physical or logical) if the system has several physical (SMP) or logical (Hyper-Threading) ones. If the CPU index is changed, the information about the chosen CPU is automatically refreshed. This parameter also specifies the number of the active CPU (physical or logical) that will be used for executing the main test flow. Though the option is virtually of no use for standard SMP systems (to say nothing of HT), it can be applied for examining system's behaviour differences when the CPU accesses "its own" memory and an "alien" one. Of course, memory architecture must be separate (such as two-processor AMD K8 platforms (Opteron), with each CPU possessing its own memory). Save — CPU's identification info dump (CPUID) is saved into a binary file. Because this information is used to identify the CPU in the database (described further), we call on users of our test to save this information as well as test results. It will enable us to extend our CPU database, and the users will, in turn, have the opportunity to receive its regular updates. Refresh — information about the CPU selected in CPU Index is refreshed for this and following tabs (Cache/TLB). Now we're going to the next tab — CPU Info / Cache.

It includes full information about different CPU cache levels. Most parameters have featured and actual values. The former are received by deciphering the CPUID values, the latter are inserted from our data base that is an integral part of the benchmark. Now we will dwell on the database. Its functioning principle is simple. Each CPU model has a unique data set provided by the CPUID instruction with different input parameters. These data include, first of all, a 12-letter manufacturer's identificator (e.g. — GenuineIntel, AuthenticAMD). Another important parameter is the so-called CPU signature (numbers of family, model, and CPU stepping taken as a single 32-bit value). Then come CPU features (such as various SIMD extensions) and finally, cache and TLB features. These parameters are enough to identify any given CPU model (though not a particularl CPU). And it would be useless, or even harmful to supply this "set of fingerprints" with such specific information as CPU's serial number (Intel Pentium III and Transmeta Crusoe CPUs have it), or its "name" (e.g. — Brand String), which often contains CPU frequency. Considering all this, the searching principle is very simple: if all specified CPUID values of a CPU coincide with those of an Nth processor from the base, then it is the CPU we're looking for, and we can insert all actual values right from the base. And on the contrary, if a CPU is not found in the base, these values are unaccessible — except CPU's L1/L2 line sizes which are identified automatically by the test itself when the application is launched. Coming back to the features of CPU cache levels, additional parameters provided here (and unavailable in CPUID) are latency of the access to the cache level, bus width and its hierarchy (exclusive or inclusive).

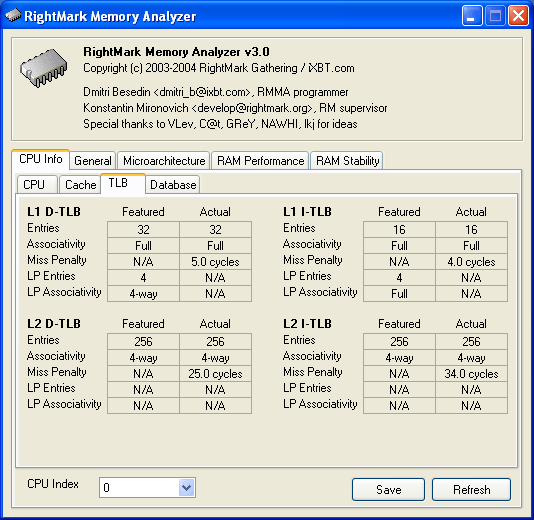

The next tab (CPU Info / TLB) is similar to the previous one, it contains information about TLB buffers of the CPU.

The tab is organised in a similar way: featured values are taken from processor's CPUID data, while actual ones — from our database. Data on TLB sizes and associativity that use large memory pages (LP Entries/Associativity) are now absent from the database. It will be recalled that today's OSs still use small 4-KB memory pages making impossible an objective evaluation of the mentioned parameters for large 4-MB pages (by means of direct measurement). At the same time, we can estimate a miss penalty value for each TLB level — the value of an increased latency of access to CPU's L1 cache if the corresponding TLB level misses. These are the values that are inserted from our database.

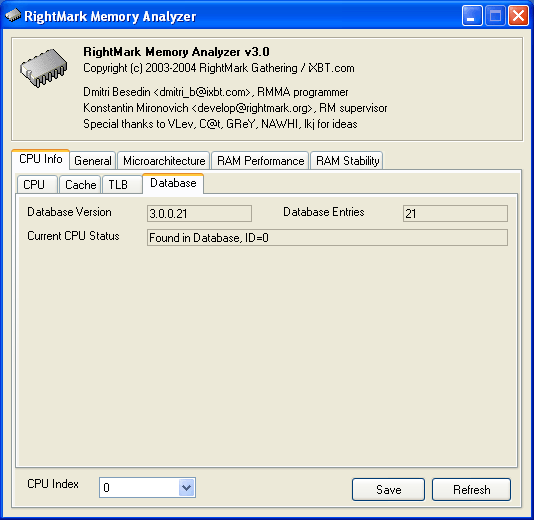

And finally, this is what the information about the database itself looks like.

Field names are understandable: database version (3.0 stands for the program version itself, the next figure — for the revision number that will increase with each new refreshing, and the last unit— for the number of entries in the database). The number of entries is also shown in a separate line, as is the current CPU status. The latter, in our case, was found in the database as it had been the first to be placed there (ID=0). General SettingsThis sector contains general settings of all subtests realized in RMMA 3.0. Memory AllocationChooses a way to allocate memory needed for test executions. Standard — a standard method of memory block selection "in heap" with the help of malloc. It is suitable for almost any OS. VirtualLock — a method that works with the virtual memory using the procedures realised in Windows NT-class OSs. A memory block of a needed size is reserved by invoking VirtualAlloc. Then the VirtualLock function is invoked at the address of the selected memory region, which guarantees that all data from this region will be in the physical memory, that is, later accesses to this region won't cause page fault. AWE— this method uses Address Windowing Extensions available in Windows 2000/XP/2003 Server. This memory allocation method is more reliable for some tests such as cache associativity. Usage of AWE requires Lock Pages in Memory privilege which is not available by default. To get this privilege make the following steps:

Data Set SizeThe total size of data to be read/recorded when measuring every next pixel. Every pixel is measured four times, then the minimal result (in CPU clocks) is chosen. It provides higher repeatability. So, if you measure the memory bandwidth by reading 1MB units the Data Set Size equal to 128 MB means that it takes 4 measurements with 32 reading iterations. A higher Data Set size provides a more reliable result (smoother lines), but respectively increases the test time. Thread LockIn general, every test is started in the main stream which has the highest priority (realtime) to prevent effects from other running processes. It concerns only uniprocessor systems, though most user systems are such. In case of SMP or Hyper-Threading systems additional processors can have a noticeable effect on the test scores. This option locks other processes in SMP systems to increase precision and reliability of the measurement data. At the same time, it's not recommended that you use this option in Hyper-Threading systems as it makes its own great effect. The ideal test condition for Hyper-Threading systems is the minimal possible system load. All applications including those with the lowest priority should be closed. Logarithmic Y ScaleApplies a logarithm scale on the ordinate axis. A linear scale is set by default. White BackgroundIt allows using a white background in graphic representation of test results during the test execution and in the report BMP file, instead of the default black one. This option is needed for more convenient test results printout. Create Test ReportThis option determines whether a report will be created on completion of the test. The report includes two files with textual (MS Excel CSV) and graphic (BMP) representation of test results. Sequential test execution (Batch)The tests are executed sequentially as it's more convenient, in particular, for streaming testing of a great number of systems with the same test suite. RMMA supports the following operations with a batch:

Press Add to Batch to add individual tests whatever subtest is running. Description of RMMA 3.0 TestsNow we are going to describe tests for the most important platform features. Initially, tests of the project were supposed only to measure those features, and not to compare platforms with each other. Measuring TestsWe can divide RMMA 3.0 tests into measuring and competitive. The first group includes almost all the tests you came across in previous versions of the project. They measure values that sometimes can't be compared with one another. These values are:

It's very difficult to compare even such elemental values as maximal memory bandwidth and minimal memory latency, because different platforms use different memory types. But this is just half the trouble, the other one is that the values depend very much on the way this or that CPU microarchitecture is realised (the most important parameter here being the cache line size). Thus, different approaches are needed to measure the values. To be precise, let's formulate the principles that refer the values to the measurable class, not to the comparable.

In this RMMA version the measuring tests were referred to "microarchitectural"

tests. As a result, a new tab was added with a corresponding name.

And this is where all the tests realised in previous versions are

"concealed". It will be recalled that each test enables

to specify user's settings or to select one of the presets. The latter

were introduced to make viewing of test options more convenient and

to allow comparing different-class systems in the same conditions.

When you select a preset the option to change test parameters is blocked

for safety reasons. Benchmark #1: Memory BW The first benchmark estimates an actual memory bandwidth of L1/L2/L3 data caches and RAM. This test measures time (in CPU clocks) during which a data block of a certain size (which can vary or stay fixed) was fully read/recorded/copied using some or other CPU registers (MMX, SSE, or SSE2). The important loop unrolling factor allows to optimize operating modes concerning small block sizes (L1 data cache region) and to increase the values of the cache level's real bandwidth. In case of reading and writing the test also allows for various optimisations - Software Prefetch or Block Prefetch - in order to reach the Maximal Real Read Bandwidth. The scores are calculated in bytes transferred to the CPU (from CPU) at one clock, as well as in MB/s. Below you can see settings of the first benchmark. Variable ParameterSelection of one of three test modes:

Minimal Block SizeMinimal Block Size, KB, in case of Variable Parameter = Block Size; block size in other cases. Maximal Block SizeMaximal Block Size, KB, in case of Variable Parameter = Block Size. Minimal PF DistanceMinimal Software Prefetch Distance, in bytes, in case of Variable Parameter = PF Distance; Software Prefetch Distance in other cases. 0 means that the Software Prefetch mode is disabled. Maximal PF DistanceMaximal PF Distance (for Software Prefetch) in case of Variable Parameter = PF Distance. Minimal Block PF SizeMinimal Block PF Size, KB, in case of Variable Parameter = Block PF Size; Block Prefetch size in other cases. This parameter makes sense only for the Block Prefetch methods (1, 2) described below. Maximal Block PF SizeMaximal Block PF Size, KB, in case of Variable Parameter = Block PF Size. Stride SizeStride Size in operations of reading data into cache in Block Prefetch methods (1, 2), in bytes. For more reliable results this parameter must correspond to the cache line size. That is why in this and other subtests this parameter is selected by default, like L1 Cache Line, which means using L1 cache line width value either inserted from the database or automatically identified by the program at launch. CPU Register UsageCPU Register Usage - selection of registers for fulfilling read/write operations ( 64-bit MMX, 128-bit SSE and 128-bit SSE2 ). Read Prefetch TypeRead Prefetch Type defines a type of instructions used for Software Prefetch (PREFETCHNTA/T0/T1/T2); also, it enables one of Block Prefetch modes needed for taking measurements at Variable Parameter = Block PF Size. Block Prefetch 1 uses line readsets from memory to block prefetch of a certain size using MOV instructions and is recommended for AMD K7 family (Athlon/Athlon XP/MP). At the same time, in the Block Prefetch 2 method data are read with one of the Software Prefetch instructions (PREFETCHNTA). This method is recommended by AMD for K8 family (Opteron/Athlon 64/FX). Non-Temporal StoreNon-Temporal Store - direct memory access (write combining protocol) at writing. This access method writes data into memory without prereading of old data into the CPU cache levels system (without using the write allocate mode). It saves the CPU cache from unneeded data, in particular, in case of copy operations. Copy-to-Self ModeData block is copied to the same memory region where the copy block is located, i.e. the memory content doesn't actually change. This option is not enabled by default, and data copied are shifted by the offset equal to the transferred data block size. Since in this mode write operations completely get into the cache, this benchmark tests memory's ability to read data after writing (read around write). In this case the cache memory is utilized to a greater degree and the benchmark turns out to be much lighter for the memory subsystem. Note that the Non-Temporal Store and Copy-to-Self modes are incompatible. Selected TestsSelected Tests define the memory access ways.

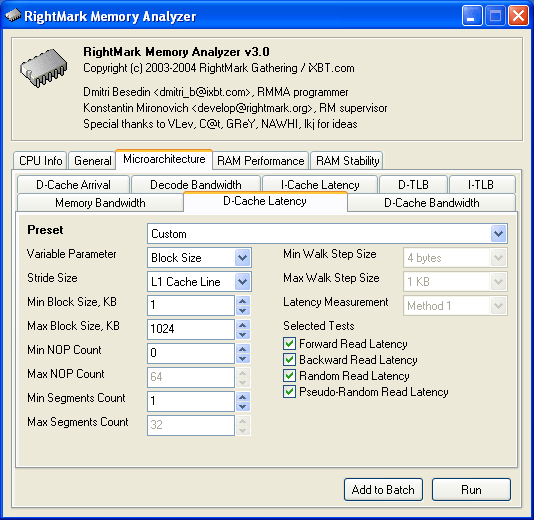

Benchmark #2: Latency/Associativity of L1/L2 Data Cache (D-Cache Lat) The second benchmark estimates the average/minimal latency of L1/L2 data cache and memory, L2 cache line size and L1/L2 data cache associativity. Below are its parameters and operating modes. Variable ParameterThere are 4 types of this test:

Stride SizeStride Size, bytes, in the dependent access chain. Minimal Block Size, KBMinimal Block Size, KB, in case of Variable Parameter = Block Size; Block size in other cases. Maximal Block Size, KBMaximal Block Size, KB, in case of Variable Parameter = Block Size. Minimal NOP CountMinimal NOP Count in case of Variable Parameter = NOP Count; NOP count in other cases. Maximal NOP CountMaximal NOP Count in case of Variable Parameter = NOP Count. Minimal Segments CountMin Segments Count — a minimal number of successive cache segments in case of Variable Parameter = Segments Count; the number of successive cache segments in other cases. Maximal Segments CountMax Segments Count — a maximal number of successive cache segments in case of Variable Parameter = Segments Count; the number of successive cache segments in other cases. Minimal Walk Step SizeMinimal Walk Step Size, bytes, in the dependent access chain in case of Variable Parameter = Walk Step Size. Maximal Walk Step SizeMaximal Walk Step Size, bytes, in the dependent access chain in the case of Variable Parameter = Walk Step Size. Latency MeasurementLatency Measurement technique (the parameters can be configured only if Variable Parameter = NOP Count). In Method 1 an ordinary dependent access chain with a varying number of NOPs (see above ( edx = 0)) is used to determine the minimal latency:

Nevertheless, in some cases (if the speculative loading works effectively) the minimal cache latency may not be achieved. For such cases there's an alternative RMMA method (Method 2) which uses a different chain read code (ebx = edx = 0):

Selected TestsSelected Tests define the memory access ways when testing latency:

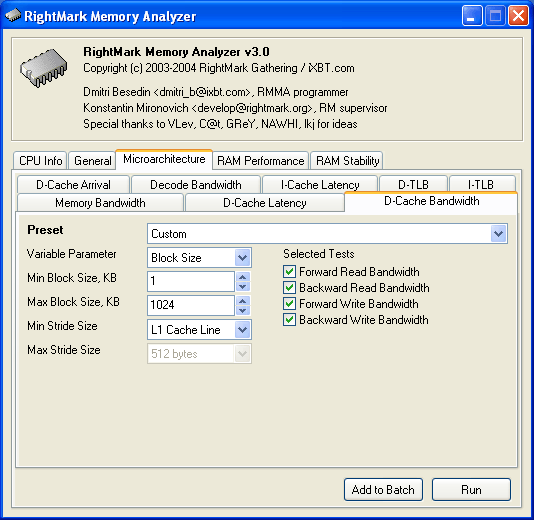

Benchmark #3: Real L1/L2 Data Cache Bus Bandwidth (D-Cache BW) This benchmark estimates a real L1-L2 cache bus bandwidth (or L2-RAM bus bandwidth). It's the simplest test in RMMA regarding its configuration. It's based on the method used in the real L1/L2/RAM bus bandwidth test (Benchmark #1). But in this case memory read/write operations are carried out line by line, i.e. with the stride equal to the cache line size and with CPU's ALU registers. Both forward and backward access modes are supported. Test parameters are as follows: Variable ParameterVariable Parameter defines one of two test modes: Block Size - dependence of a real data bus bandwidth on the data block size; Minimal Block Size, KBMinimal Block Size, KB, in case of Variable Parameter = Block Size; Block Size in other cases. A value less than 1.5 times L1 cache will yield senseless results. This test doesn't estimate a bandwidth of the L1-LSU-registers tandem because loading of data from L1 into LSU (Load-Store Unit) and then to CPU registers is not fulfilled line by line. To estimate the L1-LSU bandwidth it's better to run the first test (Memory BW) within the range of block sizes which can get into the L1 cache. Maximal Block Size, KBA value lower than the L2 cache size (inclusive cache architecture) or L1+L2 (exclusive cache architecture) allows estimating a real L1-L2 bus bandwidth. In case of the Block Size values ranging from L1+L2 to some greater value this benchmark estimates the maximal real memory bandwidth at reading/writing of full cache lines, which in some cases turns out to be greater than the maximal real bandwidth in case of total data reading/writing. Minimal Stride SizeMinimal Stride Size in cache accessing at reading/writing in case of Variable Parameter = Stride Size; Cache access Stride Size in other cases. Maimal Stride SizeMinimal Stride Size in cache accessing at reading/writing in case of Variable Parameter = Stride Size; Selected TestsSelected Tests define a type of measurements.

Benchmark #4: L1/L2 (D-Cache) Arrival The fourth benchmark estimates bus realisation features (bit capacity, multiplexing) of the CPU's L1-L2 data cache. This test actually measures the total latency of two accesses to the same cache line, which are separated by a certain value. The measurement method is identical to the one in Method #2 except the fact that two consecutive chain elements are located in the same cache line. Besides, the fourth test can be used to estimate L1/L2 cache line size (this is the test that is used for its calculation at program launch if the CPU model hasn't been found in the database. These are the test parameters: Variable ParameterVariable Parameter defines one of five test types:

Minimal Block Size, KBMinimal Block Size, KB, in case of Variable Parameter = Block Size; total Block Size in other cases. Maximal Block Size, KBMaximal Block Size, KB, in case of Variable Parameter = Block Size. Minimal NOP CountMinimal NOP Count defines the minimal number of NOPs between two successive accesses to adjacent cache lines in case of Variable Parameter = NOP Count; the number of NOPs between two successive accesses to adjacent cache lines in other cases. Maximal NOP CountMaximal NOP Count defines the maximal number of NOPs between two successive accesses to adjacent cache lines in case of Variable Parameter = NOP Count. Minimal SyncNOP CountMinimal SyncNOP Count defines the minimal number of NOPs between two successive accesses to the same cache line in case of Variable Parameter = SyncNOP Count; the number of NOPs between two successive accesses to the same cache line in other cases. Maximal SyncNOP CountMaximal SyncNOP Count defines the maximal number of NOPs between two successive accesses to the same cache line in case of Variable Parameter = SyncNOP Count. Stride SizeMinimal Stride Size, in bytes, in the dependent access chain between two successive accesses to consecutive cache lines. Minimal 1st Dword OffsetMinimal 1st Dword Offset within the cache line, in bytes, in case of Variable Parameter = 1st DW Offset; 1st Dword Offset within the cache line in other cases. Maximal 1st Dword OffsetMaximal 1st Dword Offset within the cache line in case of Variable Parameter = 1st DW Offset. Minimal 2nd Dword OffsetMinimal 2nd Dword Offset within the cache line, in bytes, in case of Variable Parameter = 2st DW Offset; 2st Dword Offset within the cache line in other cases. The 2nd DW Offset is calculated relative to the 1st Dword offset modulo stride size (cache line size): 2nd_Dword_Offset = (2nd_Dword_Offset + 1st_Dword_Offset) % Stride_Size Maximal 2nd Dword OffsetMaximal 2nd Dword Offset within the cache line in case of Variable Parameter = 2nd DW Offset. Selected TestsSelected Tests defines a way of testing the latency of the double access:

Benchmark #5: Pipeline and Instruction Cache Test

|

| Instruction type | Instruction subtype | Operation |

| ALU |

NOP (1) LEA (2) MOV (2) ADD (2) SUB (2) OR (2) XOR (2) TEST (2) CMP (2) SHL (3) ROL (3) XOR/ADD (4) CMP-0 (4) CMP-0 (6) CMP-8 (6) CMP-16 (6) CMP-32 (6) CMP-0 (8) CMP-8 (8) CMP-16 (8) CMP-32 (8) |

nop lea eax, [eax] mov eax, eax add eax, eax sub eax, eax or eax, eax xor eax, eax test eax, eax cmp eax, eax shl eax, 0 rol eax, 0 xor eax, eax; add eax, eax cmp ax, 0x00 cmp eax, 0x00000000 cmp eax, 0x0000007f cmp eax, 0x00007fff cmp eax, 0x7fffffff [rep][addrovr]cmp eax, 0x00000000 [rep][addrovr]cmp eax, 0x0000007f [rep][addrovr]cmp eax, 0x00007fff [rep][addrovr]cmp eax, 0x7fffffff |

| FPU |

WAIT (1) FADD (2) FMUL (2) FSUB (2) FSUBR (2) FCHS (2) FABS (2) FTST (2) FXAM (2) FCOM (2) FCOMI (2) FST (2) FXCH (2) FDECSTP (2) FINCSTP (2) FFREE (2) FFREEP (2) |

wait fadd st(0), st(1) fmul st(0), st(1) fsub st(0), st(1) fsubr st(0), st(1) fchs fabs ftst fxam fcom st(1) fcomi st(0), st(1) fst st(0) fxch fdecstp fincstp ffree st(0) ffreep st(0) |

| MMX |

EMMS (2) MOVQ (3) POR (3) PXOR (3) PADDD (3) PSUBD (3) PCMPEQD (3) PUNPCKLDQ (3) PSLLD (4) |

emms movq mm0, mm0 por mm0, mm0 pxor mm0, mm0 paddd mm0, mm0 psubd mm0, mm0 pcmpeqd mm0, mm0 punpckldq mm0, mm0 pslld mm0, 0 |

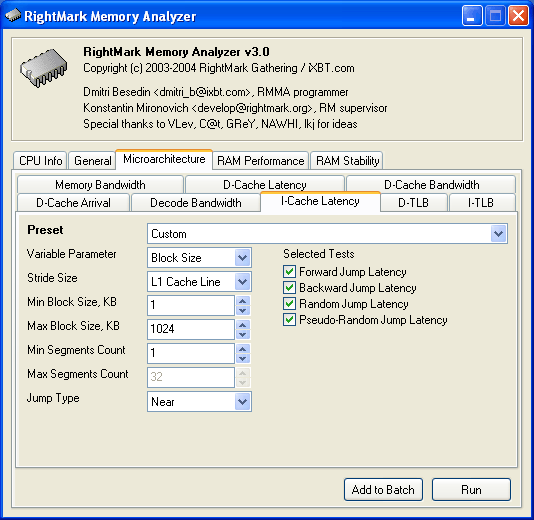

The sixth test was only introduced into this RMMA 3.0 version.

It measures latency of the access to L1i/L2 cache/RAM in the code execution condition, and it also estimates L1 I-cache and unified L2 I/D-cache associativity. The test works according to the following principle. In a selected

memory block, a dependent chain is constructed, where each of the elements contains a jump-to-the-next instruction (jmp)

with the stride specified by Stride Size. The last element contains a return instruction (ret). When an associativity test is run, reading in the "multi-segment" mode first goes through an Nth element of each segment and then jumps to the (N+1)th element of the main segment.

The scheme taken from the D-cache associativity test is also suitable for this case. The only difference is that jump instructions (jmp) are used instead of dependent data-loading ones (mov eax, [eax]). Here are the test parameters:

Variable Parameter selects one of the two test variants:

Block Size — dependence of jump-instruction execution latency on the block size;

Segments Count — dependence of jump-instruction execution latency on the number of successive cache segments, which enables to estimate its associativity.

Stride Size, bytes, in the dependent code-chain.

Minimal code-block size, KB, in case of Variable Parameter = Block Size; code-block size in other cases.

Maximal code-block size, KB, in the case of Variable Parameter = Block Size.

Min Segments Count — the minimal number of successive cache segments in the case of Variable Parameter = Segments Count; the number of successive cache segments in other cases.

Max Segments Count — the maximal number of successive cache segments in the case of Variable Parameter = Segments Count.

A type of the jump instruction:

Near — the nearer jump (jmp near +relative_offset);

Far — the farther jump (mov ecx, absolute_offset; jmp ecx).

Selected Tests defines reading modes at latency testing:

Forward Read Latency

Backward Read Latency

Random Read Latency

Pseudo-Random Read Latency

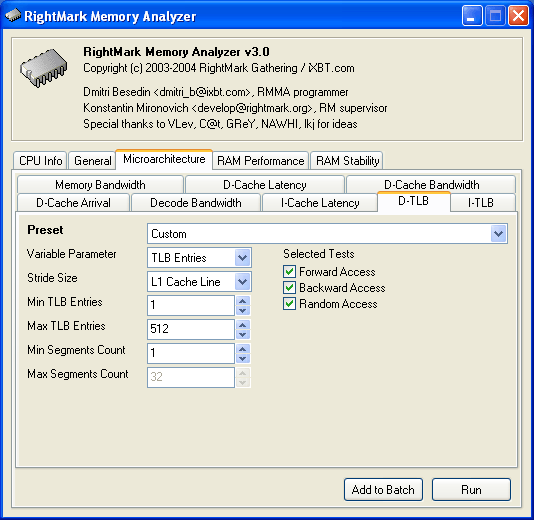

The test defines the size and associative level of the Translation Lookaside Buffer (L1/L2 D-TLB). Actually, it measures latency when accessing the L1 cache provided that every next cache line is loaded from the next, not the same, memory page.

(The memory page size in real operating systems is much larger (e.g. 4096 bytes) than in our scheme which only houses 4 cache lines).

So, if the number of pages used is less than the TLB size the test calculates L1 cache's own latency (TLB hit). Otherwise, it measures the L1 cache latency in case of TLB miss. Note that the Maximal TLB Entries mustn't be greater than the number of L1 cache lines, otherwise the graph will have a jump related with the transition from L1 to L2, but not with the D-TLB structure size. But the overall size of TLB levels is always less than the number of cache lines which can be put into the L1 cache. Test settings:

Variable Parameter defines one of two test modes.

TLB Entries - dependence of latency when accessing the L1 cache on the number of memory pages used.

Segments Count — dependence of latency when accessing the L1 cache on the number of sequential "TLB segments" at a given number of pages used for estimation of the associative level of each D-TLB level. The principle of "multi-segment" dependent access chain formation is identical to the one used in the latency test (Benchmark #2), but in this case the value equal to the stride size when accessing each next element (cache line size by default) is added to the offset between the segments. Here you can see reading of four TLB elements in the "two-segment" access mode.

Stride Size in the dependent access chain, in bytes.

Minimal TLB Entries used for reading cache lines in case of Variable Parameter = TLB Entries; TLB Entries in other cases.

Maximal TLB Entries in case of Variable Parameter = TLB Entries.

Min Segments Count defines the minimal number of successive segments in the dependent access chain in the case of Variable Parameter = Segments Count; the number of segments in other cases.

Max Segments Count defines the maximal number of successive segments in the case of Variable Parameter = Segments Count.

Selected Tests defines a way of testing:

Forward Access

Backward Access

Random Access

The eighth test measures the size and the associativity of instructions translation lookaside buffer (L1/L2 I-TLB). Like in the D-TLB case, the test measures L1 I-cache access latency provided that every next cache line is loaded from the next, not the same, memory page. All the parameters (except one additional) are the same as in Benchmark #7.

Variable Parameter defines one of two types of the test modes:

TLB Entries - dependence of latency when accessing the L1i cache on the number of memory pages used.

Segments Count - dependence of latency when accessing the L1i cache on the number of sequential segments at a given number of pages used.

Stride Size, bytes, in the dependent data chain. Strides are made with a jump (jmp). Here you can see forward sequential reading of four I-TLB elements in the two-segment access mode.

The last element, marked with a cross, contains the return instruction (ret).

Minimal TLB Entries used for reading L1i cache lines in case of Variable Parameter = TLB Entries; TLB Entries in other cases.

Maximal TLB Entries in case of Variable Parameter = TLB Entries.

Minimal Segments Count defines the minimal number of sequential segments in case of Variable Parameter = Segments Count; the number of sequential segments in other cases.

Maximal Segments Count defines the maximal number of sequential segments in case of Variable Parameter = Segments Count.

A type of the jump instruction:

Near — the nearer jump (jmp near +relative_offset);

Far — the farther jump (mov ecx, absolute_offset; jmp ecx).

Selected Tests defines the ways of testing:

Forward Access

Backward Access

Random Access

Now we have come to the second test type — competitive tests that give out comparable values on different platforms. There doesn't seem to be much to say, as this type comprises an overwhelming majority of performance tests conducted on a system component (and RAM is no exception here). They also include the new project we're developing — CPU RightMark that we described in a separate article. Competitive memory tests are based on a single principle: a full independence of those "crucial low-level platform parameters" (and first of all, cache line size) that we measure in the first type of tests. From the point of view of code organisation, a competitive test must be something like a reference code of a typical streaming C/C++ task, written without assembler optimisations and compiled by a good compilor.

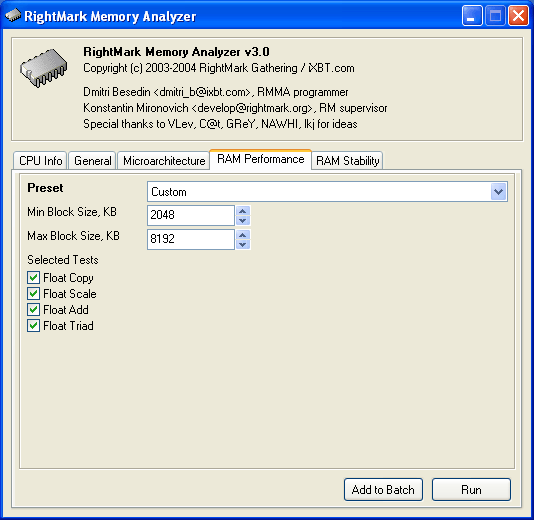

In fact, there is only one RAM performance test for the moment. We decided not to complicate things and realised STREAM tests, popular, comprehensible and possible in the source code form. These are the most important parameters of the test:

Min Block Size, KB, is the minimal size of the array.

Max Block Size, KB, is the maximal size of the array. The real memory size used in the test is larger and depends on the measurement method (see below).

Selected Tests define performance test variants:

Float Copy — copies the array of float-point numbers (the data type hereinafter is 64-bit double):

a[i] = b[i];

Float Scale — multiplies the element of the first array by the constant and saves the element in the second array:

a[i] = b[i] * k;

Float Add — sums two arrays elementwise and saves the element in the third array:

a[i] = b[i] + c[i];

Float Triad — Float Add and Float Scale, together. The element of the first array is added to the second one's multiplied by the constant, and is saved in the third array:

a[i] = b[i] + c[i] * k;

It is clear that two first tests (Float Copy, Float Scale) coincide with two last ones (Float Add and Float Triad) in terms of the load on the memory subsystem. Indeed, there are two accesses to the memory by the first test group (reading and writing), and three more by the second group (reading, reading, and writing). The test result is the so-called "full" real memory bandwidth, which was obtained considering the fact of several accesses. Another important thing is that test configurations specify only the array size, not the size of the whole memory block. That is, real memory utilisation increases twofold (Float Copy, Float Scale) and threefold (Float Add and Float Triad).

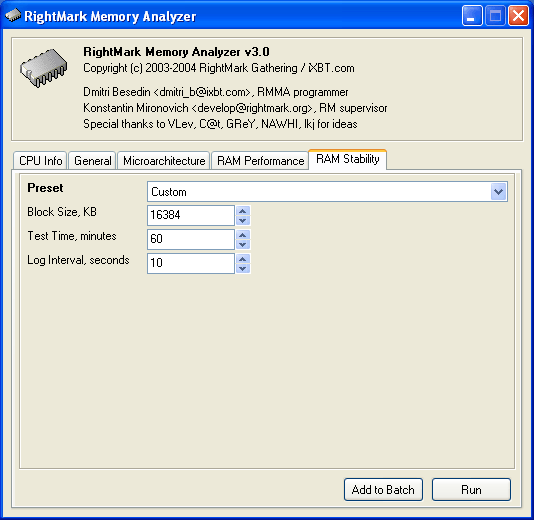

The article on the new RMMA 3.0 version wouldn't be complete without mentioning the last tab entitled RAM Stability.

The test estimates stability of memory work in the given configuration (mainly concerning the timings). This is, probably, the simplest test of all realised in RMMA 3.0. Besides, it is nothing new: it just measures latency of a random access to a large memory block (its size is specified by Block Size, KB). It is different from a stndard "microarchitecture" latency test in the duration of a pretty heavy load on the memory subsystem as the test settings (Test Time, minutes) allow to select a long testing period.

Considering the fact that the address of each next element in the chain is accessed from RAM, any fault in its work will cause the wrong value to appear, which, in turn, will lead it to the point that the CPU will generate an exception. But this test will inform the user on it as exit/halt test completion is written down in the report. To make assurance double sure, the test also makes a write into a log file (Stability.log) at the interval specified by the user (Log File Interval, seconds). A value written in the file corresponds to random access latency measured at this particular moment.

|

Article navigation: |

| blog comments powered by Disqus |

| Most Popular Reviews | More RSS |

|

Comparing old, cheap solutions from AMD with new, budget offerings from Intel.

February 1, 2013 · Processor Roundups |

|

Inno3D GeForce GTX 670 iChill, Inno3D GeForce GTX 660 Ti Graphics Cards A couple of mid-range adapters with original cooling systems.

January 30, 2013 · Video cards: NVIDIA GPUs |

|

Creative Sound Blaster X-Fi Surround 5.1 An external X-Fi solution in tests.

September 9, 2008 · Sound Cards |

|

The first worthwhile Piledriver CPU.

September 11, 2012 · Processors: AMD |

|

Consumed Power, Energy Consumption: Ivy Bridge vs. Sandy Bridge Trying out the new method.

September 18, 2012 · Processors: Intel |

| Latest Reviews | More RSS |

|

Retested all graphics cards with the new drivers.

Oct 18, 2013 · 3Digests

|

|

Added new benchmarks: BioShock Infinite and Metro: Last Light.

Sep 06, 2013 · 3Digests

|

|

Added the test results of NVIDIA GeForce GTX 760 and AMD Radeon HD 7730.

Aug 05, 2013 · 3Digests

|

|

Gainward GeForce GTX 650 Ti BOOST 2GB Golden Sample Graphics Card An excellent hybrid of GeForce GTX 650 Ti and GeForce GTX 660.

Jun 24, 2013 · Video cards: NVIDIA GPUs

|

|

Added the test results of NVIDIA GeForce GTX 770/780.

Jun 03, 2013 · 3Digests

|

| Latest News | More RSS |

Platform · Video · Multimedia · Mobile · Other || About us & Privacy policy · Twitter · Facebook

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.