
Not all gold glitters from the start (a remake of the proverb)

We have an inflammable combination on hand: a PCI-Express motherboard (made by ECS on the new Intel 915 chipset) and an NVIDIA GeForce PCX 5900 graphics accelerator with a PCI-Express interface. It would be a pity not to set this mixture off and see the first results of the new bus in action. Of course, it's far too early to speak of a real comparison: ATI's PCI-Express cards have only just arrived in our lab and are not taking part in this testing. Besides, OS and graphics driver support for the new bus evidently leaves much to be desired. Thus, we must introduce some provisos here:

We have tested a number of popular game applications and will show you their preliminary results later. And now, a bit of theory.

A bit of theory

To begin with, here is a brief compilation of facts about PCI-Express and its features as a new graphics bus.

PCI-Express 16x

It is hardly a secret that the 16-lane variant of the universal (industrial) PCI-Express bus has been cast as the standard general-purpose graphics bus since the start of the year. Given below is the simplest way of transitioning to PCI-Express for desktop systems with a standard architecture.

Key differences between the new bus and AGP/PCI:

The first motherboards with PCI-Express chipsets (e.g. i915-based ones) will include one PCI-Express 16x slot, intended mostly for graphics accelerators. PCI-Express solutions from NVIDIA (NV3X and NV4X accelerators, via an HSI bridge) and ATI (R423 with a native PCI-Express interface, etc.) will be available soon.

PCI-Express support in NVIDIA and ATI accelerators

ATI decided to release two versions of the chip (R420 and R423): the former supports the AGP 8x bus, the latter PCI-Express. That is the only fundamental difference between the chips.
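The raw bandwidth gap between the two interfaces that separate the R420 from the R423 can be checked with simple arithmetic. The sketch below uses nominal spec values only: it assumes PCI-Express 1.x signalling (2.5 GT/s per lane with 8b/10b encoding) and a 32-bit AGP bus clocked at 66.67 MHz; the function names are illustrative.

```python
# Nominal peak-bandwidth arithmetic for PCI-Express 1.x and AGP.
# These are spec-level numbers; real throughput is lower after protocol overhead.

PCIE1_TRANSFERS_PER_S = 2_500_000_000  # 2.5 GT/s per lane, per direction
AGP_BASE_CLOCK_HZ = 200_000_000 / 3    # 66.67 MHz AGP base clock
AGP_BUS_BYTES = 4                      # AGP data bus is 32 bits wide


def pcie1_mb_per_s(lanes: int) -> float:
    """Per-direction peak bandwidth of a PCI-Express 1.x link, in MB/s (10^6 bytes)."""
    data_bits = lanes * PCIE1_TRANSFERS_PER_S * 8 // 10  # 8b/10b keeps 8 of every 10 bits
    return data_bits / 8 / 1_000_000


def agp_mb_per_s(multiplier: int) -> float:
    """Peak bandwidth of AGP Nx in MB/s; AGP moves data in one direction at a time."""
    return AGP_BASE_CLOCK_HZ * multiplier * AGP_BUS_BYTES / 1_000_000


if __name__ == "__main__":
    x16 = pcie1_mb_per_s(16)
    print(f"PCI-E 1x : {pcie1_mb_per_s(1):7.1f} MB/s per direction")
    print(f"PCI-E 16x: {x16:7.1f} MB/s per direction, {2 * x16:7.1f} MB/s both ways")
    print(f"AGP 8x   : {agp_mb_per_s(8):7.1f} MB/s, one direction at a time")
```

Run as a script, it reports 4000 MB/s per direction for PCI-Express 16x (8000 MB/s counting both directions) against about 2133 MB/s for AGP 8x, which can only move data one way at a time.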

NVIDIA, in its turn, prefers one chip (bridge) that makes the PCI-Express -> AGP transformation. The bridge can be used with both NV40 (and its NV41 successor) and the latest chips of the current NV3X series. Besides, the bandwidth of such scheme (AGP

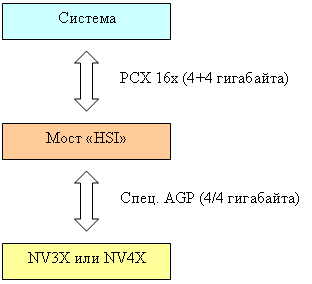

chip + PCI-Express bridge) is not limited by values typical of AGP 8x. How can that be? Here's the scheme:  We have the system at the top, then comes the 16x PCX slot, and then the chip of the HSI (High Speed Interconnect) bridge. The bridge chip is connected to the graphic NV3X chip or a next-generation one via its standard AGP pinouts. But if the graphic chip works with the HSI bridge allocated at a minimal distance from the chip itself on the accelerator board, we can reach a double increase in the speed at which the AGP 8x bus interface of the graphic chip functions. Thus, we get a bandwidth analog of AGP 16x bus which doesn't exist as a standard. Consequently, we get a 4-GB/s bandwidth. But that's not all. In contrast to a typical AGP-standard system that can reach the maximal speed of data transmission only in one direction, a modified AGP interface between the HSI bridge and the NVIDIA accelerator transmits data at 4 GB/s both ways, but not simultaneously. At each particular moment, data are only transmitted one way at the constant peak bandwidth of 4 GB/s. Besides, it takes some time to switch the state from transmission to reception. Interestingly, the bridge chip can be turned the other way round and function as a bridge for creation of AGP 8x cards based on PCX chips which NVIDIA is planning to start producing in a while. We can single out the following theoretical shortcomings of such solution:
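To see why "both ways, but not simultaneously" matters, consider a toy timing model of the link. It takes the 4 GB/s peak rate from the text; the turnaround penalty and the workload are hypothetical values chosen only for illustration, and the full-duplex model ignores ordering dependencies between transfers.

```python
from typing import Iterable, Tuple

# A transfer is (direction, size_in_bytes), where direction is "down"
# (system -> card) or "up" (card -> system).
Transfer = Tuple[str, int]


def half_duplex_time(transfers: Iterable[Transfer], bytes_per_s: float,
                     turnaround_s: float) -> float:
    """Seconds needed on a link that moves data in one direction at a time,
    like the modified AGP link between the HSI bridge and the GPU.
    Each change of direction costs `turnaround_s` (a hypothetical value)."""
    total = 0.0
    previous = None
    for direction, size in transfers:
        if previous is not None and direction != previous:
            total += turnaround_s  # pay the switching delay on every reversal
        total += size / bytes_per_s
        previous = direction
    return total


def full_duplex_time(transfers: Iterable[Transfer], bytes_per_s: float) -> float:
    """Idealised seconds on a link with separate lanes for each direction,
    like PCI-Express: both directions run in parallel, so the busier one
    determines the total. Ordering dependencies are ignored."""
    per_direction = {"down": 0, "up": 0}
    for direction, size in transfers:
        per_direction[direction] += size
    return max(per_direction.values()) / bytes_per_s


if __name__ == "__main__":
    PEAK = 4e9          # 4 GB/s, the peak rate quoted in the text
    TURNAROUND = 1e-6   # hypothetical 1 microsecond switching penalty
    workload = [("down", 4_000_000), ("up", 4_000_000)] * 2  # hypothetical mix
    print(f"half-duplex: {half_duplex_time(workload, PEAK, TURNAROUND) * 1e3:.3f} ms")
    print(f"full-duplex: {full_duplex_time(workload, PEAK) * 1e3:.3f} ms")
```

With alternating 4 MB transfers, the half-duplex link needs a little over 4 ms (four transfers plus three direction switches), while the idealised full-duplex link finishes in about 2 ms, since each direction carries only 8 MB. When all traffic flows in one direction, the two models give the same time.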

On the other hand, the practical advantages are obvious. NVIDIA's approach spares the company from producing two variants of the accelerator (which would be rather expensive), and it also allows the PCX/AGP ratio to be adjusted flexibly to current market demand. We'll try to give a preliminary estimate of the possible performance gain or loss based on the test results. For now, we can say that both the DirectX API and most current games are designed around AGP peculiarities, and this is unlikely to change within the next two years. That doesn't necessarily mean a performance drop, but it certainly doesn't point to a considerable gain either. So NVIDIA's main problem is card complexity (an additional bridge chip means more responsibility for card manufacturers), while ATI is mostly concerned with supply (a possible shortage or overproduction of the PCX or AGP versions). Time will show which approach is more appropriate.

PCI-Express support in the OS

At the moment, Windows XP treats PCI-Express 16x as classical PCI. It relies on the bus's built-in compatibility with the PCI logical protocol, which covers interrupts, configuration (resource allocation), I/O space, and PCI devices' access to system memory. Of course, all of this happens much faster than on classical PCI buses, but from the point of view of system software and drivers, a PCX graphics card is practically identical to a PCI one, even though the former sits on a very fast PCI-compatible bus with 4 GB/s of bandwidth in each direction. Evidently, none of the new capabilities (isochronous transfers, parallel flows, etc.) are used at the API level, in drivers, or in the system kernel. The situation may change in the future, once support for the new capabilities is introduced into the OS and the graphics driver; this should make PCI-Express more efficient as a graphics bus.
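The PCI compatibility described above is visible in configuration space: a PCI-Express card exposes a PCI-compatible header at the start of its configuration space, which is why PCI-only system software can enumerate it. PCI-Express enlarges a function's configuration space from 256 bytes to 4 KB, but legacy PCI configuration access reaches only the first 256. The sketch below decodes a few standard header fields from a synthetic header; 0x10DE is NVIDIA's PCI vendor ID, while the device ID is a made-up placeholder and the function name is illustrative.

```python
import struct

PCI_CONFIG_BYTES = 256    # configuration space per function on classical PCI
PCIE_CONFIG_BYTES = 4096  # PCI-Express extended configuration space

DISPLAY_CONTROLLER = 0x03  # base class code for display controllers
VGA_COMPATIBLE = 0x00      # subclass code for VGA-compatible controllers


def parse_pci_header(config: bytes) -> dict:
    """Decode the first fields of a PCI-compatible configuration header.

    Multi-byte fields are little-endian, as the PCI specification requires.
    Only the first 256 bytes are examined, mimicking PCI-only software.
    """
    visible = config[:PCI_CONFIG_BYTES]
    if len(visible) < 12:
        raise ValueError("configuration header too short")
    (vendor_id, device_id, _command, _status,
     revision, prog_if, subclass, base_class) = struct.unpack_from("<HHHHBBBB", visible, 0)
    return {
        "vendor_id": vendor_id,
        "device_id": device_id,
        "revision": revision,
        "class": (base_class, subclass, prog_if),
        "is_vga": base_class == DISPLAY_CONTROLLER and subclass == VGA_COMPATIBLE,
        # bytes of PCI-Express extended space that PCI-only software never sees
        "ignored_extended_bytes": max(0, len(config) - PCI_CONFIG_BYTES),
    }


if __name__ == "__main__":
    # Synthetic 4 KB configuration space for a hypothetical card:
    # vendor 0x10DE (NVIDIA), placeholder device ID 0xBEEF, VGA class code.
    header = struct.pack("<HHHHBBBB", 0x10DE, 0xBEEF, 0, 0, 0xA1, 0x00, 0x00, 0x03)
    config = header + bytes(PCIE_CONFIG_BYTES - len(header))
    print(parse_pci_header(config))
```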
But it's not yet clear whether it will be enough for Microsoft to simply update the PCI bus driver in the OS, or whether a new HAL (a whole new kernel in the worst case) or a new DirectX version will be needed. Thus, fully unlocking the capabilities of PCI-E might take until Longhorn appears in early 2006. Since PCI-Express is currently treated as a classical PCI bus, there is no GART or any other AGP-specific function that used to provide graphics-optimised access from the accelerator to system memory. Emulating these AGP capabilities on PCI-Express would give nothing new to modern accelerators, since what they mostly need is direct access to system memory and high bus bandwidth, which they already have. Besides, the fact that no separate AGP memory allocation table has to be managed can only benefit performance. Time will show whether that's really so, but we can't shake the suspicion that, for the moment, PCI-Express is not used optimally by the OS and the video card driver.
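For readers unfamiliar with GART: on AGP systems it is a chipset-maintained table that makes scattered pages of system memory appear to the graphics card as one contiguous aperture. The toy model below illustrates only the address translation. It assumes 4 KB pages, the class name is hypothetical, and the aperture base address and page-frame numbers in the example are made up; real chipsets keep this table in their own hardware-specific formats.

```python
from typing import List

PAGE_SIZE = 4096  # assumed page size in bytes


class ToyGart:
    """Toy model of AGP-style graphics address remapping (not a real driver API)."""

    def __init__(self, aperture_base: int, page_frames: List[int]):
        self.aperture_base = aperture_base
        # page_frames[i] is the physical page frame backing aperture page i
        self.page_frames = page_frames

    def translate(self, bus_address: int) -> int:
        """Map an address inside the contiguous aperture to a physical address."""
        offset = bus_address - self.aperture_base
        if not 0 <= offset < len(self.page_frames) * PAGE_SIZE:
            raise ValueError("address outside the AGP aperture")
        page, within_page = divmod(offset, PAGE_SIZE)
        return self.page_frames[page] * PAGE_SIZE + within_page


if __name__ == "__main__":
    # Hypothetical three-page aperture backed by non-adjacent physical frames.
    gart = ToyGart(0xE000_0000, [0x1A3, 0x07F, 0x2B0])
    for addr in (0xE000_0000, 0xE000_1010, 0xE000_2FFF):
        print(f"{addr:#010x} -> {gart.translate(addr):#010x}")
```

Consecutive aperture pages land on non-adjacent physical frames, yet the card sees one linear range. This is the kind of AGP-specific indirection that, according to the text, is absent while PCI-Express is treated as a classical PCI bus.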

Practical part

Tested applications and testbed configurations

We tested the following applications:

Results

The somewhat atypical POP results may be caused by the use of FRAPS.

Conclusion

It is evident that, with all the provisos we have made, the PCI-E version of the GeForce PCX currently loses to its AGP counterpart. At least this applies to those few tests where the application is limited by neither the CPU nor the accelerator, which as a rule means low resolutions. Based on this, we can draw the following conclusions:

Well, there's nothing left for us to do but wait for more cards to take part in more comprehensive testing.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.