NVIDIA

No sooner had the disputes around 3DMark2003 and the Detonator FX drivers died down than www.tech-report.com published information about new cheats for this benchmark in the same Detonator FX: an aggressive optimization of anisotropic filtering that is activated depending on the name of the running application. The shader code of ToMMTi-Systems' ShaderMark was also slightly modified, which noticeably affected performance; this indicates that the Detonator FX plays tricks on it as well. Since this hunt is becoming widespread, we decided to investigate the issue ourselves. However, we realize that in 99% of cases, revealing a driver's tricks with a benchmark requires cooperation with that benchmark's developers. Moreover, even the developers are not always able to find and eliminate such optimizations, because drivers often use very sophisticated methods to detect a particular application. That is why we decided to approach the problem differently: to analyze the driver from the inside in order to locate and block every way it detects a given Direct3D application. This disarms the driver and minimizes the risk of getting distorted benchmark results. Our starting point was the fact that the driver reacts to the renaming of the executable file of at least one benchmark. This means its code must contain an algorithm that analyzes the names of running Direct3D applications, so our first step was to look for it. The research showed that the Detonator FX really does contain a base of about 70 applications detected by their command line. When a Direct3D context is created, the driver calculates two 64-bit checksums from the name of the executable file and the list of captions of all the process's windows to obtain a unique 128-bit identifier of the Direct3D application, and applies the settings for this application if it is present in the base.
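The identification step can be illustrated with a minimal sketch. The driver's actual checksum algorithm is not disclosed here, so 64-bit FNV-1a stands in for it; the helpers `checksum64`, `app_id128` and `lookup_settings`, the way window captions are joined, and the sample base entry are all hypothetical and serve only to show the idea of a name-free 128-bit lookup.

```python
# Hypothetical sketch: 64-bit FNV-1a stands in for the driver's real,
# undisclosed checksum; names and base contents are illustrative only.
MASK_64 = (1 << 64) - 1
FNV_OFFSET_64 = 0xCBF29CE484222325  # FNV-1a initial value ("seed")
FNV_PRIME_64 = 0x100000001B3


def checksum64(text: str) -> int:
    """64-bit FNV-1a over the UTF-8 bytes of text."""
    h = FNV_OFFSET_64
    for byte in text.encode("utf-8"):
        h ^= byte
        h = (h * FNV_PRIME_64) & MASK_64
    return h


def app_id128(exe_name: str, window_captions: list[str]) -> int:
    """Pack two 64-bit checksums into one 128-bit application identifier.

    Assumption: one checksum covers the executable name, the other the
    window captions joined with newlines.
    """
    return (checksum64("\n".join(window_captions)) << 64) | checksum64(exe_name)


# The base stores only identifiers and settings, never readable names.
APP_BASE = {
    app_id128("3DMark03.exe", ["3DMark03"]): {"profile": "hypothetical"},
}


def lookup_settings(exe_name: str, window_captions: list[str]):
    """Return per-application settings, or None if the app is unknown."""
    return APP_BASE.get(app_id128(exe_name, window_captions))


if __name__ == "__main__":
    print(lookup_settings("3DMark03.exe", ["3DMark03"]))  # {'profile': 'hypothetical'}
    print(lookup_settings("renamed.exe", ["3DMark03"]))   # None
```

Renaming the executable changes one half of the identifier, so the lookup misses. The same `checksum64` is all a decoding utility would need: it can confirm whether a candidate name is in the base, but since the checksum cannot be inverted, it cannot recover names from the stored identifiers.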
Unfortunately, it's impossible to recover application names from the 128-bit identifiers in the base. The only way to decode them is to write a utility that calculates checksums for an arbitrary input string using the same algorithm as the driver; it can then check whether the base contains a given application. For now we can only guess which applications besides 3DMark2003 are in there. Its size suggests that most of it is used to work around certain problems and probably to optimize certain games, because the total number of existing synthetic and gaming Direct3D benchmarks is noticeably smaller than 70. Presumably the same base contains compatibility settings for forcing full-screen anti-aliasing by supersampling in certain DirectX 6 and DirectX 7 applications (in earlier driver versions, a fairly large base of supersampling compatibility settings was never decoded). But we can't rule out that some applications are detected in order to implement tricks undesirable for users, in particular to activate aggressive optimizations for a particular benchmark. We will find out whether this is so a bit later; for now, let's return to the procedure the driver uses to calculate the checksum that identifies applications. The driver uses this procedure not only to identify the running application by creating a 128-bit checksum when the context is created, but also to form additional checksums. We found references to this procedure, and very suspicious checksum calculations, in the handlers of many D3DDP2OP_... tokens. Let me digress a little to explain what D3DDP2OP_... tokens are.
If you have some experience programming with the Direct3D API, you probably know that an application communicates with a Direct3D accelerator through the COM interface IDirect3DDevice..., but do you know when and how the data the application passes to this interface reaches the Direct3D driver? Calls to many methods of this interface are translated by the API into special control words (tokens) that are placed into an internal command buffer, and the contents of this buffer are the input data for the Direct3D driver. For example, when a pixel shader is created with CreatePixelShader, the API puts into the command buffer the D3DDP2OP_CREATEPIXELSHADER token followed by a structure specific to this token that contains the code of the shader to be created. In other words, the internal command buffer contains D3DDP2OP_... tokens identifying the commands a given Direct3D application issues, together with the data supplied by the application. The command buffer is processed by the Direct3D driver, or more precisely by its D3dDrawPrimitives2 function. This stage, where the driver processes the buffer's tokens, is the most convenient place to detect applications by analyzing the data supplied: here the driver can identify the vertex and pixel shaders created by an application, analyze the format and contents of textures as they are loaded into video memory, and recognize many other specific things. That is why, to find the parts of the code the driver uses to identify certain applications, we should focus on the command buffer processor implemented in the body of the D3dDrawPrimitives2 function. As we said, we noticed suspicious checksum calculations in the functions that process certain D3DDP2OP_... tokens. Unfortunately, these included D3DDP2OP_CREATEPIXELSHADER and D3DDP2OP_CREATEVERTEXSHADER, where the checksum was calculated over the tokenized input shader code.
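A minimal sketch of the kind of check described above: walking a command buffer and hashing the tokenized code of every shader-creation command. The `Op` values, the `Command` layout and the table of "known" shaders are hypothetical simplifications (the real D3DDP2OP_* constants and command structures are defined in the Windows DDK headers), and FNV-1a again stands in for the driver's undisclosed checksum.

```python
import struct
from dataclasses import dataclass
from enum import IntEnum


class Op(IntEnum):
    # Hypothetical stand-ins for D3DDP2OP_* tokens; the real values differ.
    CREATEVERTEXSHADER = 1
    CREATEPIXELSHADER = 2
    DRAWPRIMITIVE = 3


@dataclass
class Command:
    op: Op
    payload: list[int]  # for shader tokens: the shader's 32-bit DWORD tokens


def fnv1a64(data: bytes) -> int:
    """64-bit FNV-1a, standing in for the driver's real checksum."""
    h = 0xCBF29CE484222325
    for byte in data:
        h ^= byte
        h = (h * 0x100000001B3) & 0xFFFFFFFFFFFFFFFF
    return h


def shader_checksum(tokens: list[int]) -> int:
    """Checksum the tokenized shader code as little-endian DWORDs."""
    return fnv1a64(struct.pack(f"<{len(tokens)}I", *tokens))


def process_command_buffer(commands: list[Command], known_shaders: dict[int, str]) -> list[str]:
    """Walk the buffer token by token and report recognized shaders."""
    recognized = []
    for cmd in commands:
        if cmd.op in (Op.CREATEVERTEXSHADER, Op.CREATEPIXELSHADER):
            tag = known_shaders.get(shader_checksum(cmd.payload))
            if tag is not None:
                recognized.append(tag)  # a cheating driver could swap the shader here
        # every other token would be dispatched to its own handler
    return recognized


# Illustrative ps_1_1 shader: version token, "mov r0, v0", end token.
PS_TOKENS = [0xFFFF0101, 0x00000001, 0x800F0000, 0x90E40000, 0x0000FFFF]
KNOWN = {shader_checksum(PS_TOKENS): "known pixel shader #1"}

if __name__ == "__main__":
    buf = [Command(Op.CREATEPIXELSHADER, PS_TOKENS), Command(Op.DRAWPRIMITIVE, [])]
    print(process_command_buffer(buf, KNOWN))  # ['known pixel shader #1']
```

Because the checksum covers the exact token stream, even a functionally harmless change to a shader's code makes a lookup of this kind fail.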
This means that the driver is trying to identify particular pixel or vertex shaders, and thereby particular Direct3D applications. The scandal around 3DMark2003 and the Detonator FX proved that the driver identifies and replaces some pixel and vertex shaders in this benchmark, but it's puzzling that the number of checksums calculated for vertex shaders alone is about 50, far more than the number of 3DMark2003 optimizations found and made public by Futuremark. That is why we decided to block the checks we had found in order to measure the pure performance of the Direct3D driver. The well-structured Detonator code helped us here: since the driver uses the same checksum calculation procedure in all of its mechanisms for identifying Direct3D applications, we created a small patch-script, NVAntiDetector, that slightly modifies the calculation algorithm by changing its initial seed value. The procedure then produces checksums different from the original ones, which blocks all of the driver's detection methods, including command-line analysis. Of course, we can't guarantee that this patch-script disables every trick, and it may also block legitimate optimizations and workarounds for problems in certain applications. We admit that, but it's not an obstacle, because our aim is to measure performance in benchmarks, and a driver should contain no detection algorithms for popular synthetic test applications that are used only for performance measurement. The results of the Detonator FX tested with the NVAntiDetector exceeded all our expectations: the GPU family led by the GeForceFX 5900 Ultra became much slower in almost all synthetic and gaming Direct3D benchmarks. The performance drop in 3DMark2003 is far larger than the one caused by patch 330, which blocked all the Detonator FX cheats for this benchmark that Futuremark had detected.
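Why changing a single seed defeats every check at once can be shown with a small sketch. It assumes, as a stand-in for the undisclosed driver routine, a seeded 64-bit FNV-1a; `ORIGINAL_SEED`, `PATCHED_SEED` and the sample inputs are hypothetical. The base holds precomputed checksums, so once the shared routine starts from a different seed, freshly computed values no longer match the stored entries.

```python
MASK_64 = (1 << 64) - 1
FNV_PRIME_64 = 0x100000001B3
ORIGINAL_SEED = 0xCBF29CE484222325  # hypothetical value baked into the driver
PATCHED_SEED = ORIGINAL_SEED ^ 1    # any different value would do


def checksum64(data: bytes, seed: int) -> int:
    """Seeded 64-bit FNV-1a. Each step is invertible for a fixed input byte,
    so the same data hashed from two different seeds never collides."""
    h = seed & MASK_64
    for byte in data:
        h ^= byte
        h = (h * FNV_PRIME_64) & MASK_64
    return h


def build_base(items: list[bytes]) -> set[int]:
    """Checksums precomputed with the original seed and shipped as constants."""
    return {checksum64(item, ORIGINAL_SEED) for item in items}


def is_detected(item: bytes, base: set[int], seed: int) -> bool:
    """What each detection site does: hash the input, look it up."""
    return checksum64(item, seed) in base


ITEMS = [b"3dmark2001.exe", b"tree.vsh tokens", b"window caption"]  # illustrative
BASE = build_base(ITEMS)

if __name__ == "__main__":
    print([is_detected(i, BASE, ORIGINAL_SEED) for i in ITEMS])  # [True, True, True]
    print([is_detected(i, BASE, PATCHED_SEED) for i in ITEMS])   # [False, False, False]
```

The XOR with 1 is arbitrary. What matters is that one shared routine means one patch point, so command-line, shader and other checks are all disabled together; a chance match against some other stored entry remains possible in principle but is negligible for 64-bit values.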
Only the beta version of our RightMark3D showed the same scores, but this benchmark is not very popular yet, and its shaders are unknown to the Direct3D driver. We are currently working on a full-scale review of production cards based on the GeForceFX 5900 Ultra, which will also cover the operation of this card with the NVAntiDetector. Now let's look at the scores in a benchmark that is becoming a thing of the past. As you may guess, we mean the predecessor of 3DMark2003, 3DMark2001 SE. You may say that this benchmark is obsolete and there is no point in testing with it. But remember that Futuremark always tried to design its benchmarks with an eye to the future, so its complexity corresponds to what we see in today's games. Moreover, looking for tricks in this benchmark will help us prove or disprove the statements NVIDIA made right after the release of Futuremark's patch 330, which eliminates the Detonator FX cheats for 3DMark2003. As you may remember, the company tried to justify its tricks by saying that the 3DMark2003 tests did not reflect what we would see in future games and that 3DMark2003 discredited its products. So let's look at Futuremark's previous product, which didn't provoke such a negative reaction and which NVIDIA didn't consider biased. To reproduce our test results, you will need:

To use the scripts, you need to install RivaTuner and start it at least once so that it registers itself as the handler of RTS files. Then you can launch the required script from Windows Explorer to patch the Direct3D driver's distribution files and install the patched driver. This is enough to block all the application detection mechanisms we found in the Direct3D driver. Now let's see whether the 3DMark2001 results change after installing the NVAntiDetector. Testbed:

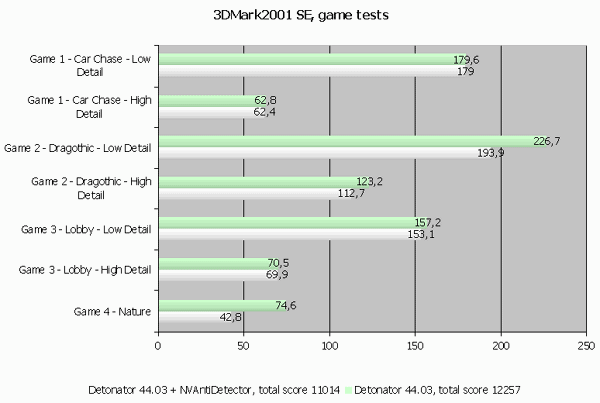

It's clear that tricks in synthetic benchmarks are nothing new for NVIDIA, and the optimization for 3DMark2003 is no exception: with benchmark detection blocked, the total 3DMark2001 score drops by almost 10%. The total score is based on the game test results and is calculated by the following formula:

3DMark score = (Game1LowDetail + Game2LowDetail + Game3LowDetail) * 10 + (Game1HighDetail + Game2HighDetail + Game3HighDetail + Game4) * 20

The formula shows that about half of the difference between the original and the modified driver, 20 * (74.6 - 42.8) = 636 points, comes from the Game4 results. What does the driver do to raise the score so dramatically when it detects this scene? Let's take a look at test 4 and try to find where the driver could save on calculations. Most likely, the rendering speed of the Nature scene depends heavily on the animated grass and tree leaves, which make up a considerable part of the scene geometry. They are the main candidates for optimization, and the driver would save on them first. To verify this, we compared the image quality in screenshots taken with the original and modified drivers (all full-size screenshots are BMP files of about 2.5 MB each, packed into RAR archives of 1.3 to 3 MB):
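The scoring formula quoted above is simple enough to express directly. The function names are hypothetical; the sketch only restates the formula and the Game4 arithmetic from the text, using the Game4 frame rates given there (74.6 fps with the original driver, 42.8 fps with the modified one).

```python
def mark2001_score(low_detail: list[float], high_detail: list[float], game4: float) -> float:
    """3DMark2001 SE total score from game-test frame rates.

    low_detail:  Game1-3 Low Detail results (fps), weighted by 10
    high_detail: Game1-3 High Detail results (fps), weighted by 20
    game4:       Game4 (Nature) result (fps), weighted by 20
    """
    if len(low_detail) != 3 or len(high_detail) != 3:
        raise ValueError("expected three Low Detail and three High Detail results")
    return sum(low_detail) * 10 + (sum(high_detail) + game4) * 20


def game4_share(fps_original: float, fps_patched: float) -> float:
    """Points of the total score attributable to a change in Game4 alone."""
    return 20 * (fps_original - fps_patched)


if __name__ == "__main__":
    # Game4 frame rates with the original and the modified driver, from the text.
    print(round(game4_share(74.6, 42.8), 1))  # 636.0
```

Because Game4 enters the total only through the weight of 20, the Game4 part of the score change is independent of the other six results.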

As with NVIDIA, the scenes look identical at first glance. But again we examined the screenshots carefully: again we used Adobe Photoshop to calculate the per-pixel difference between them and then enhanced it with the Auto Contrast function. The result reminds me of the picture I saw above when analyzing the optimizations in NVIDIA's drivers. It's obvious that ATI also pulls up its results through alternative rendering of the leaves and grass, i.e. of the objects that fill the scene and are the bottleneck of this test. But the character of the difference is not the same as in NVIDIA's case: the optimizations seem to take place during polygon texturing rather than at the transformation and lighting stage. Since the difference is most noticeable at the edges of the leaves, we can say that the driver detects the Nature scene and then replaces the texture format of the leaves and grass with a more advantageous one (saving on the bit depth of the alpha channel), either by forcibly compressing an uncompressed transparent texture or by repacking DXT3 textures into the DXT1 format. To test our hypothesis, we changed the texture format settings in 3DMark2001 and examined the appearance of the leaves and grass with different formats. As expected, with 16-bit textures the performance dropped considerably, and the speed was even lower than with the more resource-hungry 32-bit format. Finally, we looked through the code to find the place where the Nature scene of 3DMark2001 is detected. It turned out that the Catalyst driver detects the scene when the Direct3D application creates three successive textures of a specific format and size. Having finished the analysis of the Catalyst driver, we decided to look inside ati3d2ag.dll, the Direct3D driver for R200 processors, because it was this chip that had given us the idea of possible optimizations in ATI's driver.
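The detection described above, recognizing a scene by three textures of specific formats and sizes created in succession, can be sketched as a sliding-window match over texture-creation events. The `TextureDesc` type, the `SequenceDetector` class and the three signature entries are hypothetical; the actual formats and sizes the Catalyst driver checks are not given in this article.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class TextureDesc:
    width: int
    height: int
    fmt: str  # e.g. "DXT3", "A8R8G8B8"


# Hypothetical signature: the real formats and sizes are not disclosed.
NATURE_SIGNATURE = (
    TextureDesc(256, 256, "DXT3"),
    TextureDesc(256, 128, "DXT3"),
    TextureDesc(512, 512, "A8R8G8B8"),
)


class SequenceDetector:
    """Flags a scene once its signature textures are created back to back."""

    def __init__(self, signature: tuple[TextureDesc, ...]):
        self.signature = signature
        self.recent = deque(maxlen=len(signature))  # last N creations only
        self.triggered = False

    def on_create_texture(self, desc: TextureDesc) -> bool:
        # Compare the most recent N creations with the signature as a whole.
        self.recent.append(desc)
        if tuple(self.recent) == self.signature:
            self.triggered = True  # a driver could switch texture handling here
        return self.triggered


if __name__ == "__main__":
    det = SequenceDetector(NATURE_SIGNATURE)
    events = [TextureDesc(64, 64, "A8R8G8B8"), *NATURE_SIGNATURE]
    print([det.on_create_texture(e) for e in events])  # [False, False, False, True]
```

Because the match is on exact formats, sizes and order, any texture created in between, or any change to one of the three, would keep a detector of this kind from firing.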
As with ati3duag.dll, the code reveals numerous attempts to detect applications, and here they are even more aggressive. Even without a patch-script, we could check this the same way, by altering the code of the tree.vsh shader. The result on the same system with a RADEON 8500LE video card is rather sad: a drop of about 26% (from 42 to 31 FPS) in the same test 4. Nature again? Déjà vu? Optimized vertex shaders again? So, let's sum up. You may ask what's so bad about both companies trying to boost performance in 3DMark. In some cases, for example Game test 2 (Dragothic) with the Detonator FX, there is no difference in quality at all. And even where a difference is noticeable, as in Game test 4 (Nature), the image doesn't get any worse. So why is it bad? The answer is simple. First of all, every optimization we detected and blocked works in only one benchmark, which shows that the programmers spent a lot of time analyzing its behavior and specifics, the bottlenecks of particular tests and the parts of the scene where processing resources can be saved without degrading image quality. But any optimization that is activated when a benchmark is identified is based on careful analysis of that benchmark's specifics and rigidly tied to a particular scene; such optimizations make benchmarks pointless, because the results no longer reflect real performance in most other applications. Synthetic benchmarks are not meant to reveal who can render a particular frame faster by culling one hidden object or another or by using alternative rendering procedures. Benchmarks have a single aim: to measure how fast a given video card executes a strictly defined set of calculations. It's good when driver developers, who know the peculiarities of their chips much better than third-party software developers, notice an inefficient shader and can noticeably speed it up, even by altering image quality.
Although we don't welcome this approach, such optimizations may sometimes be acceptable, but only in games. They are not acceptable in test applications intended exclusively for measuring speed. Otherwise it would make no sense to use benchmarks to compare the performance of video cards from different manufacturers, because the volume of calculations would differ.

Conclusion

Unfortunately, the conclusion is rather distressing. First of all, I want to ask Futuremark where they were before and why they started fighting cheating only recently. Weren't they aware of the aggressive optimizations vendors made for their benchmark? I don't think so. Were there no reasons or facts before? There were: just recall the case when the splash screen went off in the Detonator, or the notorious SiS Xabre drivers. Weren't they qualified enough to locate and eliminate the cheats? I don't believe it. It took us only one day to find and eliminate the tricks in the drivers of the two largest vendors, even though we didn't have the source code of 3DMark2001. We don't want to believe that participation in a beta program automatically grants the right to such optimizations, but this is the only explanation we have. We hope we are wrong. Secondly, it's a sorry sight to see vendors stubbornly trying to outrun one another by unfair means. Here is the history of optimizations for our ill-fated Nature test: the announcement and release of the R200; criticism of the R200's shader performance based on comparison with NV20 scores; optimizations for Nature in ATI's drivers; the release of the R300 with the same boosting of performance in Nature; and NVIDIA's response in the form of Detonator 40.xx, which raised the speed in the same notorious test, allegedly thanks to higher shader performance... Unfortunately, this race has no end. I don't like hearing the pathetic announcements both companies make whenever one of them is caught red-handed. During the Quake/Quack scandal, NVIDIA stated that it never used application-specific optimizations and advocated only general approaches to optimization.
In the disputes around 3DMark2003, ATI played the hurt child and was inconsistent: first it repented and promised to remove its optimizations, saying its programmers had never used rendering tricks to get higher benchmark scores; then it promised to optimize its drivers to catch up with its impious competitor. A sad thing. Do you still believe there are honest players in this market? Unfortunately, none of the vendors is able to stop such an unfair race. The final scores of popular benchmarks have little in common with actual performance, yet the sales of both ATI and NVIDIA depend directly on these scores, so they will use any means to raise them. Do we have any chance of seeing a fair situation in benchmarks? Probably only in two cases: when only one player remains in the market, or when one of the competitors is so much weaker that inflating the scores is pointless. At the moment we have two graphics processors with similar parameters from different manufacturers, and each company is tempted to take first place. That is why benchmarks will reflect a competition between driver developers optimizing their code for the highest possible score, even at the cost of alternative rendering, rather than the real performance of video cards. What's next? Knowing well how both companies react to such patch-scripts, and taking into account the destructive methods they used against SoftQuadro and SoftR9x00, we are almost sure that in the next driver versions they will try to prove that the scripts work incorrectly and disable a vital part of the code.
NVIDIA will probably scatter all the existing checks across the body of the Direct3D driver to make them harder to locate and disable, and will use the checksum procedure modified by the NVAntiDetector to calculate a checksum over a fixed pattern embedded in the driver; if the result doesn't match the original, the driver will simulate certain problems. Will the Direct3D application detection mechanisms disappear from the Detonator and Catalyst drivers? Unlikely. Judging by the test results of the drivers released after the 3DMark2003 scandal, neither company is going to step back. Thus, NVIDIA's new Detonator FX 44.6x is able to detect all the changes Futuremark made to the shader code in patch 330, and even delivers higher performance than before the patch. Obviously, NVIDIA decided to stick to the tactic of aggressive optimization regardless of most users' negative attitude towards such things. Nevertheless, the NVAntiDetector script still makes the GeForceFX 5900 Ultra much slower in this benchmark. ATI also released a new build of the Catalyst drivers in which it really removed the two optimizations for 3DMark2003 revealed by Futuremark. It proudly announced this in a press release, but the optimizations for the previous benchmark are still there. The company seems to follow the principle "innocent until proven guilty", and its fine gesture of removing undesirable optimizations only covers the cheats it had its nose rubbed in. It's very convenient to be partially honest: everyone is satisfied. I really want to believe that this review will influence the attitude of video card manufacturers towards consumers. I think recent events clearly show that some things are much more important than 3DMark scores, namely the reputation and trust of users, which are hard to gain and easy to destroy.
Actually, we hope we are wrong and the real situation is not that sad, though that is very unlikely. That is why we welcome comments from all the parties this research concerns. Note that while we were preparing this review for publication, ATI contacted us and assured us that the code fragments for application detection are used exclusively to work around problems in the applications themselves. Nevertheless, the question of 3DMark2001 remained unanswered...

Aleksei Nikolaichuk AKA Unwinder (unwinder@ixbt.com)

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.