
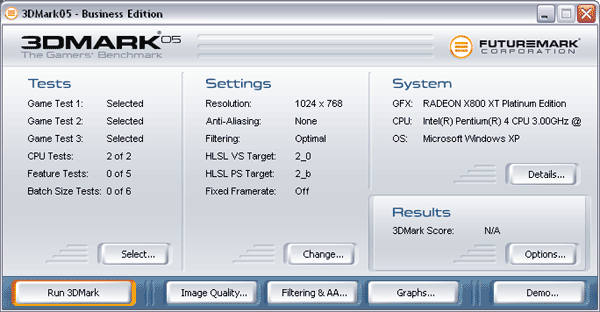

So, 3DMark05 has been released, has taken root and is now in wide use.

It's high time to sum up what we know and think about this test and give a short but informative description of its features. Let's start from the end, or rather from the side. Besides the game and synthetic tests and the settings, the upper right part of the window offers system information: the Details... button opens it as a compact and convenient HTML file. The following reports are available:

This report does not claim to be complete, but it covers all the important parameters, including processor, video card and memory frequencies, and contains a lot of useful information, especially for users who don't have dedicated information or tuning utilities such as RivaTuner.

The Settings section is in the middle of the window. The settings are available only in the commercial versions of the benchmark: you have to register your copy as PRO or Business to access them. Otherwise, all tests run with default settings at the default resolution. The settings include:

The Results section is in the bottom right corner; its purpose is easy to guess from its title. The free version only lets you save the results file and view it online, while the commercial versions can also export results to Excel. Speaking of commercial versions, there are two license types for 3DMark05: Professional (about $20 for the downloadable version) and Business (about $240). The former unlocks all the settings and export options that are blocked in the free version. The latter also allows running the tests from the command line, which is very important when you test large amounts of diverse hardware, for example for something like our 3Digest. Owners of the previous version pay only $10 for the PRO license.

A new Graphs function has appeared at the bottom, to the left of the results. It collects data while the tests run and then plots a chosen parameter over time. Besides the standard settings, you can select one of the following parameters for each test:

The result is then plotted in Excel (which this function requires), showing how the parameter changed over the course of the test. This feature is intended for professional testers and game developers rather than ordinary users: it helps find the weak points of accelerators and choose render parameters that make the most of their resources. However, developers have more convenient tools for such analysis, provided directly by the chip manufacturers. For example, NVPerfHUD can read the hardware performance counters of individual accelerator units and gives a more detailed and precise picture of how the various subsystems are loaded. In any case, this feature is useful at least for evaluating the FPS spread in the tests and how much of it the drivers and hardware are to blame for.

There are also modes for testing Image Quality and Filtering & AA quality. Nothing new here: everything works as in the previous version. If you have the latest (summer 2004) DirectX SDK installed, you also get the option to render reference images entirely in software with the DirectX reference rasterizer (Ref-Rast).

The test section is on the left; as you would expect, this is where you select which tests to run. There are three game tests, two CPU tests, a Fill Rate test (single texturing and multitexturing), a pixel shader test, a two-part vertex shader test, and a test of efficiency versus the size of the batches sent to the accelerator for rendering (with 6 batch sizes). Let's describe each of them in detail.

Game tests

First, note one important point: all game tests use the same engine, so you shouldn't expect any notable optimization for a particular game genre. It is rather a generalized scene-rendering process based on several lighting and shadow calculation models, using the same HLSL source shaders compiled for the selected profile.
The engine itself can be considered state-of-the-art. It uses cube maps 512 pixels on a side to calculate lighting from light sources; high-detail perspective shadow maps (2048x2048, 32-bit floating-point format); and, if the hardware supports them, DST (Depth Stencil Textures, i.e. hardware depth maps used to render shadows) in D24X8 format, also 2048x2048. Texture compression is used widely: textures, normal maps and transparency maps are compressed with different methods, all of them DXT variants; ATI's 3Dc is not yet supported. In demo mode, rendered frames in all scenes are post-processed to add cinematic effects such as depth of field and glow, but post-processing is disabled when the tests are run. Now let's proceed to the tests.
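To put these sizes in perspective, here is a back-of-the-envelope calculation of the video memory such surfaces take. R32F and D24X8 both use 4 bytes per texel, and DXT stores every 4x4 texel block in 8 bytes (DXT1) or 16 bytes (DXT3/DXT5). The 4-byte cube-map texel and the 1024x1024 example texture are assumptions for illustration, and mipmaps are ignored.

```python
# Rough video memory footprint of the surfaces mentioned above (no mipmaps).
# Assumption: the cube map uses 4 bytes per texel; the 1024x1024 texture is
# a hypothetical example, not a 3DMark05 asset.

MIB = 1024 * 1024


def surface_bytes(width: int, height: int, bytes_per_texel: int) -> int:
    """Size of one uncompressed 2D surface."""
    return width * height * bytes_per_texel


def dxt_bytes(width: int, height: int, bytes_per_block: int) -> int:
    """DXT stores every 4x4 texel block in a fixed number of bytes:
    8 for DXT1, 16 for DXT3/DXT5."""
    blocks_x = max(1, (width + 3) // 4)
    blocks_y = max(1, (height + 3) // 4)
    return blocks_x * blocks_y * bytes_per_block


shadow_map_r32f = surface_bytes(2048, 2048, 4)   # 32-bit float per texel
shadow_map_d24x8 = surface_bytes(2048, 2048, 4)  # 24-bit depth + 8 unused bits
cube_map_512 = 6 * surface_bytes(512, 512, 4)    # six faces, assumed 4 bytes/texel

rgba8_1024 = surface_bytes(1024, 1024, 4)        # uncompressed 32-bit texture
dxt1_1024 = dxt_bytes(1024, 1024, 8)
dxt5_1024 = dxt_bytes(1024, 1024, 16)

if __name__ == "__main__":
    print(f"R32F shadow map 2048x2048:  {shadow_map_r32f / MIB:.1f} MiB")   # 16.0
    print(f"D24X8 shadow map 2048x2048: {shadow_map_d24x8 / MIB:.1f} MiB")  # 16.0
    print(f"Cube map, 512 per edge:     {cube_map_512 / MIB:.1f} MiB")      # 6.0
    print(f"1024x1024 RGBA8 / DXT1 / DXT5: {rgba8_1024 / MIB:.1f} / "
          f"{dxt1_1024 / MIB:.1f} / {dxt5_1024 / MIB:.1f} MiB")  # 4.0 / 0.5 / 1.0
```

DXT1 cuts a 32-bit texture to one eighth of its size and DXT5 to one quarter, which helps explain why the engine compresses textures, normal maps and transparency maps so widely.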

Return To Proxycon

In many respects, the balance of the first test's scene matches that of modern first-person shooters: indoor fights, local dynamic lights, as many calculations as possible done by the accelerator and as few as possible by the CPU. Lighting calculations are somewhat simplified. Metal surfaces use normal and reflection maps. There are 8 light sources: two complex directional ones, the rest point lights.

FireFly Forest

A night forest with lots of vegetation and a flying firefly that illuminates the leaves of numerous plants. There is a lot of object physics modelling: the flight, the waving of the leaves. There is one directional light, the moon; the firefly is a point light. This test uses perspective shadow maps, which can improve the quality of shadow-map shadows when the receiving surface is far from the object casting the shadow, traditionally a weak point of shadow maps. On open scenes like this one, the technique gives noticeably better quality. The test also uses normal maps and diffuse lighting maps with detail textures, plus procedural light-scattering calculations for the sky. On the whole, this test is much heavier on the CPU.
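Whatever the variant (perspective shadow maps, plain floating-point depth maps or DST), shadow mapping rests on one per-pixel comparison: the scene is first rendered from the light to store the nearest depth per texel, then each visible point's own depth from the light is compared with that stored value. Perspective shadow maps change how the light's projection is set up so that shadow-map texels are distributed more favorably; the comparison itself stays the same, and with DST the hardware performs it during the texture fetch. The sketch below is hypothetical (its tiny depth map and bias value are made up) and is not 3DMark05's shader.

```python
# Minimal sketch of the shadow-map depth test.
# SHADOW_MAP holds, per texel, the depth of the surface nearest to the light,
# in [0, 1]. The data and the bias value are hypothetical.

SHADOW_MAP = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 0.3, 0.3, 1.0],  # an occluder (say, a leaf) at depth 0.3
    [1.0, 0.3, 0.3, 1.0],
    [1.0, 1.0, 1.0, 1.0],
]


def in_shadow(shadow_map, u, v, depth_from_light, bias=0.005):
    """Return True if a point is shadowed.

    u, v: the point's shadow-map coordinates in [0, 1], obtained by
    projecting it with the light's view-projection matrix.
    depth_from_light: the point's own depth as seen from the light.
    The bias keeps a surface from shadowing itself ("shadow acne").
    """
    size = len(shadow_map)
    x = min(int(u * size), size - 1)
    y = min(int(v * size), size - 1)
    # Shadowed if something closer to the light was recorded at this texel.
    return depth_from_light - bias > shadow_map[y][x]


if __name__ == "__main__":
    print(in_shadow(SHADOW_MAP, 0.5, 0.5, 0.7))  # True: behind the occluder
    print(in_shadow(SHADOW_MAP, 0.1, 0.1, 0.7))  # False: nothing in front
    print(in_shadow(SHADOW_MAP, 0.5, 0.5, 0.3))  # False: the occluder itself
```

Shadow-map resolution matters because each texel stands for a patch of the scene; perspective shadow maps aim to spend those texels where the viewer will notice them.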

Canyon Flight

A flight along a canyon: a vast open space with lots of terrain and sky, which is rather demanding in terms of pixel shaders. In fact, the balance of this test is close to that of a flight simulator with high image quality. The water surface is rendered with reflection and refraction maps and reflects all the surroundings. Moreover, Fresnel coefficients for the water are calculated per pixel, which is fairly expensive. There is only one light source, the sun, which lights the vast open area and casts shadows everywhere. This test is intensive in both fill rate and pixel shader calculations.

Formula to calculate the final result:

This formula treats all the tests as equally "valuable" and tries to smooth out the lead of powerful accelerators over average ones. That is reasonable: in real applications, the difference between 100 and 200 fps matters much less than the difference between 30 and 60, and the formula reflects this.

Synthetic tests

CPU

To evaluate CPU performance, two of the game tests are run at the minimum resolution of 640x480 using the minimum shader profile, Shaders 2.0, and vertex shaders are moved to the CPU. You can't call it a pure CPU test, because in real games the CPU would be busy with other tasks, such as AI and physics calculations, rather than with software emulation of vertex shaders. Still, parallel tasks are used to generate the initial data, so multi-CPU, multi-core or HyperThreading systems may get an advantage.
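For readers unfamiliar with software vertex processing, the core of a simple vertex shader is a per-vertex matrix transform, and per-vertex work like this splits naturally into independent chunks that several threads can process. The sketch below is hypothetical: the matrix, the chunking scheme and the function names are made up for illustration and are not 3DMark05's code. In CPython the GIL keeps pure-Python threads from doing this math in parallel, so the example shows the partitioning, not a real speedup.

```python
# Sketch of CPU-side ("software") vertex processing: each position is
# multiplied by a 4x4 matrix, and the vertex array is split into contiguous
# chunks handled by worker threads. Hypothetical code for illustration.
from concurrent.futures import ThreadPoolExecutor


def transform(matrix, vertex):
    """Row-major 4x4 matrix times the column vector (x, y, z, 1)."""
    x, y, z = vertex
    v = (x, y, z, 1.0)
    return tuple(sum(matrix[r][c] * v[c] for c in range(4)) for r in range(4))


def transform_chunk(matrix, chunk):
    return [transform(matrix, vertex) for vertex in chunk]


def transform_parallel(matrix, vertices, workers=2):
    """Split vertices into up to `workers` chunks, transform each chunk in
    its own thread, and concatenate the results in the original order."""
    if not vertices:
        return []
    size = -(-len(vertices) // workers)  # ceiling division
    chunks = [vertices[i:i + size] for i in range(0, len(vertices), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda chunk: transform_chunk(matrix, chunk), chunks))
    return [vertex for chunk in results for vertex in chunk]


# Hypothetical matrix: translation by (1, 2, 3).
TRANSLATE_1_2_3 = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 2.0],
    [0.0, 0.0, 1.0, 3.0],
    [0.0, 0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    print(transform_parallel(TRANSLATE_1_2_3, [(0, 0, 0), (1, 1, 1), (2, 0, 0)]))
    # [(1.0, 2.0, 3.0, 1.0), (2.0, 3.0, 4.0, 1.0), (3.0, 2.0, 3.0, 1.0)]
```

Because the chunks share no state, adding cores adds throughput in a native implementation, which is why multi-CPU, multi-core and HyperThreading systems can benefit from this kind of work.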

Fill Rate

This test has not changed (there are cosmetic differences, but the algorithm is the same). It measures texture mapping on large objects, both single texturing and multitexturing.
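Measured fill rates are typically compared against a theoretical peak, which is simple arithmetic: core clock times the number of pixel pipelines, divided by the clocks each pixel needs when it samples more textures than a pipeline can apply per clock. A minimal sketch under an idealized model; the chip parameters are hypothetical, and memory bandwidth, which often limits real results, is ignored.

```python
# Idealized peak fill rate. The chip parameters below are hypothetical, and
# the model ignores memory bandwidth, which often limits real results.


def pixel_fill_rate(core_mhz, pipelines):
    """Peak pixels per second: one pixel per pipeline per clock."""
    return core_mhz * 1_000_000 * pipelines


def multitexture_fill_rate(core_mhz, pipelines, tmus_per_pipeline, textures):
    """Pixels per second (rounded down) when each pixel samples `textures`
    textures. Assumes a pipeline applies `tmus_per_pipeline` textures per
    clock and loops back for the rest: ceil(textures / tmus) clocks per pixel.
    """
    clocks_per_pixel = -(-textures // tmus_per_pipeline)  # ceiling division
    return pixel_fill_rate(core_mhz, pipelines) // clocks_per_pixel


if __name__ == "__main__":
    # Hypothetical chip: 400 MHz, 16 pipelines, 1 texture unit each.
    print(pixel_fill_rate(400, 16))               # 6400000000 pixels/s
    print(multitexture_fill_rate(400, 16, 1, 8))  # 800000000 pixels/s
```

If a measured result falls well short of this figure, the shortfall usually points to memory bandwidth rather than to the pipelines themselves.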

Pixel shaders

This test renders a fragment of the canyon wall from the third game test. It involves intensive pixel shader calculations that model many effects at the pixel level. Note, however, that this material is not computed entirely as a procedural texture: it uses many different maps, so the test depends not only on pixel shader performance but also, considerably, on video memory performance and texture caching efficiency. For a more precise evaluation of the balance between the accelerator's units, you should use more targeted synthetic tests that load exactly one subsystem.
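Why such a test measures more than the shader units can be seen with a simple bottleneck model: if ALU work, texture fetches and memory traffic overlap, the slowest of the three sets the frame time. The sketch below is a hypothetical, idealized model with made-up throughput figures, not a description of any real chip or of 3DMark05's shader.

```python
# Idealized bottleneck model: ALU work, texture fetches and memory traffic
# proceed in parallel, so the slowest one sets the frame time.
# All figures are hypothetical; rates are per nanosecond.
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Gpu:
    alu_ops_per_ns: float
    texture_fetches_per_ns: float
    memory_bytes_per_ns: float  # 1 byte/ns equals 1 GB/s


def frame_time_ns(gpu, pixels, alu_ops, fetches, bytes_per_fetch, cache_hit_rate):
    alu = pixels * alu_ops / gpu.alu_ops_per_ns
    texturing = pixels * fetches / gpu.texture_fetches_per_ns
    # Only texture cache misses generate memory traffic.
    memory_bytes = pixels * fetches * bytes_per_fetch * (1 - cache_hit_rate)
    memory = memory_bytes / gpu.memory_bytes_per_ns
    return max(alu, texturing, memory)


# Hypothetical workload: 1M pixels, 40 ALU ops and 8 four-byte fetches each.
WORKLOAD = dict(pixels=1_000_000, alu_ops=40, fetches=8, bytes_per_fetch=4)
GPU = Gpu(alu_ops_per_ns=100, texture_fetches_per_ns=8, memory_bytes_per_ns=10)

if __name__ == "__main__":
    base = frame_time_ns(GPU, cache_hit_rate=0.5, **WORKLOAD)
    faster_alu = frame_time_ns(replace(GPU, alu_ops_per_ns=200),
                               cache_hit_rate=0.5, **WORKLOAD)
    better_cache = frame_time_ns(GPU, cache_hit_rate=0.75, **WORKLOAD)
    print(base, faster_alu, better_cache)  # 1600000.0 1600000.0 1000000.0
```

In this made-up case, doubling ALU throughput changes nothing because memory is the limit, while a better cache hit rate helps until the texture fetch rate becomes the bottleneck.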

Vertex shaders

The test consists of two parts. The first runs a relatively simple vertex shader on models with a great number of vertices, so it measures near-peak throughput and typical accelerator performance on high-detail geometry. The second, conversely, runs rather complex and long vertex shaders, so it shows how the vertex units perform on complex tasks.

Batch processing

This is a very interesting test, though it is more useful to game developers than to ordinary gaming hardware enthusiasts. It checks how the accelerator's efficiency depends on the average size of the data batches. Too many batches mean too much CPU load and extra overhead for synchronizing the accelerator with the program. Too large a batch size limits rendering flexibility, since textures and shaders cannot be changed within a batch. The optimal balance is up to the developer and may differ between accelerators. In any case, such things are best checked on real engines and games rather than on the 3DMark05 engine, which is why we think this test is somewhat redundant and makes little sense next to the game tests. Especially as we cannot control the content and character of the workload: we only get preselected parameters and can change the batch size. Let's not be too strict, though. The test is included in the benchmark, you can run it or skip it as you wish, and you can always interpret its results to your advantage. It's much worse not to have such a test at all :).

Test Results

Testbed configurations

2. A computer based on an overclocked Pentium 4 (Prescott) running at 3200 MHz

Each testbed runs Windows XP Professional Service Pack 2 and DirectX 9.0c. We used ViewSonic P810 (21") and IIYAMA Vision Master Pro 514 (22") monitors. Drivers: ATI 6.497, NVIDIA 70.90.

Conclusions

So, this benchmark is definitely a success. On the one hand, a unified engine may fail to reflect certain aspects of different game genres; on the other hand, that's what real games are for. On the whole, these game tests are ahead of their time rather than merely state-of-the-art, which is exactly what's needed. Using HLSL looks justified and is the most probable direction for all future games, as is the minimum requirement of Shaders 2.0. The strict system requirements (a 2 GHz CPU with SSE, the latest DirectX version, an accelerator supporting Shaders 2.0, 512 MB of RAM) are also justified: that is a modern gaming computer. This benchmark aims at the future, and that's exactly how it will be used; even the next generation of accelerators can be tested and evaluated with it. Several shadow techniques and the option to compile shaders for the various available profiles ensure maximum use of hardware resources and help avoid a war of standards, with the consequent accusations of bias toward one company or another and its technologies. The synthetic tests look rather "semi-synthetic", but they give a first-approximation picture of the chip's pixel and vertex subsystems. The CPU test and the batch size test appear to be of limited relevance. You can find more detailed comparisons of various video cards in our 3Digest.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.