Rendering of an infinite, changeable game universe based on the ray tracing method. An ideal 3D engine for multiplayer RPGs

Introduction

In this article we will analyze the traditional technology for rendering game worlds, which is based on drawing triangles with a z-buffer. We will discuss its weaknesses and an alternative technology based on the backward ray tracing method. The VirtualRay graphics engine is an example of such a non-traditional 3D technology for computer games. The engine can potentially draw infinite 3D game universes that can be changed arbitrarily in real time. It is based on backward ray tracing, which is completely different from the polygonal algorithms used in modern games, and its rendering mechanism differs sharply from the one implemented in modern game graphics accelerators. This article continues "Real-Time Ray Tracing Optimization. SSE Optimization", and the VirtualRay engine has improved since then. Last time we spoke mostly about optimizing graphics applications for SSE; now we will speak about the 3D theory.

Analysis of the traditional technology

Almost all modern 3D game engines render a scene by drawing textured triangles with a z-buffer. The game world is represented by triangles, and the scene is sent to the accelerator, which renders it. But if a scene contains too many triangles, no accelerator will be able to handle it. Hence the various optimization methods. The most popular one is preliminary determination of the PVS (Potentially Visible Set): the engine determines in advance which triangles can be seen from which positions. After that, the triangles that form the scene are sorted with the help of a BSP tree (Binary Space Partitioning). This tree makes it possible to sort triangles very quickly according to the viewer's position while a level is being drawn, which speeds up polygon rendering considerably.
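
The back-to-front ordering that a BSP tree provides can be illustrated with a minimal 2D sketch. The `BSPNode` class and the `back_to_front` function are hypothetical names for illustration, not code from any particular engine. Each node stores a splitting line n·p = d together with the polygons lying on it; for a given viewer, the half-space on the far side of the line is visited first, which yields a painter's-algorithm order without any explicit sorting.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec2 = Tuple[float, float]


@dataclass
class BSPNode:
    """Hypothetical 2D BSP node: splitting line n.p = d plus the polygons lying on it."""
    normal: Vec2
    d: float
    polygons: List[str] = field(default_factory=list)  # labels of polygons on the line
    front: Optional["BSPNode"] = None  # subtree on the side the normal points to
    back: Optional["BSPNode"] = None   # subtree on the opposite side


def side(node: BSPNode, p: Vec2) -> float:
    """Positive if p lies in front of the node's splitting line, negative if behind."""
    return node.normal[0] * p[0] + node.normal[1] * p[1] - node.d


def back_to_front(node: Optional[BSPNode], viewer: Vec2, out: List[str]) -> List[str]:
    """Append polygons to `out` in painter's order (farthest first) for this viewer."""
    if node is None:
        return out
    if side(node, viewer) >= 0:
        # Viewer is in front, so the back half-space is farther: draw it first.
        back_to_front(node.back, viewer, out)
        out.extend(node.polygons)
        back_to_front(node.front, viewer, out)
    else:
        # Viewer is behind, so the front half-space is farther.
        back_to_front(node.front, viewer, out)
        out.extend(node.polygons)
        back_to_front(node.back, viewer, out)
    return out
```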
At the same time, building a BSP tree is quite a tough problem. Another method uses portals. For each level, the positions of doors, windows and passages between rooms must be determined; they are used to clip hidden surfaces. Objects that are not in the same room as the viewer are checked for visibility through the portals before rendering. To make these methods more efficient, scenes are divided into clusters, models and submodels. Compact high-polygon objects are enclosed in simple figures such as parallelepipeds or spheres. First of all this applies to moving objects, but chairs that can be broken, boxes that can be moved and barrels that can explode are all separate models too. A hierarchical tree must be built for each level: first separate clusters are found, for example, a building; then subclusters, for example, rooms. Subclusters in turn consist of lower-level ones, for example, the objects in a room. Visibility and other properties are first determined for the whole cluster; if it is not seen, then none of its subclusters and objects are seen either. This really speeds things up, because the bounding figures of each cluster are precalculated, and operations on them are much cheaper than operations on an arbitrary number of triangles. The scene hierarchy is mostly built in advance by level designers, because effective clustering of a large scene is a very difficult algorithmic problem and the known algorithms work slowly. The scene must also be lit so that it looks realistic. For this, the lighting of each object is calculated in advance and recorded into a lightmap. The lighting of a level in a modern computer game is thus just a precomputed picture which, in fact, could have been drawn by an artist. This makes it impossible to change a level considerably during the game.
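
The rule "if a cluster is not seen, none of its subclusters are seen either" is what makes a bounding hierarchy pay off. Below is a minimal sketch of that idea, assuming bounding spheres and a list of culling planes standing in for a full view frustum; the `Cluster` class and `collect_visible` function are hypothetical names, and real engines use more elaborate visibility tests (portals, PVS) than a plane check.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d); "inside" means n.p - d >= 0


@dataclass
class Cluster:
    """Hypothetical scene-hierarchy node: a bounding sphere plus objects and subclusters."""
    name: str
    center: Vec3
    radius: float
    objects: List[str] = field(default_factory=list)
    children: List["Cluster"] = field(default_factory=list)


def sphere_outside(center: Vec3, radius: float, plane: Plane) -> bool:
    """True if the whole bounding sphere lies on the outer side of the plane."""
    n, d = plane
    dist = n[0] * center[0] + n[1] * center[1] + n[2] * center[2] - d
    return dist < -radius


def collect_visible(cluster: Cluster, planes: List[Plane],
                    out: List[str], tested: List[str]) -> List[str]:
    """Gather potentially visible objects, skipping whole subtrees whose bounds are culled."""
    tested.append(cluster.name)  # record every bounding-sphere test performed
    if any(sphere_outside(cluster.center, cluster.radius, p) for p in planes):
        return out  # one cheap test rejects the cluster and everything inside it
    out.extend(cluster.objects)
    for child in cluster.children:
        collect_visible(child, planes, out, tested)
    return out
```

With a building behind the culling plane, its rooms are never even tested: only the building's own bounding sphere is checked.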
All these preliminary scene calculations take a really long time: hours even on the most powerful computers, and sometimes a designer has to take part. For example, if you slightly enlarge levels for the Quake III engine, they will be processed for tens of hours on powerful workstations. Besides, a new level can't be built in real time, which rules out really open levels; they are inconvenient to calculate, store, and so on.

Examples of the traditional technology

The first accelerators appeared long ago, but the problems remain. Sometimes it's possible to break a lamp and the lighting will change, because it's known which triangles that lamp illuminated. But it's impossible to turn on a new lamp. Sometimes dynamic objects cast shadows, but not always correct ones: one model doesn't shade the others. Such static levels are strung together into long chains, which makes the game universe effectively one-dimensional: you can go forward or backward and take just a small step aside. Take a look at the latest games based on Quake III. Here you simply go ahead; everything is predetermined. Even your enemies raise their barricades right in your way, because they have nowhere else to put them. And what about multiplayer? Nothing has changed considerably since Quake I, as far as the features of the graphics engine that influence the game are concerned. Here is one more example. The box of one game says that it incorporates the Geo-Mod technology, which allows the environment to be changed or destroyed entirely in real time; that it is the only shooter whose geometry can be changed in real time; and that it offers an incomparable multiplayer with special strategies provided by Geo-Mod. But in fact this is a clone of Half-Life. I'm pretty sure that the next such game will just have more triangles and nothing more.
Development of the traditional technology

Processing power is growing, and maybe one day accelerators will become so powerful that they will be able to display all the triangles of a scene and calculate its lighting in real time. Today bot models already cast shadows, and dynamic lighting of the whole scene will follow. At present, thanks to preliminary analysis, only a few percent of the triangles forming a level are actually drawn. There are many shadow calculation algorithms. Some require additional information about the objects (for example, the shadow volumes method), without which complex objects take too long to process. Others require rendering an object several times into a texture from the direction of the light source (and the texture must be quite large, otherwise shadows on distant objects will be jagged), which exploits the accelerator mercilessly (for example, shadows by texture projection). That is, games need power dozens of times greater than that of today's accelerators. Given the law that processing power doubles every two years, we can estimate when the required power will be available, and it seems that it won't be in the near future. But what plans do accelerator makers have for the near future? They plan to develop pixel and vertex shaders, tessellation of curved surfaces into triangles (TruForm and other RTPatches), and various full-screen anti-aliasing algorithms. Pixel shaders are mostly meant for modeling surface materials: per-pixel lighting with relief mapping, specular highlights, etc. They make the image more realistic. Full-screen anti-aliasing improves visual quality. Hardware tessellation allows drawing more curved surfaces: the accelerator divides the given surface, a spline, into triangles and draws them the usual way. Vertex shaders reduce the CPU load. So levels will become curved, textures embossed and images smooth.
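
The estimate mentioned above is a one-line calculation: if power doubles every T years, a gap of factor k closes after T·log2(k) years. The sketch below assumes the two-year doubling period stated in the text; reading "dozens of times" as a factor of roughly 10 to 50 is my own assumption, and `years_to_reach` is a hypothetical helper name.

```python
import math


def years_to_reach(factor: float, doubling_period_years: float = 2.0) -> float:
    """Years until processing power grows by `factor`, assuming it doubles every
    `doubling_period_years` years, i.e. factor = 2 ** (years / period)."""
    return doubling_period_years * math.log2(factor)


# "Dozens of times" is vague; try a few plausible factors (an assumption).
for factor in (10, 24, 32, 50):
    print(f"{factor:>3}x more power: about {years_to_reach(factor):.1f} years")
```

Under these assumptions a 10x gap takes roughly 6.6 years to close and a 32x gap exactly 10 years, which supports the conclusion that it will not happen in the near future.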
But the scene will remain completely static. Again, it will only be possible to break small things. Lamps won't swing under the ceilings of huge halls, and sun rays won't get inside through windows.

Sources of the traditional technology

Modern game 3D graphics started developing 10 years ago. It originates from Wolfenstein 3D and Doom-like games. I would say that its development stopped with Quake I. After that it developed only extensively, using the power of 3D accelerators, with almost nothing new from the algorithmic standpoint. Optimizing programs for the hardware came first. But new accelerators appeared, and everything had to be adapted again. For example, imagine how much work the Unreal creators did: first they wrote a software renderer, then versions for Glide, for Direct3D, and then for OpenGL extensions. The strength of the present triangle-based scene rendering algorithm is that it performed quite well on old personal computers with 386 processors, which were the starting point of game 3D graphics. It turned out, however, that triangle texturing with bilinear filtering and the accompanying operations are difficult to implement efficiently; special, unnatural methods are needed. This was to be expected as the cost of good performance on low-power processors. Later those operations were accelerated in hardware. Accelerators can, in fact, draw only textured triangles, but in great quantities and much faster than a central processor. Hardware T&L, on the other hand, runs at a speed comparable to the CPU; the performance gain comes only from more efficient memory access. But even if accelerators render polygons ten times faster, it will be of little use: the fundamental drawback in lighting calculation, namely shadows, remains as the cost of fast rendering of static scenes.
3D engine for the game world

Modern computer game genres can't develop to their full capacity because they are limited by the capabilities of graphics engines. Ideally, an engine must meet two requirements: it must be possible to change the game world arbitrarily, and the game universe mustn't be limited in size. Today only image quality keeps developing. As a result, modern 3D engines can draw perfectly only arenas for endless deathmatches. There are few fully 3D strategy games; often only a side view or a top view is used, because a first-person view is difficult to implement.

Ray tracing as an alternative

Methods like ray tracing are used to precalculate lighting for the levels of modern computer games, and similar methods are used for drawing various dynamic scenes. This makes me think that ray tracing is the optimal method for rendering dynamic scenes with changing lighting. Indeed, when I watch various ray tracing demo programs, I notice dynamic shadows. Such programs can be found, for example, at www.scene.org. But ray tracing is too slow, and many such programs use different ways to minimize the number of traced rays. For example, they trace a ray not for each pixel but for every few pixels and determine the color of the intermediate pixels by interpolation. To improve quality, rays are traced more densely near the screen projections of object edges so that shapes aren't distorted. This is, in fact, a reduction of the screen resolution. The running time of a ray tracing algorithm contains a term directly proportional to the number of traced rays, i.e., to the screen area, and this term is the heaviest one. It takes a lot of processing power just to determine how rays intersect even simple objects such as spheres, triangles, cylinders and cones.
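
The per-ray intersection test is the core of that heaviest term. As an illustration, here is the standard ray-sphere intersection (solving |o + t·d − c|² = r² for t), written as a plain sketch; it is not VirtualRay's SSE-optimized code, and the function name `ray_sphere` is hypothetical. It assumes the ray direction is already normalized, which lets the quadratic be simplified.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance t along a unit-length ray to the nearest hit in front of the origin, or None.

    With |direction| = 1 the equation is t^2 + 2bt + c = 0, where b = oc.d and c = oc.oc - r^2.
    """
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c  # quarter of the usual discriminant
    if disc < 0:
        return None  # the ray misses the sphere
    s = math.sqrt(disc)
    t = -b - s  # nearer root
    if t > 0:
        return t
    t = -b + s  # farther root: the origin is inside the sphere
    return t if t > 0 else None
```

One such test (or more, for shadow rays) is needed per pixel and per candidate sphere, which is why the total cost scales with the screen area.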
These costs were so high for personal computers that the method could be used in real time only at low resolutions, like 320x240 and lower. At 800x600 the algorithm would work about 6 times longer, producing about 4 frames per second instead of 24. But over time processors will become powerful enough to trace the required number of rays, since processor power currently grows faster than monitor resolutions. So it seems a good solution to build a graphics engine on ray tracing principles, which solves the problems of dynamic scene changes, lighting and shading. Such an engine will add new capabilities to 3D computer games.

VirtualRay-3D engine, a real-time ray tracer

There are many demo programs that draw beautiful scenes using ray tracing. I decided to create a normal graphics engine based entirely on the ray tracing algorithm. The aim is to render a dynamic scene that is recalculated completely in every frame; first of all, the lighting of the whole scene must be recalculated per frame. Clearly, with such a strict requirement we must give something up. I think it is right to give up the aim of copying the real world. This approach makes spheres the base primitives for representing objects, since they are optimal from the standpoint of ray tracing. Let everything consist of spheres: buildings, the environment, monsters, etc. Later it will be possible to add sphere segments and, for example, triangles. Last time I already spoke about the VirtualRay engine; now there is a new version that offers more possibilities for games. So let's take a look at the real-time implementation of the ray tracing method. Here are several screenshots to give you a general idea.
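
The resolution figures above follow from simple arithmetic, assuming one primary ray per pixel and a frame time proportional to the pixel count (fixed per-frame costs are ignored). The helper names below are hypothetical.

```python
def relative_cost(w_from: int, h_from: int, w_to: int, h_to: int) -> float:
    """Ratio of traced primary rays (one per pixel) between two resolutions."""
    return (w_to * h_to) / (w_from * h_from)


def scaled_fps(fps: float, w_from: int, h_from: int, w_to: int, h_to: int) -> float:
    """Frame rate after a resolution change, if frame time is proportional to pixel count."""
    return fps / relative_cost(w_from, h_from, w_to, h_to)


ratio = relative_cost(320, 240, 800, 600)
print(f"800x600 traces {ratio:.2f}x as many rays as 320x240")
print(f"24 fps at 320x240 becomes about {scaled_fps(24, 320, 240, 800, 600):.1f} fps")
```

The exact ratio is 480000 / 76800 = 6.25, so 24 fps drops to about 3.8 fps, matching the rounded "6 times" and "4 frames" in the text.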

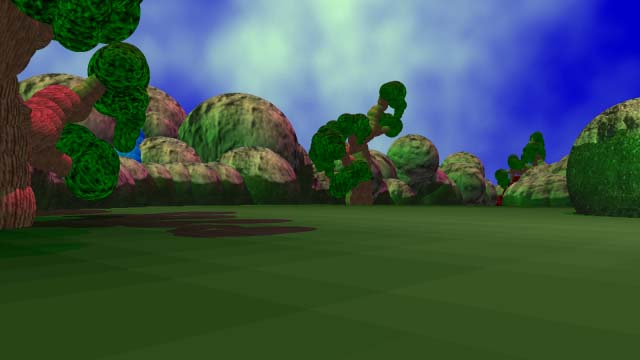

Extraterrestrial construction and monsters; landscape of a world; extraterrestrial constructions.

Unfortunately, screenshots can't convey the play of dynamic shadows. You can download the demo version of the engine to see its capabilities. The minimum system requirements are a processor with MMX support, 64 MB of memory and 32-bit color. The demo is not compatible with the Intel740. The latest processors with SSE support (Pentium 4, Athlon XP) are recommended. Since low resolutions look too grainy on large monitors, it's recommended to reduce the screen area instead to improve performance. The demo version allows destroying levels in real time and adding new constructions.

Characteristics of the VirtualRay engine

The engine has a high-level API that lets the user define a scene: the positions of spheres and light sources, the properties of objects, and the position of the viewer. The user only has to define the scene, and it is drawn automatically. This is unlike Direct3D or OpenGL, where each triangle has to be rendered explicitly, which takes a lot of work, and if triangles are fed to the accelerator in the wrong order, performance drops considerably.

Unique capabilities. Rendering of an infinite changeable game world

The VirtualRay engine, based on the ray tracing method, gives completely new capabilities to 3D computer games with a first-person view. First of all, it allows changing the whole scene in real time. Besides, since there is no preliminary scene calculation, it's potentially possible to draw infinite 3D universes. Consider the surface of a planet of arbitrary size with any number of balls: the engine draws only the balls that are not hidden (not behind the horizon). The curvature of the surface, i.e., how far away the horizon is, can be chosen to match the processor's power. The current demo version shows a small part of the 3D world. There is no automatic relief generator yet, nor a convenient ball database that would let the engine quickly determine whether large parts of the surface become visible. But this is not a tough problem. Besides, there shouldn't be any memory problems, since the data for one sphere takes only a few bytes, and if necessary the data for the current surface sector can be loaded from disk. All this makes VirtualRay an ideal graphics engine for a wide range of games, such as multiplayer ones.

Performance

The engine provides performance acceptable for many games on modern processors: on a Pentium 4 or Athlon XP it runs at about 20 fps at 800x600x32. Note that the frame rate is stable: the minimum frame rate is only a little lower than the average one.
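
The geometry behind "draw only the balls that are not behind the horizon" can be sketched as follows, assuming a perfectly smooth spherical planet without relief; this is not VirtualRay's actual test, and `tangent_length` and `ball_may_be_visible` are hypothetical names. A point at height h above a planet of radius R sees the horizon at straight-line distance √(2Rh + h²), and a point P is visible from the eye E exactly when |EP| does not exceed the sum of the two horizon distances. Using the ball's highest point and its nearest possible distance keeps the test conservative: it never rejects a ball that is partly visible.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
ORIGIN: Vec3 = (0.0, 0.0, 0.0)  # planet centre


def tangent_length(planet_radius: float, height: float) -> float:
    """Straight-line distance from a point at `height` above the surface to its horizon."""
    return math.sqrt(2.0 * planet_radius * height + height * height)


def ball_may_be_visible(eye: Vec3, center: Vec3, radius: float, planet_radius: float) -> bool:
    """Conservative horizon test: False only if every point of the ball is hidden."""
    eye_height = max(0.0, math.dist(eye, ORIGIN) - planet_radius)
    # Height of the ball's highest point above the surface (clamped at ground level).
    top_height = max(0.0, math.dist(center, ORIGIN) + radius - planet_radius)
    reach = tangent_length(planet_radius, eye_height) + tangent_length(planet_radius, top_height)
    # No point of the ball is closer to the eye than |eye - center| - radius.
    return math.dist(eye, center) - radius <= reach
```

A smaller planet radius brings the horizon closer, so fewer balls pass the test, which is one way to trade view distance for speed as the text describes.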
This is because the engine was originally optimized to raise the minimum FPS. The average can be 25 fps with a minimum of 20. In many games the average FPS is high, but the minimum can be several times lower. On the other hand, ray tracing algorithms leave a lot of room for optimization. Improving the algorithms can raise performance by tens of percent, and sometimes a better method raises the speed several times. The engine, in fact, consists of several parts, and a considerable improvement in the implementation of one part had only a slight effect on the overall performance. Nevertheless, efficiency has improved a lot compared with the previous version.

Development

Well, all the effects have already been developed; the only remaining question is speed. Highly realistic computer graphics for cartoons and movies takes a long time to render on very powerful workstations, which is far from real time on personal computers. So in which direction should VirtualRay, which does ray tracing in real time, develop? In ray tracing algorithms, processing the surface material doesn't take much time; the time is mostly spent determining where rays intersect objects. Effects such as bump mapping, which today are available only on new polygonal video accelerators, are easy to implement. At present there are no performance reserves, but they will soon appear as processor power keeps growing, and then it will be possible to improve image quality a lot. It's planned to implement bump mapping, trilinear texture filtering and specular highlights, i.e., a more complex lighting model. Bump mapping is already implemented; only the relief texture doesn't rotate yet.
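
To show why material effects such as bump mapping are cheap once the intersection point is known, here is a minimal sketch of the classic normal-perturbation approach with diffuse (Lambert) shading. This is the textbook technique, not VirtualRay's implementation; the height function and the names `bumped_normal` and `lambert` are hypothetical. The tangent `t` and bitangent `b` are passed in explicitly; for a relief texture to rotate with an object, this tangent frame would have to rotate with it.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def bumped_normal(n: Vec3, t: Vec3, b: Vec3,
                  height: Callable[[float, float], float],
                  u: float, v: float, eps: float = 1e-3) -> Vec3:
    """Perturb the unit normal n by the gradient of a height map h(u, v).

    t and b point in the directions of increasing u and v on the surface.
    The gradient is estimated with central differences."""
    dh_du = (height(u + eps, v) - height(u - eps, v)) / (2.0 * eps)
    dh_dv = (height(u, v + eps) - height(u, v - eps)) / (2.0 * eps)
    return normalize(tuple(n[i] - dh_du * t[i] - dh_dv * b[i] for i in range(3)))


def lambert(normal: Vec3, to_light: Vec3) -> float:
    """Diffuse intensity for a unit normal and a unit vector pointing towards the light."""
    return max(0.0, sum(normal[i] * to_light[i] for i in range(3)))
```

Only a few extra arithmetic operations per pixel are added on top of the intersection test, which supports the point that material processing is not the bottleneck in a ray tracer.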

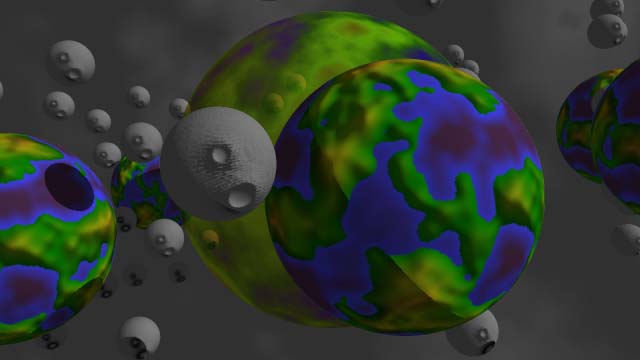

Planets with relief. The gray moons are actually monochrome.

Further, it is planned to improve the quality of surface rendering and increase the number of balls. This will allow modeling complex relief: hills, ravines, etc. Currently there are only infinitely remote light sources like the sun. But soon local light sources will also appear, which will improve lighting quality considerably. It will be possible to model lamps, flashes, etc., together with dynamic shadows. The demo version had something similar, but it still needs optimization and performance improvements. To extend the engine's object modeling capabilities, primitives such as spheres with cut-outs, i.e., parts of spheres cut off by other spheres, need to be added. Then it will be possible to model almost any object. Curved triangles and quadrangles are easy to draw. By the way, sometimes several hemispheres model an object better than hundreds of triangles. But higher resolutions will require much more powerful processors. Some objects and effects, such as sprites and particles, can be drawn with ordinary video accelerators. Ray tracing works excellently on multiprocessor systems, and VirtualRay is no exception. Multiprocessor systems are not yet widespread among personal computers, but a multiprocessor version is going to be developed, especially since this is not difficult. Almost double speed is expected. Such a system costs less than a high-end accelerator and has a unique performance potential.

Conclusion

The progress of game computer graphics is not going to stop. But it mustn't rely only on the growth of computational power; algorithms must be improved too. Otherwise it's easy to end up at a dead end. I hope that thanks to new achievements it will be possible to create new games with wonderful capabilities. Graphics engines won't limit gameplay and will please our eyes with beautiful scenes.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.