
In creating the Ti (Titanium) line, NVIDIA pursued several objectives, ranging from a response to the not-yet-released Radeon 8500/7500 to getting firm figures on the performance of these ATI cards. The situation is quite awkward: NVIDIA's experts have to wait for final figures on the second-generation Radeon before deciding which niches to target and at what prices to launch their products. Those figures are still unclear, while the shareholders and management are pressing for the usual six-month product cycle. The only way out was new drivers, released to fill the awkward pause during which ATI and NVIDIA each wait for the other to enter the autumn market first. At the same time, it made no sense to launch the NV25 or NV17, intended as killers of the Radeon 8500 and 7500, before mass sales of the ATI cards had started. Still, a pre-emptive strike was needed. The solution was to release three chips that are new in name only: GeForce2 Ti, GeForce3 Ti200 and GeForce3 Ti500.

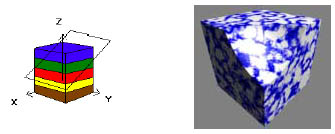

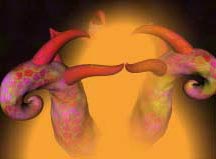

These releases are accompanied by three substantial announcements.

Announcement 1 - DirectX 8.1 support

NVIDIA has persuaded Microsoft to declare pixel shader versions 1.2 and 1.3 sufficient for DirectX 8.1 compatibility (see our ATI R200 review). While the Radeon 8500 supports considerably improved pixel shaders (longer shader programs, many new instructions, etc.), the NVIDIA products have only minor changes (several new instructions; the number of registers and the maximum shader length stay the same). Moreover, the current drivers (21.85) still expose only version 1.1 pixel shaders. I suspect that versions 1.2 and 1.3 will become available only with the NV25 and NV17. The NV25 is rumored to support the Radeon 8500's own pixel shaders (version 1.4), but I doubt it: such support would require a considerable redesign of the whole pipeline and of the texture sampling system, and NVIDIA has no time for that.

Announcement 2 - 3D texture support

3D textures are currently supported only in OpenGL; in Direct3D the feature is still locked, although there are some traces of it in the D3DCAPS. Direct3D support has been missing for a long time, although it was planned as early as the NV20. There seems to be a bug in the chip. In OpenGL the driver can work around it, because the driver itself builds the lists of requests sent to the accelerator from the API calls; in Direct3D, where most data structures are handled directly by the chip, no such workaround was possible. ATI, by contrast, has supported 3D textures in both its Direct3D and OpenGL drivers since the first Radeon. However, NVIDIA's 3D texture support is much more capable than that of the Radeon: it includes truly compressed texture formats (compressed in all three dimensions, with ratios of 1:4 and 1:8), MIP mapping of 3D textures, procedural generation of 3D textures, and their use as 3D and 4D parametrized tables for computing certain effects.
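Using a 3D texture as a parametrized table relies on the filtering hardware: a linearly filtered fetch from a 3D texture blends the eight texels around the sample point (trilinear interpolation), so a coarse table of precomputed values gives smoothly interpolated results. The Python sketch below reproduces that arithmetic on the CPU purely as an illustration. The function name, the texel-centre convention and the clamp-to-edge addressing are my assumptions, not a description of NVIDIA's hardware or drivers.

```python
import math


def trilinear_lookup(table, u, v, w):
    """Sample table[z][y][x] at normalized coordinates (u, v, w) in [0, 1].

    Mimics a linearly filtered 3D-texture fetch: the 8 texels surrounding
    the sample point are blended by their fractional distances.
    Assumptions: texel centres sit at (i + 0.5) / n, clamp-to-edge addressing.
    """
    depth, height, width = len(table), len(table[0]), len(table[0][0])

    def axis(t, n):
        # Map a normalized coordinate to texel space and clamp to the edges.
        f = min(max(t * n - 0.5, 0.0), n - 1.0)
        i0 = int(math.floor(f))
        i1 = min(i0 + 1, n - 1)
        return i0, i1, f - i0

    def lerp(a, b, t):
        return a + (b - a) * t

    x0, x1, fx = axis(u, width)
    y0, y1, fy = axis(v, height)
    z0, z1, fz = axis(w, depth)

    # Blend along x, then along y, then along z.
    c00 = lerp(table[z0][y0][x0], table[z0][y0][x1], fx)
    c10 = lerp(table[z0][y1][x0], table[z0][y1][x1], fx)
    c01 = lerp(table[z1][y0][x0], table[z1][y0][x1], fx)
    c11 = lerp(table[z1][y1][x0], table[z1][y1][x1], fx)
    c0 = lerp(c00, c10, fy)
    c1 = lerp(c01, c11, fy)
    return lerp(c0, c1, fz)


# Hypothetical 2x2x2 table whose value is x + 10*y + 100*z.
lut = [[[x + 10 * y + 100 * z for x in range(2)] for y in range(2)] for z in range(2)]
print(trilinear_lookup(lut, 0.5, 0.5, 0.5))  # 55.5
```

Because the test table is a linear function of x, y and z, trilinear interpolation reproduces it exactly; with a nonlinear table (an attenuation curve, say) the result is a piecewise-linear approximation whose accuracy depends on the table resolution.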
Compression is essential here, since a single 256X256X256 texture takes 64 MBytes uncompressed and 8 MBytes compressed. Even so, scenes in which many objects have their own 3D textures remain a dream: the local memory of current accelerators is enough for only a few 3D textures, used to create impressive effects such as volumetric fog or complex lighting. MIP mapping reduces the amount of texture data that has to be read (smaller MIP levels are used as the distance to an object grows) and improves the visual quality of objects with 3D textures. Procedural textures are generated on the fly from a formula, but that formula is evaluated by the CPU. This approach suits special effects with rapidly changing 3D textures, but it does not pay off as a way to save memory when there are many different objects with 3D textures: performance would be too low. Today only one game uses 3D textures, DroneZ. Its plot is quite primitive, but it is rich in special effects and uses all the latest OpenGL features, including 3D textures. Another application is impostors: flat strips with rectangular 2D textures, which are usually drawn very fast (they have a constant Z and need no per-pixel interpolation of parameters). Such strips are used to speed up the display of many similar small objects, for example a particle system. 3D textures open new prospects here: we can animate these objects, for example depending on the position of an imaginary light source. Using a set of precomputed images, we can create the illusion of a large number of truly 3D objects rendered at high speed, although in reality they are only 2D sprites. For now, however, 3D textures remain a resource-hungry tool useful only for special effects.
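The memory figures above are easy to check. The sketch below assumes 32-bit (4-byte) texels and treats the 1:4 and 1:8 compression ratios as a simple divisor; the helper name is hypothetical. It also shows what MIP mapping a 3D texture costs: each level has one eighth of the texels of the previous one, so the whole chain adds only about 1/7 (roughly 14%) to the base level.

```python
def texture3d_bytes(size, bytes_per_texel=4, compression_ratio=1, mipmapped=False):
    """Approximate memory for a cubic size x size x size 3D texture.

    Assumptions: 4-byte texels, a power-of-two size, and a compressed format
    that shrinks every MIP level by the same ratio (real block formats have a
    minimum block size, which this sketch ignores).
    """
    total = 0.0
    level = size
    while True:
        total += level ** 3 * bytes_per_texel / compression_ratio
        if not mipmapped or level == 1:
            break
        level //= 2  # each 3D MIP level halves all three dimensions (1/8 of the texels)
    return total


MB = 2 ** 20
print(texture3d_bytes(256) / MB)                       # 64.0
print(texture3d_bytes(256, compression_ratio=8) / MB)  # 8.0
# Ratio of a full MIP chain to the base level alone (close to 8/7).
print(texture3d_bytes(256, mipmapped=True) / texture3d_bytes(256))
```

With `compression_ratio=4` the same texture would take 16 MBytes.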
Their implementation in the GeForce3 family would look really great if proper Direct3D support were not missing.

Announcement 3 - Windows XP support

The drivers support both the complete set of 2D features needed for proper acceleration under Windows XP and low-level acceleration of Microsoft's new 2D API, GDI+. This gives faster image rendering and more effective use of hardware acceleration. GDI+ is an attempt to overcome the architectural drawbacks of the older GDI API, and it also adds several new capabilities such as gradient shading.

Cards

I will describe only the GeForce3 Ti500, since it is the only one with clock speeds raised relative to the GeForce3. It was also used to emulate the GeForce3 Ti200, with the clocks lowered to 175/200 (400) MHz. The GeForce2 Ti was emulated on a Leadtek WinFast GeForce2 Ultra card with the memory clock lowered to 200 (400) MHz. The reference NVIDIA GeForce3 Ti500 card has an AGP x2/x4 interface and 64 MB of DDR SDRAM in 8 chips on the right side of the PCB. The memory chips, made by ESMT (Elite Semiconductor), have a 3.5 ns access time, which corresponds to 285 (570) MHz. The memory runs at 250 (500) MHz, and the chips are covered with the traditional heatsinks. The memory clock is below the rated one, apparently following the recommendations of the makers of such fast memory, but I hope it will be possible to overclock it up to the speed rated by the access time. The GPU runs at 240 MHz. This is not a considerable increase, but since GeForce3 cards are well balanced, the performance gain should be quite large. Our related reviews and 3Digest show that the GeForce3 has already been overclocked to 255/280 (560) MHz, which is higher than the Ti500's stock clocks, and many GeForce3 cards can run at over 240/250 (500) MHz.
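The memory figures can be cross-checked with a little arithmetic, and the same arithmetic hints at what "well balanced" means. The sketch below converts the 3.5 ns rating into a clock, computes peak bandwidth, and compares the core fill rate with a very crude bandwidth-limited fill rate. Assumptions not taken from this review: a 128-bit DDR bus and 4 pixel pipelines for both chip families, and per-pixel traffic of one color write plus one Z read and one Z write, with no texture fetches and none of the GeForce3's memory-saving tricks. The helper names are hypothetical; treat the numbers as rough upper bounds, not predictions.

```python
# Assumptions (not stated in the review): a 128-bit DDR memory bus and
# 4 pixel pipelines on both the GeForce2 and GeForce3 chips.
BUS_WIDTH_BITS = 128
PIXEL_PIPELINES = 4
# Crude per-pixel traffic in bytes: colour write + Z read + Z write, no texture
# fetches, no Z compression (GeForce3's memory optimisations are ignored).
BYTES_PER_PIXEL = {16: 2 + 2 + 2, 32: 4 + 4 + 4}


def rated_clock_mhz(access_time_ns):
    """Clock rate implied by the chip's rated access (cycle) time."""
    return 1000.0 / access_time_ns


def bandwidth_gb_s(mem_clock_mhz, bus_bits=BUS_WIDTH_BITS):
    """Peak DDR bandwidth: two transfers per clock across the whole bus."""
    return mem_clock_mhz * 1e6 * 2 * (bus_bits / 8) / 1e9


def fill_rates_mpix(core_mhz, mem_clock_mhz, color_depth):
    """Return (core-limited, memory-limited) fill rate in Mpixel/s."""
    core_limit = core_mhz * PIXEL_PIPELINES
    memory_limit = bandwidth_gb_s(mem_clock_mhz) * 1000 / BYTES_PER_PIXEL[color_depth]
    return core_limit, memory_limit


# Core/memory clocks (MHz) as given in the review.
cards = {
    "GeForce3 Ti500": (240, 250),
    "GeForce3 Ti200": (175, 200),
    "GeForce2 Ti": (250, 200),
}

print(f"ESMT 3.5 ns chips: rated about {rated_clock_mhz(3.5):.1f} MHz")
for name, (core, mem) in cards.items():
    for depth in (16, 32):
        core_limit, memory_limit = fill_rates_mpix(core, mem, depth)
        bound = "core" if core_limit < memory_limit else "memory"
        print(f"{name}, {depth}-bit: core {core_limit:.0f}, "
              f"memory {memory_limit:.0f} Mpix/s ({bound}-bound)")
```

In this model the GeForce2 Ti is limited by its core in 16-bit color but by its 200 (400) MHz memory in 32-bit color, which is consistent with its Quake3 results in this review.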
The GeForce3 Ti500 looks very similar to the reference GeForce3 card, but there are some differences. The most considerable changes are near the VGA connector: on the left you can see the GeForce3 Ti500 card and on the right the GeForce3. Because of a different core power supply unit, the logic that controls 2D quality (filters etc.) was moved from the back of the PCB to the front.

Overclocking

With sufficient cooling, the NVIDIA GeForce3 Ti500 reached 260/290 (580) MHz. The memory overclocking is impressive, although it is only slightly above the rated 285 MHz, while the GPU gain of 20 MHz is moderate.

Note:

Installation and drivers

Test system:

Monitors: ViewSonic P810 (21"), NOKIA 447Xav (17") and ViewSonic P817 (21"). We used NVIDIA drivers v21.85, with VSync off and S3TC enabled. For comparison we used the following cards:

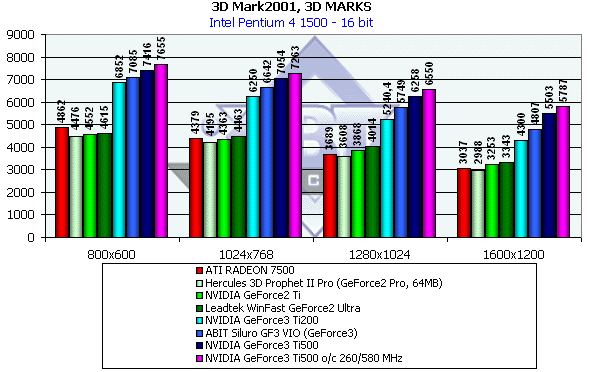

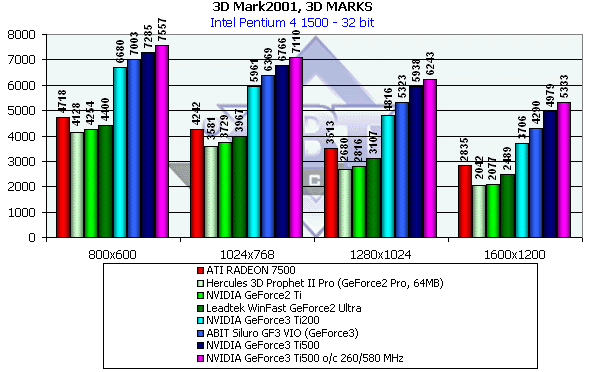

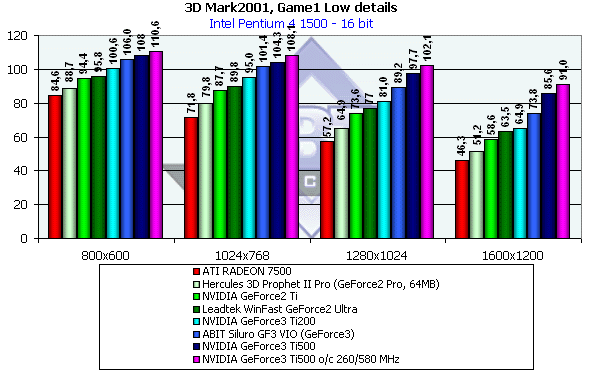

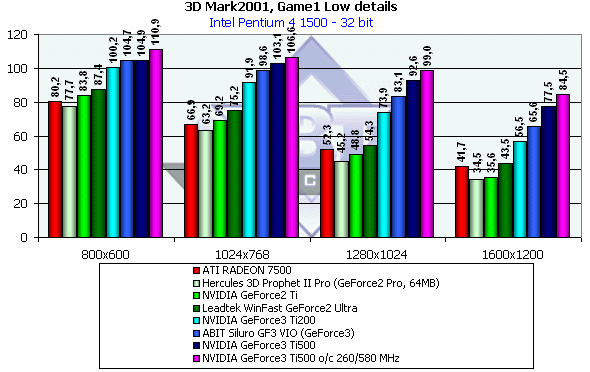

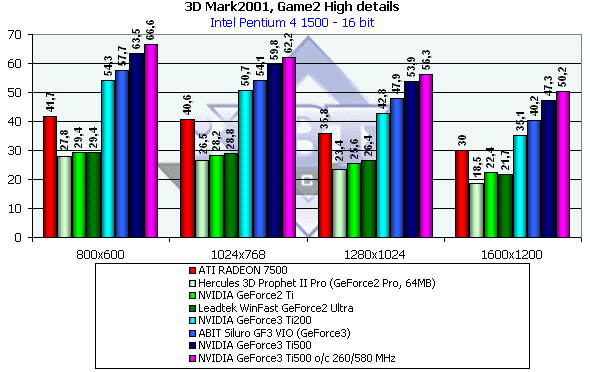

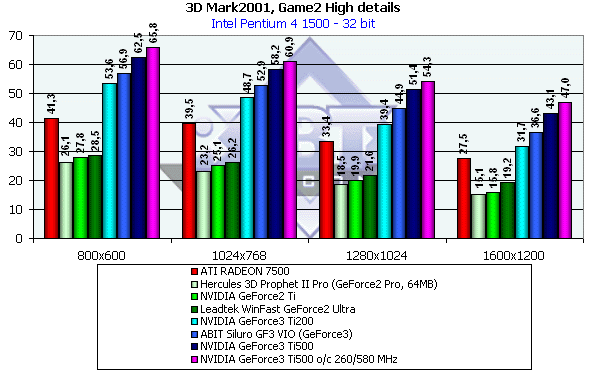

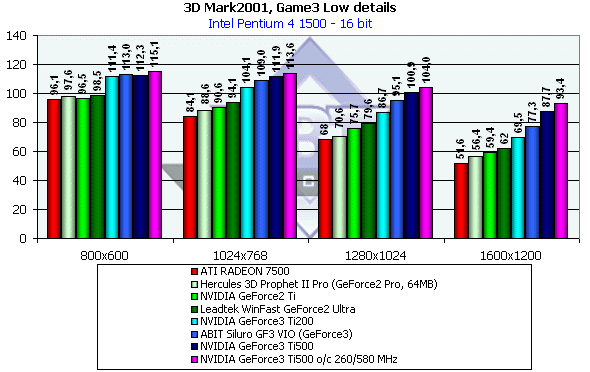

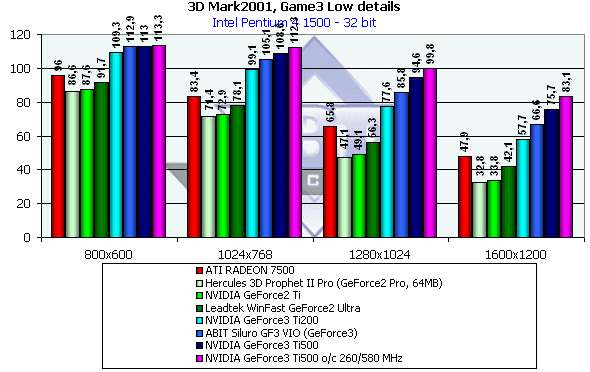

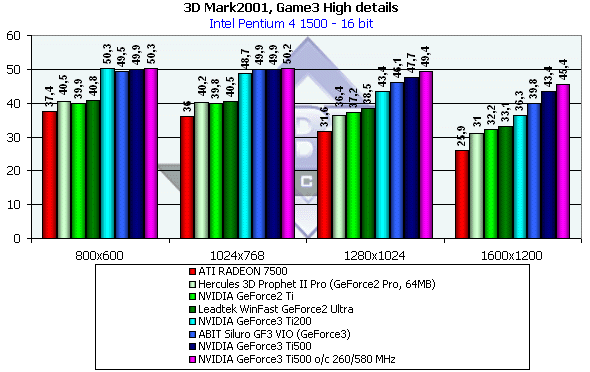

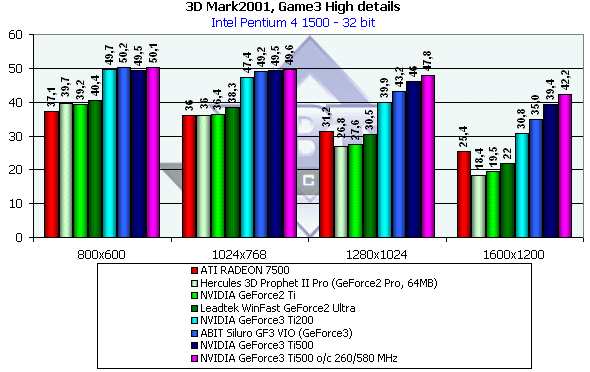

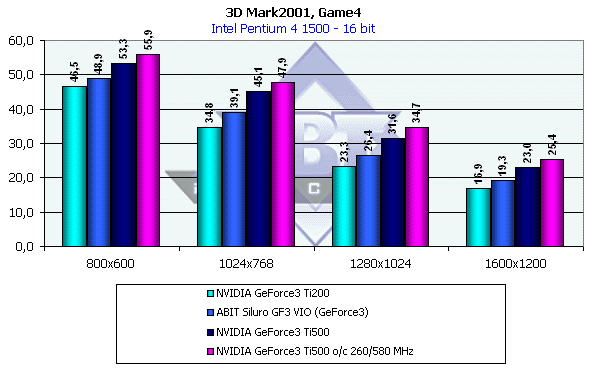

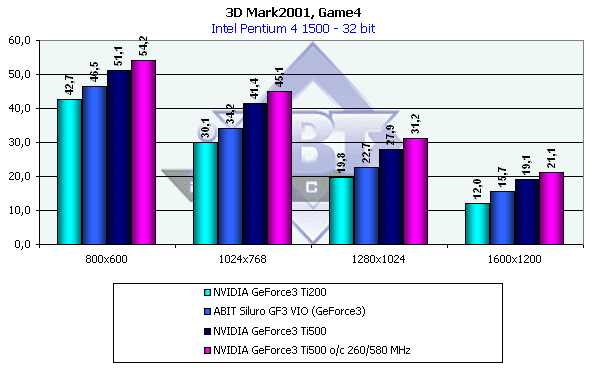

Test results

2D quality is traditionally high: at 1600X1200, 85 Hz you can work comfortably on a high-quality monitor that supports such modes. I noticed no change despite the redesigned PCB. To estimate 3D quality we used several tests:

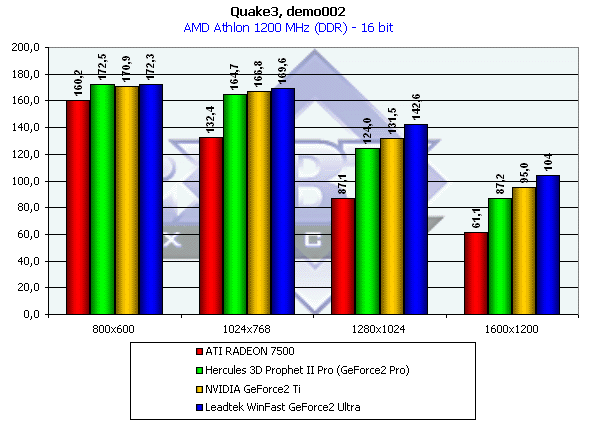

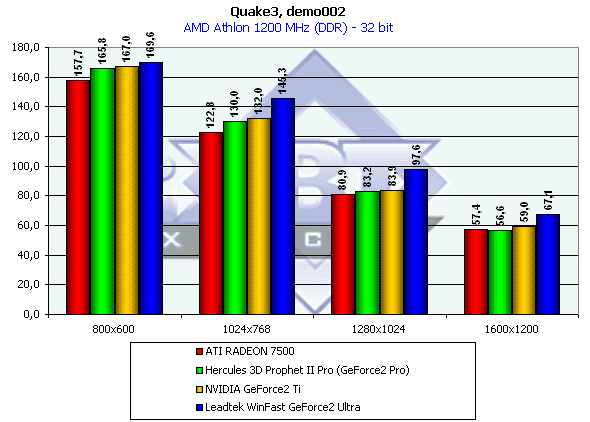

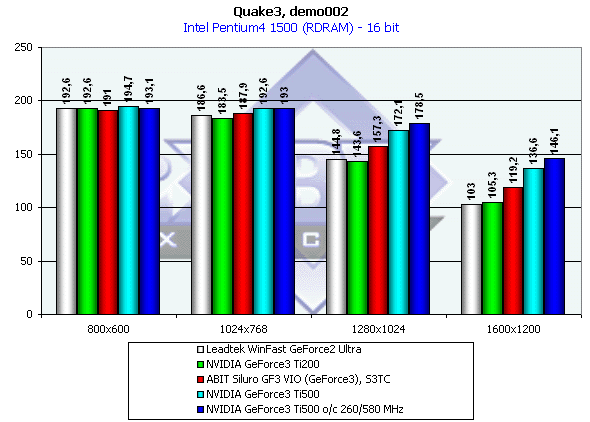

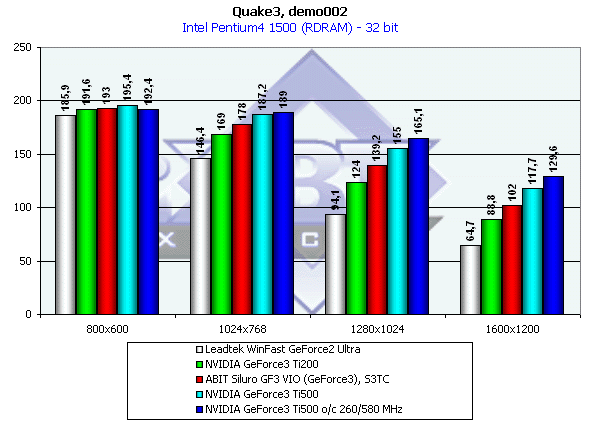

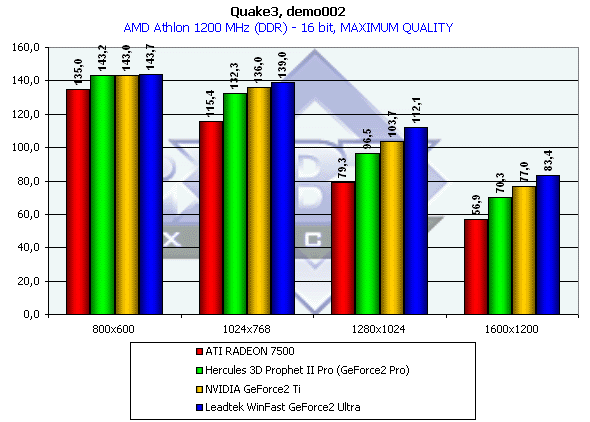

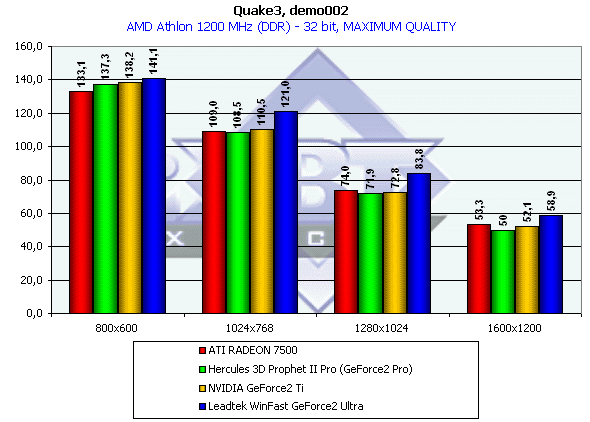

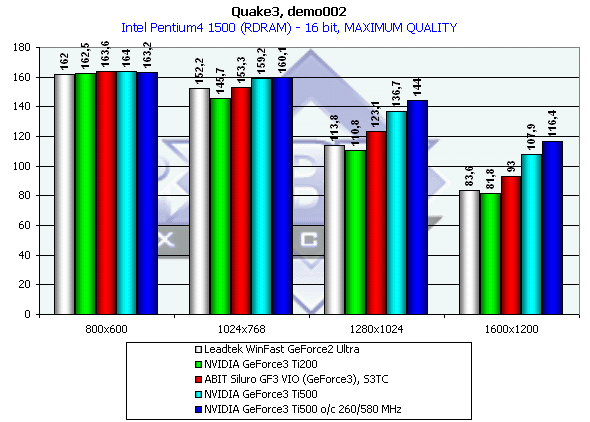

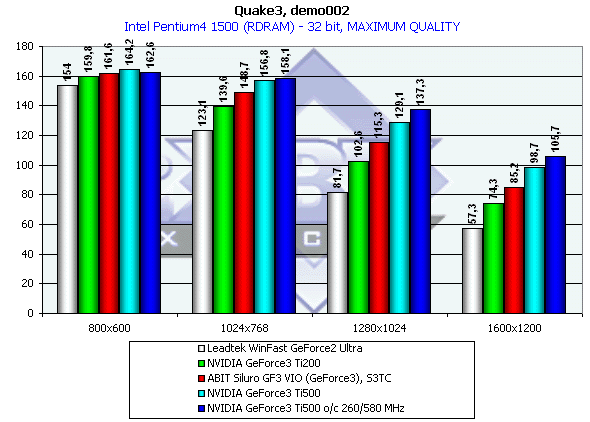

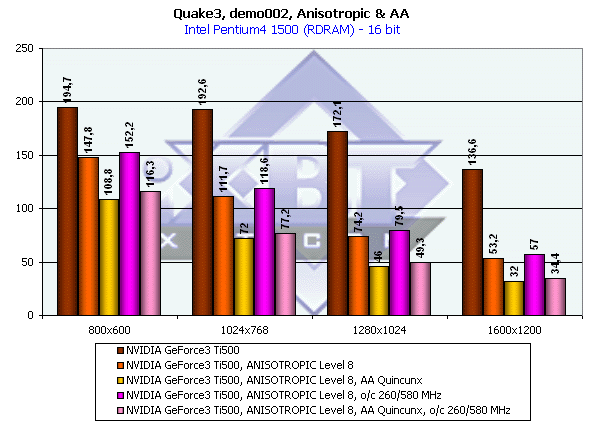

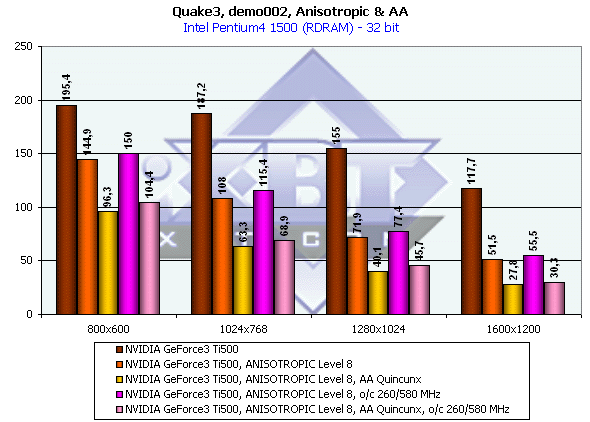

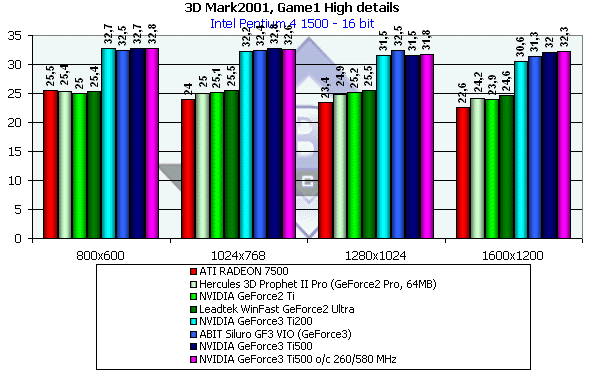

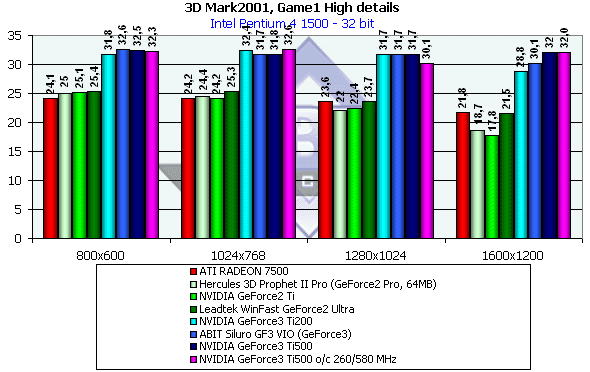

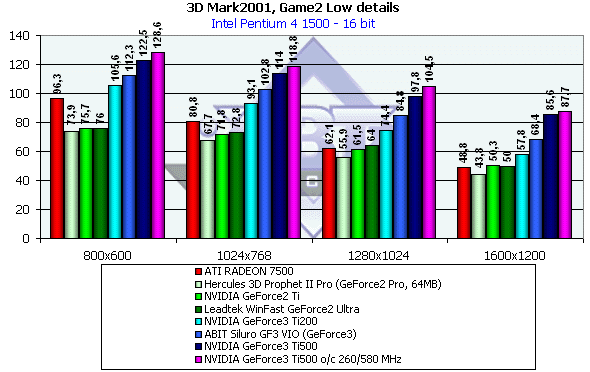

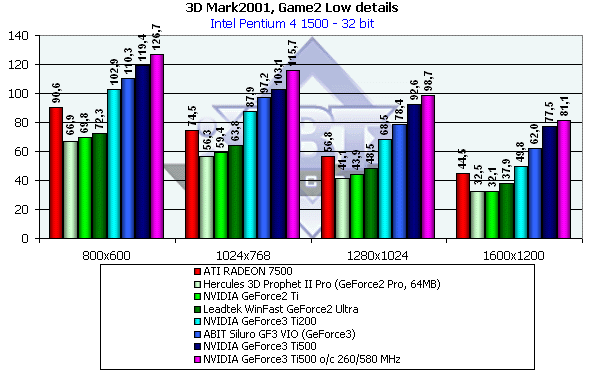

Quake3 Arena

demo002, standard modes

The tests were carried out in two modes: Fast (16-bit color) and High Quality (32-bit color). The GeForce2 Ti was emulated with a Leadtek WinFast GeForce2 Ultra card set to 250/200 (400) MHz, and the GeForce3 Ti200 with the NVIDIA GeForce3 Ti500 card set to 175/200 (400) MHz. Since the GeForce2 Ti and GeForce3 Ti cards target different market niches, I split the diagrams into two groups.

NVIDIA GeForce2 Ti

The GeForce2 Ti does not differ much from the GeForce2 Pro.

NVIDIA GeForce3 Ti200/500

The Ti200 sits between the GeForce2 Ultra and the GeForce3 (in 16-bit color there is almost no difference between it and the GeForce2 Ultra).

demo002, highest quality and load modes

Geometry and texture detail were set to maximum, and the objects were made extremely complex through curved surfaces (r_subdivisions "1" r_lodCurveError "30000").

NVIDIA GeForce2 Ti

The GeForce2 Ti beats its predecessor in 16-bit color thanks to its higher core clock, but in 32-bit color memory bandwidth puts it back in its place.

NVIDIA GeForce3 Ti200/500

Because of its much lower core clock, the Ti200 falls behind the GeForce2 Ultra in 16-bit color, but in 32-bit color the situation is different.

demo002, anti-aliasing and anisotropic filtering tests

As you know, the GeForce3 has two important 3D features: anti-aliasing and anisotropic filtering. The optimal AA mode for the GeForce3 is Quincunx, and the best image quality is obtained with Level 8 anisotropy, which uses up to 32 texture samples. The performance drop is quite big, though: even 1024X768 is not playable with Quincunx AA and Level 8 anisotropy enabled simultaneously.
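Quincunx keeps its cost low by storing only two samples per pixel; the extra smoothing comes from the filter applied when the samples are combined: the pixel's own sample gets a weight of 1/2 and four neighbouring samples get 1/8 each, arranged like the five dots on a die. The Python sketch below shows that resolve step on the CPU. The function name, the exact sample positions (second sample at the pixel's top-left corner) and the edge clamping are assumptions for illustration, not a description of the hardware.

```python
def quincunx_resolve(center, corner):
    """Resolve two samples per pixel into one value with a quincunx filter.

    center[y][x] is the sample at the pixel centre; corner[y][x] is the second
    sample, assumed to sit at the pixel's top-left corner, so each corner sample
    is shared by four neighbouring pixels. Weights: 1/2 for the centre and 1/8
    for each of the four corners (they sum to 1). Edges are clamped.
    """
    h, w = len(center), len(center[0])

    def corner_at(y, x):
        # Clamp to the last row/column at the right and bottom borders.
        return corner[min(y, h - 1)][min(x, w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            corners = (corner_at(y, x) + corner_at(y, x + 1)
                       + corner_at(y + 1, x) + corner_at(y + 1, x + 1))
            row.append(0.5 * center[y][x] + 0.125 * corners)
        out.append(row)
    return out


# A white centre sample surrounded by black corner samples keeps half its value.
print(quincunx_resolve([[1.0]], [[0.0]]))  # [[0.5]]
```

Because the corner samples are shared with neighbouring pixels, the filter also slightly blurs texture detail, which is the trade-off of this mode.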
Let's see how much the Ti500 can boost performance. The performance drop is considerable, and even overclocking the Ti500 hardly saves the situation at high resolutions. But many games do not need hundreds of FPS, and at 1024X768 in 32-bit color such games play excellently with the highest AA and anisotropy settings. You can check the anisotropy quality of the GeForce3 in our 3Digest.

3DMark2001

Since this test consists of several subtests and therefore produces many diagrams, I did not split the GeForce2 Ti and GeForce3 Ti200/500 into separate diagrams.

3D Marks

The overall 3DMark2001 results place the GeForce2 Ti between the GeForce2 Pro and the GeForce2 Ultra. The Radeon 7500, however, outscores them. In the GeForce3 family, performance rises steadily from the GeForce3 Ti200 to the Ti500.

Game1, Low details

Game1 is a car-chase scene with shooting, full of effects and detailed objects. In 16-bit color the cards line up in a neat staircase, while in 32-bit color the GeForce2 Ti loses to the ATI RADEON 7500.

Game1, High details

Because the scenes are so complex, this is more of a CPU test. In 16-bit color the cards are on a par within their niches, and in 32-bit color the RADEON 7500 again outshines the GeForce2 Ti.

Game2, Low details

Here the RADEON 7500 comes very close to the GeForce3 Ti200 in 32-bit color. But this scene has a high overdraw factor, and traditional accelerators that do not optimize Z-buffer operations perform a lot of unnecessary work (they draw many invisible surfaces). The GeForce3 can apply such optimizations, which is why its performance is much higher.

Game2, High details

The situation is similar.

Game3, Low details

This scene shows the expected ordering within the GeForce2 family in 16-bit color and an advantage for the ATI RADEON 7500 in 32-bit color.
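The overdraw argument in Game2 can be illustrated with a toy per-pixel model. Below, several surfaces cover the same pixel. Without early depth rejection every fragment is textured and shaded before the Z test discards the hidden ones; with it, fragments behind the current Z-buffer value are rejected first. This is a generic sketch of the idea with a hypothetical function name; the review does not describe how the GeForce3 implements its Z-buffer optimizations.

```python
def shaded_fragments(depths, early_z):
    """Count fragments that get textured/shaded at one pixel.

    depths: depth values of the surfaces covering the pixel, in submission
    order (smaller = closer). Without early Z every fragment is shaded and
    only then depth-tested; with early Z a fragment hidden behind the current
    Z-buffer value is rejected before any shading work is done.
    """
    z_buffer = float("inf")
    shaded = 0
    for z in depths:
        visible = z < z_buffer
        if visible or not early_z:
            shaded += 1
        if visible:
            z_buffer = z
    return shaded


back_to_front = [4.0, 3.0, 2.0, 1.0]
front_to_back = [1.0, 2.0, 3.0, 4.0]
for order in (back_to_front, front_to_back):
    print(shaded_fragments(order, early_z=False), shaded_fragments(order, early_z=True))
```

With an overdraw factor of 4, front-to-back order cuts the shaded fragments from 4 to 1, while back-to-front order gets no benefit, so such optimizations help most when near objects are drawn first.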
Game3, High details

The competition in the GeForce2 Pro niche shows that the RADEON 7500 excels in 32-bit color.

Game4

Despite its lower clocks, the GeForce3 Ti200 copes excellently with this scene thanks to the new Detonator XP driver.

Conclusion

We have studied NVIDIA's new Titanium series, which includes three cards: GeForce2 Ti, GeForce3 Ti200 and GeForce3 Ti500. The new 0.15 micron process lets the GeForce2 Ti chip run well above 250 MHz. But the card's market positioning does not allow memory faster than 200 (400) MHz, so running the chip above 250 MHz would make little sense. NVIDIA claims that the GeForce2 Ti outscores the GeForce2 Ultra and costs as much as the GeForce2 Pro. This is an exaggeration: in 32-bit color it does not reach the speed of the Ultra, although it will indeed cost much less than GeForce2 Pro cards. The GeForce3 Ti200/500 line is meant to make a powerful DirectX 8.0 accelerator affordable (Ti200) and to beat the ATI RADEON 8500 (Ti500). Time will show whether the GeForce3 Ti500 can outdo the ATI RADEON 8500, whose capabilities are not yet known. I think the Ti200 will be quite popular, while the Ti500 will hardly be so at first. The current GeForce3 will be replaced by the Ti line, so there will be no choice, although NVIDIA may come up with some new solution by that time. So GeForce3 owners should wait for the NV25, while those who do not yet have such a powerful accelerator should look at the Ti200 or wait for the RADEON 8500 to clarify the price situation. Today it is hard to recommend any particular product, since prices are not yet available.

Highs:

Lows:

We have just received information on the retail prices: up to $349 for the GeForce3 Ti500, $199 (!) for the GeForce3 Ti200 and $149 for the GeForce2 Ti. The prices look quite attractive given the capabilities and performance of these cards. Let's wait for the ATI RADEON 8500 and ATI RADEON 7500, which will obviously start a new price war. Our testers will soon publish reviews of production Titanium cards.


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.