
Part 1: Arrival of Intel as an architecture locomotive

I'm not going to get bogged down in what the Pentium 4 was meant to be versus what we have now; the first round of error correction (Northwood) is already here. Let's look at what hides behind a possible new railway track (i.e. architecture) that has been receding from the IT train like the horizon for several years already. So, here is Intel's standpoint:

Such steps can displace MS as the monopolist of the PC OS market. On the other hand, the transition to the IA-64 is being dragged out for some reason, and that can help MS adapt to a 64-bit architecture the same way it reacted to the 32-bit one earlier. If you remember, the need to exploit 32-bit addressing and a paged MMU as soon as possible once gave birth to a hybrid: the Windows interface and API on top of the multitasking kernel of a sophisticated OS architecture (Windows NT). By the way, it was developed by a team which came to Microsoft from DEC. But a harmonious kernel architecture, once bundled with the Windows interface, didn't give the system an overall harmony comparable to the much more elegant OpenVMS or *NIX. Modern NT variations are too bulky; they mix too many different technologies and APIs. I don't know whether it's good or bad, but MS systems are not planned or designed: they rather evolve through numerous trials and errors. And the most dangerous thing here is to go too far with mutations. So, here are two possible scenarios:

It is clear that the first scenario is more appropriate for MS. But if MS feels that the ported Windows NT is in a shaky position on the 64-bit scene, it can follow the second scenario. I don't think it will be more successful from a technological standpoint than NT was, but in the marketing sphere MS is able to work wonders. Nevertheless, the delay in releasing the IA-64 plays into the hands of MS, as does the latest reason for the delay (or rather, a peculiarity of the inherently poorly portable Win32* source code, which all major manufacturers have in quantity).

Besides, the IA-64 requires great memory bandwidth (perhaps a 128-bit memory bus). Therefore, we have to wait for new memory technologies and use DDR/QDR interfaces. Moreover, average PC prices are falling, and this, together with the IT slump, holds back any technological initiatives aimed at a considerable performance gain. The last problem is that modern applications do not require such powerful hardware. So, there are two obstacles that hinder PC architecture development: "E" (economic) and "S" (software). The economic obstacle can be overcome thanks to the scalability of the architecture: for example, it can be sold first in the server, scientific and engineering sectors, skimming the cream off before the product reaches the mass market. But as far as the S factor is concerned, you have to put up with it. As a result, Wintel holds Intel back considerably. It is not simple to be the locomotive that pulls the whole industry.

Part 2: AMD, as a careful extender of its possessions

AMD is not able to promote a new architecture (incompatible with x86) on the market. Moreover, it makes no sense for it to do so. So, what we have now:

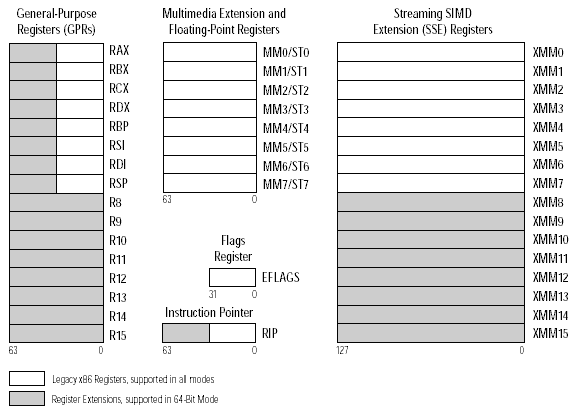

AMD's market share growth is at risk if the IA-64 succeeds. Even so, it is in a more favorable position than Intel. Even if the role of MS operating systems diminishes, they will be replaced by portable open systems, which will put Intel and AMD on an equal footing. Moreover, the emulation needed for compatibility with old applications will be easier for AMD to implement. Besides, any "lazy" industry slump plays into AMD's hands. Everyone counts money, and the solution from Intel is a bit more expensive and sometimes slower. It is much more important for a user to get 3D acceleration, a large memory size and data safety. It is also interesting how the two companies approach marketing: Intel sponsors the advertising of its dealers and resellers, while AMD at best sells them finished promotional materials which the resellers must distribute themselves. Just a short time ago, though, AMD admitted that good advertising campaigns are vital, but said it would run them itself. The place AMD has taken is comfortable only while there is a locomotive (Intel). And what if something happens to it?

Act 3: 64 grooves and a vast register field...

Do we need these 64 bits? Modern storage devices have already surpassed the 32-bit threshold (4 GB), and memory will soon overstep this limit. Large video files will be easier to store. Accuracy and speed of calculations? SIMD extensions are enough here. Data transfer acceleration? It is limited by the processor bus. Higher efficiency of code execution and better utilization of processor units? The new IA-64 architecture thrives here, but everything depends on whether the compiler turns out successful. The Hammer processor from AMD doesn't gain many advantages from the new code. On the other hand, the Hammer is not required to use 64 bits, and it's not necessary...
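
To put the 32-bit threshold mentioned above in numbers, here is a quick sketch. It assumes plain byte addressing, and the helper name `addressable_bytes` is mine, used purely for illustration:

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with a pointer of this width."""
    return 2 ** address_bits


# A 32-bit pointer (or file offset) tops out at 4 GiB.
print(addressable_bytes(32) // GIB)  # 4

# A full 64-bit pointer covers 2**34 GiB, i.e. 16 EiB.
print(addressable_bytes(64) // GIB)  # 17179869184
```

Real processors usually implement fewer physical address lines than the pointer width allows, so the second figure is an architectural ceiling rather than something you can plug into a motherboard.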
At present the performance gap between RISC and x86 processors is narrowing, though at the expense of greater heat generation and development complexity for the latter.

Part 4: AMD, with Hammer in hands, repairs x86

Let's have a look at the new x86-64 architecture from AMD:

On the whole, there is nothing new: the architecture is developing along the scenario Intel itself set out for the transition of the x86 from 16 to 32 bits. The registers are extended, new ones are added, and the instruction set is slightly altered. Complete binary compatibility with all 16- and 32-bit operating systems remains, and 64-bit data and code can be used together with 32/16-bit ones. For example, the adaptation of compilers and *NIX operating systems to this architecture was flawless and fast (see www.x86-64.org). But we shouldn't expect a revolutionary performance increase; only the additional registers are able to speed things up. Taking into account the high load rate from the L1 cache and the considerable superscalar width of modern x86 processors, the performance gain won't be great, at least for popular algorithms: 10-20% at most (counting only the x86-64 architectural difference; the Hammer also has an integrated DDR memory controller and pretty fast HyperTransport buses for effective MP implementations). Processor clock speed grows by that percentage within several months, i.e. faster than this 64-bit extension was developed. On the other hand, the processor's structure will change only by increasing the number and capacity of similar units, not by complicating the algorithms they run (keep in mind that the competing IA-64 is meant to simplify the processor by shifting a great deal of the load onto the compiler). So, there seem to be no problems with the transition. The Hammer is just around the corner. Besides, we should soon expect a version of the Hammer with SMP or SMT technology on the chip. And now I'm going to talk about the latter.

Part 5: Intel tries on the heritage of the CRAY and Alpha

Intel, in its turn, enters the high-end server RISC architecture market. If you remember, once upon a time there was the Alpha architecture.
Well, it exists today as well, but its latest, unfinished 5th generation will never be released. That generation was going to incorporate multithreading support. The Alpha is an excellent example of how careless marketing can kill a good product. Time passed, and Intel decided to announce its Jackson Technology, a hardware multithreading technology.

What's the key problem of modern superscalar processors, which house dozens of units for simultaneous loading, processing and storing of data? The problem lies in the mutual dependences of these data. It is impossible to load, process and save the same number simultaneously. Moreover, you can't load, process and save it again before the previous save has completed. The problem is in the code: people are used to writing algorithms as sequences of interrelated operations, not as a chaotic set of non-overlapping formulae, which would suit a superscalar implementation better. The small number of x86 registers for which the code is compiled aggravates this: most of the work is carried out on 4-5 registers, so it is quite difficult for the processor to parallelize such interconnected instructions. As a result, half of the processor's execution units stand idle on ordinary code. And it is much cheaper and faster to complicate an x86 processor by adding execution units than to develop a new compiler!

We can't ignore the dependences between instructions, but we can start processing two independent instruction streams simultaneously. We just bring in a second instruction pointer register (EIP-2) and two interrupt controllers, and add to each micro-instruction in flight (in the scheduling pool) an extra bit indicating which instruction stream it belongs to (first or second). Then we duplicate the flag register, some of the control registers and the APIC. The general registers don't need to be physically duplicated: we already have a big register pool for speculative renaming.
Now, when renaming, we keep two virtual sets of registers in mind. So, from the software side we have got two processors in one. Let's call all this SMT (Simultaneous Multi-Threading). So, what do we have now? On the one hand, the die complexity of the modified Pentium 4 has increased by only ~5%. On the other hand, in most applications its performance has grown by 10-15%, or even 30% and more. That is not as much as it could have been. What's the reason?
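
The mechanism described above, a serial dependence chain leaving execution units idle and a second instruction stream filling them, can be illustrated with a toy model. This is only a sketch under loud assumptions: every instruction takes one cycle, any ready instruction may issue, and caches, decoders and buses are ignored. It is not a model of the Pentium 4, and the names `cycles_to_finish` and `serial_chain` are hypothetical.

```python
from typing import List, Sequence

# A thread is a list of instructions. Instruction i is described by the
# indices of EARLIER instructions of the same thread whose results it needs.
Thread = List[Sequence[int]]


def cycles_to_finish(threads: List[Thread], width: int) -> int:
    """Cycles needed to run all threads on one core with `width` issue slots.

    Toy rules (assumptions): one-cycle latency, a result becomes usable in
    the following cycle, and dependences exist only inside a thread.
    """
    done_at = [[None] * len(t) for t in threads]  # cycle each instruction ran
    remaining = sum(len(t) for t in threads)
    cycle = 0
    while remaining:
        cycle += 1
        # Collect every instruction whose inputs were produced in earlier cycles.
        ready = []
        for tid, thread in enumerate(threads):
            for i, deps in enumerate(thread):
                if done_at[tid][i] is None and all(
                    done_at[tid][d] is not None and done_at[tid][d] < cycle
                    for d in deps
                ):
                    ready.append((i, tid))
        # Issue the oldest instructions first, ties broken by thread id,
        # and never more than `width` per cycle.
        for i, tid in sorted(ready)[:width]:
            done_at[tid][i] = cycle
            remaining -= 1
    return cycle


def serial_chain(n: int) -> Thread:
    """n instructions where each one needs the result of the previous one."""
    return [[]] + [[i - 1] for i in range(1, n)]


# One serial thread on a 4-wide core: only one unit is busy per cycle.
print(cycles_to_finish([serial_chain(12)], width=4))                    # 12
# Two such threads: twice the work in the same number of cycles.
print(cycles_to_finish([serial_chain(12), serial_chain(12)], width=4))  # 12
# Fully independent instructions: all four units stay busy.
print(cycles_to_finish([[[] for _ in range(12)]], width=4))             # 3
```

In this idealized model the second thread comes for free, which is exactly why the real figures quoted above look modest by comparison: everything the model leaves out is shared between the two streams.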

The two latter items hold this technology back the most. By the way, that was the reason why Intel hesitated to publicize this technology for x86: the latest Alpha (EV8) was so much simpler that it had an almost 4-fold advantage in instructions per clock with 4 threads of a web server processed at the hardware level, a 2.5-fold advantage in database applications, etc. It is interesting that the Alpha lacks hardware speculative (with prediction) register renaming, and its register file is simply divided among the threads. If Intel fulfills these four wishes, performance can grow by 50% in typical applications. But that requires a major redesign of the processor. Well, we shall see what we shall see.

But rumor has it that SMT can be used another way. Let's imagine a special compiler that generates code for two threads. One of them is the base (computational) thread, and the other is an auxiliary one that prefetches data according to the algorithm being processed, touching the memory addresses the base thread is likely to need soon. Taking into account the significant stalls while data are loaded into the cache, it is no wonder that with such a model the benefit can reach 70% in most tasks.

At the same time, HP is developing a full SMP on a chip with a shared L3 cache, IBM is busy with the same thing, and Sun promises to release an SMT solution within its UltraSparc line in the near future. Well, server processors can afford to be expensive. And what about desktop systems? Will Intel develop its IA-32 further, or will it turn entirely to the IA-64?

The Alpha passed away, but it brought the concept of multithreading to a general-purpose OS and almost finished developing a hardware microprocessor model (the first publications about hardware SMT date back to 1978, when the first hardware implementation was made in the Cray CDC 6600, whose CPU, however, wasn't a single-chip solution). Besides, reportedly, some MARS-M machine also had a similar technology.
Well, Alpha initiated a lot of other things; just read its specs and you will be really surprised.

Act 6: unfinished

But what are you going to do with the heat? With the watts that keep growing in number as time goes by? Anyway, this is a subject for a separate discussion about transistors, electrons, kilowatts, cases etc. Let's leave it for next time...


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.