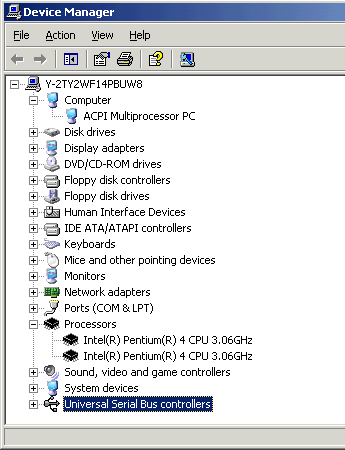

We have only just received the Pentium 4 2.8 GHz, but Intel never seems to tire, and it keeps announcing new CPUs based on its highly overclockable new processor core. Today's hero differs from the previous top model in two ways. Its frequency is 200-odd MHz higher. It also supports a technology that emulates two processors on one processor core, which until now was available only in ultra-expensive Xeons. Do you want a dual-processor home computer? Now you can have it! All Pentium 4 models starting from this one will support Hyper-Threading. But you may ask why a home user would need a dual-processor system at all. So today we will try to explain what Hyper-Threading is and what it is for in personal computers.

SMP and Hyper-Threading

First of all, let's look at how a classical SMP (Symmetric Multi-Processor) system works. Why? Simply because few users clearly understand how an SMP system works, or when they can and cannot expect a performance gain from it. So, imagine we have two processors instead of one. What does that give us? By itself, nothing. To make both processors work "profitably", we need an operating system that can use them. It must be a multitasking system, and its kernel must know how to distribute computations across several CPUs. None of Microsoft's Windows 9x family (95, 95 OSR2, 98, 98 SE, Me) can do this. SMP is supported by Microsoft's NT-based systems (Windows NT 4, Windows 2000, Windows XP), by Unix-based OSes (FreeBSD, NetBSD, and commercial Unixes such as Solaris, HP-UX and AIX) and by numerous Linux distributions. By the way, MS-DOS has no idea about multiprocessor systems :). Once the OS detects two processors, the rest looks quite simple. If a computer runs only one application, all the resources of one processor are given to it, and the second processor just stands idle.
If there are two applications, the second one is given to the second processor, so the first application should not slow down at all. But reality is much more complicated. You may run only one application, yet a multitasking OS always has more than one process (i.e. a piece of code that performs a certain task). For one thing, the operating system is itself a program. So the second processor can help even with a single application by taking over the processes the OS itself generates. The tasks can't be divided ideally, but the processor running the useful task still has fewer side tasks to handle. Besides, even one application can spawn threads that several CPUs can process. For example, all rendering programs use such threads, because they were written specifically for multiprocessor systems. So when threads are used, an SMP system can give a considerable gain even when only a single task is running. A thread differs from a process in two ways. It can never be started by a user, whereas a process can be started both by the system and by a user. A thread also dies automatically together with its parent process. Also remember that in a classical SMP system each processor has its own cache and registers, but the processors share memory. So if two tasks access RAM at the same time, they will certainly impede each other even though they run on different processors. And finally, there are a great many processes. On the collage below (we removed all user processes from the Task Manager), you can see that Windows XP alone generates 12 processes, some of them multithreaded. As a result, the overall number of threads amounts to 208 (!). So we can't provide a separate CPU for each task, and the CPUs will still have to switch between code fragments.
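Here is a minimal Python sketch of how a renderer might split one frame into horizontal bands and hand them to separate threads. The `shade()` function and the band layout are hypothetical illustrations, not code from any program tested here. In CPython, the global interpreter lock prevents pure-Python threads from running on two CPUs at once, so the sketch shows how the work is decomposed, not the speedup. A real renderer would do the same thing with native threads.

```python
from concurrent.futures import ThreadPoolExecutor


def shade(x, y):
    """Hypothetical per-pixel 'shader': any pure function of the coordinates."""
    return (x * 31 + y * 17) % 256


def render_rows(width, y0, y1):
    """Render rows y0..y1-1 of the image serially."""
    return [[shade(x, y) for x in range(width)] for y in range(y0, y1)]


def render_parallel(width, height, workers=2):
    """Split the image into horizontal bands and render each band in its own thread."""
    if height == 0:
        return []
    band = -(-height // workers)  # ceiling division: rows per band
    bands = [(y, min(y + band, height)) for y in range(0, height, band)]
    image = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so the bands reassemble top to bottom
        for part in pool.map(lambda b: render_rows(width, b[0], b[1]), bands):
            image.extend(part)
    return image
```

Because each band depends only on its own rows, the threads never have to wait for each other. That independence is why rendering is the textbook case for SMP.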
Note, however, that in well-written code an idle process (or thread) takes almost no processor time (the collage shows this too).

Hyper-Threading is also a multiprocessor system, but a virtual one. We have one Pentium 4 CPU, but the OS sees two processors. Look at the picture. A classical single-core processor has been given one more AS (IA-32 Architectural State) unit. The Architectural State holds the states of the registers: general-purpose, control, APIC and some machine-state registers. In fact, AS#1 plus the single physical core (branch prediction units, ALU, FPU, SIMD units etc.) make one logical processor (LP1), and AS#2 plus the same physical core make another logical processor (LP2). Each LP has its own APIC (Advanced Programmable Interrupt Controller) and registers. A special table called the RAT (Register Alias Table) maps the general-purpose registers onto the registers of the physical CPU, and each LP has its own RAT. So now two independent code fragments can be executed on the same core. In other words, this is a multiprocessor system!

Hyper-Threading: compatibility

Remember that not all OSes can treat such a CPU as two CPUs, even if they support multiprocessing. It depends on how many processors the OS finds during its initialization. Intel says that an OS without ACPI support can't identify the second LP. Besides, the mainboard BIOS must detect Hyper-Threading support and report it. For Windows, this means we can use neither Windows 9x nor Windows NT. Windows NT lacks ACPI support and therefore can't treat one Pentium 4 as two processors. On the other hand, Windows XP Home Edition can work with the two logical processors that Hyper-Threading provides, even though it doesn't support two physical processors.
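The OS learns about the second logical processor from the BIOS via ACPI, as described above. Software can also check whether the CPU is HT-capable using the CPUID instruction, which is what utilities such as WCPUID display. Leaf 1 of CPUID sets EDX bit 28 (the HTT flag) on HT-capable processors. When that flag is set, EBX bits 23..16 report the maximum number of logical processor IDs in the package. Python cannot execute CPUID itself, so this minimal sketch only decodes register values already read by such a utility. The register values in the example are hypothetical.

```python
HTT_BIT = 28  # CPUID leaf 1, EDX bit 28: Hyper-Threading Technology flag


def decode_cpuid_leaf1(ebx: int, edx: int) -> dict:
    """Decode the HT-related fields of CPUID leaf 1 (EAX=1) register values.

    Returns whether the HTT flag is set and the logical processor count
    reported in EBX bits 23..16 (meaningful only when HTT is set).
    """
    htt = bool((edx >> HTT_BIT) & 1)
    logical = (ebx >> 16) & 0xFF if htt else 1
    return {"htt": htt, "logical_per_package": logical}


# Hypothetical register values: EBX[23:16] = 2, and only EDX bit 28 is set for brevity.
print(decode_cpuid_leaf1(ebx=0x00020800, edx=1 << 28))
# prints {'htt': True, 'logical_per_package': 2}
```

The flag only shows that the CPU is capable of Hyper-Threading. Whether the second logical processor is actually enabled and reported to the OS still depends on the BIOS and ACPI, as described above.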
Windows XP Professional, for its part, reports four processors when two HT-enabled CPUs are installed, although it supports at most two physical CPUs.

Now the hardware side. You probably know that the new CPUs running at over 3.0 GHz may require a new mainboard. Even with Socket 478, Intel can't ignore the power consumption and heat dissipation of its new processors. I suspect that the higher current draw comes not only from the higher frequency. Virtual multiprocessing also keeps the core more heavily loaded, which raises the average power consumption as well. Some older mainboards can be compatible with the new CPUs if they were designed with enough headroom. In short, a manufacturer that followed Intel's Pentium 4 power recommendations to the letter loses out to those who fitted their boards with "stronger" VRMs and suitable layouts. Moreover, besides the OS and the BIOS, the chipset must also be compatible with Hyper-Threading. So your mainboard must be based on a chipset supporting the 533 MHz FSB: i850E, i845E, i845PE/GE. Note that the first revision of the i845G doesn't support Hyper-Threading, but its successor does.

Well, now we have two logical processors and Hyper-Threading! But there is still only one physical CPU. So why do we need such a complicated emulation technology?

Hyper-Threading: what for?

Let's try to figure out what Intel is offering us and how we should regard it. Intel never had absolutely perfect products. Moreover, other manufacturers sometimes had more interesting solutions. However, it turned out that a product doesn't have to be perfect in every respect. It is enough to build a chip that embodies a certain idea, arrives at the right place at the right time, and has no similar rival. First, Intel countered AMD's Am5x86, which excelled at integer calculations, with the powerful FPU of its Pentium.
Then its Pentium II got a wide bus and a fast L2 cache, which helped it beat all Socket 7 processors. After that we got the Pentium 4 with SSE2 support and rapidly growing clock speeds. And now Intel offers Hyper-Threading. So why does a manufacturer with such competent engineers and such huge research budgets offer us this technology? It's certainly easy to dismiss Hyper-Threading as one more marketing trick. But remember that such a technology takes a lot of money, effort and time... Wouldn't it be easier to hire a couple of hundred PR managers or shoot a dozen slick commercials? What made the developers from IAG (Intel Architecture Group) decide to pursue this idea?

To understand how Hyper-Threading works, it's enough to know how any multitasking operating system works. How does one processor cope with dozens of tasks? In fact, at any given moment only one task is executed on a single-processor system. But the processor switches between code fragments of different tasks so quickly that many applications seem to run simultaneously. Hyper-Threading uses essentially the same technique at the hardware level, inside the CPU. There are a number of execution units (ALU, MMU, FPU, SIMD) and two "simultaneously" executed code fragments. A special unit determines which instruction from which code fragment should be executed at a given moment. It then dispatches that instruction to an idle execution unit, if one is available and able to execute it. The two streams must take turns by force. Otherwise one process could take over the whole processor (all of its execution units), and the other code fragment, running on the second "virtual" CPU, would stall. At present this mechanism is not intelligent, i.e. it can't take priorities into account. It simply takes instructions from the two streams in turn.
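The turn-taking described above can be illustrated with a deliberately simplified Python model. It assumes exactly one unit of each type ("ALU", "FPU", and so on), in-order issue of at most one instruction per stream per cycle, and a first pick that alternates every cycle. The real Pentium 4 pipeline (trace cache, micro-op queues, several ALUs) is far more complex, so treat this as a toy, not Intel's scheduling logic.

```python
def issue_cycles(stream_a, stream_b):
    """Toy model of two logical processors sharing one core's execution units.

    Each stream is a list of execution-unit names (e.g. "ALU", "FPU").
    Every cycle each unit accepts at most one instruction, each stream issues
    at most one instruction in program order, and the stream that gets first
    pick alternates every cycle (no priorities, just taking turns).
    Returns the number of cycles needed to issue both streams.
    """
    streams = (list(stream_a), list(stream_b))
    pos = [0, 0]   # next instruction index in each stream
    first = 0      # which stream picks first this cycle
    cycles = 0
    while pos[0] < len(streams[0]) or pos[1] < len(streams[1]):
        cycles += 1
        busy = set()  # units already taken this cycle
        for k in (first, 1 - first):
            if pos[k] < len(streams[k]):
                unit = streams[k][pos[k]]
                if unit not in busy:  # unit is free this cycle: issue
                    busy.add(unit)
                    pos[k] += 1
        first = 1 - first  # swap who picks first next cycle
    return cycles


print(issue_cycles(["ALU"] * 4, ["FPU"] * 4))  # different units: 4 cycles
print(issue_cycles(["ALU"] * 4, ["ALU"] * 4))  # same unit: 8 cycles
```

Run one after another, the two streams would take `len(a) + len(b)` cycles in this model. With disjoint units they overlap completely, while streams competing for the same unit gain nothing, which is exactly the trade-off discussed next.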
If the instructions of one stream never have to share execution units with those of the other, the two code fragments execute fully in parallel. What are the strong and weak points of Hyper-Threading? The most obvious consequence is higher processor efficiency. Suppose one of two programs mostly does integer calculations and the other mostly floating-point ones. Without Hyper-Threading, the FPU stands idle most of the time while the first program runs, and the ALU sits idle while the second one runs. But this is an ideal case. What if both programs use the same processor units? Clearly the process can't be sped up, because the physical number of units stays the same. But can it slow down? On a processor without Hyper-Threading, the two programs are executed alternately on the same core, with the OS (which is one more program) as the arbiter, and the overall time depends on:

And what do we get with Hyper-Threading?

Intel offers quite a logical solution: only the third items compete. In the first case, switching happens at the software-hardware level, with the OS switching between threads by means of the processor's functions. In the second case it is done purely in hardware, and the processor handles it on its own. In theory, a hardware solution should be faster. But let's see what happens in reality. Another unpleasant drawback is that the Pentium 4 executes classical x86 code, which makes heavy use of direct addressing of cells and arrays located outside the processor, in RAM. So the virtual CPUs have to share not only the core's physical registers but also the processor bus. But ordinary dual-processor Pentium III and Xeon systems are in the same situation today. They always have only one AGTL+ bus, inherited from the famous Pentium Pro, regardless of the number of CPUs in the system. Only AMD tried to solve this problem, with its Athlon MP, where each processor has its own bus to the chipset's north bridge. Even then the problem is not solved completely, because there is only ever one memory bus (remember that we are talking about x86 systems). Nevertheless, Hyper-Threading can benefit from this situation as well. We can get a considerable performance gain not only when applications use different functional units of the processor, but also when they access data in RAM in different ways. Suppose the first application performs intensive calculations on its own data while the other frequently fetches data from RAM. Their combined execution time should then be shorter with Hyper-Threading, even if both use the same execution units. The second application's memory reads can be processed while the first one is busy calculating. To sum up: the idea behind Hyper-Threading looks excellent, and it meets today's needs.
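The memory-overlap argument above can be made concrete with another toy Python model. Each thread is a list of hypothetical `("cpu", n)` or `("mem", n)` operations, where n is a positive number of cycles. The model assumes three things: memory waits overlap completely with the other thread's computation, there is no bus contention, and, for the baseline without HT, memory waits stall the core entirely. That last assumption is pessimistic, because real out-of-order cores hide some latency. The numbers come from the model itself and are not measurements.

```python
from collections import deque


def _next_op(state):
    """Load the thread's next (kind, cycles) operation, or mark the thread finished."""
    if state["ops"]:
        state["kind"], state["left"] = state["ops"].popleft()
    else:
        state["kind"], state["left"] = None, 0


def serial_time(threads):
    """Without HT (toy assumption): threads run one after another and memory waits stall the core."""
    return sum(n for ops in threads for _, n in ops)


def smt_time(threads):
    """Toy HT model: memory waits proceed in the background while the core
    gives one compute cycle per clock to a ready thread, taking turns."""
    states = [{"ops": deque(ops), "kind": None, "left": 0} for ops in threads]
    for s in states:
        _next_op(s)
    clock, turn = 0, 0
    while any(s["kind"] is not None for s in states):
        clock += 1
        for s in states:  # outstanding memory requests progress in parallel
            if s["kind"] == "mem":
                s["left"] -= 1
        for i in range(len(states)):  # one compute slot on the shared core
            k = (turn + i) % len(states)
            if states[k]["kind"] == "cpu":
                states[k]["left"] -= 1
                turn = k + 1  # next clock, the other thread gets first pick
                break
        for s in states:
            if s["kind"] is not None and s["left"] <= 0:
                _next_op(s)
    return clock


compute_heavy = [("cpu", 4), ("mem", 4)]
memory_heavy = [("mem", 4), ("cpu", 4)]
print(serial_time([compute_heavy, memory_heavy]), smt_time([compute_heavy, memory_heavy]))  # 16 8
```

When both threads only compute, the model gives no gain, because the core's single compute slot is the bottleneck. The gain appears only when one thread's memory waits can be filled with the other thread's work.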
Users often run several applications at once: they listen to music, browse the Net, encode MP3s and play games. On the other hand, there are many examples of nice ideas ruined by terrible implementations. So let's see whether Hyper-Threading, as actually implemented in silicon rather than as an idea, is as good as it is in theory.

Tests

Testbed:

[WCPUID screenshots: Pentium 4 with Hyper-Threading (CPU 1, CPU 2, feature flags); Pentium 4 without Hyper-Threading (general information, feature flags)]

Software:

WAV to MP3 encoding (Lame)


There is almost no benefit from Hyper-Threading here, but this test gave it no opportunity. These are single-threaded applications that do not spawn simultaneously executed threads, so we are effectively dealing with an ordinary Pentium 4 at a slightly higher frequency. It makes no sense to draw conclusions from such a small difference, though it is still in favor of Hyper-Threading.

This is a classic test, and 3ds max is the first application here that explicitly supports multiprocessing. The gain is small (3%). Note, however, that Hyper-Threading works under quite unfavorable conditions here. 3ds max implements SMP by spawning threads that all serve the same purpose (scene rendering) and therefore contain the same instructions. As we mentioned, Hyper-Threading has a considerable effect when the processor runs different programs that use different CPU units. Even so, the technology managed to squeeze out a small benefit. Rumor has it that 3ds max 5.0 gains more from Hyper-Threading; we will check that next time.

The scores are not surprising at all. Perhaps 3D benchmarks are better suited to testing video cards than processors? Still, no result is ever useless. For some reason, the system with Hyper-Threading scores lower, but the gap is only 1%.

The situation is similar. We haven't yet reached the tests that can reveal the real advantages and disadvantages of Hyper-Threading. Sometimes enabling it produces negative results, though only by very small margins. The sound tests don't help either, even though sound is supposed to be processed by a separate thread.

Now this looks really impressive! Most interestingly, SYSmark emulates the situation Intel considers most favorable for Hyper-Threading: different applications running simultaneously. While executing its script, SYSmark 2002 realistically emulates a user's work by sending applications that have received "long-term" tasks to the background. For example, video is encoded while other applications of the Internet Content Creation suite are running. The office subtest runs antivirus software and speech-to-text conversion with Dragon NaturallySpeaking. But we decided not to rely entirely on tests we hadn't developed ourselves, so we ran several experiments of our own.

First, we rendered a standard test scene in 3ds max while a lengthy archiving job was running. Then, while a long scene was rendering, we ran the standard archiving test in WinAce. We compared the results with the times of the same tests run one after another. We also applied two correction coefficients. The first equalizes the durations of the tasks, because the speedup from running two applications in parallel can only be calculated correctly when the tasks take equal time. The second eliminates the effect of unequal CPU allocation between foreground and background applications. The gain from Hyper-Threading in this test is 17%.
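One common way to express such a gain is to compare the time of the two tasks run one after another with the time needed to finish both when they run together. This is a minimal sketch of that uncorrected arithmetic only. The article doesn't give formulas for its two correction coefficients, so the function deliberately leaves them out. The function name and the example times are hypothetical, not the article's measurements.

```python
def parallel_gain(t_first: float, t_second: float, t_together: float) -> float:
    """Relative gain of running two tasks concurrently versus one after another.

    t_first, t_second: time of each task when run alone, in turn.
    t_together: time to complete both tasks when run simultaneously.
    Returns e.g. 0.17 for a 17% gain. The article's correction coefficients
    (equalizing task durations, foreground/background CPU share) are NOT modeled.
    """
    if t_together <= 0:
        raise ValueError("t_together must be positive")
    return (t_first + t_second) / t_together - 1.0


# Hypothetical times in seconds: two 60 s tasks finish together in 100 s.
print(round(parallel_gain(60, 60, 100), 2))  # 0.2, i.e. a 20% gain
```

A result of zero means that running the tasks together saves nothing compared with running them in turn.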

The test with two real applications running in parallel confirms SYSmark's impressive results. The speedup is certainly not twofold, and we did pick the tests most favorable for Hyper-Threading. But look at it this way: apart from Hyper-Threading support, the processor under test is the good old Pentium 4. The "without Hyper-Threading" column is what we would see if this technology had not been brought to desktop systems. In effect, the new processor gives us one more way to speed up certain operations.

While playing a movie compressed in MPEG4 with the DivX codec, we ran archiving in WinAce. The test would make no sense if frames were dropped or playback hung, but we had no complaints about the quality. While rendering a typical test scene in 3ds max, we played MP3 files with WinAmp, and there were no interruptions in sound playback. Note that in each case there is a main task and a background one. For the estimate we used the archiving time and the full scene rendering time, respectively. The effect of Hyper-Threading is +13% and +8%.

This is a real, typical situation. I must say that Hyper-Threading is not as simple as it may seem. The direct approach (the OS sees two processors, so let's treat them as two) has no considerable effect. But look at it from a different angle: tasks take less time with Hyper-Threading than without it. Something is better than nothing. The point is that we are offered not a cure-all, but a way to speed up the processor core without fundamental changes to it. The gain is far from the 30% promised in the press release, but that always happens.

The new version of CPU RightMark (CPU RM) supports multithreading, and therefore Hyper-Threading. This is the first time CPU RM has been used to test a multiprocessor system, so the test worked both ways. We tested Hyper-Threading, as a particular case of SMP, on a Pentium 4 3.06 GHz based system, and this system in turn tested how correctly our benchmark implements multithreading. Both parties came away satisfied :). CPU RM is not a fully "multiprocessor" test: only the rendering unit creates several threads, while the Math Solving unit remains single-threaded. Even so, the results show that SMP and Hyper-Threading are supported and bring a benefit. By the way, multithreading the Math Solving unit is not a trivial problem, so if you have any ideas, please share them with us. CPU RightMark is an open-source benchmark, so you can suggest improvements to its code.

Before we turn to the diagrams, let me draw your attention to the testing technique. The system was tested in 12 configurations. We varied the following parameters:

Let me explain the last item. It might seem insane to give up SSE2 on a Pentium 4, but here it is a good opportunity to test Hyper-Threading. FPU instructions were used only in the computation module, while the rendering module still had SSE enabled. In this way we made different parts of the benchmark use different execution units of the CPU! If we forcibly disable SSE2, the Math Solving unit of CPU RM leaves the SSE/SSE2 execution units alone, and the rendering unit can use them fully. Now we can turn to the results and check our assumptions. To get more stable results, we also changed one more parameter: the number of frames was raised from the default 300 to 2000.

The Math Solving unit is still single-threaded, so Hyper-Threading has no effect on its performance. But it does it no harm either! We know that virtual multiprocessing can hurt performance in some situations. But look how much the Math Solving results changed after we disabled SSE2!

Perfect! As soon as the rendering unit uses more than one thread, this configuration jumps to one of the top positions. We can also see that systems with Hyper-Threading work best with exactly two actively working threads. That is logical: with two virtual CPUs, two threads is the optimal number. Four threads are too many, because several threads then compete for each virtual CPU. But even in that case Hyper-Threading outscores the uniprocessor system.
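The "one active thread per logical processor" rule observed above can be sketched in Python. `os.cpu_count()` returns the number of logical CPUs the OS sees, so an HT-enabled Pentium 4 counts as two. The `run_frames` helper and the `render_frame` callback are hypothetical and are not CPU RightMark's code. As before, CPython's global interpreter lock means the sketch shows how threads are sized and dispatched, not a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def logical_cpus() -> int:
    """Number of logical processors the OS reports (an HT Pentium 4 counts as 2)."""
    return os.cpu_count() or 1  # os.cpu_count() may return None


def run_frames(render_frame, frames, threads=None):
    """Render frames 0..frames-1, by default with one worker thread per logical CPU.

    render_frame is a hypothetical callback taking a frame number.
    Passing more threads than logical CPUs just makes them time-share the CPUs.
    """
    workers = threads if threads is not None else logical_cpus()
    with ThreadPoolExecutor(max_workers=max(1, workers)) as pool:
        return list(pool.map(render_frame, range(frames)))


print(run_frames(lambda i: i * i, 5))  # [0, 1, 4, 9, 16]
```

Sizing the pool from the logical CPU count matches the result above: two threads for two logical processors. Any extra threads add switching overhead rather than execution resources.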

The experiment with FPU instructions turned out to be a success. You might expect disabling SSE2 to hurt performance terribly. Remember the Math Solving Speed scores with FPU instructions on the first diagram of this section. But now, in the second line, this configuration becomes one of the champions! The causes are clear, and they show that systems with Hyper-Threading behave predictably. On the system with Hyper-Threading enabled, the rendering unit made use of the SSE/SSE2 execution units, which compensated for the poor Math Solving result. As a result, the system takes one of the leading positions. Once again, Hyper-Threading works best when actively working programs (or threads) use different CPU execution units. Here the effect is very strong, which shows that the CPU RM code is very well optimized. But the main conclusion is that Hyper-Threading works, so it will work in other programs as well. And the better the code is optimized, the stronger the effect of Hyper-Threading.

...Intel has released a new Pentium 4 that is faster than the previous Pentium 4, but this is not the limit, and soon we will get an even faster Pentium 4... But, as I said at the beginning, today we are not comparing the performance of the Pentium 4 3.06 GHz with other models because... see above. We are interested only in Hyper-Threading.

So how should we regard it? First of all, remember that Hyper-Threading is not SMP. Symmetric Multi-Processing implies several processors, and we have only one. However, that processor has an extra feature that lets it pretend to be two processors. So does this feature make any sense?

Hyper-Threading does increase the processor's efficiency in certain cases, in particular when applications of different kinds run simultaneously. This is an advantage, but it shows up only in certain situations. The classic market principle says: pay more to get more.

Hyper-Threading can't be called a "paper" technology, because it really does boost performance sometimes. The effect can be even greater than the difference between two platforms with the same processor but different chipsets. But the effect depends on how you use your computer. Note that the classical SMP style is when a user relies on the responsiveness of a classical multiprocessor system.

The Hyper-Threading style is a combination of entertainment or service processes with "working" processes. In most classical multiprocessor tasks you won't get a tangible gain if you run one application at a time. But you will surely shorten the execution time of most tasks running in the background alongside your main one. Intel has actually reminded us that the operating systems we use are multitasking. It has also offered a way to speed up a set of simultaneously executed applications rather than a single one.

This is a very interesting approach, and we are glad to see this idea put into practice.

Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.